In an era of personalized experiences, recommendation systems are extremely valuable for e-commerce applications. Supporting these applications with successful recommendation systems can improve user experience and engagement.

While there are many ways to categorize recommendation systems, they can be distinguished as offline and real-time systems based on how they process events. Offline systems typically use user interaction data to generate recommendations periodically. Real-time systems run inference on recent user data with trained machine learning models.

The ability to provide real-time product recommendations can have numerous benefits compared to recommendation systems that rely on offline (batch) recommendations. These can include:

- Better relevancy & contextual product recommendations: Processing recent user interactions to generate product recommendations for the current user session can increase the relevancy of recommendations.

- Faster changing & adapting recommendations: Reflecting user events in real-time inference allows the system to update personalized recommendation results quickly.

- Fewer cold start issues: A recommendation system that can generate product recommendations from a minimal number of user interactions can help address possible cold start issues.

With such promises, this blog post will focus on the technical aspects of integrating real-time recommendation models into our existing systems. The remainder of the document will focus on a Recommendation API developed in Scala and a User Engagement recommendation pipeline built within the Python machine learning ecosystem.

At Insider, we strive to deliver personalized recommendations with our algorithms and campaign strategies. Existing recommendation algorithms process the user interactions daily and produce batch recommendations. Further personalization based on user interactions is then applied to the candidate recommendation products. To enable this recommendation pipeline,

- We store recommendation product pairs and product ranking scores in Product Catalog Databases,

- Recent user behavior and interactions are fetched from the Unified Customer Database,

- Based on given query parameters, we apply personalization operations with recent user events (such as boosting categories & attributes that the user has more affinity for, excluding products that the user has already viewed or purchased).
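As a rough illustration of the last step, the following minimal sketch boosts candidates by category affinity and drops products the user has already seen. It is not the production logic: the `Candidate` shape, the `categoryAffinity` map, and the multiplicative boost are all assumptions made for this example.

```scala
object PersonalizationSketch {
  // Hypothetical candidate shape; field names are illustrative only.
  final case class Candidate(productId: String, category: String, score: Double)

  /** Drop already-seen products, boost favored categories, and re-rank by score. */
  def personalize(
      candidates: Seq[Candidate],
      categoryAffinity: Map[String, Double], // assumed: affinity in [0, 1] per category
      seenProductIds: Set[String],           // products already viewed or purchased
      boostWeight: Double = 1.0              // assumed tuning knob
  ): Seq[Candidate] =
    candidates
      .filterNot(c => seenProductIds.contains(c.productId))
      .map { c =>
        val affinity = categoryAffinity.getOrElse(c.category, 0.0)
        // Multiplicative boost: categories without affinity keep their batch score.
        c.copy(score = c.score * (1.0 + boostWeight * affinity))
      }
      .sortWith(_.score > _.score)
}
```

A multiplicative boost keeps the batch ranking intact for users with no recent events, since every affinity then defaults to zero.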

While successful recommendation algorithm structures and post-ranking personalization cover many cases where recommendation relevancy and recency are desired, these methods may fall short in delivering product recommendations best suited to the current context and user interaction patterns.

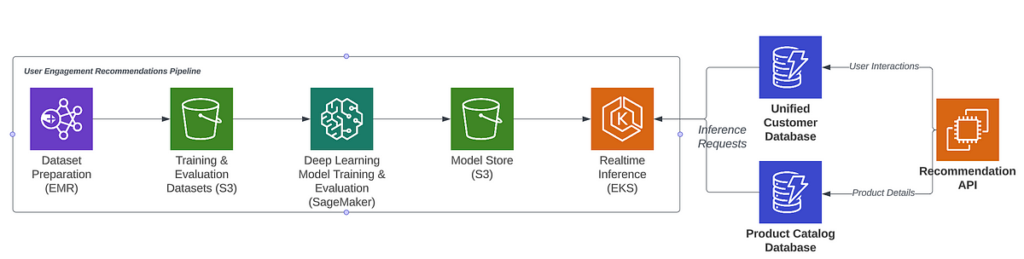

We aimed to fill this gap with our latest project, the User Engagement pipeline. The User Engagement pipeline uses transformer-based models trained with the session data of users. These models are hosted on an API specialized for model serving on Kubernetes. During inference, providing the current session details of users and the correct model identifier is sufficient to generate personalized recommendations based on the current context.

Adapting our API to a real-time recommendation system also brought new MLOps concerns we had to address. We found that the existing model orchestration pipeline covers the majority of the MLOps needs. However, there are details regarding User Engagement models that we handled on the Recommendation API side.

The Recommendation API is the primary component of the Smart Recommender, where recommendation requests are received and addressed. Users receive product recommendations based on parameters and personalization details defined in the campaign creation steps.

In the first iteration of the implementation, we made some MVP decisions that would allow us to ship the real-time model structure to production quickly. These decisions, implementation details, and future improvement areas are shared in the Recommendation API Adaptation section.

Before detailing the real-time inference, some context on the underlying tech stack of User Engagement models may help in understanding the scenario.

User Engagement models are transformer-based models that aim to provide personalized product recommendations based on the current user sessions. We used the Transformers4Rec model structure to develop our model backbone and train models based on user interactions within sessions. We perform model training on AWS SageMaker and use MLflow to wrap trained models. With the help of the in-house model registry project, a specialized Python API serves the wrapped models. Currently, the API is hosted in Kubernetes. The Model Serving API provides endpoints to perform CRUD operations for trained models, as well as an endpoint that performs the inference with given user events and the model GUID.

With the underlying model structure explained, the following diagram displays the required interaction between the User Engagement pipeline and the Recommendation API.

As the diagram suggests, the following is the list of requirements to enable real-time inference in the Recommendation API.

- Accessing the interactions that users performed in their most recent sessions,

- Extracting features from these interactions,

- Selecting the correct trained model based on the incoming request and partner,

- Performing the inference requests and forming product recommendations,

- Evaluating the quality of predicted recommendations.

In the Recommendation API, we use the actor structures of Akka. While Akka actors offer additional benefits, the extra encapsulation of different operations and the concurrent execution of intermediate steps are the most useful for us.

We can now match the implementation below with the diagram that showcases the required flow to serve real-time personalized recommendations.

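```scala
// This is the simplified version of the current
// User Engagement Actor implementation in Recommendation API.
import akka.actor.Actor   // assumed imports for the classic Akka actor API
import akka.pattern.pipe  // provides `pipeTo` for Futures

class UserEngagementActor(
  ... // in the original implementation
  ... // we have some singleton service
  ... // objects that
  ... // we pass as parameters
) extends Actor {
  override def receive: Receive = {
    case params: UserEngagementMessage =>
      // Fetch the recent interactions of the user.
      val userDetails = getUserDetails(params)
      val response =
        for {
          currentProducts <- paramProducts
          // Run inference through the User Engagement service.
          userEngagementProducts <- getUserEngagementInference(userDetails)
          query = createQuery(userDetails, userEngagementProducts)
          // Populate product details for the inferred product IDs.
          recommendationProductList <- fetchRecommendationProducts(params, query)
        } yield {
          // userInteractions is assumed to be derived from userDetails (omitted in this simplified version).
          val inferenceFeatures = getUserEngagementFeatures(userInteractions, currentProducts)
          logRecommendationEvaluationMetric(userDetails, inferenceFeatures, recommendationProductList)
          RecommendationProductResponse(recommendationProductList)
        }
      // Send the eventual result back to the requester.
      response pipeTo sender()
  }
  ...
}
```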

```scala
val userDetails = getUserDetails(params)
```

This line addresses the first requirement to serve User Engagement recommendations. When a User Engagement request is received in the SR (Smart Recommender) API, the recent interactions of the user are fetched from the Unified Customer Database.

```scala
userEngagementProducts <- getUserEngagementInference(userDetails)
```

This is the function that accesses the service class for User Engagement recommendations. It is the part where the API performs all the User Engagement model and prediction-related operations. Payloads for the inference are formed here, and this service handles the inference process with HTTP requests to the Model Serving API. The next subsection covers this service in more detail.

```scala
query = createQuery(userDetails, userEngagementProducts)
recommendationProductList <- fetchRecommendationProducts(params, query)
```

Based on the parameters in the API request and the products we obtain after the inference call, we then populate the required details of these products from Product Catalog Databases. To this end, we perform an Elasticsearch query with the product IDs we gather from the inference result.

```scala
val inferenceFeatures = getUserEngagementFeatures(userInteractions, currentProducts)
logRecommendationEvaluationMetric(userDetails, inferenceFeatures, recommendationProductList)
RecommendationProductResponse(recommendationProductList)
```

The last part of the operations in the actor forms the API response by constructing the objects for that purpose. In addition to this operation, we also perform logging operations here to evaluate the quality of the inference.

As the pseudo-implementation depicts, these operations together enable serving personalized recommendations in real time. Additionally, for the other existing recommendation strategies, our API implements specialized actors that encapsulate the operations needed for different cases.

In this section, I will provide more context regarding the inference phase in the Recommendation API, where User Engagement recommendations are obtained.

With an additional service class, the internal implementation, HTTP request and response operations, and feature/payload creation logic are kept separate from the actor class. The actor calls this service class with the recent user interactions. The response that the actor receives is the ranked list of product IDs recommended by the User Engagement model.

To supply the actor with the inference results, the inference service has the following flow:

- Fetch the correct model GUID: To fetch the correct model for the given request, we use the metadata table for models. In this first iteration of the User Engagement pipeline, we use the most up-to-date models trained for partners during inference.

- Form the inference payload: In the initial implementation, the inference payload in the Recommendation API included all the features used by the models. We calculated or gathered features from the recent user interaction data. However, we realized that the user interaction data and the product databases could be inconsistent with each other for some of the features. Because of this, we moved feature operations into the trained models. With this change, the only information we need to perform inference is the item ID and the timestamp from recent user-product interactions.

User Engagement models are wrapped with MLflow and contain lookup tables for feature values as Parquet files. During inference, the model fetches the corresponding feature values from the lookup tables and forms its own input data. This allows us to use consistent feature values for items.

- Send the inference request and return the response to the actor: We have an additional API in this pipeline, called the Model Serving API, to host the trained User Engagement models. This API allows quick access to trained User Engagement models with optimized cache structures. For each partner that uses User Engagement recommendations, we have their trained models served in the Model Serving API to be accessed by their model GUIDs. Because of this, a meaningful recommendation inference requires access to the correct model and the correct feature set.
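The first two steps of this flow can be sketched as below. This is a minimal sketch under stated assumptions, not the actual service: the `ModelMetadata` and `Interaction` shapes and the JSON field names (`model_guid`, `item_ids`, `timestamps`) are hypothetical, and the HTTP call to the Model Serving API is left out.

```scala
object InferenceServiceSketch {
  // Hypothetical shapes; the real metadata table and payload schema are not shown in this post.
  final case class ModelMetadata(partner: String, modelGuid: String, trainedAtEpochSec: Long)
  final case class Interaction(itemId: String, timestampEpochSec: Long)

  /** Step 1: pick the most recently trained model for the partner, if one exists. */
  def latestModelGuid(rows: Seq[ModelMetadata], partner: String): Option[String] =
    rows
      .filter(_.partner == partner)
      .sortBy(_.trainedAtEpochSec)
      .lastOption
      .map(_.modelGuid)

  /** Step 2: build a JSON payload that carries only item IDs and timestamps, oldest first. */
  def inferencePayload(modelGuid: String, interactions: Seq[Interaction]): String = {
    val ordered = interactions.sortBy(_.timestampEpochSec)
    val itemIds = ordered.map(i => "\"" + escape(i.itemId) + "\"").mkString("[", ",", "]")
    val timestamps = ordered.map(_.timestampEpochSec).mkString("[", ",", "]")
    s"""{"model_guid":"${escape(modelGuid)}","item_ids":$itemIds,"timestamps":$timestamps}"""
  }

  // Minimal escaping for backslashes and double quotes inside JSON strings.
  private def escape(s: String): String =
    s.replace("\\", "\\\\").replace("\"", "\\\"")
}
```

Keeping the payload down to item IDs and timestamps leaves feature lookup entirely to the model, which is what lets the lookup tables stay the single source of feature values.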

The Real-Time User Engagement recommendations pipeline currently serves around 30 partners that use the Smart Recommender. As the feature was developed recently, we expect the number of partners that adopt it to increase steadily. As the number of partners that use the feature increases, the number of trained models we need to serve in real time will also increase. While we do not foresee a problem in the near future, serving an increased number of models will challenge the Model Serving API part of the pipeline in terms of memory allocation and real-time traffic.

Our first implementation iteration also includes an option to monitor the relevancy of recommendations that are served to end users. To do this, we use a custom evaluation metric similar to NDCG. Instead of using NDCG directly, we modified it to evaluate category-matching performance between user interactions and predicted recommendations. We called this metric the Weighted Average Category Matching Score, or WACS.

After each inference operation in the Recommendation API, we calculate the WACS score of the current Recommendation API request. Each score is enriched with request details and logged to S3 with Kinesis Firehose streams. These logs are used to create an Athena table. This way, we can monitor the recommendation relevance for trained models in the system.
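The exact WACS formula is not given in this post, so the following is only a hypothetical sketch of what a position-weighted category-matching score could look like. It assumes a binary gain (1 when a recommended product's category appears among the categories the user interacted with in the session) and borrows the NDCG rank discount of 1 / log2(rank + 1) as the weight; both choices are assumptions.

```scala
object CategoryMatchSketch {
  /** Position-weighted share of recommendations whose category appears in the session.
    * Assumed formula: sum(weight * gain) / sum(weight), with weight = 1 / log2(rank + 1).
    */
  def weightedCategoryMatch(
      sessionCategories: Set[String],     // categories of products the user interacted with
      recommendedCategories: Seq[String]  // categories of recommended products, best rank first
  ): Double =
    if (recommendedCategories.isEmpty) 0.0
    else {
      val weighted = recommendedCategories.zipWithIndex.map { case (category, index) =>
        // index is 0-based, so rank + 1 equals index + 2.
        val weight = 1.0 / (math.log(index + 2.0) / math.log(2.0))
        val gain = if (sessionCategories.contains(category)) 1.0 else 0.0
        (weight * gain, weight)
      }
      weighted.map(_._1).sum / weighted.map(_._2).sum
    }
}
```

Under these assumptions the score lies between 0 and 1, and a category match at the top of the list counts more than one further down.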

Establishing the real-time User Engagement recommendations pipeline was one of the milestones toward a complete real-time recommendation system. There is significant room for improvement in the model lifecycle aspects of the current pipeline. In the near future, we would like to introduce:

- Improved Model Versioning, where we prepare multiple models for a single training task. This way, we can select the best performing model for the inference process,

- Monitoring Model Performance for Retraining, where we closely monitor the already deployed models for performance drops. In such cases, we can dynamically retrain models to adapt to data change and data drift scenarios.
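Since this monitoring is planned rather than built, the sketch below only illustrates one possible retraining trigger: compare the mean of recent relevance scores against a baseline and flag the model when the relative drop exceeds a threshold. The function name, the 10% default threshold, and the use of a simple mean are all assumptions.

```scala
object RetrainingSketch {
  /** Flag a model when its recent mean score drops below the baseline mean
    * by more than `maxRelativeDrop` (assumed default: 10%).
    */
  def needsRetraining(
      baselineScores: Seq[Double], // e.g. relevance scores logged right after deployment
      recentScores: Seq[Double],   // e.g. relevance scores from the latest monitoring window
      maxRelativeDrop: Double = 0.1
  ): Boolean =
    if (baselineScores.isEmpty || recentScores.isEmpty) false // not enough data to decide
    else {
      val baseline = baselineScores.sum / baselineScores.size
      val recent = recentScores.sum / recentScores.size
      baseline > 0.0 && (baseline - recent) / baseline > maxRelativeDrop
    }
}
```

A relative threshold keeps the check comparable across partners whose models settle at different absolute score levels.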

Establishing the real-time inference flow in our Recommendation API allowed us to serve User Engagement recommendations to end users. Below, you can see sample product recommendations for a user after a couple of interactions.

In this sample scenario, the user interacts with cheese and strawberry chocolate gift boxes. The following are the top-ranked User Engagement recommendations after these two interactions. We can observe that the User Engagement pipeline can provide relevant product recommendations in the real-time scenario.

As noted earlier, keeping product recommendations adaptive and relevant in real-time systems is crucial. In an upcoming post, we will delve into further details regarding the underlying transformer models, features, and the actions we take to sustain the high recommendation relevancy of the User Engagement pipeline.

In the meantime, make sure you check the Insider Engineering Medium Page. For readers aiming to learn more about the underlying MLOps in Insider, I can recommend a post that focuses on our in-house Machine Learning Platform: Delphi.