add noise to improve the performance of 2D and 3D object detection. In this article we’ll learn how this mechanism works and discuss its contribution.

Early Vision Transformers

DETR (DEtection TRansformer; Carion, Massa et al., 2020), one of the first transformer architectures for object detection, used learned decoder queries to extract detection information from the image tokens. These queries were randomly initialized, and the architecture did not impose any constraints that forced these queries to learn things that resembled anchors. While it achieved results comparable to Faster-RCNN, its drawback was slow convergence: 500 epochs were required to train it (DN-DETR, Li et al., 2024). Newer DETR-based architectures used deformable aggregation that enabled queries to focus only on certain regions in the image (Zhu et al., Deformable DETR: Deformable Transformers For End-To-End Object Detection, 2020), while others (Liu et al., DAB-DETR: Dynamic Anchor Boxes Are Better Queries For DETR, 2022) used spatial anchors (generated using k-means, in a way similar to how anchor-based CNNs do it) that were encoded into the initial queries. Skip connections forced the decoder block of the transformer to learn boxes as regression values from the anchors. Deformable attention layers used the pre-encoding anchors to sample spatial features from the image and used them to construct tokens for attention. During training the model learns the optimal anchors to use. This approach teaches the model to explicitly use features like box size in its queries.

Prediction To Ground Truth Matching

In order to calculate the loss, the trainer first needs to match the model’s predictions with ground truth (GT) boxes. While anchor-based CNNs have relatively easy solutions to that problem (e.g. each anchor can only be matched with GT boxes in its voxel during training, and, in inference, non-maximum suppression removes overlapping detections), the standard for transformers, set by DETR, is to use a bipartite matching algorithm called the Hungarian algorithm. In each iteration, the algorithm finds the best prediction-to-GT matching (a matching that optimizes some cost function, like the mean squared distance between box corners, summed over all the boxes). The loss is then calculated between pairs of prediction-GT boxes and can be back-propagated. Excess predictions (predictions with no matching GT) incur a separate loss that encourages them to lower their confidence score.
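The matching step described above can be sketched in a few lines. This is a minimal illustration, not DETR's actual implementation: it assumes boxes given as (x1, y1, x2, y2) corners, uses only the mean squared corner distance as the cost (real detectors typically combine several cost terms), and relies on SciPy's `linear_sum_assignment` as the assignment solver. The function name `match_predictions` and the toy boxes are made up for the example.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment


def match_predictions(pred_boxes, gt_boxes):
    """Return (pred_idx, gt_idx) pairs minimizing the summed matching cost.

    Boxes are (x1, y1, x2, y2). The cost is the mean squared distance
    between box corners (an assumed, simplified cost function).
    """
    # cost[i, j] = mean squared corner distance between prediction i and GT j
    diff = pred_boxes[:, None, :] - gt_boxes[None, :, :]
    cost = (diff ** 2).mean(axis=-1)
    # Solves the bipartite assignment; rectangular cost matrices are allowed
    pred_idx, gt_idx = linear_sum_assignment(cost)
    return pred_idx, gt_idx


pred = np.array([
    [48.0, 52.0, 98.0, 101.0],   # close to GT 1
    [0.0, 0.0, 10.0, 10.0],      # not close to any GT
    [11.0, 9.0, 31.0, 29.0],     # close to GT 0
])
gt = np.array([
    [10.0, 10.0, 30.0, 30.0],
    [50.0, 50.0, 100.0, 100.0],
])

pred_idx, gt_idx = match_predictions(pred, gt)
matched = dict(zip(pred_idx.tolist(), gt_idx.tolist()))
# Predictions left without a GT would receive the separate "excess" loss
unmatched = sorted(set(range(len(pred))) - set(matched))
```

Here predictions 0 and 2 are paired with a GT box each, while prediction 1 is left over and would be pushed toward a low confidence score.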

The Problem

The time complexity of the Hungarian algorithm is O(n³). Interestingly, this is not necessarily the bottleneck in training quality: it was shown (The Stable Marriage Problem: An Interdisciplinary Review From The Physicist’s Perspective, Fenoaltea et al., 2021) that the algorithm is unstable, in the sense that a small change in its objective function may lead to a dramatic change in its matching result, which in turn leads to inconsistent query training targets. The practical implication in transformer training is that object queries can jump between objects and take a long time to learn the best features for convergence.
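A toy example makes the instability concrete. The sketch below does not run the Hungarian algorithm itself; it brute-forces the optimal assignment over all permutations, which gives the same optimum for tiny inputs. The cost values are invented for illustration: two queries that are almost equally far from two GT boxes.

```python
from itertools import permutations


def best_matching(cost):
    """Brute-force the optimal one-to-one assignment for a small square cost matrix.

    Returns a tuple where entry i is the GT index assigned to query i.
    """
    n = len(cost)
    return min(
        permutations(range(n)),
        key=lambda perm: sum(cost[i][perm[i]] for i in range(n)),
    )


# Two queries sitting almost equally far from two GT boxes.
cost_before = [[1.00, 1.01],
               [1.01, 1.00]]
# The same costs after a tiny update nudges the diagonal entries by 0.02.
cost_after = [[1.02, 1.01],
              [1.01, 1.02]]

match_before = best_matching(cost_before)  # query 0 -> GT 0, query 1 -> GT 1
match_after = best_matching(cost_after)    # query 0 -> GT 1, query 1 -> GT 0
```

A change of 0.02 in the cost swaps the targets of both queries, so each query is suddenly trained toward a different object than in the previous iteration.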

DN-DETR

An elegant solution to the unstable matching problem was proposed by Li et al. and later adopted by many other works, including DINO, Mask DINO, Group DETR, etc.

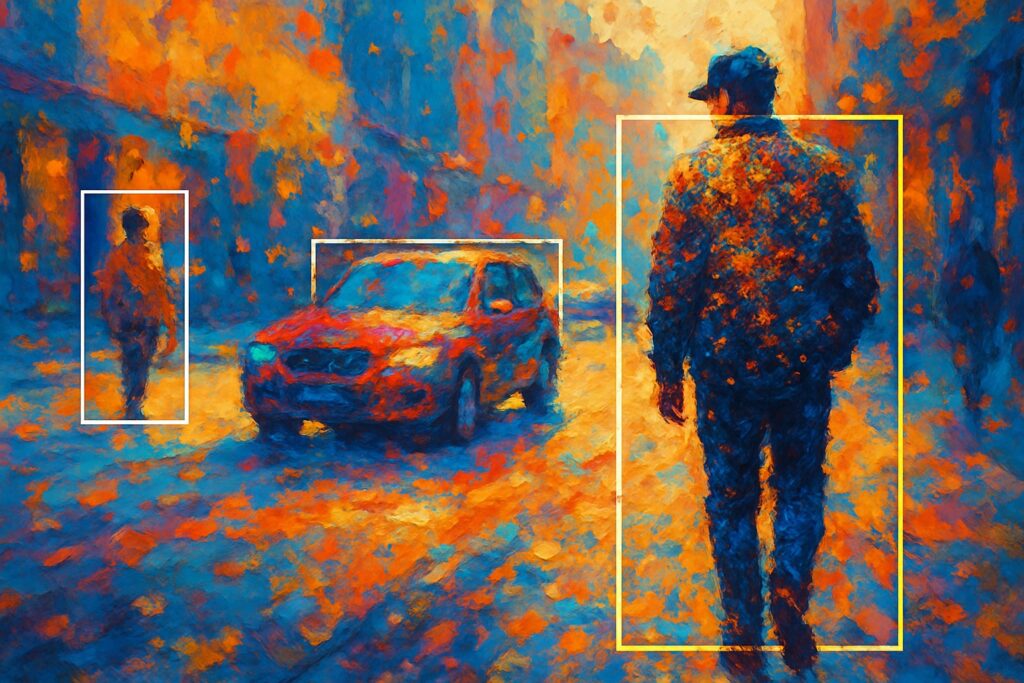

The main idea in DN-DETR is to boost training by creating fictitious, easy-to-regress-from anchors that skip the matching process. This is done during training by adding a small amount of noise to GT boxes and feeding these noised-up boxes as anchors to the decoder queries. The DN queries are masked from the organic queries and vice versa, to avoid attention between the two groups that would interfere with the training. The detections generated by these queries are already matched with their source GT boxes and do not require the bipartite matching. The authors of DN-DETR have shown that in validation stages at epoch ends (where denoising is turned off), this improves the stability of the model compared to DETR and DAB-DETR, in the sense that more queries are consistent in their matching with a GT object in successive epochs. (See Figure 2).
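The two ingredients described above, noised GT anchors and an attention mask between DN and organic queries, can be sketched with NumPy. This is a simplified illustration under stated assumptions, not the DN-DETR code: boxes are assumed to be (cx, cy, w, h), the noise parameters `shift_scale` and `size_scale` and both function names are hypothetical, and the mask blocks both directions as the paragraph describes (actual implementations may mask differently).

```python
import numpy as np


def noise_boxes(gt_boxes, rng, shift_scale=0.4, size_scale=0.4):
    """Jitter (cx, cy, w, h) boxes: shift centers and rescale sizes."""
    cxcy, wh = gt_boxes[:, :2], gt_boxes[:, 2:]
    # Center shift bounded by shift_scale * half the box size
    shift = rng.uniform(-1.0, 1.0, size=cxcy.shape) * shift_scale * wh / 2
    # Width/height scaled by a factor in [1 - size_scale, 1 + size_scale]
    scale = 1.0 + rng.uniform(-1.0, 1.0, size=wh.shape) * size_scale
    return np.concatenate([cxcy + shift, wh * scale], axis=1)


def dn_attention_mask(num_dn, num_matching):
    """Boolean attention mask over queries; True marks a blocked (query, key) pair."""
    total = num_dn + num_matching
    mask = np.zeros((total, total), dtype=bool)
    mask[:num_dn, num_dn:] = True   # DN queries cannot attend to organic queries
    mask[num_dn:, :num_dn] = True   # organic queries cannot attend to DN queries
    return mask


rng = np.random.default_rng(0)
gt = np.array([[0.5, 0.5, 0.2, 0.4],
               [0.3, 0.7, 0.1, 0.1]])
dn_anchors = noise_boxes(gt, rng)
# DN query i is pre-matched to GT i, so no bipartite matching is needed
dn_targets = np.arange(len(gt))
mask = dn_attention_mask(num_dn=len(gt), num_matching=3)
```

Because each noised anchor is derived from a known GT box, its regression target is fixed for the whole training run, which is what removes the matching instability for these queries.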

The authors show that using DN both accelerates convergence and achieves better detection results. (See Figure 3). Their ablation study shows an increase of 1.9% in AP on the COCO detection dataset, compared to the previous SOTA (DAB-DETR, AP 42.2%), when using ResNet-50 as the backbone.

DINO And Contrastive Denoising

DINO took this idea further and added contrastive learning to the denoising mechanism: in addition to the positive example, DINO creates another noised-up version for each GT, which is mathematically constructed to be more distant from the GT than the positive example (see Figure 4). That version is used as a negative example for the training: the model learns to accept the detection closer to the ground truth, and reject the one that is farther away (by learning to predict the class “no object”).

In addition, DINO enables multiple contrastive denoising (CDN) groups (multiple noised-up anchors per GT object), getting more out of each training iteration.
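A rough sketch of how contrastive denoising queries could be built is shown below. It is an illustration under assumptions rather than DINO's implementation: boxes are (cx, cy, w, h), only the centers are noised, the thresholds `lam1` and `lam2`, the function names, and the "no object" label id 91 are all hypothetical choices for this example. The key property it demonstrates is that each negative is noised more strongly than its positive counterpart.

```python
import numpy as np


def cdn_group(gt_boxes, rng, lam1=0.4, lam2=0.8):
    """One contrastive group: a positive and a negative anchor per GT box.

    Positive noise magnitude lies in [0, lam1), negative noise magnitude
    in [lam1, lam2), both relative to half the box size.
    """
    cxcy, wh = gt_boxes[:, :2], gt_boxes[:, 2:]
    sign = rng.choice([-1.0, 1.0], size=cxcy.shape)
    pos_mag = rng.uniform(0.0, lam1, size=cxcy.shape)
    neg_mag = rng.uniform(lam1, lam2, size=cxcy.shape)
    positive = np.concatenate([cxcy + sign * pos_mag * wh / 2, wh], axis=1)
    negative = np.concatenate([cxcy + sign * neg_mag * wh / 2, wh], axis=1)
    return positive, negative


def cdn_queries(gt_boxes, gt_labels, num_groups, no_object_label, rng):
    """Stack several CDN groups; negatives are labeled as 'no object'."""
    anchors, labels = [], []
    for _ in range(num_groups):
        positive, negative = cdn_group(gt_boxes, rng)
        anchors += [positive, negative]
        labels += [gt_labels, np.full_like(gt_labels, no_object_label)]
    return np.concatenate(anchors), np.concatenate(labels)


rng = np.random.default_rng(0)
gt_boxes = np.array([[0.5, 0.5, 0.2, 0.4],
                     [0.3, 0.7, 0.1, 0.1]])
gt_labels = np.array([3, 7])
anchors, labels = cdn_queries(
    gt_boxes, gt_labels, num_groups=2, no_object_label=91, rng=rng
)
```

With two GT boxes and two groups this yields eight denoising queries: four positives that keep their GT class and four negatives that the model should learn to reject.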

DINO’s authors report an AP of 49% (on COCO val2017) when using CDN.

Recent temporal models that need to keep track of objects from frame to frame, like Sparse4Dv3, use CDN and add temporal denoising groups, where some of the successful DN anchors are stored (along with the learned, non-DN anchors) for use in later frames, improving the model’s performance in object tracking.

Discussion

Denoising (DN) seems to improve the convergence speed and final performance of vision transformer detectors. But examining the evolution of the various methods mentioned above raises the following questions:

- DN improves models that use learnable anchors. But are learnable anchors really so important? Would DN also improve models that use non-learnable anchors?

- The main contribution of DN to the training is adding stability to the gradient descent process by bypassing the bipartite matching. But it seems that the bipartite matching is there mainly because the standard in transformer works is to avoid spatial constraints on queries. So, if we manually constrained queries to specific image regions, and gave up the use of bipartite matching (or used a simplified version of bipartite matching that runs on each image patch separately), would DN still improve results?

I couldn’t find works that provided clear answers to these questions. My hypothesis is that a model that uses non-learnable anchors (provided that the anchors are not too sparse) and spatially constrained queries (1) would not require a bipartite matching algorithm, and (2) would not benefit from DN in training, as the anchors are already known and there is no profit in learning to regress from other evanescent anchors.

If the anchors are fixed but sparse, then I can see how using evanescent anchors that are easier to regress from can provide a warm start to the training process.

Anchor-DETR (Wang et al., 2021) compares the spatial distribution of learnable and non-learnable anchors, and the performance of the respective models, and in my opinion, the learnability does not add that much value to the model’s performance. Notably, they use the Hungarian algorithm in both methods, so it is unclear whether they could give up the bipartite matching and retain the performance.

One consideration is that there may be production reasons to avoid NMS in inference, which favors using the Hungarian algorithm in training.

Where can denoising really be important? In my opinion, in tracking. In tracking, the model is fed a video stream and is required not only to detect multiple objects across successive frames, but also to preserve the unique identity of each detected object. Temporal transformer models, i.e. models that utilize the sequential nature of the video stream, do not process individual frames independently. Instead, they maintain a bank that stores previous detections. During training, the tracking model is encouraged to regress from an object’s previous detection (or more precisely, the anchor that is attached to the object’s previous detection), rather than regressing from merely the nearest anchor. And since the previous detection is not constrained to some fixed anchor grid, it is plausible that the flexibility that DN induces is beneficial. I would very much like to read future works that attend to these issues.

That’s it for denoising and its contribution to vision transformers! If you liked my article, you’re welcome to visit some of my other articles on deep learning, machine learning and computer vision!