This article is a continuation of topic modeling open-source intelligence (OSINT) from the OpenAlex API. In a previous article, I gave an introduction to topic modeling, the data used, and a traditional NLP approach using Latent Dirichlet Allocation (LDA).

See the previous article here:

This article takes a more advanced approach to topic modeling by leveraging representation models, generative AI, and other advanced techniques. We use BERTopic to bring several models together into one pipeline, visualize our topics, and explore variations of topic models.

The BERTopic Pipeline

Using a traditional approach to topic modeling can be difficult, since you need to build your own pipeline to clean your data, tokenize, lemmatize, create features, etc. Traditional models like LDA or LSA can also be computationally expensive and often yield poor results.

BERTopic leverages the transformer architecture through embedding models, and incorporates other components like dimensionality reduction and topic representation models, to create high-performing topic models. BERTopic also provides variations of models to fit a variety of data and use cases, visualizations to explore results, and more.

The biggest advantage of BERTopic is its modularity. As seen above, the pipeline is composed of several different models:

- Embedding model

- Dimensionality reduction model

- Clustering model

- Tokenizer

- Weighting scheme

- Representation model (optional)

Therefore, we can experiment with different models in each component, each with its own parameters. For example, we can try different embedding models, switch the dimensionality reduction from PCA to UMAP, or fine-tune the parameters of our clustering model. This is a huge advantage that lets us fit a topic model to our data and use case.
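This swapping works because BERTopic's documentation says the `umap_model` slot accepts any dimensionality reduction object that has `.fit` and `.transform` methods. The NumPy-only sketch below is a hypothetical PCA class that follows that interface. `PCAReducer` is an illustrative name, not a BERTopic or scikit-learn class. In practice you would more likely pass scikit-learn's `PCA` directly.

```python
import numpy as np


class PCAReducer:
    """Hypothetical minimal PCA exposing the fit/transform interface BERTopic expects."""

    def __init__(self, n_components=5):
        self.n_components = n_components

    def fit(self, X, y=None):
        X = np.asarray(X, dtype=float)
        # PCA directions are computed on mean-centered data
        self.mean_ = X.mean(axis=0)
        # Rows of vt are the principal directions, ordered by singular value
        _, _, vt = np.linalg.svd(X - self.mean_, full_matrices=False)
        self.components_ = vt[: self.n_components]
        return self

    def transform(self, X):
        # Project centered data onto the retained principal directions
        return (np.asarray(X, dtype=float) - self.mean_) @ self.components_.T

    def fit_transform(self, X, y=None):
        return self.fit(X).transform(X)


# Random stand-in "embeddings" with 384 dimensions, like gte-small produces
rng = np.random.default_rng(0)
fake_embeddings = rng.normal(size=(100, 384))
reduced = PCAReducer(n_components=5).fit_transform(fake_embeddings)
print(reduced.shape)  # (100, 5)
```

If BERTopic is installed, the swap should then be a one-argument change such as `BERTopic(umap_model=PCAReducer(n_components=5))`. Treat this as a sketch of the interface, not a tuned replacement for UMAP.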

First, we need to import the necessary modules. Most of these are used to build the components of our BERTopic model.

```python
# import packages for data management
import pickle

# import packages for topic modeling
from bertopic import BERTopic
from bertopic.representation import KeyBERTInspired
from bertopic.vectorizers import ClassTfidfTransformer
from sentence_transformers import SentenceTransformer
from umap.umap_ import UMAP
from hdbscan import HDBSCAN
from sklearn.feature_extraction.text import CountVectorizer

# import packages for data manipulation and visualization
import pandas as pd
import matplotlib.pyplot as plt
from scipy.cluster import hierarchy as sch
```

Embedding Model

The main component of the BERTopic model is the embedding model. First, we initialize it with the SentenceTransformer class, specifying the embedding model we want to use.

In this case, I'm using a relatively small model (~30 million parameters). We could probably get better results with a larger embedding model, but I chose a smaller one to emphasize speed in this pipeline. You can find and compare embedding models by size, performance, intended use, etc. on the MTEB leaderboard from Hugging Face (https://huggingface.co/spaces/mteb/leaderboard).

```python
# initialize embedding model
embedding_model = SentenceTransformer('thenlper/gte-small')

# calculate embeddings
embeddings = embedding_model.encode(data['all_text'].tolist(), show_progress_bar=True)
```

Once we run our model, we can use the .shape attribute to see the size of the vectors produced. Below, we can see that each embedding contains 384 values, which together represent the meaning of each document.

```python
# investigate shape and size of vectors
embeddings.shape
# output: (6102, 384)
```

Dimensionality Reduction Model

The next component of the BERTopic model is the dimensionality reduction model. High-dimensional data can be difficult to model, so we use a dimensionality reduction model to represent the embeddings in a lower-dimensional space without losing too much information.

There are several types of dimensionality reduction models, with Principal Component Analysis (PCA) being one of the most popular. In this case, we will use a Uniform Manifold Approximation and Projection (UMAP) model. UMAP is a non-linear model and is likely to handle the complex relationships in our data better than PCA.

```python
# initialize dimensionality reduction model and reduce embeddings
umap_model = UMAP(n_neighbors=5, min_dist=0.0, metric='cosine', random_state=42)
reduced_embeddings = umap_model.fit_transform(embeddings)
```

It is important to note that dimensionality reduction is not a cure-all for high-dimensional data. It presents a tradeoff between speed and accuracy, because information is lost. These models should be chosen carefully and experimented with, to avoid losing too much information while maintaining speed and scalability.
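To make this tradeoff concrete, one common yardstick for linear methods like PCA is the share of total variance kept by the first k components. The NumPy sketch below computes it on synthetic data. `variance_retained` is a hypothetical helper. UMAP has no equivalent single number, so this only illustrates the general idea of information loss.

```python
import numpy as np


def variance_retained(X, k):
    """Fraction of total variance captured by the top-k principal components."""
    X = np.asarray(X, dtype=float)
    X = X - X.mean(axis=0)
    # Squared singular values are proportional to the variance along each component
    s = np.linalg.svd(X, compute_uv=False)
    var = s ** 2
    return var[:k].sum() / var.sum()


rng = np.random.default_rng(42)
# Synthetic data: 10 informative directions plus small noise, embedded in 384 dimensions
latent = rng.normal(size=(500, 10))
mixing = rng.normal(size=(10, 384))
X = latent @ mixing + 0.1 * rng.normal(size=(500, 384))

for k in (2, 5, 10, 50):
    print(k, round(variance_retained(X, k), 3))
```

On this synthetic data, keeping 10 components retains almost all the variance and keeping 2 loses a lot. Real embeddings are rarely that clean, which is why the number of dimensions is worth experimenting with.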

Clustering Model

The third step is to use the reduced embeddings to create clusters. Traditional topic models like LDA do not include a clustering step. Here, density-based clustering models let us isolate outliers and eliminate noise in our data, and in BERTopic each resulting cluster becomes a topic. Below, we initialize the Hierarchical Density-Based Spatial Clustering of Applications with Noise (HDBSCAN) model and create our clusters.

```python
# initialize clustering model and cluster
hdbscan_model = HDBSCAN(min_cluster_size=30, metric='euclidean', cluster_selection_method='eom').fit(reduced_embeddings)
clusters = hdbscan_model.labels_
```

A density-based approach gives us a few advantages. Documents aren't forced into clusters they don't belong to, which isolates outliers and reduces noise in our data. Also, unlike centroid-based models, we don't specify the number of clusters, and the clusters are more likely to be well-defined.
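HDBSCAN is too involved to reproduce here. Its simpler relative DBSCAN shows the same two properties: the number of clusters is never specified, and points in sparse regions get the label -1, the outlier convention used in the plotting code below. This is a swapped-in technique for illustration only, written as a small NumPy sketch. The `eps` and `min_samples` values are arbitrary assumptions.

```python
import numpy as np


def dbscan(X, eps=0.5, min_samples=4):
    """Minimal DBSCAN: returns a cluster label per point, with -1 marking noise."""
    X = np.asarray(X, dtype=float)
    n = len(X)
    # Pairwise Euclidean distances and each point's eps-neighborhood (includes itself)
    dists = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=-1)
    neighbors = [np.flatnonzero(dists[i] <= eps) for i in range(n)]
    is_core = np.array([len(nb) >= min_samples for nb in neighbors])

    labels = np.full(n, -1)
    cluster_id = 0
    for i in range(n):
        if labels[i] != -1 or not is_core[i]:
            continue
        # Grow a new cluster from an unlabeled core point
        labels[i] = cluster_id
        queue = list(neighbors[i])
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster_id
                # Only core points expand the cluster further
                if is_core[j]:
                    queue.extend(neighbors[j])
        cluster_id += 1
    return labels


rng = np.random.default_rng(0)
blob_a = rng.normal(loc=(0, 0), scale=0.1, size=(20, 2))
blob_b = rng.normal(loc=(5, 5), scale=0.1, size=(20, 2))
outlier = np.array([[2.5, 10.0]])
points = np.vstack([blob_a, blob_b, outlier])
labels = dbscan(points, eps=0.5, min_samples=4)
print(sorted(set(labels.tolist())))  # [-1, 0, 1]
```

Two clusters emerge without being requested, and the isolated point stays at -1 instead of being forced into a cluster.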

See my guide to clustering algorithms:

See the code below to visualize the results of the clustering model.

```python
# create dataframe of reduced embeddings and clusters
df = pd.DataFrame(reduced_embeddings, columns=['x', 'y'])
df['Cluster'] = [str(c) for c in clusters]

# split between clusters and outliers (HDBSCAN labels outliers as -1)
to_plot = df.loc[df.Cluster != '-1', :]
outliers = df.loc[df.Cluster == '-1', :]

# plot outliers in gray, then clusters colored by label
plt.scatter(outliers.x, outliers.y, alpha=0.05, s=2, c='gray')
plt.scatter(to_plot.x, to_plot.y, alpha=0.6, s=2, c=to_plot.Cluster.astype(int), cmap='tab20b')
plt.axis('off')
```

We can see well-defined clusters that don't overlap. We can also see some smaller clusters grouping together to make up higher-level topics. Finally, we can see that several documents are grayed out and identified as outliers.

Creating a BERTopic Pipeline

We now have the necessary components to build our BERTopic pipeline (embedding model, dimensionality reduction model, clustering model). We can pass the models we have initialized to the BERTopic class and fit them to our data.

```python
# use the models above to build the BERTopic pipeline
topic_model = BERTopic(
    embedding_model=embedding_model,  # Step 1 - Extract embeddings
    umap_model=umap_model,            # Step 2 - Reduce dimensionality
    hdbscan_model=hdbscan_model,      # Step 3 - Cluster reduced embeddings
    verbose=True
).fit(data['all_text'].tolist(), embeddings)
```

Since I know I ingested papers about human-machine interfaces (augmented reality, virtual reality), let's see which topics align with the term "augmented reality".

```python
# topics most similar to 'augmented reality'
topic_model.find_topics("augmented reality")
# output: ([18, 3, 16, 24, 12], [0.9532771, 0.9498462, 0.94966936, 0.9451431, 0.9417263])
```

From the output above, we can see that topics 18, 3, 16, 24, and 12 align closely with the term "augmented reality". All of these topics should (hopefully) contribute to the broader theme of augmented reality, but each covers a different aspect.
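As I understand BERTopic's implementation, `find_topics` embeds the search term with the same embedding model. It then ranks topics by cosine similarity between that vector and each topic's embedding, which is where the scores above come from. The sketch below reproduces only that ranking step, using NumPy on made-up 3-dimensional vectors. `top_similar_topics` is a hypothetical helper, not BERTopic's code.

```python
import numpy as np


def top_similar_topics(query_vec, topic_embeddings, top_n=5):
    """Rank topics by cosine similarity to a query embedding (hypothetical helper)."""
    q = np.asarray(query_vec, dtype=float)
    T = np.asarray(topic_embeddings, dtype=float)
    # Cosine similarity = dot product of L2-normalized vectors
    sims = (T / np.linalg.norm(T, axis=1, keepdims=True)) @ (q / np.linalg.norm(q))
    order = np.argsort(sims)[::-1][:top_n]
    return order.tolist(), sims[order].tolist()


# Toy topic embeddings (one row per topic) and a query vector
topics = np.array([
    [1.0, 0.0, 0.0],
    [0.9, 0.1, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
])
ids, scores = top_similar_topics([1.0, 0.0, 0.0], topics, top_n=2)
print(ids)  # [0, 1]
```

The output has the same shape as `find_topics`: a list of topic IDs and a parallel list of similarity scores, sorted from most to least similar.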

To confirm this, let's examine the topic representations. A topic representation is a list of words meant to capture the underlying theme of the topic. For example, the words "cake", "candles", "family", and "presents" might together represent the topic of birthdays or birthday parties.

We can use the get_topic() function to investigate the representation of topic 18.

```python
# examine topic 18
topic_model.get_topic(18)
```

In the above representation, we see some useful words like "reality", "virtual", "augmented", etc. However, the representation is not useful as a whole, because it includes stop words like "and" and "the". This happens because, by default, BERTopic builds topic representations from a bag of words that does not remove stop words. As a result, this representation could just as easily describe other topics about augmented reality.

Next, we will improve our BERTopic pipeline to create more meaningful topic representations that give us more insight into these themes.

Improving Topic Representations

We can improve the topic representations by adding a weighting scheme, which highlights the most important words and better differentiates our topics.

This doesn't replace the bag-of-words model but improves upon it. Below, we pass a CountVectorizer that removes English stop words, along with a class-based TF-IDF (c-TF-IDF) model that better determines the importance of each term. We use the update_topics() function to update our pipeline.
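The class-based TF-IDF (c-TF-IDF) weighting treats all documents in a topic as one concatenated "class" document. BERTopic's documentation gives the weight of term t in class c as W(t,c) = tf(t,c) · log(1 + A / f(t)). Here tf(t,c) is the term's frequency in the class, f(t) is its frequency across all classes, and A is the average number of words per class.

The NumPy sketch below applies that formula to a made-up count matrix. BERTopic's ClassTfidfTransformer also offers options, such as `bm25_weighting`, that are not shown here.

```python
import numpy as np

# Toy term counts: rows are topics (classes), columns are terms
terms = ["the", "reality", "augmented", "crispr", "gene"]
counts = np.array([
    [10, 6, 5, 0, 0],   # topic 0: augmented reality papers
    [12, 0, 0, 7, 4],   # topic 1: CRISPR papers
], dtype=float)


def c_tf_idf(counts):
    # tf(t, c): L1-normalize each class row so long topics don't dominate
    tf = counts / counts.sum(axis=1, keepdims=True)
    # f(t): frequency of each term across all classes
    f = counts.sum(axis=0)
    # A: average number of words per class
    A = counts.sum(axis=1).mean()
    return tf * np.log(1 + A / f)


weights = c_tf_idf(counts)
for row in weights:
    print([terms[j] for j in np.argsort(row)[::-1][:3]])
```

"the" has the highest raw count in both topics but drops below the topic-specific terms after weighting. It still appears in each top three, which is why removing stop words in the vectorizer matters as well.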

```python
# initialize tokenizer model (removes English stop words)
vectorizer_model = CountVectorizer(stop_words="english")

# initialize c-TF-IDF model to weight terms
ctfidf_model = ClassTfidfTransformer()

# add tokenizer and c-TF-IDF model to pipeline
topic_model.update_topics(data['all_text'].tolist(), vectorizer_model=vectorizer_model, ctfidf_model=ctfidf_model)

# examine how topic representations have changed
topic_model.get_topic(18)
```

With these changes, the topic representations are much more useful. The meaningless stop words are gone (removed by the vectorizer's English stop-word list), other words that help describe the topic appear, and the words are reordered by their c-TF-IDF importance.

But we don't need to stop here. Thanks to many recent developments in AI and NLP, there are methods we can use to fine-tune these representations.

To fine-tune, we can take one of two approaches:

- A representation model

- A generative model

Fine-Tuning with a Representation Model

First, let's add the KeyBERTInspired model as our representation model. It uses embeddings, in the spirit of KeyBERT, to compare the semantic similarity between the c-TF-IDF keywords and the documents themselves. This scores each term by its relevance rather than its importance.
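Conceptually, this kind of re-ranking embeds each candidate keyword and a topic-level vector, for example the mean embedding of a topic's representative documents. It then sorts the keywords by cosine similarity to that vector. The sketch below shows only that idea, with made-up 3-dimensional vectors in place of real sentence-transformer embeddings. `rerank_keywords` is a hypothetical helper, and BERTopic's KeyBERTInspired includes further steps (such as selecting representative documents) that are not reproduced here.

```python
import numpy as np


def rerank_keywords(keyword_vecs, doc_vecs):
    """Score candidate keywords by cosine similarity to the mean document embedding."""
    topic_vec = np.mean(doc_vecs, axis=0)
    topic_vec = topic_vec / np.linalg.norm(topic_vec)
    scores = {}
    for word, vec in keyword_vecs.items():
        vec = np.asarray(vec, dtype=float)
        scores[word] = float(vec @ topic_vec / np.linalg.norm(vec))
    # Highest semantic similarity first
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


# Made-up embeddings for two documents and three candidate keywords
docs = np.array([[0.9, 0.1, 0.0], [0.8, 0.2, 0.1]])
keywords = {
    "augmented": [0.85, 0.15, 0.05],
    "headset": [0.6, 0.5, 0.1],
    "the": [0.1, 0.1, 0.9],
}
for word, score in rerank_keywords(keywords, docs):
    print(f"{word}: {score:.3f}")
```

Because these scores are cosine similarities, they lie between -1 and 1 and, for related text, typically fall between 0 and 1. That matches the change in score scale described in the next paragraph.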

See all representation model options here: https://maartengr.github.io/BERTopic/getting_started/representation/representation.html#keybertinspired

```python
# initialize representation model and add to pipeline
representation_model = KeyBERTInspired()
topic_model.update_topics(data['all_text'].tolist(), vectorizer_model=vectorizer_model, ctfidf_model=ctfidf_model, representation_model=representation_model)
```

Here, we see a fairly major change in the words, with some additional words and acronyms. Compared with the c-TF-IDF representation, this gives us an even better understanding of what the topic is about. Also notice that the scores have changed from c-TF-IDF weights, which had no meaning without context, to scores between 0 and 1. These new scores represent semantic similarity.

Topic Model Visualizations

Before we move to generative models for fine-tuning, let's explore some of the visualizations that BERTopic offers. Visualizing topic models is crucial for understanding your data and how the model is working.

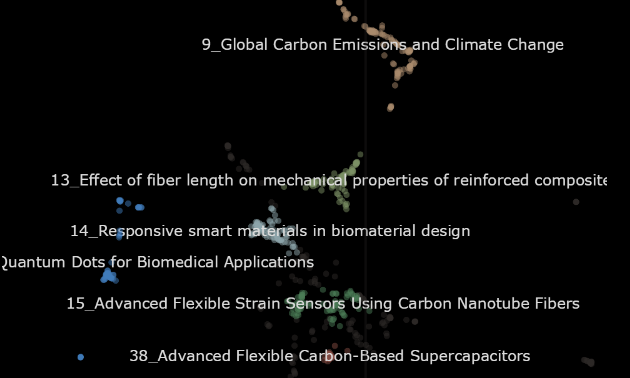

First, we can visualize our topics in a 2-dimensional space, which shows the size of each topic and which topics are similar. Below, we can see many topics, with clusters of topics making up larger themes. We can also see a topic that is large and isolated, indicating that there is a lot of similar research on CRISPR.

Let's zoom into these clusters of topics to see how they break down higher-level themes. Below, we zoom into topics about augmented and virtual reality and see how some topics cover different domains and applications.

We can also quickly visualize the most important or most relevant words in each topic. Again, this depends on your approach to the topic representations.

We can also use a heatmap to explore the similarity between topics.
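Underneath, a topic similarity heatmap is essentially a matrix of pairwise cosine similarities between topic embeddings. The NumPy sketch below builds such a matrix, using random vectors as hypothetical stand-ins for a fitted model's topic embeddings. With BERTopic installed, `topic_model.visualize_heatmap()` draws the real version.

```python
import numpy as np


def cosine_similarity_matrix(E):
    """Pairwise cosine similarity between the rows of E."""
    E = np.asarray(E, dtype=float)
    normed = E / np.linalg.norm(E, axis=1, keepdims=True)
    return normed @ normed.T


rng = np.random.default_rng(7)
topic_embeddings = rng.normal(size=(6, 384))  # 6 stand-in topics
sim = cosine_similarity_matrix(topic_embeddings)
print(np.round(sim, 2))
```

The diagonal is always 1 (each topic compared with itself), and the matrix is symmetric. The off-diagonal cells are what the heatmap colors.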

These are just a few of the visualizations that BERTopic offers. See the full list here: https://maartengr.github.io/BERTopic/getting_started/visualization/visualization.html

Leveraging Generative Models

For our final step of fine-tuning the topic representations, we can use generative AI to produce representations that are coherent descriptions of each topic.

BERTopic offers an easy way to use OpenAI's GPT models with the topic model. We first write a prompt that shows the model the data and the current representation of each topic. We then ask it to generate a short label for each topic.
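The `[DOCUMENTS]` and `[KEYWORDS]` tags in the prompt are placeholders that BERTopic's OpenAI representation model fills in for each topic before sending the request. The plain-Python sketch below shows that substitution step and one way a reply in the requested "topic: ..." format might be parsed. `fill_prompt`, `parse_label`, the bullet formatting, and the example documents are illustrative assumptions, not BERTopic's exact behavior.

```python
def fill_prompt(template, documents, keywords):
    """Substitute the [DOCUMENTS] and [KEYWORDS] placeholders (hypothetical helper)."""
    doc_block = "\n".join(f"- {doc}" for doc in documents)
    return template.replace("[DOCUMENTS]", doc_block).replace("[KEYWORDS]", ", ".join(keywords))


def parse_label(reply):
    """Extract the label from a reply formatted as 'topic: <label>' (hypothetical helper)."""
    reply = reply.strip()
    prefix = "topic:"
    if reply.lower().startswith(prefix):
        reply = reply[len(prefix):]
    return reply.strip()


template = (
    "I have a topic that contains the following documents:\n[DOCUMENTS]\n"
    "The topic is described by the following keywords: [KEYWORDS]\n"
    "Based on the information above, extract a short topic label in the following format:\ntopic:"
)
filled = fill_prompt(
    template,
    ["A user study of AR headsets", "VR versus AR for data exploration"],
    ["reality", "augmented", "virtual"],
)
print(filled)
print(parse_label("topic: Immersive analytics with AR and VR"))
```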

We then initialize the client and model, and update our pipeline.

```python
import openai
from bertopic.representation import OpenAI

# prompt for GPT to create topic labels
prompt = """
I have a topic that contains the following documents:
[DOCUMENTS]

The topic is described by the following keywords: [KEYWORDS]

Based on the information above, extract a short topic label in the following format:
topic:
"""

# create the OpenAI client
client = openai.OpenAI(api_key='API KEY')

# add GPT as representation model
representation_model = OpenAI(client, model='gpt-3.5-turbo', exponential_backoff=True, chat=True, prompt=prompt)
topic_model.update_topics(data['all_text'].tolist(), representation_model=representation_model)
```

Now, let's return to the augmented reality topic.

```python
# examine how topic representations have changed
topic_model.get_topic(18)
# output: [('Comparative analysis of virtual and augmented reality for immersive analytics', 1)]
```

The topic representation now reads "Comparative analysis of virtual and augmented reality for immersive analytics". The topic is now much clearer: we can see the goals, technologies, and domain covered by these documents.

Below is the full list of our new topic representations.

It doesn't take much code to see how powerful generative AI is in supporting our topic model and its representations. Of course, it is extremely important to dig deeper and validate these outputs as you build your model, and to experiment extensively with different models, parameters, and approaches.

Leveraging Topic Model Variations

Finally, BERTopic provides several variations of topic models to suit different data and use cases. These include time-series (topics over time), hierarchical, supervised, semi-supervised, and many more.

See the full list and documentation here: https://maartengr.github.io/BERTopic/getting_started/topicsovertime/topicsovertime.html

Let's quickly explore one of these options with hierarchical topic modeling. Below, we create a linkage function using scipy's single linkage, which determines how topics are merged based on the distances between them. We can then fit it to our data and visualize the hierarchy of topics.
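Single linkage merges, at each step, the two groups whose closest members are nearest to each other. As I understand it, BERTopic applies the linkage function by default to distances between the topics' c-TF-IDF representations. The pure-Python sketch below performs naive single-linkage merging on a hypothetical 4-topic distance matrix and records the merge order, which is what the dendrogram draws. scipy's `sch.linkage` does the same job far more efficiently.

```python
import numpy as np


def single_linkage_merges(D):
    """Naive single-linkage clustering; returns (group_a, group_b, distance) per merge."""
    D = np.asarray(D, dtype=float)
    groups = [frozenset([i]) for i in range(len(D))]
    merges = []
    while len(groups) > 1:
        best = None
        for a in range(len(groups)):
            for b in range(a + 1, len(groups)):
                # Single linkage: distance between the closest pair of members
                d = min(D[i, j] for i in groups[a] for j in groups[b])
                if best is None or d < best[2]:
                    best = (a, b, d)
        a, b, d = best
        merges.append((sorted(groups[a]), sorted(groups[b]), d))
        merged = groups[a] | groups[b]
        groups = [g for k, g in enumerate(groups) if k not in (a, b)] + [merged]
    return merges


# Hypothetical distances between four topics (symmetric, zero diagonal)
D = np.array([
    [0.0, 0.2, 0.9, 0.8],
    [0.2, 0.0, 0.7, 0.85],
    [0.9, 0.7, 0.0, 0.3],
    [0.8, 0.85, 0.3, 0.0],
])
for left, right, dist in single_linkage_merges(D):
    print(left, right, dist)
```

Cutting this tree at a distance of 0.5 would leave two broader topics, {0, 1} and {2, 3}. That is the zoom-in/zoom-out behavior described below.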

```python
# create linkages between topics
linkage_function = lambda x: sch.linkage(x, 'single', optimal_ordering=True)
hierarchical_topics = topic_model.hierarchical_topics(data['all_text'], linkage_function=linkage_function)

# visualize topic model hierarchy
topic_model.visualize_hierarchy(hierarchical_topics=hierarchical_topics)
```

In the visualization above, we can see how topics merge to form broader and broader topics. For example, topics 25 and 30 come together to form "Smart Cities and Sustainable Development". This gives us the ability to zoom in and out and decide how broad or narrow we want our topics to be.

Conclusion

In this article, we saw the power of BERTopic for topic modeling. BERTopic's use of transformers and embedding models can dramatically improve results over traditional approaches. The BERTopic pipeline also offers both power and modularity: it combines several models and lets you plug in others to fit your data. All of these models can be fine-tuned and combined to create a powerful topic model.

You can also integrate representation and generative models to improve topic representations and interpretability. BERTopic also offers several visualizations to explore your data and validate your model. Finally, BERTopic offers several variations of topic modeling, like time-series or hierarchical topic modeling, to better fit your use case.

I hope you have enjoyed my article! Please feel free to comment, ask questions, or request other topics.

Connect with me on LinkedIn: https://www.linkedin.com/in/alexdavis2020/