We began Day 6 in the Medical AI cohort with a lecture introducing Natural Language Processing (NLP), a field focused on teaching computers to understand and work with human language. Although our group is centered on medical imaging, it was fascinating to see how many of the same ideas apply. Whether it’s analyzing text or chest X-rays, the challenge is turning complex, human-generated information into something AI models can reason with.

The lecture covered how models process language, from tokenizing words to turning them into vectors, and how techniques like recurrent neural networks and transformers help capture meaning and context. We also talked about word embeddings and next-token prediction, both of which are fundamental to how large language models work; embeddings are also central to vision-language models like BioMedCLIP. It was a helpful foundation for understanding how our own project connects images and text for medical diagnostics.
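To make the first two steps concrete, here is a minimal sketch of tokenizing a sentence and looking up a vector for each token. It is a toy illustration, not the code from the lecture: the whitespace tokenizer, the helper names, and the random 4-dimensional embeddings are all assumptions (real models use subword tokenizers and learned embeddings).

```python
import random

def tokenize(text):
    """Lowercase and split on whitespace (a stand-in for a real subword tokenizer)."""
    return text.lower().split()

def build_vocab(tokens):
    """Give each distinct token an integer id, in order of first appearance."""
    vocab = {}
    for tok in tokens:
        vocab.setdefault(tok, len(vocab))
    return vocab

def make_embeddings(vocab_size, dim, seed=0):
    """Create one random vector per vocabulary entry (learned in a real model)."""
    rng = random.Random(seed)
    return [[rng.uniform(-1.0, 1.0) for _ in range(dim)] for _ in range(vocab_size)]

sentence = "the lungs appear clear and the heart appears normal"
tokens = tokenize(sentence)
vocab = build_vocab(tokens)
ids = [vocab[tok] for tok in tokens]          # text -> integer ids
embeddings = make_embeddings(len(vocab), dim=4)
vectors = [embeddings[i] for i in ids]        # ids -> vectors the model can use

print(ids)
```

Both occurrences of "the" map to the same id and therefore the same vector; it is the later layers (RNNs or transformers) that add context on top of these lookups.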

Inside the Project

After the lecture, the mentors walked us through the project code and gave us a detailed overview of what to expect over the next week. Our task is to build AI models for zero-shot classification on the ChestMNIST and PneumoniaMNIST datasets. That means the models must predict disease categories without being explicitly trained on those specific tasks. We’ll be working with models like BioMedCLIP and SmolVLM, experimenting with prompt engineering to improve their accuracy. The project definitely feels ambitious, but having seen the structure and resources laid out, it feels manageable as long as we work together.
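One common way to do zero-shot classification with a CLIP-style model is to embed the image and a text prompt for each label into the same vector space, then pick the label whose prompt is most similar to the image. The sketch below shows only that final comparison step. It is not our project code: the function names are hypothetical, and the vectors are made-up numbers standing in for real encoder outputs.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    return dot / (norm_a * norm_b)

def zero_shot_classify(image_vec, prompt_vecs):
    """Return the label whose prompt embedding is closest to the image embedding."""
    scores = {label: cosine(image_vec, vec) for label, vec in prompt_vecs.items()}
    best = max(scores, key=scores.get)
    return best, scores

# Made-up embeddings; in practice these would come from the image and text encoders.
image_vec = [0.9, 0.1, 0.2]
prompt_vecs = {
    "normal": [0.1, 0.9, 0.1],
    "pneumonia": [0.8, 0.2, 0.3],
}

label, scores = zero_shot_classify(image_vec, prompt_vecs)
print(label)
```

No training on the target labels happens here: changing the set of classes only means changing the prompts that get embedded.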

We also learned more about prompt engineering: the idea that carefully designing the language prompts we feed into the models can significantly affect performance. It’s an area where medical knowledge, language, and AI all come together, and there’s a lot of room to be creative with how we approach it.
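As a rough illustration of what experimenting with prompts can look like, the sketch below fills several templates with each label and averages the resulting vectors (sometimes called prompt ensembling). The templates, helper names, and numbers are our own assumptions for illustration, not prompts from the project or from any model's documentation.

```python
# Hypothetical templates; which phrasings work best is something to test empirically.
TEMPLATES = [
    "a chest X-ray showing {}",
    "a radiograph with signs of {}",
    "chest X-ray, findings: {}",
]

def build_prompts(labels, templates=TEMPLATES):
    """Return every template filled in with every label."""
    return {label: [t.format(label) for t in templates] for label in labels}

def average_vectors(vectors):
    """Element-wise mean of equal-length vectors, e.g. the embeddings of one label's prompts."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]

prompts = build_prompts(["pneumonia", "no pneumonia"])
for text in prompts["pneumonia"]:
    print(text)

# With a real text encoder, each prompt would be embedded and then averaged per label.
mean_vec = average_vectors([[1.0, 2.0], [3.0, 4.0]])
```

Averaging over several phrasings is one way to make a prediction less sensitive to the exact wording of any single prompt.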

Fairness by Design with Professor Russakovsky

Later in the day, we heard from Professor Olga Russakovsky, one of the founders of AI4ALL. She shared a bit of the program’s history, including how it began as Stanford SAILORS, and showed us a picture of the adorable robot plushies they used to give students. It was a fun reminder of how far the program has come, and how much the mission behind it still matters.

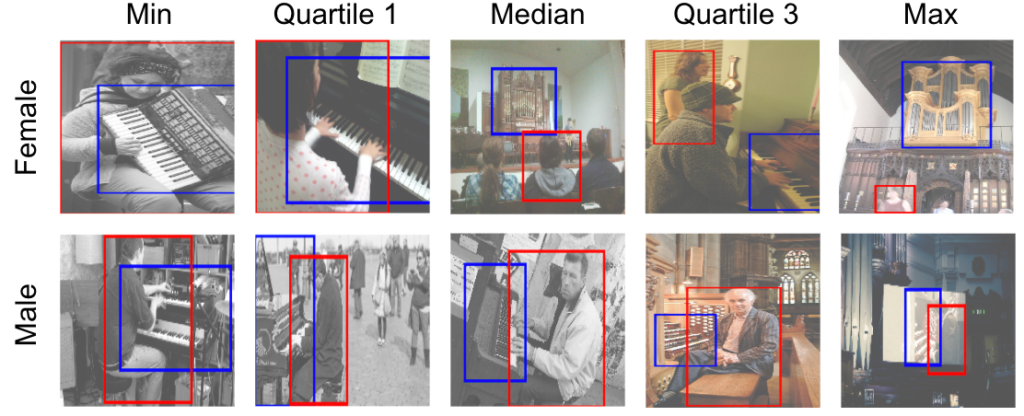

Her main talk focused on fairness and bias in computer vision. As AI systems get applied to real-world tasks like surveillance, hiring, or healthcare, the risks of amplifying societal biases grow. Professor Russakovsky walked us through examples of how datasets can reflect existing inequalities, from gender imbalances to geographic and cultural representation gaps. But bias doesn’t just come from the data; it shows up at every stage, from who captures the images to who designs and deploys the models.

She also discussed technical methods for reducing bias, like adjusting internal model representations and using data balancing strategies to improve fairness. Still, solving these problems requires more than just technical fixes. It means thinking critically about who’s represented, who gives consent, and who’s held accountable when AI systems affect people’s lives.
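To give a feel for what data balancing can mean in practice, here is a minimal sketch of one simple approach: weighting each example inversely to how common its group is, so every group contributes equally in total. This is our own illustration under that assumption, not a method taken from the talk, and the group names are placeholders.

```python
from collections import Counter

def balancing_weights(groups):
    """Weight each example by total / (num_groups * group_count).

    Rare groups get larger weights, and the weights sum to len(groups).
    """
    counts = Counter(groups)
    total = len(groups)
    n_groups = len(counts)
    return [total / (n_groups * counts[g]) for g in groups]

# Placeholder group labels: three examples from group "a", one from group "b".
groups = ["a", "a", "a", "b"]
weights = balancing_weights(groups)

# Total weight carried by each group after balancing.
per_group = Counter()
for g, w in zip(groups, weights):
    per_group[g] += w
print(dict(per_group))
```

Weights like these could be passed to a weighted loss or used as sampling probabilities, though reweighting alone does not address the non-technical questions raised above.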

Piecing It All Together

We wrapped up the day with office hours, where we had the chance to talk with our project groups a bit more and ask our mentors questions about CNNs, building models, and anything else we were curious about.

There was definitely a lot to take in today, but it feels like all the pieces (technical skills, project work, and conversations about responsibility) are starting to connect. Tomorrow, we dive deeper into our project code and start applying what we’ve learned!