Since K-Means does not achieve the desired level of separation between the clusters, we will try another clustering method, Gaussian Mixture Models (GMM).

GMMs are distribution-based models, rather than distance-based like K-Means. They do not assume that clusters have any particular geometry, unlike K-Means, which biases clusters toward a specific (circular) shape. Moreover, they work well with non-linear geometric distributions.
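As a rough illustration of the distribution-based view, the sketch below fits a two-component GMM with full covariance matrices to two elongated synthetic clusters and reads back both the soft and the hard assignments. The data, the variable names and the choice of two components are assumptions made for this example only; they are not part of the project's pipeline.

```python
import numpy as np
from sklearn.mixture import GaussianMixture

rng = np.random.default_rng(0)
# Two elongated (non-circular) synthetic clusters; purely illustrative data.
a = rng.normal(size=(200, 2)) * [4.0, 0.3]
b = rng.normal(size=(200, 2)) * [4.0, 0.3] + [0.0, 3.0]
X = np.vstack([a, b])

gmm = GaussianMixture(n_components=2, covariance_type="full", random_state=0).fit(X)
probs = gmm.predict_proba(X)   # soft assignment: one probability per component
labels = gmm.predict(X)        # hard assignment: the most probable component
print(probs[:3].round(3))
print(gmm.covariances_.shape)  # one full 2x2 covariance matrix per component
```

Each row of `probs` sums to 1, which is what distinguishes this from the hard, distance-based assignment of K-Means.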

The main disadvantage is its potentially quick convergence to a local minimum, which is not optimal. However, we can adjust its parameters accordingly.
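One parameter that helps against poor local optima is `n_init`, which reruns the fit from several initializations and keeps the best one. The sketch below compares the final lower bound on the log-likelihood for one and for ten initializations on synthetic data. The data and the use of `init_params="random"` are assumptions chosen to make the effect easier to see.

```python
import numpy as np
from sklearn.mixture import GaussianMixture

rng = np.random.default_rng(1)
# Three synthetic blobs; illustrative data only.
centers = ([0, 0], [4, 4], [0, 5])
X = np.vstack([rng.normal(loc=c, scale=1.0, size=(150, 2)) for c in centers])

# Same seed for both models, so the first initialization is shared.
single = GaussianMixture(n_components=3, init_params="random",
                         n_init=1, random_state=0).fit(X)
multi = GaussianMixture(n_components=3, init_params="random",
                        n_init=10, random_state=0).fit(X)

print(f"n_init=1 : {single.lower_bound_:.4f}")
print(f"n_init=10: {multi.lower_bound_:.4f}")
```

Because the ten-start run includes the single start, its lower bound should never be worse. How much it improves depends on the data.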

from sklearn.mixture import GaussianMixture
from sklearn.mixture import BayesianGaussianMixture

gm_df = data_2015_2021.copy()

bgm = BayesianGaussianMixture(n_components=10, n_init=7, max_iter=1000)
bgm.fit(pca_scores)
np.round(bgm.weights_, 2)

array([0.18, 0.16, 0.06, 0.07, 0.06, 0.09, 0.18, 0.03, 0.01, 0.15])

We used BayesianGaussianMixture to choose the number of clusters. In short, it returns the weight of each cluster; superfluous clusters receive weights below 0.10, which effectively removes them automatically. In the end, four components have a weight of at least 0.10, so we have 4 distinct clusters.
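The selection rule described above can be sketched as a simple threshold on the weights. The array is copied from the output shown earlier, and the 0.10 cut-off comes from the text; the helper name `significant_components` is hypothetical.

```python
import numpy as np

# Weights copied from the BayesianGaussianMixture output shown above.
weights = np.array([0.18, 0.16, 0.06, 0.07, 0.06, 0.09, 0.18, 0.03, 0.01, 0.15])

def significant_components(weights, threshold=0.10):
    """Return the indices of components whose weight is at least `threshold`."""
    return np.flatnonzero(np.asarray(weights) >= threshold)

kept = significant_components(weights)
print(kept, len(kept))
```

Four components pass the threshold, which matches the `n_components=4` used for the final GaussianMixture.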

gm = GaussianMixture(n_components=4, init_params='kmeans', tol=1e-4,
                     covariance_type='full', n_init=10, random_state=1)

plays_gm_df['gm_cluster'] = gm.fit_predict(pca_scores)

pca_gm_df = pd.concat([gm_df.reset_index(drop=True), pd.DataFrame(
    data=pca_scores, columns=['pca_1', 'pca_2', 'pca_3', 'pca_4'])], axis=1)
pca_gm_df.head()

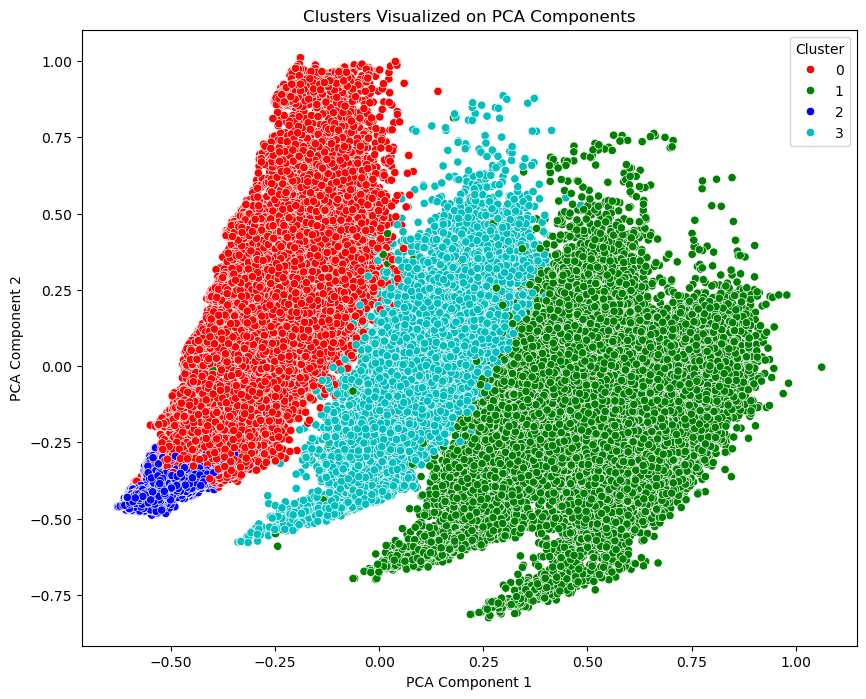

Once the clusters are predicted, the visualization of the data points in two dimensions is as follows:

Now cluster_3 and cluster_0 are separated better than the other clusters, compared to K-Means clustering.

In order to validate the model, we will use K-Fold Cross Validation. Here is the function to run:

from sklearn.metrics import accuracy_score

def train_model(df, folds, features, model):
    # Shuffle the dataframe
    df = df.sample(frac=1, random_state=42).reset_index(drop=True)
    scores = []  # To store accuracy scores for each fold
    fold_size = len(df) // folds
    for fold in range(folds):
        start = fold * fold_size
        # The last fold absorbs the remainder rows
        end = (fold + 1) * fold_size if fold != folds - 1 else len(df)
        # Validation set for this fold
        df_valid = df[start:end].reset_index(drop=True)
        # Training data
        df_train = pd.concat([df[:start], df[end:]], axis=0).reset_index(drop=True)
        X_train, y_train = df_train[features].values, df_train['gm_cluster'].values
        X_valid, y_valid = df_valid[features].values, df_valid['gm_cluster'].values
        # Train the model
        model.fit(X_train, y_train)
        # Predict on validation set
        valid_preds = model.predict(X_valid)
        # Calculate accuracy
        accuracy = accuracy_score(y_valid, valid_preds)
        print(f"Fold {fold}, Accuracy: {accuracy:.4f}")
        scores.append(accuracy)
    # Mean accuracy across all folds
    mean_accuracy = np.mean(scores)
    print(f"Mean Accuracy: {mean_accuracy:.4f}")
    return mean_accuracy
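To make the fold arithmetic concrete, the sketch below isolates the start/end computation from the function above and prints the row ranges for a hypothetical dataset of 103 rows. The helper name `fold_bounds` and the row count are assumptions for illustration.

```python
def fold_bounds(n_rows, folds):
    """Return (start, end) row indices per fold; the last fold takes the remainder."""
    fold_size = n_rows // folds
    bounds = []
    for fold in range(folds):
        start = fold * fold_size
        end = (fold + 1) * fold_size if fold != folds - 1 else n_rows
        bounds.append((start, end))
    return bounds

bounds = fold_bounds(103, 5)
print(bounds)
```

With 103 rows and 5 folds, the first four folds hold 20 rows each and the last one holds 23, so no row is dropped from validation.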

We will also implement a couple of functions to assess the features according to their importances, because we may encounter some under- or overfitting issues in the validation part.

from sklearn.inspection import permutation_importance

def feat_permutation_importance(df, features, model):
    # define the dataset features and target
    X = df[features]
    y = df["gm_cluster"]
    # fit the model
    model.fit(X, y)
    # perform permutation importance
    results = permutation_importance(model, X, y, scoring='f1_weighted')
    # get importance
    importance = results.importances_mean
    idxs = np.argsort(importance)
    importances = pd.Series(importance, index=features)
    # plot feature importance
    plt.title('Permutation Feature Importance', fontsize=12)
    plt.barh(range(len(idxs)), importances.iloc[idxs], align='center')
    plt.yticks(range(len(idxs)), [features[i] for i in idxs])
    plt.xlabel('Feature Importance')
    plt.show()
    return importances
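As a small sanity check of what permutation importance measures, the sketch below builds a synthetic dataset in which the label depends on the first column only, and the second column is pure noise. The data and the column names `signal` and `noise` are assumptions for this example.

```python
import numpy as np
from sklearn.inspection import permutation_importance
from sklearn.linear_model import LogisticRegression

rng = np.random.default_rng(0)
X = rng.normal(size=(500, 2))
y = (X[:, 0] > 0).astype(int)   # the label depends on column 0 only

model = LogisticRegression(max_iter=1000).fit(X, y)
result = permutation_importance(model, X, y, scoring="f1_weighted",
                                n_repeats=5, random_state=0)
for name, score in zip(["signal", "noise"], result.importances_mean):
    print(f"{name}: {score:.3f}")
```

Shuffling the informative column should cost the model most of its score, while shuffling the noise column should change almost nothing. A single feature with a very large importance is the pattern to watch for in the real data.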

When we eliminate some features, the importances of the others change. For this reason, we will use Recursive Feature Elimination (RFE). Briefly, in each iteration the feature with the lowest importance is eliminated, and we decide how many features are left at the end.

from sklearn.feature_selection import RFE
from sklearn.model_selection import cross_val_score

def rfe_feature_selection(df, features, num_features_to_select, model):
    ...
    ...
    ...
    return selected_features, rankings

def rfe_with_cv(df, features, model):
    X = df[features]
    y = df['gm_cluster']
    results = {}
    for num_features in range(1, len(features) + 1):
        rfe = RFE(estimator=model, n_features_to_select=num_features)
        rfe.fit(X, y)
        # Cross-validation with the selected features
        selected_features = [features[i] for i in range(len(features)) if rfe.support_[i]]
        score = cross_val_score(model, X[selected_features], y, cv=5, scoring='accuracy')
        # Store the results
        results[num_features] = np.mean(score)
    return results
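For readers who want to see RFE in isolation, here is a minimal sketch on synthetic data where only the first two of five columns carry signal. It is not the elided `rfe_feature_selection` body above; the data and the choice of two features to keep are assumptions for illustration.

```python
import numpy as np
from sklearn.feature_selection import RFE
from sklearn.linear_model import LogisticRegression

rng = np.random.default_rng(0)
X = rng.normal(size=(300, 5))
y = (X[:, 0] + X[:, 1] > 0).astype(int)   # only columns 0 and 1 matter

rfe = RFE(estimator=LogisticRegression(max_iter=1000), n_features_to_select=2)
rfe.fit(X, y)
print(rfe.support_)   # boolean mask of the features that were kept
print(rfe.ranking_)   # 1 = kept; larger numbers were eliminated earlier
```

`support_` is the mask used in `rfe_with_cv` to pick the selected columns, and `ranking_` records the order in which the rest were dropped.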

Now, we can scale the data with MinMaxScaler. The features that need to be scaled are the same features used when creating the clusters:

['START_POSITION',

'MIN',

'OFF_RATING',

'DEF_RATING',

'AST_PCT',

'AST_TOV',

'AST_RATIO',

'OREB_PCT',

'DREB_PCT',

'REB_PCT',

'TM_TOV_PCT',

'EFG_PCT',

'TS_PCT',

'USG_PCT',

'PACE',

'PACE_PER40',

'POSS',

'PIE']

We will map these columns with an _n suffix:

from sklearn.preprocessing import MinMaxScaler

train_data = train_df[features].values
scaler = MinMaxScaler()
scaler.fit(train_data)
train_data_scaled = scaler.transform(train_data)
train_norm_features = [feat+'_n' for feat in train_features]
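The snippet above does not show how the scaled values end up next to the original columns, so the following is one possible way to do it, sketched on a toy frame. The values, the frame name `norm_df` and the use of `pd.concat` are assumptions, not the project's actual code.

```python
import numpy as np
import pandas as pd
from sklearn.preprocessing import MinMaxScaler

# Toy values for two of the columns; not real player data.
df = pd.DataFrame({"MIN": [10.0, 20.0, 30.0], "POSS": [40.0, 60.0, 50.0]})
features = ["MIN", "POSS"]
norm_features = [feat + "_n" for feat in features]

scaler = MinMaxScaler()
scaled = scaler.fit_transform(df[features].values)

# Attach the scaled columns under their "_n" names, keeping the originals.
norm_df = pd.concat(
    [df, pd.DataFrame(scaled, columns=norm_features, index=df.index)], axis=1
)
print(norm_df)
```

Each `_n` column now spans the range 0 to 1, with the smallest original value mapped to 0 and the largest to 1.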

Logistic Regression

We will first try the baseline model, which includes every feature we mentioned above.

from sklearn.linear_model import LogisticRegression

logres = LogisticRegression(max_iter=1000, solver='lbfgs', n_jobs=-1)
train_model(train_norm_df, 5, train_norm_features, logres)

Fold 0, Accuracy: 0.9964

Fold 1, Accuracy: 0.9964

Fold 2, Accuracy: 0.9962

Fold 3, Accuracy: 0.9968

Fold 4, Accuracy: 0.9962

Mean Accuracy: 0.9964

0.9964271451771785

We should not be comfortable with such a high accuracy from the very beginning. Let's check the feature importances below.

START_POSITION_n 0.582933

MIN_n 0.014381

OFF_RATING_n 0.000202

DEF_RATING_n -0.000030

AST_PCT_n 0.000114

AST_TOV_n -0.000072

AST_RATIO_n 0.001265

OREB_PCT_n 0.000130

DREB_PCT_n 0.002387

REB_PCT_n 0.000569

TM_TOV_PCT_n 0.000023

EFG_PCT_n 0.043940

TS_PCT_n 0.106686

USG_PCT_n 0.001300

PACE_n 0.000000

PACE_PER40_n 0.000000

POSS_n 0.028949

PIE_n 0.000002

dtype: float64

train_model(train_norm_df, 5, ['START_POSITION_n'], logres)

Fold 0, Accuracy: 0.9018

Fold 1, Accuracy: 0.8995

Fold 2, Accuracy: 0.9033

Fold 3, Accuracy: 0.9045

Fold 4, Accuracy: 0.9046

Mean Accuracy: 0.9027

0.9027288943318869

Using the START_POSITION_n feature alone, we achieved an impressive accuracy of 90% with our logistic regression model. However, this feature dominates the model and causes overfitting, as it outperforms the other features significantly. Let's check the mean stats of the group_1 features across all the START_POSITION values:

OFF_RATING AST_PCT AST_TOV TM_TOV_PCT EFG_PCT TS_PCT POSS

START_POSITION

0 101.680642 0.120858 0.655623 10.245366 0.455788 0.486946 34.830412

1 108.583286 0.217609 2.013673 10.038103 0.504163 0.539964 64.867027

2 108.151839 0.119372 1.155329 9.782779 0.520350 0.552918 62.546659

3 108.147874 0.114246 0.995818 11.557729 0.562291 0.589391 56.833615

OFF_RATING, AST_TOV, EFG_PCT, TS_PCT and POSS take their minimum values for START_POSITION 0, that is, NaN (remember we encoded the NaN positions as 0). This means that the variable reveals that these players did not start the game, hence there is a high probability that they played less time than the others and consequently have worse stats. To be more specific, the less you play, the lower the chance to increase any record (passes, points, etc.). In the same context, another variable may be guilty: MIN. It precisely expresses the time a player spent on the court, so we have to ignore it, too. To confirm, let's try again after removing START_POSITION_n.
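A per-group comparison like the table above can be produced with a pandas `groupby`. The sketch below uses a toy frame with invented values, so only the shape of the call is meaningful, not the numbers.

```python
import pandas as pd

# Toy rows with invented values; START_POSITION 0 stands for "did not start".
toy = pd.DataFrame({
    "START_POSITION": [0, 0, 1, 1],
    "TS_PCT": [0.40, 0.50, 0.55, 0.65],
    "POSS": [30, 40, 60, 70],
})

group_means = toy.groupby("START_POSITION")[["TS_PCT", "POSS"]].mean()
print(group_means)
print(group_means.idxmin())   # which START_POSITION has the lowest mean per stat
```

If one group has the lowest mean for most stats, as START_POSITION 0 does in the real table, the grouping variable is likely to act as a shortcut for the classifier.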

MIN_n 0.245335

OFF_RATING_n 0.001005

DEF_RATING_n 0.003744

AST_PCT_n 0.032213

AST_TOV_n 0.000197

AST_RATIO_n 0.005186

OREB_PCT_n 0.007655

DREB_PCT_n 0.010354

REB_PCT_n 0.029342

TM_TOV_PCT_n 0.002220

EFG_PCT_n 0.069433

TS_PCT_n 0.114258

USG_PCT_n 0.010686

PACE_n -0.000002

PACE_PER40_n -0.000002

POSS_n 0.060816

PIE_n -0.000114

dtype: float64

Clearly, the same applies to MIN: it leaks information about the time the athlete spent on the court. So, the model knows a priori that a player with a longer playing time may have better stats.

Now we have to repeat the normalization so that the scaler is fit on the new shape of the data, [:, 16] instead of [:, 18].

# re-define feats
train_feats.remove('START_POSITION')
train_feats.remove('MIN')
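A quick way to confirm the change in width is to apply the removal to the feature list given earlier and compare the lengths. The list is copied from the article; the loop over the two leaky names is just a compact, assumed way of writing the same two `remove` calls.

```python
features = [
    'START_POSITION', 'MIN', 'OFF_RATING', 'DEF_RATING', 'AST_PCT', 'AST_TOV',
    'AST_RATIO', 'OREB_PCT', 'DREB_PCT', 'REB_PCT', 'TM_TOV_PCT', 'EFG_PCT',
    'TS_PCT', 'USG_PCT', 'PACE', 'PACE_PER40', 'POSS', 'PIE',
]

train_feats = list(features)          # work on a copy, keep the original list
for leaky in ('START_POSITION', 'MIN'):
    train_feats.remove(leaky)

print(len(features), len(train_feats))
```

The scaler then has to be fit again on the 16 remaining columns, because a scaler fit on 18 columns cannot transform a 16-column array.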

Now, it is time to apply PCA to the normalized data.

We can choose any number of components above 6, since it will explain more than 90% of the variance. In our case, we chose 7 as the optimal number of components. So, we will initialize the logistic regression again. It should be noted that we still have many features, and removing some of them can increase the importance of the others.
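The 90% rule can be sketched with the cumulative sum of `explained_variance_ratio_`. The helper below returns the smallest number of components that reaches the threshold; the helper name and the synthetic 16-column data are assumptions, and the project itself settled on 7 components rather than the minimum.

```python
import numpy as np
from sklearn.decomposition import PCA

def components_for_variance(ratios, threshold=0.90):
    """Smallest number of leading components whose cumulative ratio reaches `threshold`."""
    cumulative = np.cumsum(ratios)
    return int(np.argmax(cumulative >= threshold) + 1)

rng = np.random.default_rng(0)
# Synthetic stand-in for the 16 scaled features, with decreasing column spread.
X = rng.normal(size=(200, 16)) * np.linspace(3.0, 0.2, 16)

pca = PCA().fit(X)
cumulative = np.cumsum(pca.explained_variance_ratio_)
n = components_for_variance(pca.explained_variance_ratio_)
print(n, round(float(cumulative[n - 1]), 4))
```

Choosing a slightly larger number than this minimum, as done here with 7, trades a little extra dimensionality for more retained variance.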

selected_features, ranks = rfe_feature_selection(train_norm_pca_df, train_norm_features, 12, logres)

Fold 0, Accuracy: 0.7242

Fold 1, Accuracy: 0.7187

Fold 2, Accuracy: 0.7190

Fold 3, Accuracy: 0.7189

Fold 4, Accuracy: 0.7170

Mean Accuracy: 0.7195

0.7195495327600436

First, we used the 12 most important features, and the validation accuracy we got is shown above. We also tried the 8 most important features; here is the result:

Fold 0, Accuracy: 0.7096

Fold 1, Accuracy: 0.7063

Fold 2, Accuracy: 0.7080

Fold 3, Accuracy: 0.7049

Fold 4, Accuracy: 0.7068

Mean Accuracy: 0.7071

0.7071270732941082

Since the accuracy is higher with 12 features, we will pick them.

['OFF_RATING', 'DEF_RATING', 'AST_PCT', 'AST_RATIO', 'OREB_PCT', 'DREB_PCT', 'REB_PCT', 'EFG_PCT', 'TS_PCT', 'USG_PCT', 'POSS', 'PIE']

From here, we will apply GridSearch to see if we can further optimize the model.

# initialize grid search
# estimator is the model that we have defined
# we use f1_weighted as our metric
# cv=5 means that we are using 5-fold cv
from sklearn import model_selection

model = model_selection.GridSearchCV(
    estimator=logres,
    param_grid=param_grid,
    scoring="f1_weighted",
    verbose=10,
    n_jobs=1,
    cv=5
)

X = train_norm_pca_df[train_norm_selected_features].values
y = train_norm_pca_df.gm_cluster.values

# fit model on training data
model.fit(X, y)
print(f"Best score: {model.best_score_}")
print("Best parameters set:")
best_parameters = model.best_estimator_.get_params()
for param_name in sorted(param_grid.keys()):
    print(f"\t{param_name}: {best_parameters[param_name]}")

Best score: 0.7151094663220918

Best parameters set:

C: 100
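The `param_grid` used for logistic regression is not shown above; only its best value of `C` is reported. The sketch below shows what a search over `C` could look like on synthetic data. The candidate values, the dataset and the variable name `search` are all assumptions for illustration.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV

# Synthetic 3-class problem standing in for the cluster labels.
X, y = make_classification(n_samples=300, n_features=8, n_informative=4,
                           n_classes=3, random_state=0)

param_grid = {"C": [0.01, 0.1, 1, 10, 100]}   # hypothetical candidate values

search = GridSearchCV(LogisticRegression(max_iter=1000), param_grid=param_grid,
                      scoring="f1_weighted", cv=5, n_jobs=1)
search.fit(X, y)
print(search.best_params_, round(search.best_score_, 4))
```

`C` is the inverse regularization strength, so a large best value such as 100 suggests that the model benefits from weaker regularization.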

Now, we will initialize LogReg again with the tuned hyperparameter C. These are the results we get with and without PCA:

# w/o PCA

Fold 0, Accuracy: 0.7244

Fold 1, Accuracy: 0.7189

Fold 2, Accuracy: 0.7195

Fold 3, Accuracy: 0.7199

Fold 4, Accuracy: 0.7172

Mean Accuracy: 0.7200

0.7199701818375442

# with PCA

Fold 0, Accuracy: 0.7217

Fold 1, Accuracy: 0.7141

Fold 2, Accuracy: 0.7152

Fold 3, Accuracy: 0.7145

Fold 4, Accuracy: 0.7153

Mean Accuracy: 0.7161

0.7161470674369692

LightGBM

Apart from Logistic Regression, we will use another classification model: LightGBM.

# Initialize the LightGBM Classifier

import lightgbm as lgb

lgb_clf = lgb.LGBMClassifier(n_jobs=-1, verbose = -1)

These are the results we get with 16 features and with the 12 selected features, respectively:

# 16 features

Fold 0, Accuracy: 0.7385

Fold 1, Accuracy: 0.7316

Fold 2, Accuracy: 0.7340

Fold 3, Accuracy: 0.7309

Fold 4, Accuracy: 0.7298

Mean Accuracy: 0.7330

0.7329517318495247

# 12 selected features

Fold 0, Accuracy: 0.7333

Fold 1, Accuracy: 0.7281

Fold 2, Accuracy: 0.7287

Fold 3, Accuracy: 0.7269

Fold 4, Accuracy: 0.7251

Mean Accuracy: 0.7284

0.7284151114187588

Let's adjust the parameters of LightGBM and apply GridSearch to see if we can do any better.

param_grid = {
    "num_leaves": [31, 50],  # Number of leaves in the tree (higher values can make the model more complex)
    "learning_rate": [0.05, 0.1],  # Learning rate (lower values require more boosting rounds)
    "n_estimators": [100, 150, 200],  # Number of boosting rounds (trees)
    "max_depth": [-1, 5],  # Maximum depth of the tree, -1 means no limit
    "min_child_samples": [20, 30],  # Minimum number of data points in a leaf
    "colsample_bytree": [0.8, 1.0],  # Fraction of features to consider for each tree
    "subsample": [0.8, 1.0],  # Fraction of data to be used for fitting each tree (to prevent overfitting)
}

# we use f1_weighted as our metric
# cv=5 means that we are using 5-fold cv
model = model_selection.GridSearchCV(
    estimator=lgb_clf,
    param_grid=param_grid,
    scoring="f1_weighted",
    verbose=10,
    n_jobs=1,
    cv=5
)

# fit model on training data
model.fit(X, y)

# get best score
print(f"Best score: {model.best_score_}")

# get best params
print("Best parameters set:")
best_parameters = model.best_estimator_.get_params()
for param_name in sorted(param_grid.keys()):
    print(f"\t{param_name}: {best_parameters[param_name]}")

Best score: 0.7218224201696597

Best parameters set:

colsample_bytree: 0.8

learning_rate: 0.1

max_depth: -1

min_child_samples: 20

n_estimators: 100

num_leaves: 50

subsample: 0.8

Finally, we will train the model with the tuned parameters and normalized features.

Fold 0, Accuracy: 0.7339

Fold 1, Accuracy: 0.7263

Fold 2, Accuracy: 0.7284

Fold 3, Accuracy: 0.7261

Fold 4, Accuracy: 0.7259

Mean Accuracy: 0.7281

0.7281062804504673

And this is the last accuracy score we got. We can observe that GridSearch and eliminating too many features (beyond excluding START_POSITION and MIN) did not provide any benefit. It seems that LightGBM with 16 features, without normalization and GridSearch, performs the best for now. But we want to experiment with some different models as well, such as Random Forest, XGBoost, etc., to see if we can do any better.
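To make the comparison explicit, the sketch below collects the mean accuracies reported above (after removing START_POSITION and MIN) and sorts them. The numbers are copied from the printed outputs; the dictionary labels are shorthand chosen for this example.

```python
# Mean accuracies copied from the cross-validation outputs reported above.
mean_accuracy = {
    "LogReg, tuned C, without PCA": 0.7200,
    "LogReg, tuned C, with PCA": 0.7161,
    "LightGBM, 16 features": 0.7330,
    "LightGBM, 12 selected features": 0.7284,
    "LightGBM, tuned": 0.7281,
}

best = max(mean_accuracy, key=mean_accuracy.get)
for name, acc in sorted(mean_accuracy.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:32s} {acc:.4f}")
print("Best so far:", best)
```

The spread between the configurations is under two percentage points, so the ranking should be read as indicative rather than decisive.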

Ender Orman, M. Beşir Acar