Today’s data-driven world calls for efficient methods to prepare and analyze vast amounts of data. While many advances have focused on improving model architectures or building automated machine learning (AutoML) pipelines, comparatively few solutions address advanced data preprocessing, a foundational step that can make or break a predictive model.

In this article, we’ll explore a framework for automated data preprocessing that goes beyond the usual data cleaning and encoding steps. It covers more sophisticated techniques such as feature selection, feature engineering, and a novel sampling method. We’ll also see how these techniques can be combined in a single pipeline to boost model accuracy and reduce computational overhead.

Preprocessing ensures that your dataset is “model-ready.” Typical approaches usually stop at:

- Handling missing values

- Encoding categorical variables

- Normalization or standardization
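For reference, those baseline steps look roughly like this in plain Python. This is a minimal sketch that operates on lists of values. It assumes mean imputation, one-hot encoding, and z-score standardization, and the helper names are hypothetical. In practice you would likely use pandas or scikit-learn instead.

```python
from statistics import mean, pstdev


def impute_mean(values):
    """Replace missing entries (None) with the mean of the observed values."""
    observed = [v for v in values if v is not None]
    fill = mean(observed)
    return [fill if v is None else v for v in values]


def one_hot(values):
    """Encode a categorical column as one 0/1 indicator column per category."""
    categories = sorted(set(values))
    return {f"is_{c}": [1 if v == c else 0 for v in values] for c in categories}


def standardize(values):
    """Rescale to zero mean and unit (population) standard deviation."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]  # a constant column carries no scale information
    return [(v - mu) / sigma for v in values]


ages = impute_mean([20, None, 30, 40])     # [20, 30, 30, 40]
colors = one_hot(["red", "blue", "red"])   # {'is_blue': [0, 1, 0], 'is_red': [1, 0, 1]}
scaled = standardize(ages)                 # zero mean, unit standard deviation
```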

But these steps only scratch the surface. Advanced preprocessing can create entirely new features, remove wasteful ones, and resample large datasets without losing important data patterns.

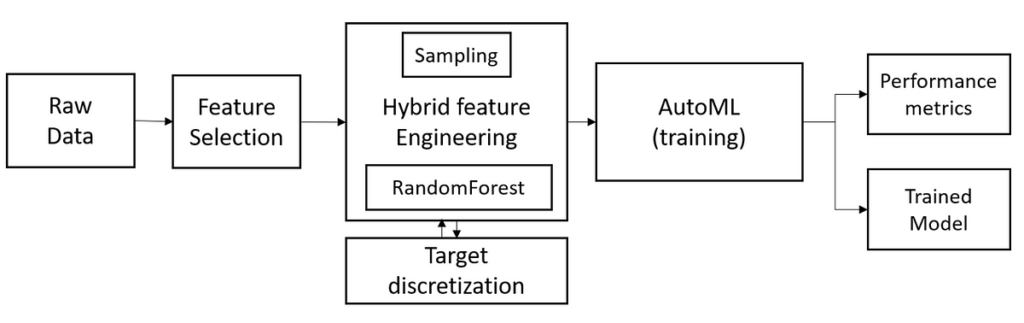

In the paper, the authors present an automated preprocessing pipeline composed of four main steps:

- Feature Selection: identify and remove redundant, highly correlated, or statistically insignificant features.

- Sampling: use a novel “Bin-Based” sampling method to create smaller yet representative datasets.

- Target Discretization: convert continuous targets into discrete classes when regression fails to deliver satisfactory performance.

- Hybrid Feature Engineering: automatically generate new features using unary or binary transformations, then prune them to keep only the most informative ones.

Often, datasets contain columns with:

- Constant values (e.g., “Machine No.” is always the same number),

- A distinct value in every row, so the number of unique values equals the number of rows (e.g., IDs, names, or emails),

- Very high correlations with other columns (redundant from an information perspective).
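A minimal sketch of these three column checks follows. It assumes the data is a dict that maps column names to lists of values. The function names and the 0.95 correlation cut-off are illustrative assumptions and are not taken from the paper. As a simplification, the "distinct value in every row" rule applies only to non-float columns, because continuous measurements are naturally almost all distinct.

```python
from itertools import combinations
from math import sqrt


def pearson(x, y):
    """Pearson correlation of two equal-length numeric lists (0.0 if either is constant)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0
    return sxy / sqrt(sxx * syy)


def is_numeric(values):
    """True if every value in the column is an int or a float."""
    return all(isinstance(v, (int, float)) for v in values)


def screen_columns(columns, corr_threshold=0.95):
    """Return the names of columns to drop (the threshold is a hypothetical default)."""
    n_rows = len(next(iter(columns.values())))
    drop = set()
    for name, values in columns.items():
        n_unique = len(set(values))
        if n_unique == 1:
            drop.add(name)  # constant column, e.g. "Machine No."
        elif n_unique == n_rows and not all(isinstance(v, float) for v in values):
            drop.add(name)  # one distinct value per row, e.g. IDs, names, emails
    # Among the remaining numeric columns, drop the second column of each highly correlated pair.
    numeric = [c for c in columns if c not in drop and is_numeric(columns[c])]
    for a, b in combinations(numeric, 2):
        if a not in drop and b not in drop and abs(pearson(columns[a], columns[b])) > corr_threshold:
            drop.add(b)
    return drop


data = {
    "machine_no": [7, 7, 7, 7],
    "email": ["a@x", "b@x", "c@x", "d@x"],
    "temp_c": [20.0, 25.0, 30.0, 22.0],
    "temp_f": [68.0, 77.0, 86.0, 71.6],  # same information as temp_c
    "load": [0.3, 0.9, 0.1, 0.5],
}
print(sorted(screen_columns(data)))  # ['email', 'machine_no', 'temp_f']
```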

The paper outlines a method that combines variance analysis, correlation thresholds, and a statistical test to detect and remove these less significant features. The test is an ANOVA F-test for classification or a univariate linear regression test for regression. Together, these checks ensure that the dataset retains only the columns that carry meaningful information for the prediction task.
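Both statistical tests can be sketched with only the standard library. The code below computes the one-way ANOVA F statistic for a classification target. For a regression target, it computes the univariate linear regression F statistic, F = r²/(1 − r²)·(n − 2). The standard library has no F-distribution CDF, so this sketch ranks features by their raw F value against a hypothetical `min_score` cut-off instead of a p-value. That is a simplification of how these tests are normally applied.

```python
def anova_f(feature, labels):
    """One-way ANOVA F statistic of a numeric feature grouped by class label."""
    groups = {}
    for x, y in zip(feature, labels):
        groups.setdefault(y, []).append(x)
    n, k = len(feature), len(groups)
    if k < 2 or n <= k:
        return 0.0  # not enough classes or rows to estimate both variances
    grand = sum(feature) / n
    means = {y: sum(g) / len(g) for y, g in groups.items()}
    ss_between = sum(len(g) * (means[y] - grand) ** 2 for y, g in groups.items())
    ss_within = sum((x - means[y]) ** 2 for y, g in groups.items() for x in g)
    if ss_within == 0:
        return float("inf") if ss_between > 0 else 0.0
    return (ss_between / (k - 1)) / (ss_within / (n - k))


def regression_f(feature, target):
    """Univariate linear regression F statistic: F = r^2 / (1 - r^2) * (n - 2)."""
    n = len(feature)
    mx, my = sum(feature) / n, sum(target) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(feature, target))
    sxx = sum((a - mx) ** 2 for a in feature)
    syy = sum((b - my) ** 2 for b in target)
    if sxx == 0 or syy == 0:
        return 0.0
    r2 = sxy * sxy / (sxx * syy)
    return float("inf") if r2 >= 1 else r2 / (1 - r2) * (n - 2)


def select_features(columns, target, score_fn, min_score=1.0):
    """Keep features whose F statistic exceeds a hypothetical cut-off."""
    return [name for name, values in columns.items() if score_fn(values, target) > min_score]


cols = {"signal": [1, 2, 3, 10, 11, 12], "noise": [1, 5, 3, 2, 4, 3]}
labels = [0, 0, 0, 1, 1, 1]
print(select_features(cols, labels, anova_f))  # ['signal']
```

For a regression target, pass `regression_f` as `score_fn` instead of `anova_f`.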

Working with full datasets can be computationally expensive, especially during time-intensive tasks such as feature engineering. Conventional sampling, such as random sampling, may fail to preserve the data distribution. Stratified sampling does better but can be very computationally expensive.

That’s why the authors propose Bin-Based sampling:

- Divide continuous features into bins (based on their distribution).

- Draw random samples from each bin.

- Combine the resulting samples from each feature.

This method preserves distributional characteristics better than pure random sampling, while being far more efficient than fully stratified approaches.
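The three steps above can be sketched as follows. Several choices here are assumptions made for illustration: equal-frequency bins built by sorting each feature, a fixed number of rows drawn per bin, and the union of the per-feature draws as the final sample. The paper’s exact binning and sample sizes may differ.

```python
import random


def bin_based_sample(columns, n_bins=10, per_bin=5, seed=0):
    """Sample row indices bin by bin for every numeric column, then take the union."""
    rng = random.Random(seed)
    n_rows = len(next(iter(columns.values())))
    bin_size = max(1, n_rows // n_bins)
    chosen = set()
    for values in columns.values():
        # Rank rows by this feature so that consecutive slices form equal-frequency bins.
        order = sorted(range(n_rows), key=values.__getitem__)
        for start in range(0, n_rows, bin_size):
            bin_rows = order[start:start + bin_size]
            # Draw a few random rows from this bin (fewer if the bin is small).
            chosen.update(rng.sample(bin_rows, min(per_bin, len(bin_rows))))
    return sorted(chosen)


gen = random.Random(42)
data = {"a": list(range(1000)), "b": [gen.random() for _ in range(1000)]}
idx = bin_based_sample(data)
print(len(idx))  # between 50 and 100 rows: 10 bins x 5 rows per feature, overlaps merged
```

Because every bin of every feature contributes rows, the tails of each feature’s distribution are represented even in a small sample.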

When a regression model’s R² score is too low, the authors suggest a simple fix: convert the continuous target into discrete buckets. This turns the problem into a classification task, which sometimes yields better predictive performance when fine-grained regression proves unreliable.

This step is optional, but it’s an easy way to avoid discarding datasets that aren’t well suited to direct regression modeling.
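Here is a minimal sketch of that fallback. The R² computation is the standard 1 − SS_res/SS_tot. The `min_r2` threshold of 0.5 and the equal-frequency bucketing into three classes are hypothetical choices, since the exact threshold and bucketing strategy are not given here.

```python
def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_y = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_y) ** 2 for t in y_true)
    return 1 - ss_res / ss_tot


def discretize_target(y, n_classes=3):
    """Map a continuous target to labels 0..n_classes-1 by rank (equal-frequency buckets)."""
    order = sorted(range(len(y)), key=lambda i: y[i])
    labels = [0] * len(y)
    for rank, i in enumerate(order):
        labels[i] = rank * n_classes // len(y)
    return labels


def maybe_discretize(y_true, y_pred, min_r2=0.5, n_classes=3):
    """Hypothetical rule: switch to classification when regression R^2 is below min_r2."""
    if r2_score(y_true, y_pred) < min_r2:
        return discretize_target(y_true, n_classes), "classification"
    return y_true, "regression"


y_true = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
y_pred = [3.0, 4.0, 3.5, 3.0, 4.0, 3.5]  # a weak regressor, R^2 is about 0.06
target, task = maybe_discretize(y_true, y_pred)
print(task, target)  # classification [0, 0, 1, 1, 2, 2]
```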

Often, real performance gains come not from fancy ML models but from better features. The paper draws inspiration from approaches like Cognito and ExploreKit but streamlines them by introducing a Hybrid Feature Engineering (HFE) method:

- Unary transformations on numerical columns (e.g., log, inverse, square).

- Binary transformations combining two columns (e.g., multiplication, ratio).

- Quick pruning of new features via the same feature selection logic used earlier.

- Ranking model: evaluate each newly generated feature’s impact on a baseline model, keeping only those that improve the metrics.
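The generate-then-rank loop can be sketched as below. The paper evaluates each candidate with a baseline model. This sketch swaps in a simpler stand-in: it scores each candidate by its absolute Pearson correlation with the target and keeps only candidates that beat the best original feature. That scoring rule, the operator set, and all function names are assumptions. The pruning step is omitted for brevity.

```python
import math
from itertools import combinations

# Illustrative operator sets; an operation returns None where it is undefined.
UNARY = {
    "log": lambda v: math.log(v) if v > 0 else None,
    "inv": lambda v: 1 / v if v != 0 else None,
    "sq": lambda v: v * v,
}
BINARY = {
    "mul": lambda a, b: a * b,
    "div": lambda a, b: a / b if b != 0 else None,
}


def pearson_abs(x, y):
    """Absolute Pearson correlation (0.0 if either list is constant)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0
    return abs(sxy) / math.sqrt(sxx * syy)


def generate_candidates(columns):
    """Apply every unary op to each column and every binary op to each column pair."""
    out = {}
    for name, values in columns.items():
        for op, f in UNARY.items():
            out[f"{op}({name})"] = [f(v) for v in values]
    for (na, a), (nb, b) in combinations(columns.items(), 2):
        for op, f in BINARY.items():
            out[f"{op}({na},{nb})"] = [f(x, y) for x, y in zip(a, b)]
    # Drop candidates that are undefined for any row (e.g. log of a non-positive value).
    return {k: v for k, v in out.items() if None not in v}


def hybrid_feature_engineering(columns, target):
    """Return generated features that beat the best original feature, best first."""
    baseline = max(pearson_abs(v, target) for v in columns.values())
    scored = {k: pearson_abs(v, target) for k, v in generate_candidates(columns).items()}
    return sorted((k for k, s in scored.items() if s > baseline), key=lambda k: -scored[k])


cols = {"x1": [1, 2, 3, 4, 5], "x2": [2, 1, 4, 3, 5]}
target = [a * b for a, b in zip(cols["x1"], cols["x2"])]  # driven by an interaction
print(hybrid_feature_engineering(cols, target)[0])  # mul(x1,x2)
```

The example target depends on an interaction between `x1` and `x2`, so the product feature outranks both original columns. This is the kind of dataset where feature engineering is expected to help most.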

The net result? Potentially significant boosts in model accuracy (or R² score) for datasets where interactions between features matter a lot.

By chaining these four steps, and optionally running AutoML libraries such as AutoSklearn, H2O, or AutoGluon afterwards, the paper shows:

- A 4–7% performance improvement on many benchmark tasks using a baseline RandomForest model.

- Marginal but consistent improvements when the steps are added in front of existing AutoML frameworks, which typically skip advanced feature engineering.

For those interested in the full details and experimental validation, the original paper is:

Paranjape A., Katta P., Ohlenforst M. (2022). “Automated Data Preprocessing for Machine Learning Based Analyses.” COLLA 2022.