Unmasking the True Cost of Idle GPUs and the Innovative Solution Finally Banishing LLM Cold Starts

In the world of Artificial Intelligence, “GPU utilization” is often touted as the ultimate metric of efficiency. Everyone talks about it, from cloud providers to enterprise data scientists, but scratch the surface and you’ll find it’s one of the most misunderstood concepts in the industry. Why? Because GPU utilization isn’t a single, simple number; it’s a multi-layered story.

The Nuance of GPU Utilization: More Than Meets the Eye

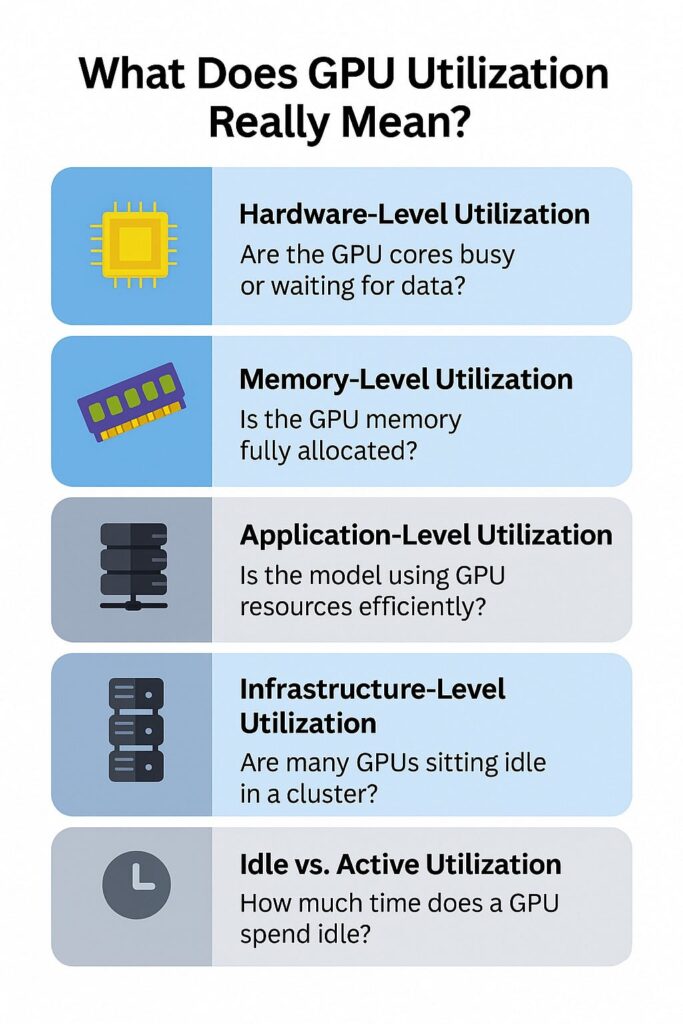

To truly understand efficiency, we need to look beyond a single percentage. Here’s a breakdown of the different “levels” of GPU utilization:

Hardware-Level Utilization: This is what most monitoring tools report: are the GPU’s processing cores busy? While seemingly direct, a busy core isn’t always a productive one. It could be waiting for data, stalled on memory access, or performing non-compute-intensive tasks. “Waiting for data isn’t productivity.”

Memory-Level Utilization: This indicates how much of the GPU’s dedicated memory is currently in use. A GPU with high memory utilization isn’t necessarily performing intensive computations; it might just be holding large models or datasets, even when they’re not actively being processed. “High memory use doesn’t mean high compute; it just means it’s full.”

Application-Level Utilization: This examines how efficiently a specific AI model or application uses the GPU resources it has been allocated. Is the model itself optimized? Are there bottlenecks in the software stack preventing the GPU from reaching its theoretical peak throughput? “Is the model actually efficient, or is it bottlenecked?”

Fleet-Level Utilization: For organizations managing large clusters of GPUs, this metric is crucial. It looks at overall efficiency across the entire pool of hardware; a handful of GPUs working overtime while the rest sit idle points to inefficient resource allocation across the datacenter. “Are most GPUs in your cluster just sitting idle while a few work overtime?”

Time-Based Utilization: Perhaps the most critical but often overlooked aspect. A GPU might hit 100% utilization during its active processing bursts. However, if it spends 80% of its time sitting idle between requests (a common scenario in serverless environments), its effective, productive utilization over time is only 20%. “100% utilization when active means little if your GPU’s idle 80% of the time.”

The bottom line: true efficiency isn’t just about what happens when the GPU is on. It’s about how much real work gets done over time.
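To make the time-based and fleet-level views concrete, here is a minimal sketch that averages utilization over a whole observation window, counting idle time as zero, and then aggregates across a fleet. The `GpuWindow` type, the helper names, the 5% idle threshold, and all sample numbers are hypothetical, chosen only to mirror the “100% when active, idle 80% of the time” case above; real monitoring data would come from your own telemetry.

```python
from dataclasses import dataclass


@dataclass
class GpuWindow:
    """Activity of one GPU over an observation window (illustrative data only)."""
    name: str
    window_s: float     # length of the observation window, in seconds
    active_s: float     # seconds within the window spent processing requests
    active_util: float  # hardware-level utilization while active, 0.0 to 1.0


def time_based_utilization(w: GpuWindow) -> float:
    """Average utilization over the whole window, counting idle time as zero."""
    return w.active_util * (w.active_s / w.window_s)


def fleet_utilization(fleet: list[GpuWindow]) -> float:
    """Fleet-level view: mean time-based utilization across all GPUs."""
    return sum(time_based_utilization(w) for w in fleet) / len(fleet)


def idle_fraction(fleet: list[GpuWindow], threshold: float = 0.05) -> float:
    """Share of GPUs whose time-based utilization falls below `threshold`."""
    idle = sum(1 for w in fleet if time_based_utilization(w) < threshold)
    return idle / len(fleet)


if __name__ == "__main__":
    # 100% busy while active, but active only 720 of 3600 s (idle 80% of the time).
    bursty = GpuWindow("gpu0", 3600.0, 720.0, 1.0)
    fleet = [
        bursty,
        GpuWindow("gpu1", 3600.0, 3600.0, 0.9),  # one GPU working overtime
        GpuWindow("gpu2", 3600.0, 0.0, 0.0),     # two GPUs sitting idle
        GpuWindow("gpu3", 3600.0, 0.0, 0.0),
    ]
    print(f"gpu0 time-based utilization: {time_based_utilization(bursty):.2f}")
    print(f"fleet utilization: {fleet_utilization(fleet):.3f}")
    print(f"idle GPUs: {idle_fraction(fleet):.0%}")
```

A dashboard showing gpu0 at 100% during its bursts would hide that its time-based figure is 0.20, and the fleet average is dragged down further by the two GPUs that never run at all.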

The Elephant in the Room: Cold Starts in Serverless LLM Inference

This multi-faceted understanding of GPU utilization becomes even more critical when we talk about Large Language Model (LLM) inference, especially in serverless architectures. Serverless computing promises resource elasticity and low latency. However, a fundamental challenge, cold-start delay, often undermines these benefits.

Traditional metrics like Time-to-First-Token (TTFT) typically measure latency from the moment a request arrives, assuming that containers, inference engines, and models are already loaded and ready. This overlooks the significant delay incurred when models must be loaded onto GPUs before serving can begin. Optimizing TTFT alone by keeping everything “warm” leads to substantial resource waste, particularly of expensive GPU memory and compute capacity.

To address this, the industry needs a more comprehensive metric. That’s why the concept of Cold-Start Time-to-First-Token (CS-TTFT) has emerged. CS-TTFT augments TTFT by incorporating the latency introduced during the cold-start phase. This includes the time required to initialize runtime environments and load LLM models, which can significantly affect end-to-end latency in elastic serverless settings. CS-TTFT is formally defined as:

CS-TTFT = Container Start Time + Framework Init Time + Model Load Time + First-Request TTFT
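The definition is a plain sum, and it helps to see how much of it conventional TTFT never reports. Below is a minimal sketch under that reading; the class name, method names, and the phase timings in the usage section are made-up values for illustration, not measurements of any system.

```python
from dataclasses import dataclass


@dataclass
class ColdStartBreakdown:
    """Per-phase latencies of one cold request, in seconds."""
    container_start_s: float
    framework_init_s: float
    model_load_s: float
    first_request_ttft_s: float

    def cs_ttft(self) -> float:
        # CS-TTFT = Container Start Time + Framework Init Time
        #           + Model Load Time + First-Request TTFT
        return (self.container_start_s + self.framework_init_s
                + self.model_load_s + self.first_request_ttft_s)

    def warm_ttft(self) -> float:
        # Conventional TTFT assumes the container, engine, and model are
        # already loaded, so only the last term is visible to it.
        return self.first_request_ttft_s

    def cold_start_share(self) -> float:
        # Fraction of CS-TTFT that conventional TTFT never sees.
        return 1.0 - self.warm_ttft() / self.cs_ttft()


if __name__ == "__main__":
    # Made-up phase timings for illustration only, not measurements.
    cold = ColdStartBreakdown(container_start_s=2.0, framework_init_s=6.0,
                              model_load_s=12.0, first_request_ttft_s=0.3)
    print(f"TTFT    = {cold.warm_ttft():.1f} s")
    print(f"CS-TTFT = {cold.cs_ttft():.1f} s")
    print(f"hidden cold-start share = {cold.cold_start_share():.1%}")
```

With these hypothetical numbers, a system could report a sub-second TTFT while a user hitting a cold instance waits over 20 seconds; only CS-TTFT captures that gap.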

InferX: The Solution for True Serverless LLM Efficiency

This is where InferX steps in. Our system is specifically designed to tackle the cold-start problem head-on and unlock true GPU efficiency for serverless LLM inference.

The core innovation of InferX lies in its lightweight snapshotting technology. Instead of initializing an entire container from scratch every time, InferX works as follows:

- 1. Offline Snapshot Creation: Before any requests arrive, InferX proactively prepares container snapshots. These snapshots capture the fully initialized state of the containerized serving engine (such as vLLM) and the loaded LLM (e.g., Llama 3.1, DeepSeek R1). Crucially, they capture both CPU-side and GPU-side state, including the CUDA context, metadata allocated via cudaMalloc, and CUDA host-registered memory. The snapshots are saved to low-cost storage (such as a blob store), allowing valuable GPU resources to be released.

- 2. Resource-Efficient Pre-Warm Phase: To reduce container setup time even further, InferX keeps containers in a “pre-warmed” state. Importantly, no GPU resources, neither memory nor compute, are consumed during this phase. Instead, CPU-side memory holds container metadata, enabling faster snapshot loading when a request eventually arrives.

- 3. Fast On-Demand Restoration: When a request is received, InferX restores the container from the pre-created snapshot. This eliminates the need to reinitialize the KV cache and significantly reduces the combined time of container startup, framework initialization, and model loading.
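The three steps can be read as a small lifecycle in which only one phase holds GPU memory. The sketch below models that lifecycle as a state machine; it is not InferX’s implementation or API. `Phase`, `ModelSlot`, `pre_warm`, `handle_request`, `release`, and the `blob://` URI are all hypothetical names, the actual restore work is reduced to log lines, and the choice to return to the pre-warmed state on release is an assumption of this sketch.

```python
from dataclasses import dataclass, field
from enum import Enum, auto


class Phase(Enum):
    SNAPSHOT_STORED = auto()  # snapshot in low-cost storage; no container, no GPU
    PRE_WARMED = auto()       # container metadata held in CPU memory; still no GPU
    SERVING = auto()          # state restored onto the GPU; requests are served


@dataclass
class ModelSlot:
    """Hypothetical model of one snapshotted LLM deployment (not the InferX API)."""
    model: str
    snapshot_uri: str               # hypothetical blob-store location of the snapshot
    gpu_mem_gb_when_serving: float
    phase: Phase = Phase.SNAPSHOT_STORED
    log: list[str] = field(default_factory=list)

    @property
    def gpu_mem_gb(self) -> float:
        # Only SERVING holds GPU memory; the other two phases hold none.
        return self.gpu_mem_gb_when_serving if self.phase is Phase.SERVING else 0.0

    def pre_warm(self) -> None:
        if self.phase is not Phase.SNAPSHOT_STORED:
            raise RuntimeError(f"cannot pre-warm from {self.phase.name}")
        self.log.append(f"load container metadata for {self.model} into CPU memory")
        self.phase = Phase.PRE_WARMED

    def handle_request(self) -> None:
        if self.phase is Phase.SNAPSHOT_STORED:
            self.pre_warm()
        if self.phase is Phase.PRE_WARMED:
            # Restore CPU-side and GPU-side state (CUDA context, device
            # allocations, host-registered memory) instead of re-initializing.
            self.log.append(f"restore {self.snapshot_uri} onto GPU")
            self.phase = Phase.SERVING
        self.log.append("serve request")

    def release(self) -> None:
        # Assumption: drop GPU state but keep CPU-side metadata, so the
        # next request restores from the pre-warmed state.
        if self.phase is Phase.SERVING:
            self.log.append("release GPU")
            self.phase = Phase.PRE_WARMED


if __name__ == "__main__":
    slot = ModelSlot("llama-3.1-8b", "blob://snapshots/llama-3.1-8b", 16.0)
    slot.pre_warm()
    print(slot.phase.name, slot.gpu_mem_gb)
    slot.handle_request()
    print(slot.phase.name, slot.gpu_mem_gb)
    slot.release()
    print(slot.phase.name, slot.gpu_mem_gb)
```

The point of the structure is that GPU memory is tied to a single phase: everything needed to get back there quickly lives in CPU memory or cheap storage.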

The Impact: Performance That Matters

The results speak for themselves. Our evaluations show that InferX achieves breakthrough performance:

- Dramatic CS-TTFT Reduction: Compared to widely adopted baselines using runC, InferX reduces Cold-Start Time-to-First-Token (CS-TTFT) by up to 95%. While baselines for models like OLMo-1B and DeepSeek-R1-7B often exceed 20 seconds of CS-TTFT, InferX consistently delivers under 2 seconds.

- Superior GPU Utilization: By transforming wasteful idle time into productive cycles, InferX drives over 80% productive GPU utilization. This fundamentally changes the economics of running LLM inference at scale.
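Why does a shorter cold start translate into higher productive utilization? A toy model makes the trade-off visible. If you keep a GPU warm to avoid cold starts, it stays allocated through every idle gap; if you release it between bursts, each burst pays one cold start during which the GPU is allocated but not yet producing tokens. The sketch below compares the two strategies. It is an illustration of the reasoning, not a reproduction of the InferX evaluation: the `Burst` type, function names, and workload numbers are hypothetical, and it assumes the GPU is released the instant a burst ends and ignores CPU and storage costs.

```python
from dataclasses import dataclass


@dataclass
class Burst:
    busy_s: float        # seconds of actual inference work in this burst
    idle_after_s: float  # idle gap before the next burst arrives


def keep_warm_utilization(bursts: list[Burst]) -> float:
    # The GPU stays allocated (and idle) through every gap to avoid cold starts.
    busy = sum(b.busy_s for b in bursts)
    allocated = sum(b.busy_s + b.idle_after_s for b in bursts)
    return busy / allocated


def scale_to_zero_utilization(bursts: list[Burst], cold_start_s: float) -> float:
    # The GPU is released during gaps, but each burst pays one cold start
    # while the GPU is allocated yet not producing tokens.
    busy = sum(b.busy_s for b in bursts)
    allocated = sum(b.busy_s + cold_start_s for b in bursts)
    return busy / allocated


if __name__ == "__main__":
    # Hypothetical workload: 10 bursts of 60 s work, each followed by 240 s idle.
    bursts = [Burst(60.0, 240.0) for _ in range(10)]
    print(f"keep warm:            {keep_warm_utilization(bursts):.0%}")
    print(f"scale to zero, 20 s:  {scale_to_zero_utilization(bursts, 20.0):.0%}")
    print(f"scale to zero, 2 s:   {scale_to_zero_utilization(bursts, 2.0):.0%}")
```

In this toy workload, keeping the GPU warm wastes the 80% idle time, while releasing it becomes attractive only when the cold start is short; a 2-second restore also keeps the latency added to each burst’s first request small.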

This level of efficiency means businesses can finally embrace truly elastic, on-demand LLM serving. For GPU cloud providers, it translates to higher revenue per GPU and more competitive serverless offerings. For API providers, it means instant responsiveness and better scalability. For enterprises, it means significant cost savings and the ability to deploy interactive LLM applications without compromise.

InferX is moving beyond the buzzwords, delivering measurable efficiency for the future of AI.

If you’re a GPU cloud provider, an AI platform, or an enterprise grappling with LLM cold starts and GPU efficiency, we’d love to discuss how InferX can transform your operations.

Email us: Prashanth@inferx.net