This is the fourth article in our series exploring the design and implementation of a complete computer vision perception system. In Part 1, we covered detection; in Part 2, we explored tracking; and in Part 3, we added depth estimation. Today, we’ll implement image segmentation to achieve pixel-level object understanding.

Imagine a self-driving car approaching a busy intersection. It detects and tracks various objects (cars, pedestrians, cyclists) and estimates their distances. But to navigate safely, it needs to know the precise boundaries of each object, distinguish between a person and their shadow, and identify drivable road surfaces down to the pixel level.

This is where image segmentation becomes essential. While detection gives us bounding boxes that roughly locate objects, segmentation provides pixel-precise masks that outline exactly which parts of the image belong to each object, enabling much finer-grained scene understanding.

In this article, we’ll explore how to implement an image segmentation module using Meta AI’s groundbreaking Segment Anything Model (SAM) and integrate it into our perception pipeline. SAM represents a new paradigm in segmentation: a foundation model that can segment virtually anything in an image, even objects it wasn’t specifically trained to recognize.


Segmentation serves several important functions in a perception system:

- Precise object boundaries: Identifying exactly which pixels belong to each object

- Instance separation: Distinguishing between multiple instances of the same object class

- Fine-grained analysis: Enabling detailed analysis of object parts and features

- Scene understanding: Identifying surfaces, regions, and background elements

- Occlusion handling: Better understanding of partial occlusions between objects

These capabilities are fundamental for applications requiring precise environmental understanding, such as autonomous vehicles, robotics, medical imaging, and augmented reality.

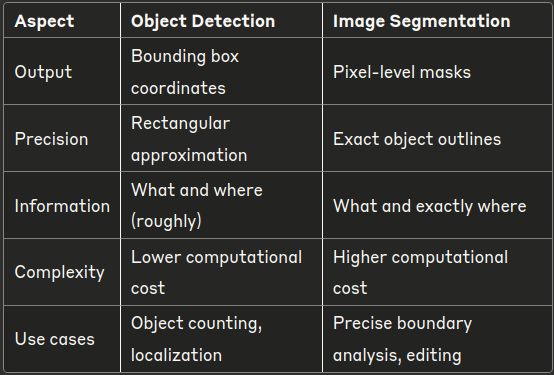

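To understand segmentation, it’s helpful to compare it with detection. Detection outputs a bounding box per object, a rectangle that usually includes some background; segmentation outputs a pixel mask that covers only the object itself.

This comparison highlights why segmentation is so valuable: it provides a much more detailed understanding of objects and their boundaries.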
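To put a number on that difference, here is a small NumPy sketch on synthetic data (the helper names box_from_mask and box_fill_ratio are our own, not part of the module) that measures how much of a tight bounding box a round object actually fills:

```python
import numpy as np


def box_from_mask(mask: np.ndarray) -> tuple:
    """Tight (x1, y1, x2, y2) box around the True pixels (x2, y2 exclusive)."""
    ys, xs = np.nonzero(mask)
    return int(xs.min()), int(ys.min()), int(xs.max()) + 1, int(ys.max()) + 1


def box_fill_ratio(mask: np.ndarray) -> float:
    """Fraction of the tight bounding box that is covered by the object."""
    x1, y1, x2, y2 = box_from_mask(mask)
    return float(mask.sum()) / ((x2 - x1) * (y2 - y1))


# Synthetic object: a filled disk of radius 40 centred in a 100x100 image
yy, xx = np.mgrid[0:100, 0:100]
disk = (xx - 50) ** 2 + (yy - 50) ** 2 <= 40 ** 2

print(box_from_mask(disk))
print(round(box_fill_ratio(disk), 3))
```

For the disk, roughly a quarter of the box is background; for thin or diagonal objects the background share is far larger.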

There are several types of image segmentation, each serving different purposes:

- Semantic segmentation: Assigns a class label to every pixel (e.g., road, car, person)

- Instance segmentation: Distinguishes between different instances of the same class (e.g., car #1, car #2)

- Panoptic segmentation: Combines semantic and instance segmentation

- Prompt-based segmentation: Generates masks based on prompts such as points or boxes
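The difference between these output formats is easiest to see in code. The sketch below (a hypothetical helper on toy data) merges per-instance masks, the format our SAM module returns, into a semantic label map and an instance ID map. Together the two maps are close to a panoptic representation, although full panoptic segmentation also labels amorphous background regions such as road or sky.

```python
import numpy as np


def instances_to_maps(masks, class_ids, shape):
    """Combine per-instance binary masks into semantic and instance maps.

    semantic[y, x] holds the class ID (-1 for unlabeled pixels);
    instance[y, x] holds a 1-based instance index (0 = no instance).
    Later masks overwrite earlier ones where they overlap.
    """
    semantic = np.full(shape, -1, dtype=np.int32)
    instance = np.zeros(shape, dtype=np.int32)
    for idx, (mask, cls) in enumerate(zip(masks, class_ids), start=1):
        semantic[mask] = cls
        instance[mask] = idx
    return semantic, instance


# Two cars (class 2 in this toy example) side by side in a 4x6 image
shape = (4, 6)
car_a = np.zeros(shape, dtype=bool)
car_a[1:3, 0:2] = True
car_b = np.zeros(shape, dtype=bool)
car_b[1:3, 3:5] = True

semantic, instance = instances_to_maps([car_a, car_b], [2, 2], shape)
print(np.unique(semantic).tolist())  # [-1, 2]: the semantic map only knows "car"
print(np.unique(instance).tolist())  # [0, 1, 2]: the instance map separates the cars
```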

For our perception system, we’re particularly interested in instance segmentation, as it aligns well with our detection and tracking modules.

For our implementation, we chose the Segment Anything Model (SAM), introduced by Kirillov et al. from Meta AI Research. SAM represents a major advance in segmentation technology:

- Prompt-based: Can generate masks from various types of prompts (points, boxes, and masks; text prompts are explored in the paper but are not part of the public release)

- Zero-shot performance: Works on objects and scenes it wasn’t specifically trained on

- Scale and diversity: Trained on over 1 billion masks across roughly 11 million diverse images (the SA-1B dataset)

- Interactive speed: Once the image embedding has been computed, the lightweight prompt encoder and mask decoder produce masks in about 50 ms on CPU, according to the SAM paper; the image encoder is the expensive step, and the smaller vit_b variant reduces its cost

- Foundation model: Serves as a base for customization and fine-tuning

SAM is particularly well suited for integration with our perception system because it can use detection results (bounding boxes) as prompts to generate precise masks. This creates a natural workflow: detection → tracking → segmentation.

Following our design principles of modularity and abstraction, we’ll create an abstract base class for segmentation, then implement SAM-based segmentation.

First, let’s define the abstract base class that establishes the interface for any segmentation implementation:

```python
# perception/segmentation/segmenter.py
from abc import ABC, abstractmethod
from typing import Any, Dict, List, Optional, Tuple

import numpy as np


class Segmenter(ABC):
    """
    Abstract base class for image segmentation.

    All segmenter implementations should inherit from this class and
    implement the segment method.
    """

    def __init__(self, config: Optional[Dict] = None):
        """
        Initialize the segmenter with configuration.

        Args:
            config: Configuration dictionary
        """
        self.config = config or {}
        self.is_initialized = False

    @abstractmethod
    def initialize(self) -> None:
        """Initialize the segmenter and load models."""
        pass

    @abstractmethod
    def segment(self, frame: np.ndarray) -> Dict[str, Any]:
        """
        Segment objects in a frame.

        Args:
            frame: Input image frame (BGR format)

        Returns:
            Dictionary containing:
            - masks: List of segmentation masks (each a binary numpy array)
            - classes: List of class IDs for each mask (-1 if the segmenter is class-agnostic)
            - scores: List of confidence scores for each mask
            - class_names: List of human-readable class names
        """
        pass

    def preprocess(self, frame: np.ndarray) -> np.ndarray:
        """
        Preprocess a frame for segmentation.

        Args:
            frame: Input frame

        Returns:
            Preprocessed frame
        """
        # Default preprocessing (can be overridden)
        return frame

    def segment_by_points(self,
                          frame: np.ndarray,
                          points: List[Tuple[int, int]],
                          point_labels: Optional[List[int]] = None) -> Dict[str, Any]:
        """
        Segment based on prompt points.

        Args:
            frame: Input frame
            points: List of (x, y) coordinate tuples to use as prompts
            point_labels: List of labels for the points (1 = foreground, 0 = background).
                If None, all points are treated as foreground.

        Returns:
            Dictionary with segmentation results
        """
        # Default implementation (override in implementations that support this)
        raise NotImplementedError("Point-based segmentation not supported by this segmenter")

    def segment_by_boxes(self,
                         frame: np.ndarray,
                         boxes: List[List[int]]) -> Dict[str, Any]:
        """
        Segment based on bounding boxes.

        Args:
            frame: Input frame
            boxes: List of [x1, y1, x2, y2] bounding boxes to use as prompts

        Returns:
            Dictionary with segmentation results
        """
        # Default implementation (override in implementations that support this)
        raise NotImplementedError("Box-based segmentation not supported by this segmenter")

    def segment_by_masks(self,
                         frame: np.ndarray,
                         masks: List[np.ndarray]) -> Dict[str, Any]:
        """
        Refine existing masks.

        Args:
            frame: Input frame
            masks: List of binary masks to refine

        Returns:
            Dictionary with refined segmentation results
        """
        # Default implementation (override in implementations that support this)
        raise NotImplementedError("Mask-based segmentation not supported by this segmenter")
```

This abstract class defines:

- Core functionality through the `segment()` method that all implementations must provide

- Prompt-based interfaces for different ways to guide segmentation (points, boxes, masks)

- A consistent return format with masks, scores, classes, and class names

- Configuration support for flexible behavior customization

Now, let’s implement the Segment Anything Model (SAM) segmenter:

```python
# perception/segmentation/segment_anything.py
import logging
import os
import subprocess
import sys
import urllib.request
from typing import Any, Dict, List, Optional, Tuple

import cv2
import numpy as np
import torch

from perception.segmentation.segmenter import Segmenter

logger = logging.getLogger(__name__)

# Official SAM checkpoints: model type -> (file name, download URL)
SAM_CHECKPOINTS = {
    'vit_h': ("sam_vit_h_4b8939.pth",
              "https://dl.fbaipublicfiles.com/segment_anything/sam_vit_h_4b8939.pth"),
    'vit_l': ("sam_vit_l_0b3195.pth",
              "https://dl.fbaipublicfiles.com/segment_anything/sam_vit_l_0b3195.pth"),
    'vit_b': ("sam_vit_b_01ec64.pth",
              "https://dl.fbaipublicfiles.com/segment_anything/sam_vit_b_01ec64.pth"),
}

# SAM is class-agnostic, so every mask gets "no class" (-1)
NO_CLASS = -1


class SegmentAnythingModel(Segmenter):
    """
    Image segmentation using Meta's Segment Anything Model (SAM),
    which can segment anything in an image based on prompts.
    """

    def __init__(self, config: Optional[Dict] = None):
        """
        Args:
            config: Configuration with keys:
                - model_type: SAM model type ('vit_h', 'vit_l', 'vit_b')
                - checkpoint: Path to model checkpoint or 'default'
                - device: Inference device ('cuda', 'cpu')
                - points_per_side: Number of points per side for automatic mask generation
                - conf_threshold: Predicted-IoU threshold for automatic masks (0-1)
                - output_mode: 'binary_mask' or 'coco_rle' for automatic mask generation
        """
        super().__init__(config)
        self.config = {
            'model_type': 'vit_b',  # Options: 'vit_h', 'vit_l', 'vit_b'
            'checkpoint': 'default',
            'device': 'cuda' if torch.cuda.is_available() else 'cpu',
            'points_per_side': 32,  # For automatic mask generation
            'conf_threshold': 0.8,
            'output_mode': 'binary_mask',  # or 'coco_rle' (decoded with pycocotools below)
            **(config or {}),
        }
        self.model = None
        self.predictor = None
        self.device = None

    def initialize(self) -> None:
        """Initialize the SAM model."""
        try:
            logger.info(f"Initializing SAM segmenter on {self.config['device']}...")

            # Import SAM, installing it on first use if it is missing
            try:
                from segment_anything import sam_model_registry, SamPredictor
            except ImportError:
                logger.info("Installing segment_anything...")
                subprocess.check_call([sys.executable, "-m", "pip", "install",
                                       "git+https://github.com/facebookresearch/segment-anything.git"])
                from segment_anything import sam_model_registry, SamPredictor

            self.device = torch.device(self.config['device'])

            # Resolve the checkpoint path, downloading the official weights if needed
            model_type = self.config['model_type']
            checkpoint_path = self.config['checkpoint']
            if checkpoint_path == 'default':
                checkpoint_path, url = SAM_CHECKPOINTS[model_type]
                if not os.path.exists(checkpoint_path):
                    logger.info(f"Downloading {checkpoint_path}...")
                    urllib.request.urlretrieve(url, checkpoint_path)

            # Load the SAM model and move it to the target device
            self.model = sam_model_registry[model_type](checkpoint=checkpoint_path)
            self.model.to(device=self.device)

            # Predictor used for point and box prompts
            self.predictor = SamPredictor(self.model)

            logger.info(f"SAM segmenter initialized with model type {model_type}")
            self.is_initialized = True
        except Exception as e:
            logger.error(f"Failed to initialize SAM segmenter: {e}")
            raise

    @staticmethod
    def _empty_result() -> Dict[str, Any]:
        return {'masks': [], 'scores': [], 'classes': [], 'class_names': []}

    def segment(self, frame: np.ndarray) -> Dict[str, Any]:
        """Automatically segment objects in a BGR frame using SAM."""
        if not self.is_initialized:
            self.initialize()

        # SAM expects RGB; OpenCV frames are BGR
        frame_rgb = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)

        try:
            from segment_anything import SamAutomaticMaskGenerator

            # The generator computes its own image embeddings, so
            # self.predictor.set_image() is not needed here
            mask_generator = SamAutomaticMaskGenerator(
                model=self.model,
                points_per_side=self.config['points_per_side'],
                pred_iou_thresh=self.config['conf_threshold'],
                stability_score_thresh=0.95,
                crop_n_layers=1,
                crop_n_points_downscale_factor=2,
                min_mask_region_area=100,  # Remove small disconnected regions/holes (pixels)
                output_mode=self.config['output_mode'],
            )
            masks = mask_generator.generate(frame_rgb)
            return self._process_automatic_masks(masks, frame.shape[:2])
        except Exception as e:
            logger.error(f"Error in automatic segmentation: {e}")
            return self._empty_result()

    def segment_by_points(self,
                          frame: np.ndarray,
                          points: List[Tuple[int, int]],
                          point_labels: Optional[List[int]] = None) -> Dict[str, Any]:
        """Segment a BGR frame from (x, y) prompt points (label 1 = foreground, 0 = background)."""
        if not self.is_initialized:
            self.initialize()

        frame_rgb = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
        # Compute the image embedding (the expensive step)
        self.predictor.set_image(frame_rgb)

        if point_labels is None:
            point_labels = [1] * len(points)

        masks, scores, logits = self.predictor.predict(
            point_coords=np.array(points, dtype=np.float32),
            point_labels=np.array(point_labels, dtype=np.int32),
            multimask_output=True,  # Three candidate masks for ambiguous prompts
        )

        return {
            'masks': list(masks),  # List of HxW boolean arrays
            'scores': scores.tolist(),
            'classes': [NO_CLASS] * len(scores),
            'class_names': ['object'] * len(scores),
            'logits': logits,  # Low-resolution logits for further refinement
        }

    def segment_by_boxes(self,
                         frame: np.ndarray,
                         boxes: List[List[int]]) -> Dict[str, Any]:
        """Segment a BGR frame from [x1, y1, x2, y2] box prompts (one mask per box, in order)."""
        if not self.is_initialized:
            self.initialize()

        frame_rgb = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
        # One embedding per frame, reused for every box prompt
        self.predictor.set_image(frame_rgb)

        results = self._empty_result()
        for box in boxes:
            # SAM expects a length-4 XYXY array in original image coordinates
            input_box = np.array(box, dtype=np.float32)
            masks, scores, _ = self.predictor.predict(box=input_box, multimask_output=True)

            # Keep the highest-scoring candidate mask
            best_idx = int(np.argmax(scores))
            results['masks'].append(masks[best_idx])
            results['scores'].append(float(scores[best_idx]))
            results['classes'].append(NO_CLASS)
            results['class_names'].append('object')

        return results

    def _process_automatic_masks(self, masks: List[Dict],
                                 image_shape: Tuple[int, int]) -> Dict[str, Any]:
        """Convert SamAutomaticMaskGenerator output into the standard result format."""
        if not masks:
            return self._empty_result()

        # Sort masks by area (largest first)
        masks = sorted(masks, key=lambda m: m['area'], reverse=True)

        binary_masks, scores, classes, class_names = [], [], [], []
        for idx, mask_data in enumerate(masks):
            segmentation = mask_data['segmentation']
            if isinstance(segmentation, dict):
                # 'coco_rle' output must be decoded to a binary mask
                from pycocotools import mask as mask_utils
                segmentation = mask_utils.decode(segmentation)

            binary_masks.append(np.asarray(segmentation).astype(bool))
            scores.append(float(mask_data.get('predicted_iou', 1.0)))
            classes.append(NO_CLASS)  # SAM does not classify masks
            class_names.append(f"object_{idx}")

        return {
            'masks': binary_masks,
            'scores': scores,
            'classes': classes,
            'class_names': class_names,
            'areas': [m['area'] for m in masks],
            'stability_scores': [m.get('stability_score', 1.0) for m in masks],
        }
```

This implementation provides several ways to segment images:

- Automatic segmentation via the `segment()` method, which tries to identify all objects

- Point-based segmentation via `segment_by_points()`, which generates masks from click prompts

- Box-based segmentation via `segment_by_boxes()`, which refines bounding boxes into precise masks

The box-based approach is particularly important for our perception system, as it creates a natural pipeline: detection → bounding boxes → segmentation masks.

The segmentation module integrates with our YAML-based configuration system:

```yaml
# config/perception_config.yaml

# Segmentation configuration
segmentation:
  enabled: true
  model_type: "vit_b"           # Options: "vit_b", "vit_l", "vit_h"
  checkpoint: "default"         # Or path to a custom checkpoint
  points_per_side: 32           # For automatic segmentation
  conf_threshold: 0.8           # Confidence threshold for segments

# Segment Anything Model configuration
segment_anything:
  model_type: "vit_b"           # Options: "vit_b", "vit_l", "vit_h"
  checkpoint: "default"         # Or path to checkpoint
  points_per_side: 32           # Denser prompt grid: more segments, slower
  pred_iou_thresh: 0.8          # Minimum predicted IoU for a mask
  stability_score_thresh: 0.95  # Minimum stability score
  device: "cuda"
  optimize: true
```

This configuration approach provides control over:

- Model selection: Choose between different SAM variants (vit_b, vit_l, vit_h)

- Segmentation density: Control how many segments are generated

- Quality thresholds: Set confidence thresholds for acceptable segments

- Performance settings: Configure for different deployment environments
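The YAML file does not show how its keys reach the SegmentAnythingModel constructor. Here is a minimal sketch, assuming the file has already been parsed into a dict (for example with PyYAML’s yaml.safe_load); the helper name and key mapping are hypothetical. Note that stability_score_thresh has no counterpart in the constructor config, because segment() hard-codes 0.95.

```python
from typing import Any, Dict

# Hypothetical mapping: YAML key -> key read by SegmentAnythingModel
_KEY_MAP = {
    "model_type": "model_type",
    "checkpoint": "checkpoint",
    "device": "device",
    "points_per_side": "points_per_side",
    "pred_iou_thresh": "conf_threshold",
}


def sam_config_from_yaml_section(section: Dict[str, Any]) -> Dict[str, Any]:
    """Translate a parsed `segment_anything:` section into a SAM config dict.

    Keys the segmenter does not read (e.g. `optimize`) are dropped instead of
    being passed through silently.
    """
    return {_KEY_MAP[key]: value for key, value in section.items() if key in _KEY_MAP}


section = {
    "model_type": "vit_b", "checkpoint": "default", "points_per_side": 32,
    "pred_iou_thresh": 0.8, "stability_score_thresh": 0.95,
    "device": "cuda", "optimize": True,
}
print(sam_config_from_yaml_section(section))
```

The resulting dict could then be passed straight to SegmentAnythingModel(...).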

One of the most powerful aspects of our segmentation module is how it can enhance detection and tracking results by transforming bounding boxes into precise masks.

Here’s how we match segmentation masks to detected/tracked objects:

```python
from typing import Dict, List, Optional

import numpy as np


def _match_masks_to_objects(self,
                            objects: List[Dict],
                            segmentation_results: Dict) -> List[Optional[np.ndarray]]:
    """
    Match segmentation masks to detection/tracking objects.

    Args:
        objects: List of detection/tracking objects
        segmentation_results: Segmentation results with masks, scores, etc.

    Returns:
        List of masks matched to objects (None for unmatched objects)
    """
    masks = segmentation_results.get('masks', [])
    scores = segmentation_results.get('scores', [1.0] * len(masks))
    # -1 means "no class information" (e.g. class-agnostic segmenters such as SAM)
    class_ids = segmentation_results.get('classes', [-1] * len(masks))

    # Filter masks by confidence threshold
    threshold = self.config['segment_confidence_threshold']
    valid = [
        (mask, score, class_id)
        for mask, score, class_id in zip(masks, scores, class_ids)
        if score >= threshold
    ]
    if not valid:
        return [None] * len(objects)

    valid_masks, _, valid_class_ids = zip(*valid)

    matched_masks = []
    for obj in objects:
        if 'box' not in obj:
            matched_masks.append(None)
            continue

        box = obj['box']
        obj_class_id = obj.get('class_id', -1)

        # Find the mask with the highest box-mask IoU
        best_iou = 0.0
        best_mask = None
        for mask, mask_class_id in zip(valid_masks, valid_class_ids):
            # Compare classes only when both sides actually carry one
            if obj_class_id != -1 and mask_class_id != -1 and obj_class_id != mask_class_id:
                continue

            iou = self._calculate_box_mask_iou(box, mask)
            if iou > best_iou:
                best_iou = iou
                best_mask = mask

        # Accept the best match only above a minimum overlap
        matched_masks.append(best_mask if best_iou > 0.3 else None)

    return matched_masks
```

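This matching step pairs each detected or tracked object with the mask that overlaps its bounding box best (provided the IoU exceeds 0.3), enriching our perception results with precise object boundaries. Matching is greedy per object, so two overlapping objects can end up with the same mask.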
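The matcher relies on a `_calculate_box_mask_iou` helper that isn’t shown. One plausible standalone version (our own sketch, not necessarily the original) treats the box as a filled rectangle, clipped to the image, and computes its IoU with the mask:

```python
import numpy as np


def calculate_box_mask_iou(box, mask: np.ndarray) -> float:
    """IoU between the filled rectangle of box = [x1, y1, x2, y2] and a binary mask."""
    h, w = mask.shape[:2]
    # Clip the box to the image and expand it to whole-pixel bounds
    x1 = int(np.clip(np.floor(box[0]), 0, w))
    y1 = int(np.clip(np.floor(box[1]), 0, h))
    x2 = int(np.clip(np.ceil(box[2]), 0, w))
    y2 = int(np.clip(np.ceil(box[3]), 0, h))

    mask = mask > 0
    box_area = max(0, x2 - x1) * max(0, y2 - y1)
    mask_area = int(mask.sum())
    intersection = int(mask[y1:y2, x1:x2].sum()) if box_area > 0 else 0
    union = box_area + mask_area - intersection
    return intersection / union if union > 0 else 0.0


mask = np.zeros((10, 10), dtype=bool)
mask[2:6, 2:6] = True
print(calculate_box_mask_iou([2, 2, 6, 6], mask))  # 1.0: box and mask coincide
print(calculate_box_mask_iou([0, 0, 4, 4], mask))  # 4 shared pixels / 28 in the union
```

Because the box always includes some background, thin or irregular objects score well below 1.0 even when the match is correct, which is one reason a lenient threshold such as 0.3 makes sense.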

Segmentation can also improve detection results by refining bounding boxes to fit objects more tightly:

```python
import logging
from typing import Dict, List, Optional

import cv2
import numpy as np

logger = logging.getLogger(__name__)


def _refine_boxes_with_masks(self,
                             objects: List[Dict],
                             masks: List[Optional[np.ndarray]]) -> List[Dict]:
    """
    Refine object bounding boxes using segmentation masks.

    Args:
        objects: List of detection/tracking objects
        masks: List of masks matched to the objects

    Returns:
        List of objects with refined boxes
    """
    refined_objects = []

    for obj, mask in zip(objects, masks):
        refined_obj = obj.copy()

        # Skip if there is no mask or no box
        if mask is None or 'box' not in obj:
            refined_objects.append(refined_obj)
            continue

        try:
            # Find the mask's outer contours to derive a tight box
            contours, _ = cv2.findContours(
                mask.astype(np.uint8),
                cv2.RETR_EXTERNAL,
                cv2.CHAIN_APPROX_SIMPLE,
            )

            if contours:
                # Bounding rectangle of all contours together
                all_points = np.concatenate(contours)
                x, y, w, h = cv2.boundingRect(all_points)

                # Update box, center, and dimensions with refined coordinates
                refined_obj['box'] = [float(x), float(y), float(x + w), float(y + h)]
                refined_obj['center'] = [float(x + w / 2), float(y + h / 2)]
                refined_obj['dimensions'] = [float(w), float(h)]
        except Exception as e:
            logger.warning(f"Error refining box with mask: {e}")

        refined_objects.append(refined_obj)

    return refined_objects
```

This refinement tightens each box around the actual object pixels, which helps most for objects with irregular shapes. For partially occluded objects, keep in mind that the mask covers only the visible part, so the refined box describes the visible extent of the object.

Segmentation masks also enable more accurate object analysis, including better distance estimation from depth maps:

```python
import numpy as np


def _calculate_object_distance_with_mask(self, depth_map: np.ndarray, mask: np.ndarray) -> float:
    """
    Calculate the distance to an object using a segmentation mask and depth map.

    Args:
        depth_map: Depth map (same height and width as the mask)
        mask: Segmentation mask for the object

    Returns:
        Estimated distance
    """
    binary_mask = mask > 0

    # Empty mask: fall back to the maximum (normalized) distance
    if not np.any(binary_mask):
        return 1.0

    # Depth values inside the mask
    masked_depth = depth_map[binary_mask]

    # Optionally use only the lower part of the object, where it meets the ground
    if self.config['use_lower_part_for_distance']:
        y_indices = np.where(binary_mask)[0]  # Row indices of mask pixels
        if len(y_indices) > 10:  # Ensure enough pixels
            # Rows holding the bottom ~30% of mask pixels (highest y values)
            threshold_y = int(np.percentile(y_indices, 70))
            lower_mask = np.zeros_like(binary_mask)
            lower_mask[threshold_y:, :] = binary_mask[threshold_y:, :]
            if np.any(lower_mask):
                masked_depth = depth_map[lower_mask]

    # Use the configured percentile (e.g. 50 for the median)
    return float(np.percentile(masked_depth, self.config['distance_percentile']))
```

This approach is typically more robust than using the entire bounding box for distance estimation because it considers only the actual object pixels, ignoring background and occluding objects.
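A small synthetic example illustrates the effect for a thin, diagonal object (think of a leaning pole) whose bounding box is mostly background. The depth values are made up and use the same normalized units as the empty-mask default above:

```python
import numpy as np

# Synthetic 100x100 normalized depth map: background at 0.9, object at 0.3
depth = np.full((100, 100), 0.9, dtype=np.float32)
mask = np.zeros((100, 100), dtype=bool)
for row in range(20, 80):
    mask[row, row - 2:row + 3] = True  # 5-pixel-wide diagonal band
depth[mask] = 0.3

# Depth inside the object's tight bounding box vs. inside the mask itself
ys, xs = np.nonzero(mask)
box_region = depth[ys.min():ys.max() + 1, xs.min():xs.max() + 1]

box_median = float(np.median(box_region))    # dominated by background pixels
mask_median = float(np.median(depth[mask]))  # object pixels only
print(f"box median: {box_median:.2f}, mask median: {mask_median:.2f}")
```

Here the object occupies only 300 of the 3,840 pixels in its box, so the box-based median reports the background depth while the mask-based median reports the object’s.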

Masks also enable more accurate color extraction:

```python
from typing import List

import cv2
import numpy as np


def _extract_color_from_mask(self, frame: np.ndarray, mask: np.ndarray) -> List[int]:
    """
    Extract the dominant color from a masked region of the image.

    Args:
        frame: Input image (BGR)
        mask: Binary mask

    Returns:
        Dominant color as [R, G, B]
    """
    binary_mask = mask > 0

    # Return black for empty masks
    if not np.any(binary_mask):
        return [0, 0, 0]

    # Nx3 float32 array of the BGR pixels inside the mask (as cv2.kmeans requires)
    pixels = np.float32(frame[binary_mask])

    # Use fewer clusters for smaller masks, but at least one
    k = max(1, min(self.config['kmeans_clusters'], len(pixels) // 10 + 1))

    if len(pixels) < k:
        # Not enough pixels: use the mean color
        dominant_color = np.mean(pixels, axis=0).astype(int)
    else:
        # self.kmeans_criteria is assumed to be set elsewhere, e.g.
        # (cv2.TERM_CRITERIA_EPS + cv2.TERM_CRITERIA_MAX_ITER, 10, 1.0)
        _, labels, centers = cv2.kmeans(
            pixels, k, None, self.kmeans_criteria, 10, cv2.KMEANS_RANDOM_CENTERS
        )

        # Color of the largest cluster
        counts = np.bincount(labels.flatten())
        dominant_color = centers[np.argmax(counts)].astype(int)

    return dominant_color[::-1].tolist()  # BGR to RGB
```

This method extracts colors only from the actual object pixels, ignoring surrounding content.

The segmentation module integrates with our perception pipeline, enhancing detection, tracking, and depth results:

```python
# pipeline/perception_pipeline.py (excerpt)
import time
from typing import Optional

import numpy as np


# PerceptionResult is the result container defined in the earlier parts
def process_frame(self, frame: np.ndarray, timestamp: Optional[float] = None) -> "PerceptionResult":
    """Process a single frame through the perception pipeline."""
    result = PerceptionResult()
    result.frame_id = self.frame_id
    result.timestamp = timestamp or time.time()

    # 1. Object detection
    detections = self.detector.detect(frame)
    result.detections = detections

    # 2. Object tracking (if available)
    if self.tracker:
        result.tracks = self.tracker.track(detections, frame, result.timestamp)

    # 3. Depth estimation (if available)
    if self.depth_estimator:
        result.depth_map = self.depth_estimator.estimate_depth(frame)

    # 4. Segmentation (if available)
    if self.segmenter:
        segmentation_result = None

        # Segment tracks if available, otherwise detections
        objects_to_segment = result.tracks if result.tracks else result.detections

        if self.config.get('use_box_prompts', True) and objects_to_segment:
            # Box-prompted segmentation: one mask per object that has a box
            boxes = [obj['box'] for obj in objects_to_segment if 'box' in obj]
            if boxes:
                segmentation_result = self.segmenter.segment_by_boxes(frame, boxes)
        else:
            # Automatic segmentation (no prompts)
            segmentation_result = self.segmenter.segment(frame)

        result.segmentation = segmentation_result

    # 5. Object fusion (if available)
    if self.fusion:
        objects_to_fuse = result.tracks if result.tracks else result.detections
        result.fused_objects = self.fusion.fuse_objects(
            objects_to_fuse,
            result.depth_map,
            frame,
            getattr(result, 'segmentation', None),
        )

    # Increment frame counter
    self.frame_id += 1

    return result
```

The pipeline can use either prompt-based segmentation (using bounding boxes from detection/tracking) or automatic segmentation, depending on configuration.
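In box-prompt mode, segment_by_boxes returns one mask per box in prompt order, and process_frame only sends boxes for objects that have a 'box' key. Under those two assumptions, masks can be paired with objects by position instead of by IoU search. A minimal sketch (the helper name is hypothetical):

```python
from typing import Any, Dict, List, Optional


def attach_box_prompt_masks(objects: List[Dict[str, Any]],
                            seg_result: Optional[Dict[str, Any]]) -> List[Optional[Any]]:
    """Pair each object with its mask from a box-prompted segmentation result.

    Assumes masks are in the same order as the boxes that were sent and that
    only objects with a 'box' key were prompted. Other objects get None.
    """
    masks = list(seg_result.get("masks", [])) if seg_result else []
    remaining = iter(masks)
    return [next(remaining, None) if "box" in obj else None for obj in objects]


objects = [{"box": [0, 0, 10, 10]}, {"track_id": 7}, {"box": [20, 20, 30, 30]}]
print(attach_box_prompt_masks(objects, {"masks": ["mask_0", "mask_1"]}))
# ['mask_0', None, 'mask_1']
```

The IoU-based matcher is still needed for automatic segmentation, where masks arrive in no particular relation to the detections.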

Visualizing segmentation masks is essential for understanding and debugging. Here’s how we visualize segmentation results:

```python
from typing import Dict

import cv2
import numpy as np


def draw_segmentation(self, frame: np.ndarray, segmentation_result: Dict) -> np.ndarray:
    """
    Draw segmentation masks on the frame.

    Args:
        frame: Input frame
        segmentation_result: Segmentation result dictionary

    Returns:
        Frame with segmentation visualization
    """
    if not segmentation_result or 'masks' not in segmentation_result:
        return frame

    masks = segmentation_result['masks']
    scores = segmentation_result.get('scores', [1.0] * len(masks))

    # Draw on a copy of the frame
    vis_frame = frame.copy()
    alpha = self.config['segmentation_alpha']

    for i, (mask, score) in enumerate(zip(masks, scores)):
        # Skip low-confidence masks
        if score < self.config.get('mask_viz_threshold', 0.5):
            continue

        # Color derived from the mask index
        color = self.get_color_by_id(i)

        # Colored layer that is non-zero only inside the mask
        colored_mask = np.zeros_like(frame)
        colored_mask[mask > 0] = color

        # Add alpha * color on top of the frame (original pixels keep weight 1.0)
        cv2.addWeighted(vis_frame, 1.0, colored_mask, alpha, 0, vis_frame)

        # Outline the mask's outer contour
        binary_mask = (mask > 0).astype(np.uint8) * 255
        contours, _ = cv2.findContours(
            binary_mask,
            cv2.RETR_EXTERNAL,
            cv2.CHAIN_APPROX_SIMPLE,
        )
        cv2.drawContours(vis_frame, contours, -1, color, 2)

    return vis_frame
```

This visualization shows each segmented object with a semi-transparent color overlay and an outlined contour, making precise object boundaries easy to see.

Visualization of detected objects with precise segmentation masks, colored by object ID.
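Note that cv2.addWeighted(vis_frame, 1.0, colored_mask, alpha, 0, vis_frame) keeps the original pixels at full weight and adds alpha × color on top, so bright regions can saturate to white. If a true semi-transparent blend is preferred, here is a NumPy-only alternative (our own sketch, not part of the original module) that mixes the color in only where the mask is set:

```python
import numpy as np


def blend_mask(frame: np.ndarray, mask: np.ndarray, color, alpha: float = 0.5) -> np.ndarray:
    """Return a copy of frame with (1 - alpha) * pixel + alpha * color inside the mask."""
    out = frame.copy()
    inside = mask > 0
    blended = ((1.0 - alpha) * frame[inside].astype(np.float32)
               + alpha * np.asarray(color, dtype=np.float32))
    out[inside] = np.clip(np.rint(blended), 0, 255).astype(frame.dtype)
    return out


frame = np.full((2, 2, 3), 200, dtype=np.uint8)  # uniform light-gray BGR image
mask = np.zeros((2, 2), dtype=bool)
mask[0, 0] = True
print(blend_mask(frame, mask, (0, 0, 255), alpha=0.5)[0, 0])  # half gray, half red
```

With the additive version, the red channel of that pixel would be 200 + 0.5 × 255, which saturates at 255.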

Here’s a standalone example of how to use the segmentation module:

```python
import colorsys

import cv2
import numpy as np

from perception.segmentation.segment_anything import SegmentAnythingModel

# Create segmenter
segmenter = SegmentAnythingModel({
    'model_type': 'vit_b',
    'points_per_side': 32,
})

# Load an image (OpenCV returns BGR, or None if the file is missing)
image = cv2.imread('test_image.jpg')
if image is None:
    raise FileNotFoundError('test_image.jpg')

# Automatic segmentation
seg_result = segmenter.segment(image)
print(f"Found {len(seg_result['masks'])} segments")

# Visualize masks
alpha = 0.5  # Weight of the mask overlay
vis_image = image.copy()

for i, mask in enumerate(seg_result['masks']):
    # Cycle through 20 distinct hues
    hue = (i % 20) / 20
    r, g, b = [int(c * 255) for c in colorsys.hsv_to_rgb(hue, 0.8, 1.0)]
    color = (b, g, r)  # OpenCV uses BGR

    # Colored layer inside the mask
    mask_colored = np.zeros_like(image)
    mask_colored[mask > 0] = color

    # Overlay on the image
    cv2.addWeighted(vis_image, 1.0, mask_colored, alpha, 0, vis_image)

    # Draw the outer contour
    contours, _ = cv2.findContours(
        mask.astype(np.uint8),
        cv2.RETR_EXTERNAL,
        cv2.CHAIN_APPROX_SIMPLE,
    )
    cv2.drawContours(vis_image, contours, -1, color, 2)

# Display result
cv2.imshow('Segmentation', vis_image)
cv2.waitKey(0)
cv2.destroyAllWindows()
```

And here’s how to use segmentation with bounding boxes from detection:

```python
# Continues the previous example: reuses image, segmenter, cv2, np, and colorsys
from perception.detection.yolo_detector import YOLODetector

# First, run detection
detector = YOLODetector({'model_size': 's'})
detections = detector.detect(image)

# Extract bounding boxes
boxes = [det['box'] for det in detections]

# Run box-prompted segmentation (masks come back in the same order as the boxes)
seg_result = segmenter.segment_by_boxes(image, boxes)

# Visualize the results
vis_image = image.copy()

for i, (det, mask) in enumerate(zip(detections, seg_result['masks'])):
    # Object info
    x1, y1, x2, y2 = [int(c) for c in det['box']]
    class_name = det['class_name']

    # One hue per detection
    r, g, b = [int(c * 255) for c in colorsys.hsv_to_rgb(i / len(detections), 0.8, 1.0)]
    color = (b, g, r)  # BGR

    # Draw segmentation mask
    mask_colored = np.zeros_like(image)
    mask_colored[mask > 0] = color
    cv2.addWeighted(vis_image, 1.0, mask_colored, 0.5, 0, vis_image)

    # Draw label above the box (kept inside the image)
    cv2.putText(vis_image, class_name, (x1, max(y1 - 10, 10)),
                cv2.FONT_HERSHEY_SIMPLEX, 0.5, color, 2)

cv2.imshow('Box-prompted Segmentation', vis_image)
cv2.waitKey(0)
cv2.destroyAllWindows()
```

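Segmentation is computationally intensive, especially with larger models. Here are optimizations to consider:

- Model selection: SAM offers different model sizes (vit_b, vit_l, vit_h); vit_b is the smallest and fastest, vit_h the largest and most accurate

- Prompt-driven segmentation: Use box prompts instead of automatic segmentation, which prompts SAM with a grid of points_per_side × points_per_side points (1,024 for the default of 32), plus extra points on image crops when crop_n_layers > 0

- Resolution control: Process at reduced resolution to speed up pre- and post-processing; SAM’s image encoder always resizes its input so the longest side is 1024 pixels, so downscaling frames does not reduce the encoder’s cost

- Batch processing: Group multiple prompts for parallel processing (SamPredictor.predict_torch accepts a batch of boxes for a single image embedding)

- Skip frames: Apply segmentation every N frames for video

These options can be configured based on the deployment environment: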

```python
# High-accuracy configuration (desktop/server)
config_high_accuracy = {
    'model_type': 'vit_h',
    'device': 'cuda',
    'points_per_side': 64,
}

# Balanced configuration (laptop)
config_balanced = {
    'model_type': 'vit_l',
    'device': 'cuda',
    'points_per_side': 32,
}

# Resource-constrained configuration (edge device)
config_resource_constrained = {
    'model_type': 'vit_b',
    'device': 'cuda',
    'points_per_side': 16,
    'use_box_prompts': True,  # Read by the pipeline, not by SegmentAnythingModel
}
```
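The skip-frames option from the list above is a pipeline concern rather than a segmenter setting, so none of these dictionaries captures it. Here is a minimal sketch of one way to add it; the FrameSkippingSegmenter wrapper and its every_n parameter are hypothetical, not part of the module above. It calls the wrapped segmentation function only on every N-th frame and reuses the last result in between.

```python
from typing import Any, Callable, Dict, Optional

import numpy as np


class FrameSkippingSegmenter:
    """Run an expensive segmentation call only every `every_n` frames.

    `segment_fn` is any callable that takes a frame and returns a result dict,
    for example SegmentAnythingModel.segment. Between refreshes the most recent
    result is returned unchanged.
    """

    def __init__(self, segment_fn: Callable[[np.ndarray], Dict[str, Any]], every_n: int = 5):
        if every_n < 1:
            raise ValueError("every_n must be >= 1")
        self.segment_fn = segment_fn
        self.every_n = every_n
        self.frame_count = 0
        self.last_result: Optional[Dict[str, Any]] = None

    def __call__(self, frame: np.ndarray) -> Optional[Dict[str, Any]]:
        # Refresh on frames 0, N, 2N, ...; reuse the cached result otherwise
        if self.frame_count % self.every_n == 0:
            self.last_result = self.segment_fn(frame)
        self.frame_count += 1
        return self.last_result
```

For example, FrameSkippingSegmenter(segmenter.segment, every_n=5) would run SAM on one frame in five. Reused masks lag behind moving objects, so this suits slow scenes or a visualization overlay better than masks feeding distance estimation or box refinement.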

Probably the most highly effective elements of our notion system is the mixture of segmentation and depth estimation, which permits 3D scene understanding on the pixel degree.

Here's how we can calculate per-pixel 3D coordinates for a segmented object:

```python
import numpy as np


def calculate_object_3d_points(self,
                               depth_map: np.ndarray,
                               mask: np.ndarray,
                               camera_intrinsics: np.ndarray) -> np.ndarray:
    """
    Calculate 3D points for pixels inside a segmentation mask.

    Args:
        depth_map: Depth map (same height and width as the mask)
        mask: Binary segmentation mask
        camera_intrinsics: 3x3 camera intrinsic matrix

    Returns:
        Nx3 array of 3D points in camera coordinates
    """
    # Ensure the mask is binary
    binary_mask = mask > 0

    # Get pixel coordinates inside the mask
    y_indices, x_indices = np.where(binary_mask)

    # Return an empty (0, 3) array for an empty mask, keeping the Nx3 contract
    if len(y_indices) == 0:
        return np.empty((0, 3))

    # Extract depth values for these pixels (same row-major order as np.where)
    depth_values = depth_map[binary_mask]

    # Camera parameters
    fx = camera_intrinsics[0, 0]
    fy = camera_intrinsics[1, 1]
    cx = camera_intrinsics[0, 2]
    cy = camera_intrinsics[1, 2]

    # Convert from image coordinates to camera coordinates, vectorized over all pixels
    points_3d = np.zeros((len(x_indices), 3))
    points_3d[:, 0] = (x_indices - cx) * depth_values / fx  # X
    points_3d[:, 1] = (y_indices - cy) * depth_values / fy  # Y
    points_3d[:, 2] = depth_values                          # Z

    return points_3d
```

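This creates a dense 3D point cloud for each segmented object, which can be used for applications like object modeling, 3D reconstruction, and precise collision detection. Keep in mind that the points are in metric units only if the depth map is metric. Relative depth from a monocular estimator yields a cloud whose absolute scale is not reliable.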
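Downstream consumers usually need a compact summary rather than every point. The following sketch assumes the Nx3 output of `calculate_object_3d_points`. The helper name `summarize_point_cloud`, the `min_points` cutoff, and the treatment of zero or non-finite depth as invalid are all illustrative assumptions, not part of the original pipeline. The nearest-point range is the kind of quantity a simple collision check could threshold.

```python
import numpy as np
from typing import Any, Dict


def summarize_point_cloud(points: np.ndarray, min_points: int = 10) -> Dict[str, Any]:
    """Summarize an Nx3 object point cloud in camera coordinates.

    Points with non-finite coordinates or non-positive depth are dropped first,
    assuming the depth source marks invalid pixels that way.
    """
    # Accept an empty 1D array too, by reshaping to (N, 3)
    points = np.asarray(points, dtype=float).reshape(-1, 3)
    valid = np.isfinite(points).all(axis=1) & (points[:, 2] > 0)
    pts = points[valid]
    if len(pts) < min_points:
        return {}
    return {
        "centroid": pts.mean(axis=0),   # rough object position
        "min_xyz": pts.min(axis=0),     # axis-aligned bounding box corners
        "max_xyz": pts.max(axis=0),
        # Distance from the camera origin to the closest surface point
        "nearest_range": float(np.linalg.norm(pts, axis=1).min()),
    }
```

For example, `summary.get("nearest_range", float("inf")) < safety_margin`, with a hypothetical `safety_margin` in the depth map's units, could flag an object as too close.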

While segmentation is powerful, it's important to understand its limitations:

- Computational cost: More resource-intensive than detection or tracking

- Ambiguity: Some object boundaries may be inherently ambiguous

- Fine details: May struggle with very fine details such as thin structures

- Temporal consistency: Masks may flicker or change between frames

- Classification: SAM provides masks but not semantic classes

For applications that require both precise segmentation and classification, consider combining SAM with a classifier or using detection results to label the segments.
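One simple way to label segments with detection results is to give each mask the class of the detection box that contains most of its pixels. The sketch below assumes detections are dicts with `'box'` (x1, y1, x2, y2) and `'class_name'` keys, as in the visualization code earlier. The 0.7 overlap threshold and the function names are hypothetical choices.

```python
import numpy as np
from typing import Dict, List, Optional


def mask_box_overlap(mask: np.ndarray, box) -> float:
    """Fraction of the mask's pixels that fall inside an (x1, y1, x2, y2) box."""
    area = mask.sum()
    if area == 0:
        return 0.0
    x1, y1, x2, y2 = [int(round(c)) for c in box]
    inside = mask[max(y1, 0):max(y2, 0), max(x1, 0):max(x2, 0)].sum()
    return float(inside) / float(area)


def label_masks(masks: List[np.ndarray], detections: List[Dict],
                min_overlap: float = 0.7) -> List[Optional[str]]:
    """Assign each mask the class of its best-containing detection box, or None."""
    labels: List[Optional[str]] = []
    for mask in masks:
        binary = mask > 0
        scores = [mask_box_overlap(binary, det["box"]) for det in detections]
        best = int(np.argmax(scores)) if scores else -1
        if best >= 0 and scores[best] >= min_overlap:
            labels.append(detections[best]["class_name"])
        else:
            labels.append(None)  # unlabeled: background or undetected object
    return labels
```

Containment alone favors small masks. A wheel lies entirely inside a car's box, for instance, so a more careful version might also weigh the mask's area relative to the box.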

There are several ways to extend and improve the segmentation module:

- Temporal consistency: Add tracking of segments across frames

- Semantic integration: Combine with semantic segmentation for class labels

- Hierarchical segmentation: Handle part-whole relationships

- Interactivity: Add refinement based on user feedback

- Domain adaptation: Fine-tune for specific environments
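For hierarchical segmentation, SAM tends to return overlapping masks at different granularities (whole object, part, sub-part). These overlaps can be turned into a part-whole tree by checking containment. This is a minimal sketch with several assumptions: the 0.9 containment threshold, and the rule of choosing the smallest containing mask as the parent. The pairwise loop is also quadratic in the number of masks.

```python
import numpy as np
from typing import List, Optional


def build_part_hierarchy(masks: List[np.ndarray], containment: float = 0.9) -> List[Optional[int]]:
    """For each mask, return the index of its parent: the smallest larger mask
    covering at least `containment` of its pixels, or None for top-level masks."""
    binary = [m > 0 for m in masks]
    areas = [int(b.sum()) for b in binary]
    parents: List[Optional[int]] = []
    for i, child in enumerate(binary):
        best, best_area = None, None
        for j, candidate in enumerate(binary):
            # Only strictly larger masks can be parents; skip empty children
            if j == i or areas[i] == 0 or areas[j] <= areas[i]:
                continue
            covered = np.logical_and(child, candidate).sum() / areas[i]
            if covered >= containment and (best_area is None or areas[j] < best_area):
                best, best_area = j, areas[j]
        parents.append(best)
    return parents
```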

Here's a sketch of how temporal segment tracking could be implemented:

```python
from typing import Dict, List

import numpy as np
from scipy.optimize import linear_sum_assignment


class TemporalSegmentTracker:
    """Track segmentation masks across frames."""

    def __init__(self, config=None):
        self.config = config or {}
        self.prev_segments = None
        self.segment_tracks = {}  # ID -> track history (list of mask centroids)
        self.next_id = 0

    def track_segments(self,
                       current_masks: List[np.ndarray],
                       current_frame: np.ndarray) -> List[Dict]:
        """
        Track segmentation masks across frames.

        Args:
            current_masks: Segment masks for the current frame
            current_frame: Current frame

        Returns:
            List of tracked segments with IDs
        """
        if self.prev_segments is None:
            # First frame: nothing to match against, so every mask gets a new ID
            matches = {}
        else:
            # Match current masks to previous masks
            matches = self._match_masks(self.prev_segments, current_masks, current_frame)

        # Create tracked segments, preserving IDs where possible
        tracked_segments = []
        for i, mask in enumerate(current_masks):
            if i in matches:
                # Matched to a previous segment: inherit its ID
                segment_id = self.prev_segments[matches[i]]['id']
            else:
                # New segment
                segment_id = self.next_id
                self.next_id += 1

            tracked_segments.append({'mask': mask, 'id': segment_id})

            # Update track history with the mask centroid
            if segment_id not in self.segment_tracks:
                self.segment_tracks[segment_id] = []
            y_indices, x_indices = np.where(mask)
            if len(x_indices) > 0:
                centroid = (np.mean(x_indices), np.mean(y_indices))
                self.segment_tracks[segment_id].append(centroid)

        # Update previous segments
        self.prev_segments = tracked_segments
        return tracked_segments

    def _match_masks(self,
                     prev_segments: List[Dict],
                     current_masks: List[np.ndarray],
                     current_frame: np.ndarray) -> Dict[int, int]:
        """
        Match current masks to previous masks.

        Args:
            prev_segments: Previous frame's tracked segments
            current_masks: Current frame's masks
            current_frame: Current frame (unused by this IoU-only matcher)

        Returns:
            Dictionary mapping current mask index to previous segment index
        """
        # Calculate IoU matrix (rows: current masks, columns: previous segments)
        iou_matrix = np.zeros((len(current_masks), len(prev_segments)))
        for i, curr_mask in enumerate(current_masks):
            for j, prev_segment in enumerate(prev_segments):
                iou_matrix[i, j] = self._calculate_mask_iou(curr_mask, prev_segment['mask'])

        # Nothing to assign if either frame has no segments
        if iou_matrix.size == 0:
            return {}

        # Use the Hungarian algorithm for optimal assignment
        row_indices, col_indices = linear_sum_assignment(-iou_matrix)  # Negative to maximize IoU

        # Keep only assignments above the IoU threshold
        matches = {}
        for i, j in zip(row_indices, col_indices):
            if iou_matrix[i, j] >= 0.5:  # IoU threshold
                matches[int(i)] = int(j)
        return matches

    def _calculate_mask_iou(self, mask1: np.ndarray, mask2: np.ndarray) -> float:
        """Calculate IoU between two masks."""
        intersection = np.logical_and(mask1, mask2).sum()
        union = np.logical_or(mask1, mask2).sum()
        if union == 0:
            return 0.0
        return float(intersection / union)
```

This tracker maintains consistent IDs for segments across frames, enabling temporal analysis and motion tracking at the mask level.
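As one example of mask-level motion analysis, the centroid histories stored in `segment_tracks` can be turned into image-plane velocities. The sketch assumes one centroid per frame at a fixed, known frame rate. The tracker above skips empty masks, so gaps in a history would distort the estimate. The window size is an arbitrary choice. A least-squares line fit via `np.polyfit` smooths out some of the centroid jitter caused by flickering masks.

```python
import numpy as np
from typing import Dict, List, Tuple


def estimate_segment_velocities(segment_tracks: Dict[int, List[Tuple[float, float]]],
                                window: int = 5, fps: float = 30.0) -> Dict[int, np.ndarray]:
    """Estimate each segment's image-plane velocity (pixels/second) from the
    last `window` centroids, using a least-squares linear fit against time."""
    velocities = {}
    for seg_id, history in segment_tracks.items():
        if len(history) < 2:
            continue  # need at least two samples to estimate motion
        pts = np.asarray(history[-window:], dtype=float)  # shape (k, 2): (x, y)
        t = np.arange(len(pts)) / fps                      # assumes one sample per frame
        # polyfit fits x and y columns at once; row 0 holds the slopes
        coeffs = np.polyfit(t, pts, 1)
        velocities[seg_id] = coeffs[0]
    return velocities
```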

In this article, we've explored the segmentation module of our computer vision perception system. We've seen how segmentation:

- Enhances perception with pixel-precise object boundaries

- Integrates with detection and tracking to refine bounding boxes

- Combines with depth estimation for 3D scene understanding

- Can be implemented efficiently using the Segment Anything Model

Segmentation is the most fine-grained form of 2D scene understanding, providing per-pixel object representations in the image plane. When combined with the other modules in our perception system (detection, tracking, and depth estimation), it enables a comprehensive understanding of the visual world.

The complete perception system we've built throughout this series is a solid foundation for applications in robotics, autonomous vehicles, surveillance, augmented reality, and many other fields that require machines to understand the visual world.