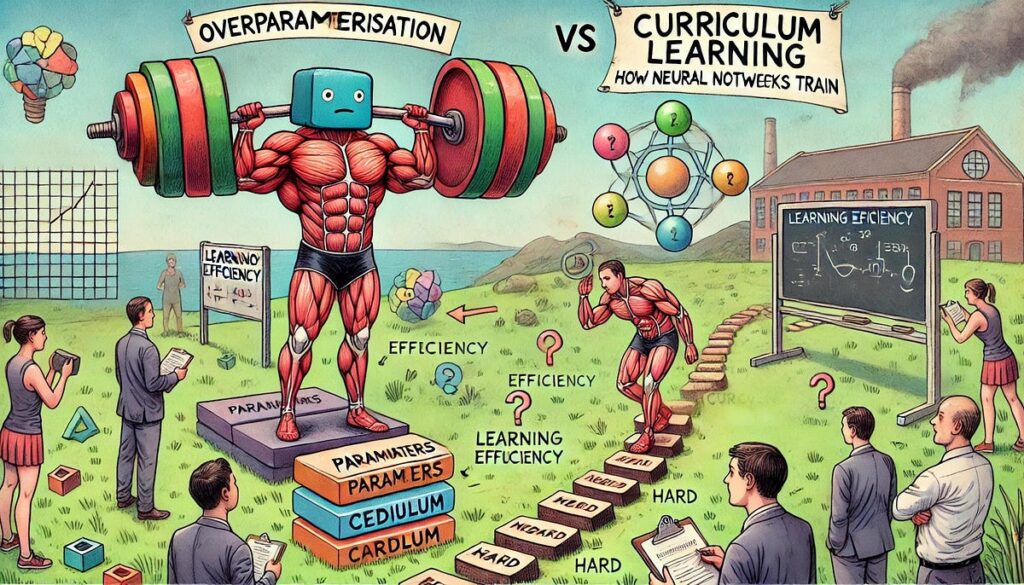

Deep learning continues to transform industries, from healthcare to autonomous driving, by enabling machines to learn complex patterns from vast datasets. However, as we push neural networks to ever higher levels of sophistication, new questions emerge about how best to train them. A groundbreaking study presented at the 2024 International Conference on Machine Learning (ICML) offers some answers by exploring the complex interplay between two key aspects of neural network training: overparameterisation and curriculum learning. This research, carried out by a team of leading researchers, sheds light on how giving neural networks far more parameters than they strictly need might actually help them learn more efficiently. It also challenges long-held beliefs about the usefulness of structured training schedules.

This paper is nothing short of inspiring because it rethinks fundamental aspects of deep learning. The authors explore how an overwhelming number of parameters might not be just a byproduct of model scale, but a crucial component that could improve training efficiency. Drawing on cognitive science and modern machine learning theory, the team offers a fresh theoretical understanding of how the complexity of the model (overparameterisation) and the order in which data is presented to it (curriculum learning) interact to shape learning…
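For readers new to the second ingredient: in its simplest form, a curriculum means ordering training examples from easy to hard before the model sees them. The sketch below is a generic illustration of that idea, not the training procedure used in the ICML study. It assumes each example can be given a numeric difficulty score by a hypothetical, user-supplied `difficulty` function, and `curriculum_batches` is likewise an illustrative name.

```python
from typing import Callable, List, Sequence, TypeVar

T = TypeVar("T")


def curriculum_batches(
    samples: Sequence[T],
    difficulty: Callable[[T], float],
    batch_size: int,
) -> List[List[T]]:
    """Split samples into mini-batches ordered from easiest to hardest.

    `difficulty` is a hypothetical scoring function (an assumption of this
    sketch): lower scores are treated as easier examples.
    """
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    # Sort once so the easiest examples come first (a basic curriculum).
    ordered = sorted(samples, key=difficulty)
    # Chunk the ordered list into consecutive mini-batches.
    return [list(ordered[i:i + batch_size]) for i in range(0, len(ordered), batch_size)]


if __name__ == "__main__":
    # Toy data: (id, noise level); here noisier examples count as harder.
    data = [("a", 0.9), ("b", 0.1), ("c", 0.5), ("d", 0.3)]
    for batch in curriculum_batches(data, difficulty=lambda s: s[1], batch_size=2):
        print(batch)
```

Python's `sorted` is stable, so examples with equal scores keep their original relative order. Replacing the sort with a random shuffle gives the usual non-curriculum baseline that curricula are typically compared against.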