My Journey into the World of Deepfakes and the Fight Against Digital Deception

In late 2023, a video of Rashmika Mandanna went viral, but something about it was off. She appeared to be doing something she’d never publicly done before, and it left fans and media in shock.

But here’s the twist:

It wasn’t her.

It was a deepfake: a chillingly accurate AI-generated video that mimicked her face, expressions, and voice.

That was the moment my curiosity turned into concern.

As a computer science student at KIIT, I had already been exploring artificial intelligence. But after seeing how easily a respected celebrity’s image could be manipulated and weaponized, I knew I had to do something more.

I wasn’t just going to read about deepfakes.

I was going to fight them.

Fast forward to my final-year project, where I decided to work on a research paper titled:

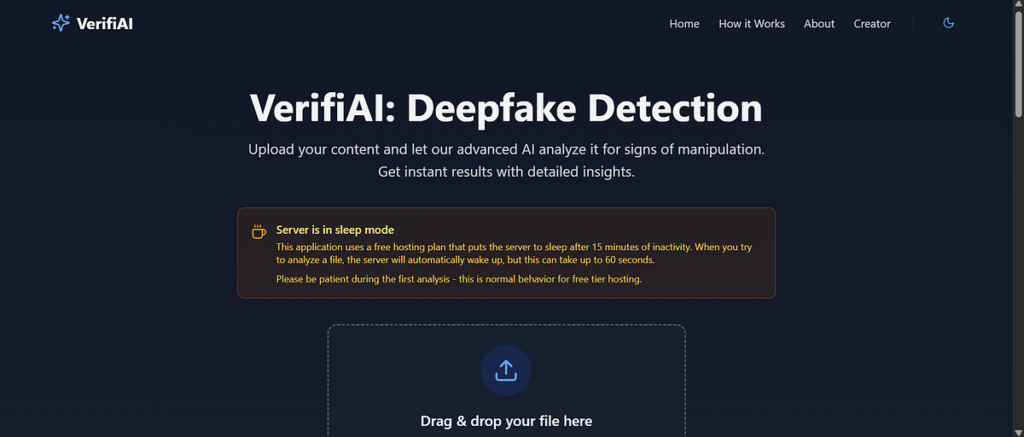

And with that, VERIFIAI was born: a deepfake detection system powered by XceptionNet, trained on large-scale datasets like DFDC and FaceForensics++.

But building it was no cakewalk.

Let me be real.

Working with high-resolution videos means huge amounts of data and long processing times. There were nights I left models training and woke up to find the system had crashed. I fought with corrupted datasets, confusing documentation, and imposter syndrome.

I felt stuck and frustrated, like I was coding in the dark.

What got me through?

The thought of that Rashmika video, and how anyone could be next.

My family. My friends. You. Me.

No one is safe from digital impersonation unless we build safeguards.

After weeks of trying and failing, I found that using transfer learning with XceptionNet (a powerful CNN architecture) gave the best results when carefully fine-tuned on real and fake videos.
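
For the curious, here is roughly what transfer learning with Xception can look like in Keras. This is a minimal sketch, not VERIFIAI’s actual code: the function name `build_detector`, the 299x299 input size, the dropout rate, and the learning rate are all assumptions chosen for illustration. It freezes an ImageNet-pretrained backbone and trains only a small real-vs-fake head on top of it.

```python
import tensorflow as tf
from tensorflow.keras import layers


def build_detector(weights="imagenet", input_shape=(299, 299, 3)):
    """Binary real-vs-fake frame classifier on a frozen Xception backbone.

    Hypothetical helper for illustration; hyperparameters are assumptions.
    """
    base = tf.keras.applications.Xception(
        weights=weights, include_top=False, input_shape=input_shape
    )
    base.trainable = False  # phase 1: only the new head is trained

    inputs = tf.keras.Input(shape=input_shape)
    # Xception expects pixels rescaled from [0, 255] to [-1, 1].
    x = layers.Rescaling(scale=1 / 127.5, offset=-1.0)(inputs)
    x = base(x, training=False)  # keep batch-norm layers in inference mode
    x = layers.GlobalAveragePooling2D()(x)
    x = layers.Dropout(0.3)(x)
    outputs = layers.Dense(1, activation="sigmoid")(x)  # P(frame is fake)

    model = tf.keras.Model(inputs, outputs)
    model.compile(
        optimizer=tf.keras.optimizers.Adam(1e-3),
        loss="binary_crossentropy",
        metrics=["accuracy"],
    )
    return model
```

A common next step, once the head has converged, is to unfreeze the backbone and recompile with a much smaller learning rate so the pretrained features are adjusted gently rather than overwritten.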

I also learned that it wasn’t just about model accuracy. It was about creating a tool that could adapt to newer types of deepfakes, not just the ones in the training set.
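
One simple way to see that gap is to report accuracy separately for deepfake types the model saw during training and types it never saw. The sketch below uses made-up toy labels and a hypothetical helper, `accuracy_by_group`; it is not taken from VERIFIAI, and the group names are only illustrative.

```python
from collections import defaultdict


def accuracy_by_group(labels, predictions, groups):
    """Return {group: accuracy} so weak spots show up per deepfake type."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for y, p, g in zip(labels, predictions, groups):
        total[g] += 1
        correct[g] += int(y == p)
    return {g: correct[g] / total[g] for g in total}


# Toy data for illustration only: 1 = fake, 0 = real.
labels      = [1, 1, 0, 0, 1, 1, 0, 1]
predictions = [1, 1, 0, 0, 0, 1, 0, 0]
groups      = ["seen"] * 4 + ["unseen"] * 4

scores = accuracy_by_group(labels, predictions, groups)
print(scores)  # {'seen': 1.0, 'unseen': 0.5}
```

Overall accuracy on this toy data is 75%, which hides the fact that the model is perfect on familiar fakes and no better than a coin flip on new ones.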

And slowly, VERIFIAI began to detect deepfakes with impressive accuracy.

Deepfakes are no longer science fiction. They’re in our memes, news, and viral videos.

They can destroy reputations.

They can manipulate elections.

They can emotionally damage real people, as in Rashmika’s case.

This isn’t just a tech problem.

It’s a societal threat. And unless we equip ourselves with tools like VERIFIAI, we’ll be left questioning everything we see.

Hey!

You just scrolled through my journey of battling deepfakes, training neural nets, and (almost) losing sleep over model accuracy. If that doesn’t scare you off, we definitely need to connect. This wasn’t just a project. VERIFIAI became a full-blown mission, built with late-night research calls, countless training epochs, endless debugging, and moments where I questioned reality more than XceptionNet ever could.

And I didn’t do it alone.

Together with my amazing friend and co-researcher Prasenjeet Singh, I turned a shared passion into something real. 💻⚡️

🔎 Check out our creation, VERIFIAI:

👉 www.verifiai.tech

Try it. Break it. Love it. Hate it. Either way, tell us. Your feedback = progress.

📄 Read our research paper. It’s got more drama than a Netflix docuseries:

👉 DEEPFAKE DETECTION: A SYSTEMATIC SURVEY

If you’re into AI, ethics, misinformation, or just wanna know what XceptionNet actually does, it’s worth a read.

LinkedIn: linkedin.com/in/debangi-ghatak-d777999

Follow me here on Medium

Let’s turn this scroll into a spark. Collab? Chat? Ideas? Coffee over Zoom? We’re all in. Until then, sayonara. It’s me, Debangi Ghatak, signing off.