The AI world just got its biggest shakeup in months. While everyone was still adjusting to OpenAI’s GPT-4.1 and Google’s Gemini 2.5 Pro, Anthropic quietly dropped Claude 4, and it’s not just an incremental update. This is the kind of release that makes you rethink what AI can actually do.

Released just yesterday, Claude 4 comes in two flavors: Claude Opus 4, the powerhouse designed for complex tasks, and Claude Sonnet 4, the efficient workhorse for everyday use. But here’s what makes this release different: these aren’t just faster, smarter versions of their predecessors. They’re hybrid models that can switch between near-instant responses and deep, methodical thinking depending on what you need.

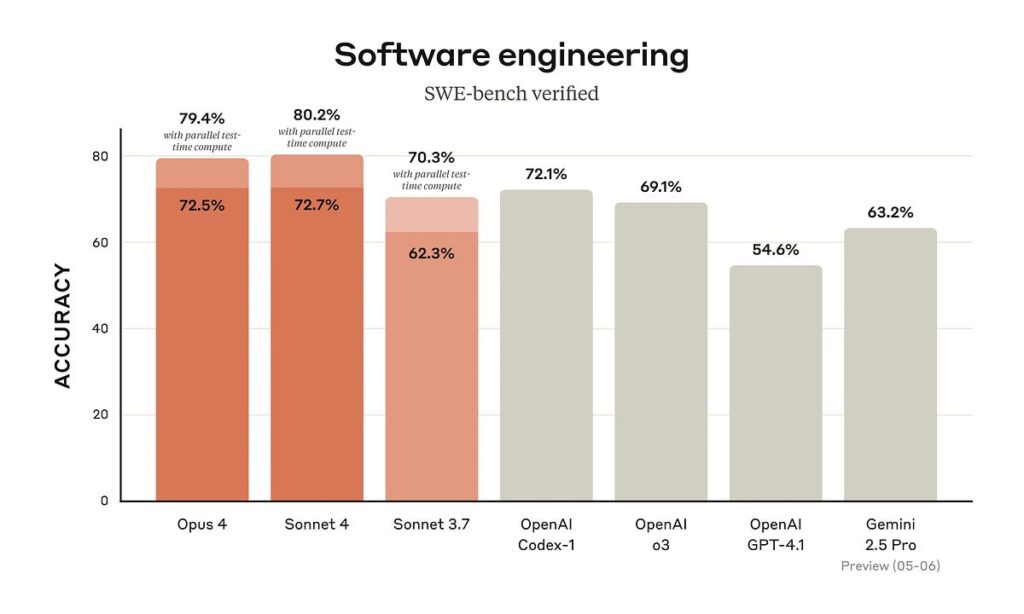

Let’s talk performance, because the benchmarks tell a story that’s hard to ignore. Anthropic bills Claude Opus 4 as the world’s best coding model, and it scored an impressive 72.5% on SWE-bench Verified, the industry standard for real-world software engineering tasks. To put that in perspective, GPT-4.1 managed 54.6% on the same test. That’s not a small gap; at 17.9 percentage points, it’s a chasm.

But it gets better. On Terminal-bench, which tests how well AI can handle complex command-line tasks, Opus 4 hit 43.2%. Meanwhile, Claude Sonnet 4, the supposedly “smaller” model, still scored 72.7% on SWE-bench Verified, edging out Opus 4 and beating GPT-4.1 by more than 18 points.

Recent head-to-head testing revealed something interesting about how these models think differently. Given the same seven prompts, covering everything from creative writing to technical explanations, Claude Sonnet 4 consistently outperformed Gemini 2.5 Pro in emotional intelligence and nuanced communication. While Gemini excelled at technical accuracy, Claude showed better audience adaptation and more empathetic responses.

Here’s where Claude 4 gets genuinely exciting. Both models feature something called “extended thinking with tool use”: they can pause mid-reasoning to search the web, run code, or call other tools while working through complex problems. This isn’t just a fancy feature; it’s a fundamental shift in how AI approaches problem-solving.
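The loop behind “thinking with tool use” can be sketched in a few lines. This is a minimal illustration, not Anthropic’s API: the model is replaced by a hypothetical `scripted_model` stub, web search is replaced by a hypothetical `lookup_score` tool that reads the SWE-bench figures quoted above, and every name here is invented for the example. What it shows is the pattern itself: reason, pause to call a tool, feed the result back into the transcript, and continue until there’s an answer.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# SWE-bench scores quoted in this article, standing in for a web-search result.
SCORES = {"Claude Opus 4": 72.5, "GPT-4.1": 54.6}

def lookup_score(model: str) -> str:
    """Hypothetical tool: returns a benchmark score as text, like a search hit."""
    return str(SCORES.get(model, "unknown"))

TOOLS: dict[str, Callable[[str], str]] = {"lookup_score": lookup_score}

@dataclass
class Step:
    thought: str                  # reasoning produced before acting
    tool: Optional[str] = None    # tool to call next, if any
    arg: str = ""
    answer: Optional[str] = None  # set only when the model is done

def scripted_model(history: list[str]) -> Step:
    """Stand-in for the model: picks the next step from the transcript so far."""
    results = [float(h.split(": ", 1)[1]) for h in history if h.startswith("tool_result: ")]
    if len(results) == 0:
        return Step("I need Opus 4's score first.", tool="lookup_score", arg="Claude Opus 4")
    if len(results) == 1:
        return Step("Now GPT-4.1's score, to compare.", tool="lookup_score", arg="GPT-4.1")
    gap = results[0] - results[1]
    return Step("Both numbers are in; I can answer.", answer=f"{gap:.1f} points")

def agent_loop(question: str, max_steps: int = 5) -> tuple[str, list[str]]:
    history = [f"user: {question}"]
    for _ in range(max_steps):
        step = scripted_model(history)
        history.append(f"thinking: {step.thought}")
        if step.answer is not None:
            return step.answer, history
        # Pause the reasoning, run the tool, and feed its result back in.
        history.append(f"tool_result: {TOOLS[step.tool](step.arg)}")
    return "no answer within step budget", history

answer, transcript = agent_loop("How far ahead is Opus 4 on SWE-bench?")
print(answer)  # 17.9 points
```

The `max_steps` cap is worth keeping in any real version of this loop: without it, a model that keeps requesting tools would never return.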

One researcher at Anthropic demonstrated this by having Claude Opus 4 play Pokémon Red. Where Claude 3.7 Sonnet got stuck in a single city for “dozens of hours” and struggled to recognize non-player characters, Opus 4 showed remarkable improvement in long-term memory and planning. When it determined it needed a specific power to advance, the AI spent two days improving its abilities before resuming play. That’s the kind of sustained, goal-oriented behavior we’ve never seen from AI before.

The implications are staggering. Rakuten reported that Claude Opus 4 worked autonomously on an open-source refactor for seven hours straight while sustaining its performance. Seven hours. That’s not a chatbot anymore; that’s a digital colleague.

One of Claude 4’s most underrated features is its dramatically improved memory. When developers give Claude access to local files, Opus 4 becomes skilled at creating and maintaining “memory files” to store key information. This enables better long-term task awareness and coherence on agent tasks.

Think about what this means in practice. Instead of starting fresh every conversation, Claude can build up institutional knowledge about your projects, coding style, and preferences, as long as it has those files to write to. It’s like having an AI assistant that actually learns and grows with you over time.
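Under the hood, a memory file is just persistent storage the model can read and write through tools. Here’s a minimal sketch, assuming a JSON file on local disk; the `MemoryFile` class and its methods are hypothetical and not part of any Anthropic SDK. A second session that opens the same file picks up what the first one saved.

```python
import json
import tempfile
from pathlib import Path

class MemoryFile:
    """Hypothetical agent memory: key facts persisted to a local JSON file."""

    def __init__(self, path: Path):
        self.path = path

    def load(self) -> dict[str, str]:
        # A new project starts with an empty memory.
        if not self.path.exists():
            return {}
        return json.loads(self.path.read_text(encoding="utf-8"))

    def remember(self, key: str, value: str) -> None:
        # Read-modify-write so facts saved in earlier sessions are kept.
        notes = self.load()
        notes[key] = value
        self.path.write_text(json.dumps(notes, indent=2, sort_keys=True), encoding="utf-8")

    def as_context(self) -> str:
        # Render stored facts as text to prepend to the next session's prompt.
        return "\n".join(f"- {k}: {v}" for k, v in sorted(self.load().items()))

# Usage: two "sessions" sharing one memory file in a temporary directory.
with tempfile.TemporaryDirectory() as d:
    path = Path(d) / "memory.json"
    first_session = MemoryFile(path)
    first_session.remember("code_style", "type hints, 4-space indent")
    first_session.remember("test_runner", "pytest")
    # A fresh object stands in for a later conversation; it still sees the notes.
    context = MemoryFile(path).as_context()

print(context)
```

JSON keeps the sketch simple; a plain Markdown notes file that the model edits directly would serve the same purpose.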

Versus GPT-4.1: While OpenAI’s latest model brings improved instruction following and a massive 1 million token context window, Claude 4 outperforms it on the real-world coding benchmarks above. GPT-4.1 costs $2 per million input tokens and $8 per million output tokens, while Claude keeps the same pricing as its predecessors: $15/$75 for Opus 4 and $3/$15 for Sonnet 4. The performance difference more than justifies the cost for serious applications.
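To make the price gap concrete, here’s a rough calculator using the per-million-token list prices above. The workload (10M input and 2M output tokens a month) is an assumption chosen for illustration, and the sketch ignores any discounts a provider might offer.

```python
# Per-million-token list prices quoted in this article (USD): (input, output).
PRICES = {
    "GPT-4.1": (2.0, 8.0),
    "Claude Sonnet 4": (3.0, 15.0),
    "Claude Opus 4": (15.0, 75.0),
}

def monthly_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Cost in USD for a given token volume at list prices."""
    in_price, out_price = PRICES[model]
    return (input_tokens * in_price + output_tokens * out_price) / 1_000_000

# Hypothetical workload: 10M input tokens and 2M output tokens per month.
costs = {m: monthly_cost(m, 10_000_000, 2_000_000) for m in PRICES}
for model, cost in costs.items():
    print(f"{model:<16} ${cost:,.2f}")
```

At this mix, GPT-4.1 comes to $36, Sonnet 4 to $60, and Opus 4 to $300: roughly 1.7× and 8.3× GPT-4.1, which is the premium the “performance justifies the cost” argument has to clear.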

Versus Gemini 2.5 Pro: Google’s thinking model leads on LMArena by a significant margin and shows strong reasoning capabilities. However, recent comparative testing suggests Claude Sonnet 4 offers better practical communication skills and emotional intelligence. Gemini 2.5 Pro excels in technical accuracy but struggles with audience empathy, while Claude strikes a better balance between capability and value.

The Coding Championship: For developers, the choice is becoming clear. While GPT-4.1 handles routine coding tasks well and offers cost-effective Mini and Nano variants, Claude 3.7 Sonnet already outperformed it in complex debugging and architecture planning. With Claude 4’s improvements, the gap has only widened. Claude is particularly strong in security-sensitive applications, where its reasoning mode helps identify potential vulnerabilities.

Choose Claude Opus 4 if you’re: Building complex AI agents, working on multi-day coding projects, conducting deep research, or needing sustained performance on long-running tasks. Companies like Cursor call it “state-of-the-art for coding,” while Block reports it’s the first model to actually improve code quality during editing and debugging.

Choose Claude Sonnet 4 if you’re: Looking for an instant upgrade from earlier models, needing balanced performance for everyday tasks, or wanting frontier capabilities without the computational overhead. GitHub is already integrating it as the model powering the new coding agent in GitHub Copilot.

Stick with GPT-4.1 if you’re: Mostly doing routine coding tasks where cost matters more than capability, needing the 1 million token context window for specific use cases, or already deeply integrated into the OpenAI ecosystem.

Consider Gemini 2.5 Pro if you’re: Working on tasks that require pure technical accuracy, needing Google’s ecosystem integration, or prioritizing performance on specific math and science benchmarks.

Claude 4 represents something we haven’t seen before: AI that can work independently for hours while staying focused and building on its earlier work. The combination of superior coding performance, extended thinking, and file-based memory persistence makes this feel less like an incremental improvement and more like a categorical shift.

The real test isn’t in benchmarks; it’s whether these models can handle the messy, complex, long-term projects that define real work. Early reports from companies like Cognition, which notes that Opus 4 “excels at solving complex challenges that other models can’t,” suggest we’re finally getting there.

For developers, researchers, and anyone building with AI, Claude 4 isn’t just another option; it’s quickly becoming the new standard. The question isn’t whether you should try it, but how quickly you can integrate it into your workflow before your competition does.

The age of AI assistants that actually assist, rather than just respond, has officially begun. And Claude 4 is leading the charge.