There’s a lot of hype right now about LLMs. Engineers typically discuss and praise the latest revolutionary models like ChatGPT, Llama, Gemini, and Mistral, which certainly deserve a lot of attention for their powerful capabilities. At the same time, developers tend to overlook many other impactful models that have also brought a lot of success to the machine learning industry.

In this article, I would like to talk about one of the most iconic models developed by OpenAI: CLIP. Released in 2021, CLIP can be used in various settings for NLP or computer vision projects and produces strong results, in some cases state-of-the-art, across a wide range of tasks. While many engineers think of CLIP as just an embedding model, which is true, its range of applications is extremely wide.

In this article, we will cover the CLIP model in detail, including its architecture, training process, performance, and applications.

Contrastive learning

Before discussing the CLIP architecture, let us understand the idea behind contrastive learning, which plays an integral role in the CLIP design.

Contrastive learning is a self-supervised learning method whose objective is to teach an embedding model to produce embeddings such that similar samples are brought closer together in the embedding space and dissimilar ones are pushed further apart.

Simply put, in contrastive learning, the model works with pairs of objects. During training, the model itself does not know whether the two objects are actually similar: it computes their embeddings and predicts their distance (similarity). The loss function then compares this prediction with whether the pair is truly similar or not. Basically, there are two cases:

- The initial objects were similar. The loss value leads to a weight update that adjusts the embeddings so that their similarity increases next time.

- The initial objects were dissimilar. In this case, the model updates its weights so that the similarity between this pair of embeddings is lower next time.
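To make the two cases concrete, here is a minimal Python sketch of a classic margin-based pairwise contrastive loss (in the style of Hadsell et al., 2006). This is an illustrative stand-in, not CLIP’s own loss, and for simplicity it treats the two embeddings as directly trainable parameters, whereas in practice gradients flow back into the encoder weights. The function names, the margin of 1.0, and the learning rate are assumptions chosen for illustration.

```python
import math

def euclidean(a, b):
    """Euclidean distance between two embedding vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def contrastive_loss(a, b, similar, margin=1.0):
    """Margin-based pairwise contrastive loss (Hadsell et al. style)."""
    d = euclidean(a, b)
    if similar:
        return d ** 2                   # similar pair: penalize any distance
    return max(0.0, margin - d) ** 2    # dissimilar pair: penalize only if closer than margin

def sgd_step(a, b, similar, margin=1.0, lr=0.1):
    """One gradient step that treats the two embeddings themselves as parameters."""
    d = euclidean(a, b)
    diff = [x - y for x, y in zip(a, b)]      # a - b
    if similar:
        coef = 2.0                             # dL/da = 2 (a - b)
    elif 0.0 < d < margin:
        coef = -2.0 * (margin - d) / d         # dL/da = -2 (margin - d) (a - b) / d
    else:
        coef = 0.0                             # already far enough apart: no gradient
    grad_a = [coef * g for g in diff]
    # The loss depends only on a - b, so dL/db = -dL/da.
    new_a = [x - lr * g for x, g in zip(a, grad_a)]
    new_b = [y + lr * g for y, g in zip(b, grad_a)]
    return new_a, new_b

a, b = [1.0, 0.0], [0.8, 0.6]
print("before:", euclidean(a, b))
print("after, similar pair:", euclidean(*sgd_step(a, b, similar=True)))
print("after, dissimilar pair:", euclidean(*sgd_step(a, b, similar=False)))
```

With these toy vectors, one step shrinks the distance for the similar pair and increases it for the dissimilar pair, mirroring the two cases above. The margin means dissimilar pairs stop contributing once they are already far enough apart.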

Architecture & Training

The CLIP developers collected a huge dataset of 400M (image, text) pairs, in which every image is accompanied by a textual description.

The goal was to construct meaningful embedding representations such that the similarity between them would measure how well a given text description matches an image. For that, the authors took two existing model architectures:

- Text embedding model

- Image embedding model

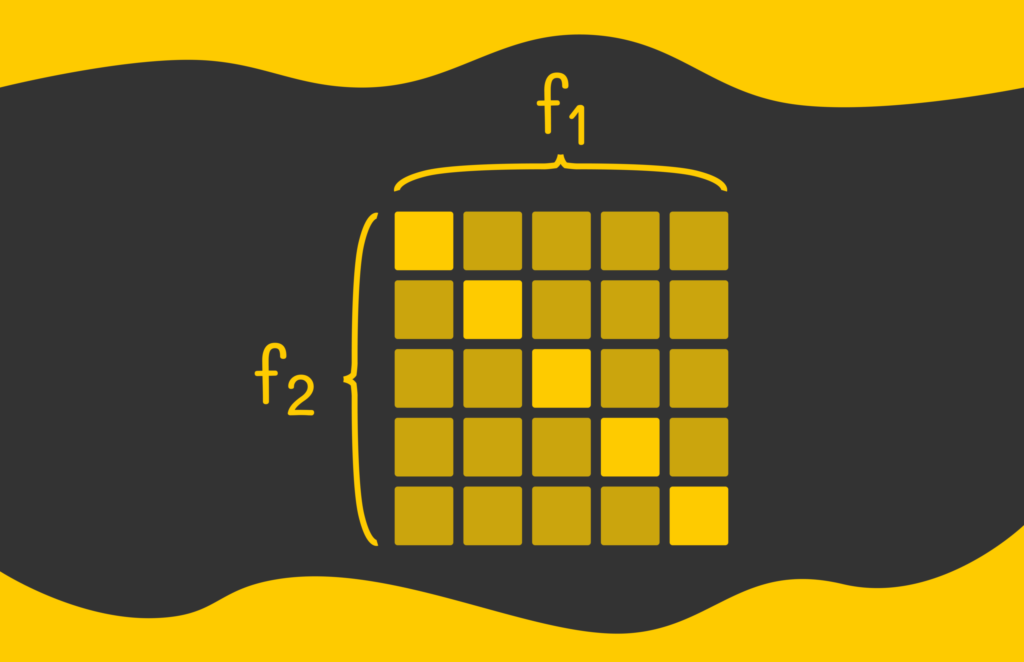

The 400M image-text pairs were split into batches. Every image and every text in a batch was passed through the image or text embedding model, respectively, to produce an embedding. As a result, a batch of n (image, text) pairs yields n image embeddings and n text embeddings.

After that, an n × n matrix of pairwise cosine similarities is constructed between the image and text embeddings.
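A minimal sketch of this step in plain Python, assuming the embeddings are already computed; the toy 3-dimensional vectors below are made-up stand-ins for real CLIP outputs, and the function names are illustrative. L2-normalizing both sets first turns every cosine similarity into a plain dot product, so the whole matrix is effectively one matrix multiplication.

```python
import math

def l2_normalize(v):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def cosine_similarity_matrix(image_embs, text_embs):
    """Return the n x n matrix S where S[i][j] = cos(image_i, text_j)."""
    imgs = [l2_normalize(v) for v in image_embs]
    txts = [l2_normalize(v) for v in text_embs]
    # After L2 normalization, cosine similarity reduces to a dot product.
    return [[sum(a * b for a, b in zip(img, txt)) for txt in txts] for img in imgs]

# Toy batch of n = 3 pairs: image i was originally paired with text i.
image_embs = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
text_embs = [[0.9, 0.1, 0.0], [0.1, 0.8, 0.1], [0.0, 0.2, 0.9]]

for row in cosine_similarity_matrix(image_embs, text_embs):
    print([round(s, 3) for s in row])
```

In this toy batch, the largest value in each row sits on the diagonal, which is exactly the pattern the training objective pushes toward.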

Every element on the main diagonal of the pairwise matrix represents the similarity between an image and the text that were paired together in the batch from the start. Since such a text description corresponds well to its image, the similarities on the main diagonal should be maximized.

On the other hand, the off-diagonal elements were not paired together and come from different pairs. Therefore, their similarities should be minimized.

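The calculated similarities, scaled by a learned temperature parameter, are then passed to a cross-entropy loss that is applied symmetrically: once across each row (matching every image to its text) and once across each column (matching every text to its image). The two losses are averaged and used to update the weights of both embedding models.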
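The symmetric loss can be sketched in plain Python as follows. It takes the similarity matrix as input, treats the diagonal entries as the correct “classes”, and averages the row-wise and column-wise cross-entropy. A fixed temperature of 0.07 is assumed here for simplicity, whereas CLIP learns this value during training; the function names are illustrative, not from any library.

```python
import math

def log_softmax(row):
    """Numerically stable log-softmax of a list of logits."""
    m = max(row)
    log_sum = m + math.log(sum(math.exp(x - m) for x in row))
    return [x - log_sum for x in row]

def clip_style_loss(similarity, temperature=0.07):
    """Symmetric cross-entropy over an n x n image-text similarity matrix.

    Row i holds image i's similarities to all texts; the correct text is i.
    """
    n = len(similarity)
    logits = [[s / temperature for s in row] for row in similarity]
    # Image -> text: classify each row; the target is the diagonal entry.
    loss_images = -sum(log_softmax(logits[i])[i] for i in range(n)) / n
    # Text -> image: the same classification over the columns.
    columns = [list(col) for col in zip(*logits)]
    loss_texts = -sum(log_softmax(columns[j])[j] for j in range(n)) / n
    return (loss_images + loss_texts) / 2

print(clip_style_loss([[0.9, 0.1], [0.2, 0.8]]))  # well-aligned pairs: low loss
print(clip_style_loss([[0.1, 0.9], [0.8, 0.2]]))  # mismatched pairs: high loss
```

When the diagonal dominates each row and column, the loss is low; an all-zero matrix gives exactly log(n), the loss of guessing uniformly among n candidates.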

Details

The main design choices in CLIP are the embedding models used to encode texts and images:

- Text is encoded with a Transformer-based model; the CLIP paper describes a GPT-2-style architecture with masked self-attention.

- For images, encoding can be done either by traditional convolutional networks (ResNet) or by a Vision Transformer (ViT).

Both models were trained from scratch and, in base configurations such as ViT-B/32, produce 512-dimensional embeddings. Given the large dataset size (400M pairs), ViT is usually preferred over ResNet; the authors also found ViT-based CLIP models to be more compute-efficient.
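For both encoders to produce embeddings of the same size, CLIP maps each encoder’s output into a shared embedding space with a linear projection. The sketch below illustrates only that mapping: the feature widths (768 and 512) are hypothetical, the random weights stand in for learned ones, and the encoder outputs are random placeholders.

```python
import random

EMBED_DIM = 512          # shared embedding size discussed above
IMAGE_FEATURE_DIM = 768  # hypothetical output width of the image encoder
TEXT_FEATURE_DIM = 512   # hypothetical output width of the text encoder

def make_projection(in_dim, out_dim, seed):
    """Random (out_dim x in_dim) matrix; in CLIP these weights are learned."""
    rng = random.Random(seed)
    scale = in_dim ** -0.5
    return [[rng.gauss(0.0, scale) for _ in range(in_dim)] for _ in range(out_dim)]

def project(weights, features):
    """Matrix-vector product mapping encoder features into the shared space."""
    return [sum(w * f for w, f in zip(row, features)) for row in weights]

image_proj = make_projection(IMAGE_FEATURE_DIM, EMBED_DIM, seed=0)
text_proj = make_projection(TEXT_FEATURE_DIM, EMBED_DIM, seed=1)

rng = random.Random(42)
image_features = [rng.random() for _ in range(IMAGE_FEATURE_DIM)]  # placeholder encoder output
text_features = [rng.random() for _ in range(TEXT_FEATURE_DIM)]    # placeholder encoder output

image_embedding = project(image_proj, image_features)
text_embedding = project(text_proj, text_features)
print(len(image_embedding), len(text_embedding))  # 512 512
```

Because both vectors now live in the same 512-dimensional space, their cosine similarity is well defined even though the two encoders have different internal widths.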

Advantages

Several strengths of CLIP are worth noting:

- CLIP can be used for various tasks, not just embedding generation (examples are given in the following section).

- Zero-shot CLIP performance is comparable to simple supervised baselines that use a linear classifier on top of ResNet features.

- Computational efficiency: many computations can run in parallel, since the images and texts in a batch are encoded independently and all pairwise similarities come from a single matrix multiplication.

Applications

Embeddings

The most obvious CLIP application is computing text and image embeddings. The embeddings can be used separately for text or image tasks, for example, in similarity search pipelines or RAG systems.

Moreover, texts and images can be used together when an image needs to be associated with its corresponding text description.
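A sketch of such cross-modal search: a text query embedding is compared against a small index of image embeddings, and the images are ranked by cosine similarity. The file names and 3-dimensional vectors are hypothetical placeholders; in a real pipeline they would come from CLIP’s image and text encoders, and a vector database or approximate nearest-neighbor index would replace the linear scan.

```python
import math

def cosine(a, b):
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def search(query_emb, index, top_k=2):
    """Return the top_k (item_id, score) pairs ranked by cosine similarity."""
    scored = [(item_id, cosine(query_emb, emb)) for item_id, emb in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]

# Hypothetical precomputed image embeddings (toy 3-D vectors).
image_index = {
    "beach.jpg": [0.9, 0.1, 0.0],
    "forest.jpg": [0.1, 0.9, 0.2],
    "city.jpg": [0.0, 0.3, 0.9],
}
# Hypothetical embedding of a text query such as "a sunny beach".
query = [0.8, 0.2, 0.1]
print(search(query, image_index))
```

The same function works in the other direction (an image query against an index of text embeddings), since both modalities share one embedding space.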

Image classification

Apart from generating image and text embeddings, one of CLIP’s strongest features is its ability to solve other tasks in a zero-shot manner.

For example, consider an image classification task. If we are given an image of an animal and need to identify its class from a list of animals, we can embed the name of every animal. Then, by finding the text embedding most similar to the image embedding, we can directly identify the animal class.

For this recognition method, the CLIP authors found that it is better to embed each text (class name) using the following prompt structure: “a photo of a {label}”. For other task types, the best prompt may differ.
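The zero-shot procedure can be sketched as follows, assuming a text encoder is available as a function. Here `toy_encode_text` is a hypothetical lookup table of made-up embeddings standing in for CLIP’s text encoder, and the default template follows the “a photo of …” prompt pattern described above.

```python
import math

def cosine(a, b):
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def zero_shot_classify(image_emb, class_names, encode_text, template="a photo of a {}"):
    """Pick the class whose prompt embedding is most similar to the image embedding."""
    prompts = [template.format(name) for name in class_names]
    scores = [cosine(image_emb, encode_text(p)) for p in prompts]
    best = max(range(len(class_names)), key=lambda i: scores[i])
    return class_names[best], scores

# Hypothetical stand-in for CLIP's text encoder: made-up 3-D embeddings.
TOY_TEXT_EMBEDDINGS = {
    "a photo of a cat": [0.9, 0.1, 0.0],
    "a photo of a dog": [0.1, 0.9, 0.1],
    "a photo of a bird": [0.0, 0.2, 0.9],
}

def toy_encode_text(prompt):
    return TOY_TEXT_EMBEDDINGS[prompt]

image_emb = [0.8, 0.2, 0.1]  # hypothetical embedding of a cat photo
label, scores = zero_shot_classify(image_emb, ["cat", "dog", "bird"], toy_encode_text)
print(label)  # cat
```

Because each class needs only one text embedding, the prompts can be encoded once and reused for every image; adding a new class means encoding one more prompt, with no retraining.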

OCR

OCR stands for optical character recognition and simply means recognizing text in images. OCR tasks are usually solved by specially trained supervised models. Nevertheless, CLIP’s impressive capabilities also allow it to recognize text in images in a zero-shot way.

If we have a list of all possible texts that can appear in the image, then, just as in the previous case, we can encode all possible options and choose the most similar pair. However, in this case, the number of possible words or texts is usually much larger than the typical number of labels in an image classification task. Encoding all of them would be very slow and inefficient. That is why CLIP is rarely used for OCR tasks with long text sequences.

In terms of OCR, CLIP works much better for short words, and even better for symbol recognition. For example, it is easy to set up a digit recognition task with CLIP since there are only 10 classes (each class represents a digit between 0 and 9).
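The following sketch quantifies the gap between the two OCR scenarios: digit recognition needs only 10 candidate prompts, while the number of possible lowercase “words” grows exponentially with their length. The prompt template and the 26-letter alphabet are hypothetical choices for illustration.

```python
import string

def digit_prompts(template='a photo of the number: "{}".'):
    """One text prompt per digit class; the template is a hypothetical choice."""
    return [template.format(d) for d in string.digits]

def num_word_candidates(max_len, alphabet_size=26):
    """Count all strings of length 1..max_len over an alphabet of the given size."""
    return sum(alphabet_size ** k for k in range(1, max_len + 1))

print(len(digit_prompts()))    # 10
print(num_word_candidates(5))  # 12356630
```

Even words of up to five lowercase letters already require over twelve million text embeddings, which is why exhaustive candidate encoding only makes sense for small label sets such as digits.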

One interesting observation is that zero-shot CLIP achieves only 88% accuracy on the well-known MNIST handwritten digit recognition task, while other simple models easily reach 99% accuracy. It is important to keep in mind that even though CLIP has impressive zero-shot capabilities, there can still be very specific image types that are poorly represented in its training data.

Here are some important notes:

- CLIP is not good at some abstract tasks, such as counting objects in a photo or estimating how close two objects are to each other in the image.

- CLIP’s zero-shot performance on standard computer vision tasks is comparable to that of older supervised models; for example, on ImageNet, zero-shot CLIP matches the accuracy of the original ResNet-50. However, the authors estimate that zero-shot CLIP would need roughly a 1000x increase in compute to reach state-of-the-art performance, which is infeasible with current hardware.

Conclusion

In this article, we have studied the architectural principles of CLIP. Trained on 400M (image, text) pairs, CLIP achieves strong, sometimes state-of-the-art, results on many tasks. While CLIP often fails on some abstract downstream tasks, it still performs many other standard computer vision tasks well using zero-shot techniques.

Resources

All images, unless otherwise noted, are by the author.