It was 3 a.m., and I was elbow-deep in dough when the fire started. A faulty oven sensor had sparked, and smoke billowed into the kitchen. Before I could react, a camera mounted near the ceiling (a “smart safety system” I’d installed the week before) blared an alarm, pinpointed the fire’s location, and shut off the gas line automatically. Firefighters later told me the AI had saved the building.

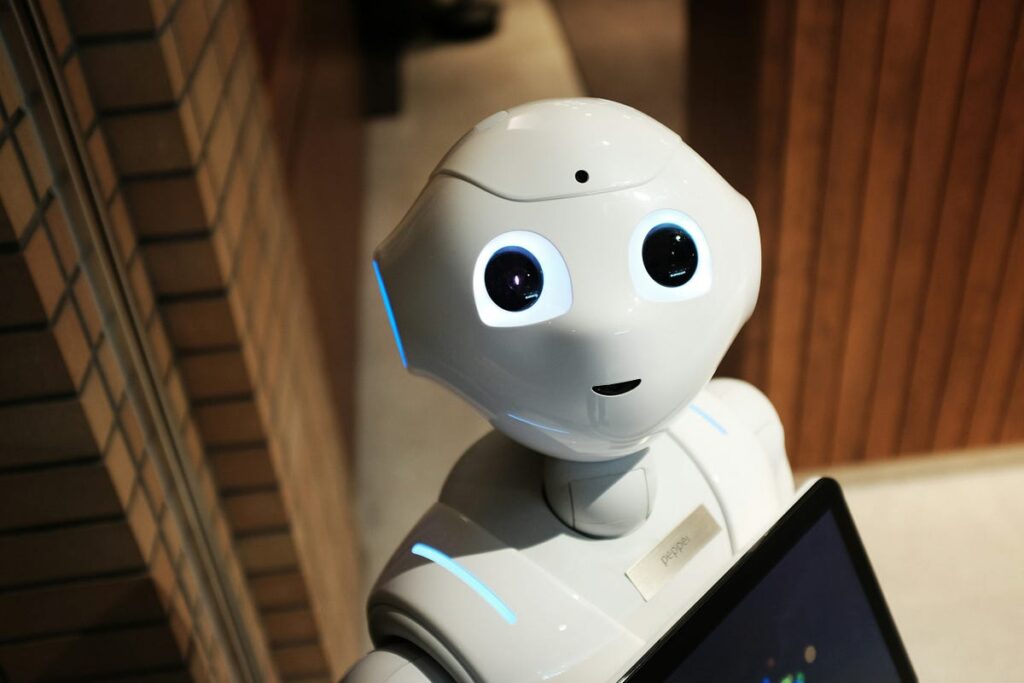

That camera didn’t just “detect smoke.” It understood context: flames vs. steam, a real threat vs. a false alarm. That is computer vision (CV): not just cameras and code, but machines learning to interpret the visual world the way humans do. Let’s unpack how we’re teaching silicon brains to see, why it’s harder than it sounds, and what it means for our future.

Close one eye. Hold your thumb up. Now switch eyes. Notice how your thumb “jumps”? Your brain stitches these mismatched 2D inputs into a seamless 3D world. Oh, and it does this while filtering out irrelevant details (like the itch on your nose) and recognizing your mom’s face in a crowd.
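Computers can exploit the same “jump.” The sketch below is not how the brain works. It shows the textbook geometry for an idealized, rectified pair of pinhole cameras, where a point’s horizontal shift between the two views (its disparity `d`) relates to its depth `Z` via `Z = f * B / d`, with `f` the focal length in pixels and `B` the distance between the cameras. The function names and the focal length and baseline values are hypothetical, chosen only for illustration.

```python
# Minimal sketch of depth from stereo disparity, assuming an idealized,
# rectified pinhole stereo pair. FOCAL_PX and BASELINE_M are hypothetical
# illustration values, not measurements of real eyes or cameras.

def disparity_px(depth_m: float, focal_px: float, baseline_m: float) -> float:
    """Horizontal shift (in pixels) of a point between the left and right views."""
    if depth_m <= 0:
        raise ValueError("depth must be positive")
    return focal_px * baseline_m / depth_m


def depth_m(disparity: float, focal_px: float, baseline_m: float) -> float:
    """Invert the relation to recover depth: Z = f * B / d."""
    if disparity <= 0:
        raise ValueError("disparity must be positive (zero means infinitely far)")
    return focal_px * baseline_m / disparity


FOCAL_PX = 800.0    # hypothetical focal length, in pixels
BASELINE_M = 0.065  # hypothetical baseline, roughly the gap between two eyes

if __name__ == "__main__":
    for label, z in [("thumb", 0.5), ("wall", 5.0)]:
        d = disparity_px(z, FOCAL_PX, BASELINE_M)
        recovered = depth_m(d, FOCAL_PX, BASELINE_M)
        print(f"{label}: {z} m away -> shifts {d:.1f} px, recovered depth {recovered:.2f} m")
```

Because disparity is inversely proportional to depth, the thumb at 0.5 m shifts ten times as many pixels as the wall at 5 m. That is why your thumb seems to leap while the background barely moves.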