- Introduction: From Prompts to Systems

- How Context Works: Information Ecosystems & Management

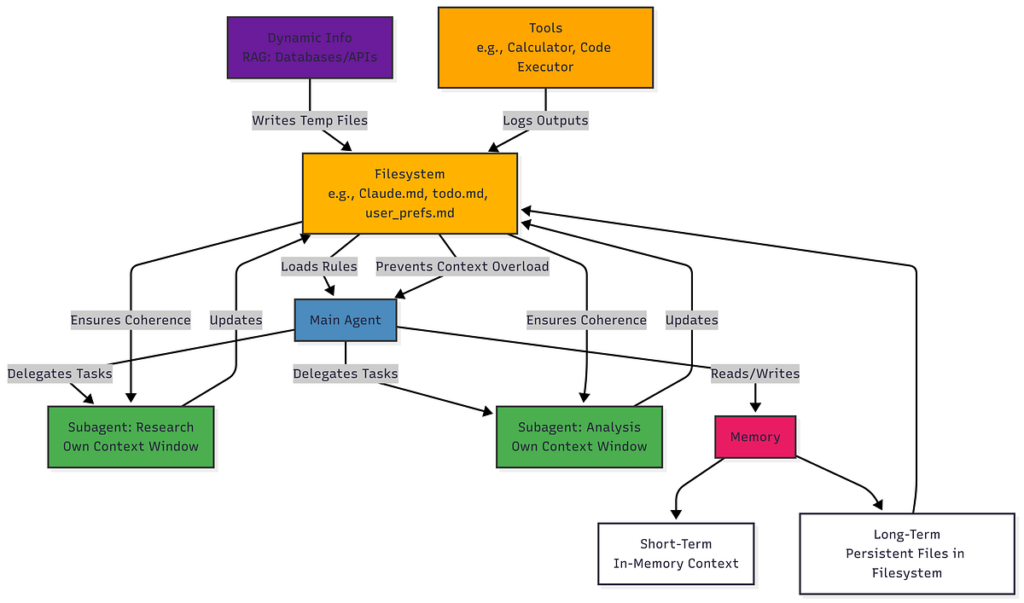

- The 4 Pillars
  - Foundational Rules
  - Dynamic Information
  - Tools & Subagents
  - Memory Systems
- Real-World Benefits: Reliability, Efficiency, Learning
- Conclusion: Building Better AI Worlds

In the early days of LLMs, working with them felt like a brand-new way to communicate with a being we had no idea how to talk to. Getting the most out of the token-spitting machine meant prompting it the right way.

This turned everyone into a prompt engineer in their own league, squeezing the most out of LLMs in the best way possible, but soon everyone realized we were hitting a wall.

A perfect prompt is like a well-rehearsed dialogue: great for one-shot output, but it falls apart as the context grows. Things start to get foggy and outputs start to dim. The LLM that once seemed to have all the potential suddenly turns into a convoluted mess.

This made us realize that maybe one-shot outputs and prompting were not the way. Maybe talking to AI wasn't enough, and what we needed was a system, an environment, for building effective AI systems.

That's where context engineering shines!

At its heart, context engineering is about creating an information ecosystem for an AI/LLM to operate within.

Think of it this way: basic prompting is like a chef with access to just a knife. The chef can surely cut a lot of things, but a knife alone isn't enough for long, complex tasks.

Context engineering is like giving that chef an entire kitchen, with every utensil, tool, and ingredient appearing exactly when needed.

It's basically juggling and managing the information, the tools, and the memory that the AI can access at any given moment.

This is crucial once you realize that every LLM is bound to a fixed context window, and that the context inside it degrades ("rots") if it isn't actively maintained.
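To make "a fixed context window" concrete, here is a minimal sketch of one common maintenance step: keeping the system prompt and dropping the oldest messages once a token budget is exceeded. It is an illustration under stated assumptions, not how any particular product does it: token counts are approximated by whitespace-separated words (a real system would use the model's own tokenizer), and `fit_to_budget` is a hypothetical helper name.

```python
# Minimal sketch: keep a conversation inside a fixed context budget.
# Assumption: one "token" per whitespace-separated word (real tokenizers differ).


def approx_tokens(text: str) -> int:
    """Very rough token estimate: one token per whitespace-separated word."""
    return len(text.split())


def fit_to_budget(system: str, history: list[str], budget: int) -> list[str]:
    """Return the system prompt plus the most recent messages that fit in `budget`.

    The system prompt is always kept; the oldest messages are dropped first,
    and the kept messages form one contiguous, most-recent window.
    """
    used = approx_tokens(system)
    if used > budget:
        raise ValueError("system prompt alone exceeds the budget")
    kept: list[str] = []
    # Walk backwards so the newest messages are kept preferentially.
    for message in reversed(history):
        cost = approx_tokens(message)
        if used + cost > budget:
            break  # stop at the first message that does not fit
        kept.append(message)
        used += cost
    return [system] + list(reversed(kept))


if __name__ == "__main__":
    history = ["first question about setup", "long answer " * 10, "latest question"]
    print(fit_to_budget("You are a helpful agent.", history, budget=12))
```

Truncation is the bluntest tool here; summarizing old turns or moving them into external memory are common alternatives when older details still matter.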

Context engineering is the art of giving the model exactly what it needs, when it needs it, in the format it understands best.

There may even be cases where you are already using context engineering without knowing it.


Context engineering rests on a few basic pillars. You can't just stuff a prompt into an LLM and call it a day; it's not just text, it's more of an architectural problem. You need to build a system of prompts, information, and tools so that when the agent steps in, it works with all of them in synergy, selecting the right context and triggering the right tools.

The first pillar, foundational rules, acts as the central rulebook in context engineering: a set of foundational instructions and checklists that define the agent's core purpose, its structure, its persona, and its rules of engagement.

It works like a central hub that coordinates all the other tools at our disposal. This offloads clutter from the model's context window and creates a systematic way to keep track of things.

One example is how Claude Code uses CLAUDE.md: a central file of checklists and recurring tasks that is loaded into the context window before anything else starts. It pumps just the right information into the LLM to give it a sense of direction and a skeleton to work from.
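The "load the rules first" idea can be sketched in a few lines. This is not Claude Code's internal mechanism; it is a hypothetical illustration that reads a rules file (the name `RULES.md` is made up) and places it ahead of the user's request. The `{"role": ..., "content": ...}` message shape is an assumption modeled on common chat-style APIs.

```python
# Minimal sketch: load a foundational rules file into context before anything else.
# Assumptions: the file name RULES.md and the role/content message shape are
# illustrative only, not any specific product's format.
import tempfile
from pathlib import Path


def build_messages(rules_path: Path, user_request: str) -> list[dict[str, str]]:
    """Put the project rules (if any) first, then the user's request."""
    rules = rules_path.read_text(encoding="utf-8") if rules_path.exists() else ""
    messages: list[dict[str, str]] = []
    if rules.strip():
        # The rules lead the context so every later step is read in their light.
        messages.append({"role": "system", "content": rules.strip()})
    messages.append({"role": "user", "content": user_request})
    return messages


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        rules_file = Path(tmp) / "RULES.md"
        rules_file.write_text("# Project rules\n- Run tests before committing\n", encoding="utf-8")
        for message in build_messages(rules_file, "Add a login endpoint"):
            print(message["role"], "->", message["content"].splitlines()[0])
```

Keeping the rules in a file rather than retyping them in every prompt is the point: the same skeleton is injected consistently at the start of each session.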

But rules alone aren't enough. Let's talk about keeping things current with dynamic information.

While setting up the right environment is a step in the right direction, we have to realize that LLMs are trained on static, historical data, which leaves them unaware of the present. Context engineering solves this by giving the AI access to the real world.

The most common way to do this is through RAG (retrieval-augmented generation), which lets an agent pull real-time information from documents, databases, or APIs.

This helps us fetch real-time data, such as the latest stock price or the financial history of a company. The information is fetched and dynamically injected into the context, grounding the AI's response in current, factual data.

Once again, this happens without bloating the main context window: only the relevant pieces are pulled in.
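Here is a minimal sketch of the retrieve-then-inject loop. To keep it self-contained it swaps in simple keyword overlap where a real RAG pipeline would typically use embedding similarity search, and the documents are made-up examples rather than real data. Only the top `k` matches reach the prompt, which is what keeps the main context lean.

```python
# Minimal RAG sketch: retrieve the most relevant snippets and inject them into the prompt.
# Assumption: keyword overlap stands in for embedding similarity; documents are fictional.
import re


def tokenize(text: str) -> set[str]:
    """Lowercase word set used for a crude relevance score."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return up to k documents sharing the most words with the query."""
    q = tokenize(query)
    scored = [(len(q & tokenize(doc)), i, doc) for i, doc in enumerate(documents)]
    # Highest overlap first; ties keep the original document order.
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [doc for score, _, doc in scored[:k] if score > 0]


def build_prompt(query: str, documents: list[str], k: int = 2) -> str:
    """Inject only the retrieved snippets, not the whole corpus, into the context."""
    sources = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return (
        "Answer using only these sources:\n"
        f"{sources or '- (no relevant documents found)'}\n\n"
        f"Question: {query}"
    )


if __name__ == "__main__":
    docs = [
        "ExampleCo stock closed at 101.50 today.",
        "ExampleCo revenue grew in the last fiscal year.",
        "The cafeteria menu changes on Mondays.",
    ]
    print(build_prompt("What is the latest ExampleCo stock price?", docs))
```

In practice `docs` would be refreshed from a database or API at query time, which is what gives the model access to the present.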

Now that we've got fresh data flowing, let's equip our agent with the right tools.

To move from talking to doing, an agent needs tools. These could be anything from a simple calculator to a complex search_flights function or a code executor.

We give these tools clear descriptions and metadata that help the model trigger them at the right time.
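The sketch below shows what "descriptions and metadata" can look like in practice: a small registry where each tool carries a name, a description, and a parameter list, plus a renderer that turns the catalogue into text for the model and a dispatcher for the calls it makes. The registry shape, the `tool` decorator, and the stubbed `search_flights` body are hypothetical; real function-calling APIs define their own schemas (often JSON Schema based).

```python
# Minimal sketch of tools described with metadata the model can read.
# Assumptions: the Tool/registry shape is hypothetical; search_flights is a stub.
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class Tool:
    name: str
    description: str
    parameters: dict[str, str]  # parameter name -> short type/description
    func: Callable[..., Any]


REGISTRY: dict[str, Tool] = {}


def tool(name: str, description: str, parameters: dict[str, str]):
    """Decorator that registers a function together with its metadata."""
    def decorator(func: Callable[..., Any]) -> Callable[..., Any]:
        REGISTRY[name] = Tool(name, description, parameters, func)
        return func
    return decorator


@tool("calculator_add", "Add two numbers. Use for any arithmetic sum.", {"a": "number", "b": "number"})
def add(a: float, b: float) -> float:
    return a + b


@tool("search_flights", "Find flights between two airports on a date.",
      {"origin": "IATA code", "destination": "IATA code", "date": "YYYY-MM-DD"})
def search_flights(origin: str, destination: str, date: str) -> list[str]:
    # Hypothetical stub: a real tool would call a flight-search API here.
    return [f"{origin}->{destination} on {date}: no live data in this sketch"]


def describe_tools() -> str:
    """Render the tool catalogue as plain text for the model's context."""
    lines = []
    for t in REGISTRY.values():
        params = ", ".join(f"{k}: {v}" for k, v in t.parameters.items())
        lines.append(f"{t.name}({params}) - {t.description}")
    return "\n".join(lines)


def call_tool(name: str, arguments: dict[str, Any]) -> Any:
    """Dispatch a model-issued call such as name='calculator_add', arguments={'a': 2, 'b': 3}."""
    if name not in REGISTRY:
        raise KeyError(f"unknown tool: {name}")
    return REGISTRY[name].func(**arguments)


if __name__ == "__main__":
    print(describe_tools())
    print(call_tool("calculator_add", {"a": 2, "b": 3}))
```

The description text matters as much as the code: it is the only thing the model sees when deciding whether a tool fits the moment.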

This is where things get messy, though: once the agent's context is filled with hundreds of tools, it gets confused, and both its performance and its overall speed drop. While I was working with ACI.dev, they solved this with something called a Unified MCP server, a smart dispatcher that picks the right tool without cluttering the LLM's context. This made tool calling smarter and more sustainable.
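The general idea of a dispatcher can be illustrated with a toy selector: score every available tool against the request and expose only the best few to the model. To be clear, this is not how ACI.dev's Unified MCP server works internally; it is a hypothetical keyword-overlap stand-in, and the tool names and descriptions are made up for the example.

```python
# Toy tool dispatcher: expose only the few most relevant tools to the model.
# Assumption: keyword overlap is a stand-in for whatever ranking a real dispatcher uses.
import re

TOOLS = {
    "calculator": "evaluate arithmetic such as sums products and percentages",
    "search_flights": "search flights between airports on a travel date",
    "get_weather": "get the weather forecast for a city",
    "send_email": "send an email message to a recipient",
    "run_code": "execute python code and return the output",
}


def words(text: str) -> set[str]:
    """Lowercase word set; underscores split names like search_flights into words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def select_tools(request: str, tools: dict[str, str], limit: int = 2) -> list[str]:
    """Return up to `limit` tool names ranked by overlap with the request."""
    request_words = words(request)
    scored = []
    for name, description in tools.items():
        score = len(request_words & words(f"{name} {description}"))
        if score > 0:
            scored.append((score, name))
    # Best match first; ties broken alphabetically for stable output.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [name for _, name in scored[:limit]]


if __name__ == "__main__":
    chosen = select_tools("Find flights from the airport on my travel date", TOOLS, limit=1)
    # Only these descriptions would be placed in the model's context.
    print({name: TOOLS[name] for name in chosen})
```

The design choice is the same regardless of the ranking method: the full catalogue lives outside the context window, and only a short, relevant slice is shown to the model per step.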

For even more complex tasks, we can break things down with specialized helpers.

Instead of asking one agent/LLM to handle a task, or a bunch of tasks, sequentially, subagents divide the work into specific subtasks: small minions, each working toward its own narrow goal, whose results come together at the end into the joint output that was needed.

This is important because when each subagent has its own separate context window, it performs much better, with no subagent's context influencing another's.

Agents with a single, focused environment produce more targeted results; this prevents context pollution and enables much more complex, multi-step workflows.
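A minimal sketch of the isolation idea: each subagent gets its own fresh context list containing only its goal, and only a compact result flows back to the orchestrator. The model call is replaced by a placeholder string, and the subtask split is passed in by hand; in a real system an orchestrating LLM would usually decide the split. `SubAgent` and `orchestrate` are hypothetical names.

```python
# Minimal sketch: subagents with isolated contexts, merged by an orchestrator.
# Assumption: the LLM call is a placeholder; subtasks are supplied manually.
from dataclasses import dataclass, field


@dataclass
class SubAgent:
    goal: str
    context: list[str] = field(default_factory=list)  # private to this subagent

    def run(self) -> str:
        # Each subagent starts from a fresh context containing only its own goal.
        self.context.append(f"GOAL: {self.goal}")
        # Placeholder for a model call; a real system would send self.context to an LLM.
        result = f"done: {self.goal}"
        self.context.append(f"RESULT: {result}")
        return result


def orchestrate(task: str, subtasks: list[str]) -> tuple[str, list[SubAgent]]:
    """Run one subagent per subtask and merge only their results."""
    agents = [SubAgent(goal=subtask) for subtask in subtasks]
    results = [agent.run() for agent in agents]
    # The parent sees compact results, not each subagent's full working context.
    summary = f"{task}\n" + "\n".join(f"- {r}" for r in results)
    return summary, agents


if __name__ == "__main__":
    summary, _ = orchestrate("Plan a trip", ["find flights", "book hotel"])
    print(summary)
```

Because no subagent ever sees another's context list, a long detour in one subtask cannot pollute the others.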

Once again, we can call back to how Claude Code uses CLAUDE.md as a central way to maintain context across whole environments: while running, this file holds context for all the spawned subagents and makes sure they finish their tasks, using checkboxes and bullet points to maintain coherence.

Finally, to tie it all together, agents need a way to remember.

An effective agent needs memory. Context engineering provides mechanisms for both short-term memory (tracking the current conversation) and long-term memory (storing user preferences or past interactions).
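The two kinds of memory can be sketched side by side: a bounded window of recent turns for short-term memory, and a key-value store of durable facts for long-term memory, both rendered into the context before each model call. `AgentMemory` is a hypothetical class; a real long-term store would persist to a file or database rather than an in-process dict.

```python
# Minimal sketch of short-term vs long-term agent memory.
# Assumption: long-term memory is an in-memory dict here; real systems persist it.
from collections import deque


class AgentMemory:
    def __init__(self, short_term_size: int = 4) -> None:
        # Short-term: only the most recent turns; older ones fall off automatically.
        self.short_term: deque[str] = deque(maxlen=short_term_size)
        # Long-term: durable facts such as user preferences.
        self.long_term: dict[str, str] = {}

    def add_turn(self, role: str, text: str) -> None:
        self.short_term.append(f"{role}: {text}")

    def remember(self, key: str, value: str) -> None:
        self.long_term[key] = value

    def build_context(self) -> str:
        """Combine durable facts and recent turns into one context string."""
        prefs = "\n".join(f"- {k}: {v}" for k, v in sorted(self.long_term.items()))
        turns = "\n".join(self.short_term)
        return f"Known preferences:\n{prefs or '- none'}\n\nRecent conversation:\n{turns}"


if __name__ == "__main__":
    memory = AgentMemory(short_term_size=2)
    memory.remember("language", "Python")
    for role, text in [("user", "hi"), ("assistant", "hello"), ("user", "write a script")]:
        memory.add_turn(role, text)
    print(memory.build_context())
```

The preference survives even after the turn that introduced it has scrolled out of the short-term window, which is exactly the division of labor described above.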

One of the most fascinating techniques I've seen is how some agents use their environment as a form of memory. The Manus agent, for example, often creates a todo.md file for complex tasks and updates it as it progresses.

By constantly rewriting its goals at the end of the context, it keeps the main objective in its “recent attention,” preventing it from getting “lost in the middle” of a long task. It's a smart, low-tech way to manipulate the model's attention and make sure it stays on track.
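Here is a minimal sketch of that recitation trick: after every step, re-render the task list as a markdown checklist and append it as the last item of the context, so the plan always sits in the most recent position. This illustrates the idea described above, not Manus's actual code; `TodoItem`, `render_todo`, and `step_context` are hypothetical names.

```python
# Minimal sketch: recite a todo checklist at the end of the context on every step.
# Assumption: this mirrors the idea described in the text, not any agent's real code.
from dataclasses import dataclass


@dataclass
class TodoItem:
    text: str
    done: bool = False


def render_todo(items: list[TodoItem]) -> str:
    """Render the plan as a markdown checklist, like a todo.md file."""
    lines = ["# todo.md"]
    lines += [f"- [{'x' if item.done else ' '}] {item.text}" for item in items]
    return "\n".join(lines)


def step_context(history: list[str], items: list[TodoItem]) -> list[str]:
    """Context for the next model call: full history, then the freshly rewritten plan."""
    return history + [render_todo(items)]


if __name__ == "__main__":
    plan = [TodoItem("collect sources"), TodoItem("write summary")]
    history = ["user: research topic X"]
    for item in plan:
        item.done = True  # pretend the agent just finished this step
        history.append(f"assistant: finished '{item.text}'")
        print(step_context(history, plan)[-1], end="\n\n")
```

Note that the checklist is rebuilt rather than left where it was first written; that is what keeps the goal near the end of a growing context.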

These mechanisms are enough to outdo both the good and the bad of our good old prompt engineering, and this shift isn't going to stay in your own lab: it has real engineering benefits. By managing the flow of information, we see benefits that weren't there before.

- Increased Reliability: With a clean, relevant context, agents are far less likely to make mistakes or hallucinate. When Claude Code runs a task, it has access to the project's specific error-handling patterns and testing requirements, leading to more robust code.

- Smarter Tool Use: By providing only the necessary tools, or by delegating to subagents, the AI can more accurately pick and use the right tool for the job.

- Better Efficiency: A focused context means fewer tokens are needed, which reduces costs and speeds up response times. This isn't a small saving; it can cut token consumption dramatically.

- Learning from Failure: One of the most effective, if counterintuitive, lessons is to keep failures in the context. When an agent tries something and gets an error, seeing that failed action and the resulting stack trace helps it learn. It implicitly updates its internal model and avoids making the same mistake twice.
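The "keep failures in the context" lesson is easy to sketch: when an action raises, record the traceback as an observation instead of silently discarding it, so the next model call can see what went wrong. `run_with_error_feedback` and the `(name, callable)` action format are hypothetical; `traceback.format_exc` is the standard-library call that captures the current exception as text.

```python
# Minimal sketch: record errors (with stack traces) in the agent's context.
# Assumption: actions are (name, zero-argument callable) pairs, chosen for illustration.
import traceback
from typing import Any, Callable


def run_with_error_feedback(
    actions: list[tuple[str, Callable[[], Any]]], context: list[str]
) -> list[str]:
    """Run each action and append both successes and failures to the context."""
    for name, action in actions:
        context.append(f"ACTION: {name}")
        try:
            result = action()
        except Exception:
            # Keep the failure visible so the model can avoid repeating it.
            context.append("ERROR:\n" + traceback.format_exc())
        else:
            context.append(f"OBSERVATION: {result!r}")
    return context


if __name__ == "__main__":
    ctx = run_with_error_feedback([("divide", lambda: 1 / 0), ("add", lambda: 1 + 1)], [])
    print("\n".join(ctx))
```

The alternative, wiping the error and retrying, hides exactly the evidence the model would need to change course.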

The conversation around AI is maturing. Prompt engineering taught us how to speak to these new minds, and it remains a vital skill. But to build the truly intelligent and autonomous systems we envision for the future, we have to think beyond single commands. We have to become architects of their worlds.

By shifting our focus from isolated prompts toward environments that are ever more capable, reliable, and aligned with our goals, we create better agents.