A comprehensive guide to getting the most out of your Chinese topic models, from preprocessing to interpretation.

With our recent paper on discourse dynamics in European Chinese diaspora media, our team has tapped into an almost unanimous frustration with the quality of topic modelling approaches when applied to Chinese data. In this article, I will introduce you to our novel topic modelling method, KeyNMF, and show how to apply it most effectively to Chinese textual data.

Before diving into practicalities, I would like to give you a brief introduction to topic modelling theory and motivate the developments introduced in our paper.

Topic modelling is a discipline of Natural Language Processing for uncovering latent topical information in textual corpora in an unsupervised manner. This information is then presented to the user in a human-interpretable way (usually 10 keywords for each topic).

There are many ways to formalize this task in mathematical terms, but one rather popular conceptualization of topic discovery is matrix factorization. This is a natural and intuitive way to tackle the problem, and in a minute you will see why. The primary insight behind topic modelling as matrix factorization is the following: words that frequently occur together are likely to belong to the same latent structure. In other words, words whose occurrences are highly correlated are part of the same topic.
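To make the co-occurrence intuition concrete, here is a small sketch with made-up word counts; the words and numbers are purely illustrative. Words that tend to appear in the same documents get positively correlated count columns. Words from different themes get negatively correlated ones. This is the structure a factorization can pick up.

```python
import numpy as np

# Toy word counts (illustrative only): rows are documents, columns are words
words = ["cat", "dog", "stock", "market"]
counts = np.array([
    [2, 1, 0, 0],
    [1, 2, 0, 0],
    [0, 0, 3, 1],
    [0, 0, 1, 2],
])

# Pearson correlation between word columns (rowvar=False: columns are variables)
corr = np.corrcoef(counts, rowvar=False)
print(np.round(corr, 2))
```

Here "cat" and "dog" correlate positively, as do "stock" and "market", while words across the two groups correlate negatively.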

You can discover topics in a corpus by first constructing a bag-of-words (BoW) matrix of documents. A bag-of-words matrix represents documents in the following way: each row corresponds to a document, while each column corresponds to a unique word from the model’s vocabulary. The values in the matrix are the number of times a word occurs in a given document.

This matrix can be decomposed into the product of two matrices. The first is a document-topic matrix, which indicates how important a given topic is for a given document. The second is a topic-term matrix, which indicates how important a word is for a given topic. Each document’s row of word counts is thus approximated as a weighted combination of topics. One method for this decomposition is Non-negative Matrix Factorization (NMF), where we decompose a non-negative matrix into two other strictly non-negative matrices, instead of allowing arbitrary signed values.
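The following sketch factorizes a toy bag-of-words matrix with scikit-learn’s `NMF`. The counts and the choice of two topics are illustrative assumptions. `fit_transform` returns the document-topic matrix, and `components_` holds the topic-term matrix.

```python
import numpy as np
from sklearn.decomposition import NMF

# Toy bag-of-words counts: 4 documents x 4 words (cat, dog, stock, market)
X = np.array([
    [2, 1, 0, 0],
    [1, 2, 0, 0],
    [0, 0, 3, 1],
    [0, 0, 1, 2],
], dtype=float)

nmf = NMF(n_components=2, init="nndsvda", random_state=0, max_iter=1000)
W = nmf.fit_transform(X)  # document-topic matrix, shape (4, 2)
H = nmf.components_       # topic-term matrix, shape (2, 4)

# Both factors are non-negative, and their product approximates X
print(np.round(W @ H, 2))
```

With two topics, one component should load mainly on "cat" and "dog" and the other on "stock" and "market".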

NMF is not the only method one can use for decomposing the bag-of-words matrix. A method of high historical significance, Latent Semantic Analysis (LSA), uses Truncated Singular Value Decomposition (SVD) for this purpose. NMF, however, is often a better choice, as:

- The latent factors it discovers differ in quality from those found by other decomposition methods: NMF typically discovers localized patterns, or parts, in the data, which are easier to interpret.

- Non-negative topic-term and document-topic relations are easier to interpret than signed ones.
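This sketch illustrates the sign difference by fitting both LSA (via scikit-learn’s `TruncatedSVD`) and NMF to the same made-up counts. I added the shared word "today" as an assumption, so that the two themes overlap. Once they overlap, the second LSA component has to mix positive and negative loadings to stay orthogonal to the first, strictly positive one. All NMF loadings, by contrast, stay non-negative.

```python
import numpy as np
from sklearn.decomposition import NMF, TruncatedSVD

# Toy counts (illustrative only): columns are cat, dog, today, stock, market
X = np.array([
    [2, 1, 1, 0, 0],
    [1, 2, 1, 0, 0],
    [0, 0, 1, 3, 1],
    [0, 0, 1, 1, 2],
], dtype=float)

# LSA: truncated SVD of the count matrix; loadings may have either sign
lsa = TruncatedSVD(n_components=2, random_state=0).fit(X)
# NMF: both factors are constrained to be non-negative
nmf = NMF(n_components=2, init="nndsvda", random_state=0, max_iter=1000).fit(X)

print("LSA topic-term loadings:\n", np.round(lsa.components_, 2))
print("NMF topic-term loadings:\n", np.round(nmf.components_, 2))
```

A negative LSA loading says a word counts *against* a component, which is hard to read as "this word belongs to this topic". NMF avoids that by construction.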

Using NMF with just BoW matrices, however attractive and simple it may be, does come with its setbacks:

- NMF typically minimizes the Frobenius norm of the error matrix. This entails an assumption of Gaussianity of the outcome variable, which is clearly false, as we are modelling word counts.

- BoW representations are just word counts. This means that words won’t be interpreted in context, and syntactic information will be ignored.
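The Gaussianity claim can be spelled out as follows. Let $X$ be the bag-of-words matrix ($D$ documents by $V$ words), $W$ the document-topic matrix ($D \times K$) and $H$ the topic-term matrix ($K \times V$). NMF with the Frobenius objective then solves

$$
\min_{W \ge 0,\, H \ge 0} \lVert X - WH \rVert_F^2 = \min_{W \ge 0,\, H \ge 0} \sum_{d=1}^{D} \sum_{v=1}^{V} \left( X_{dv} - (WH)_{dv} \right)^2
$$

Suppose each entry is modelled independently as $X_{dv} \sim \mathcal{N}\left((WH)_{dv}, \sigma^2\right)$. The negative log-likelihood is then

$$
-\log p(X \mid W, H) = \frac{1}{2\sigma^2} \sum_{d=1}^{D} \sum_{v=1}^{V} \left( X_{dv} - (WH)_{dv} \right)^2 + \frac{DV}{2} \log\left(2\pi\sigma^2\right)
$$

The second term does not depend on $W$ or $H$, so minimizing the Frobenius error is equivalent to maximizing a Gaussian likelihood. That is a poor model for small, non-negative, integer-valued counts.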

To account for these limitations, and with the help of modern transformer-based language representations, we can significantly improve NMF for our purposes.

The key intuition behind KeyNMF is that most words in a document are semantically insignificant. We can therefore get an overview of the topical information in the document by highlighting the top N most relevant words. We will select these words using contextual embeddings from sentence-transformer models.

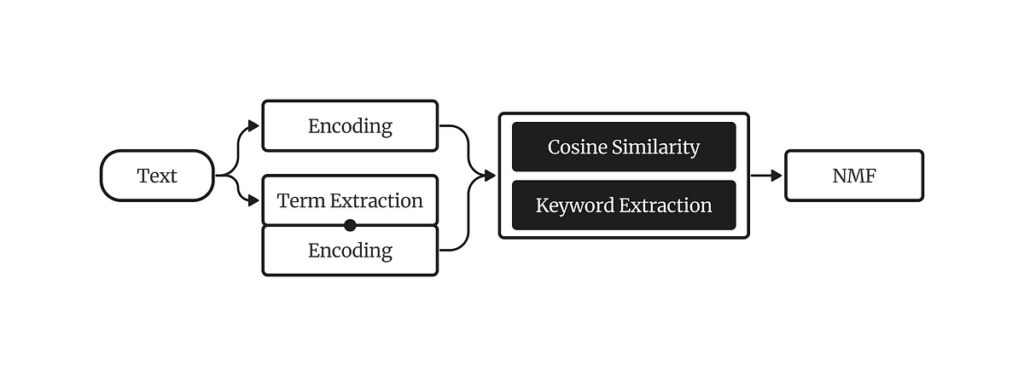

The KeyNMF algorithm consists of the following steps:

- Embed each document using a sentence-transformer, along with all words in the document.

- Calculate the cosine similarities of the word embeddings to the document embeddings.

- For each document, keep the N words with the highest cosine similarity to the document, discarding any with non-positive similarity.

- Arrange the cosine similarities into a keyword matrix, where each row is a document, each column is a keyword, and the values are the cosine similarities of the word to the document.

- Decompose the keyword matrix with NMF.
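Here is a minimal NumPy sketch of these steps, assuming the embeddings have already been computed. Random vectors stand in for sentence-transformer outputs, and a random boolean mask stands in for which words occur in which document, so the numbers themselves are meaningless. The function name `keyword_matrix` is hypothetical, and this is not Turftopic’s actual implementation.

```python
import numpy as np
from sklearn.decomposition import NMF


def keyword_matrix(doc_emb, word_emb, occurs, top_n):
    """Build a document-keyword matrix (hypothetical helper).

    doc_emb: (n_docs, dim) document embeddings
    word_emb: (n_words, dim) embeddings of every vocabulary word
    occurs: boolean (n_docs, n_words), True where a word appears in a document
    """
    # Normalize rows so that dot products become cosine similarities
    doc_emb = doc_emb / np.linalg.norm(doc_emb, axis=1, keepdims=True)
    word_emb = word_emb / np.linalg.norm(word_emb, axis=1, keepdims=True)
    sims = doc_emb @ word_emb.T
    # Words absent from a document cannot be its keywords
    sims = np.where(occurs, sims, 0.0)

    keywords = np.zeros_like(sims)
    for i, row in enumerate(sims):
        top = np.argsort(row)[::-1][:top_n]  # indices of the N most similar words
        top = top[row[top] > 0]              # keep only positive similarities
        keywords[i, top] = row[top]
    return keywords


# Random vectors stand in for sentence-transformer embeddings (assumption)
rng = np.random.default_rng(0)
doc_emb = rng.normal(size=(6, 16))
word_emb = rng.normal(size=(12, 16))
occurs = rng.random((6, 12)) < 0.5

X = keyword_matrix(doc_emb, word_emb, occurs, top_n=3)
# Decompose the keyword matrix with NMF
nmf = NMF(n_components=2, init="nndsvda", random_state=42, max_iter=1000)
doc_topic = nmf.fit_transform(X)
topic_word = nmf.components_
```

Because every entry of `X` is a cosine similarity in [0, 1] rather than a raw count, the matrix NMF sees is continuous and sparse.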

This formulation helps us in several ways:

a) We substantially reduce the model’s vocabulary. This means fewer parameters, which results in a faster and better model fit.

b) We get a continuous distribution, which is a better fit for NMF’s assumptions.

c) We incorporate contextual information into our topic model.

Now that you understand how KeyNMF works, let’s get our hands dirty and apply the model in a practical context.

Preparation and Data

First, let’s install the packages we are going to use in this demonstration:

```bash
pip install "turftopic[jieba]" datasets sentence_transformers topicwizard
```

Then let’s get some openly available data. I chose to go with the SIB200 corpus, as it is freely available under the CC-BY-SA 4.0 open license. This piece of code will fetch the corpus for us.

```python
from datasets import load_dataset

# Loads the Simplified Chinese portion of the dataset
ds = load_dataset("Davlan/sib200", "zho_Hans", split="all")

corpus = ds["text"]
```

Building a Chinese Topic Model

There are a number of tricky aspects to applying language models to Chinese, since most of these systems are developed and tested on English data. When it comes to KeyNMF, there are two aspects that need to be taken into account.

Firstly, we will need to figure out how to tokenize Chinese texts. Luckily, the Turftopic library, which contains our implementation of KeyNMF (among other things), comes prepackaged with tokenization utilities for Chinese. Normally, you would use a CountVectorizer object from sklearn to extract words from text. Chinese, however, is written without spaces between words, so it needs a dedicated word segmenter. We added a ChineseCountVectorizer object that uses the Jieba tokenizer in the background and has an optional Chinese stop word list.

```python
from turftopic.vectorizers.chinese import ChineseCountVectorizer

# Jieba-based vectorizer with the built-in Chinese stop word list
vectorizer = ChineseCountVectorizer(stop_words="chinese")
```

Secondly, we will need a Chinese embedding model for producing document and word representations. We will use the paraphrase-multilingual-MiniLM-L12-v2 model for this, as it is rather compact and fast, and was specifically trained to be used in multilingual retrieval contexts.

```python
from sentence_transformers import SentenceTransformer

encoder = SentenceTransformer("paraphrase-multilingual-MiniLM-L12-v2")
```

We can then build a fully Chinese KeyNMF model! I will initialize a model with 20 topics and N=25, so a maximum of 25 keywords will be extracted for each document.

```python
from turftopic import KeyNMF

model = KeyNMF(
    n_components=20,
    top_n=25,
    vectorizer=vectorizer,
    encoder=encoder,
    random_state=42,  # Setting seed so that our results are reproducible
)
```

We can then fit the model to the corpus and see what results we get!

```python
document_topic_matrix = model.fit_transform(corpus)

model.print_topics()
```

| Topic ID | Highest Ranking |
|---|---|
| 0 | 旅行, 非洲, 徒步旅行, 漫步, 活动, 通常, 发展中国家, 进行, 远足, 徒步 |
| 1 | 滑雪, 活动, 滑雪板, 滑雪运动, 雪板, 白雪, 地形, 高山, 旅游, 滑雪者 |
| 2 | 会, 可能, 他们, 地球, 影响, 北加州, 并, 它们, 到达, 船 |
| 3 | 比赛, 选手, 锦标赛, 大回转, 超级, 男子, 成绩, 获胜, 阿根廷, 获得 |
| 4 | 航空公司, 航班, 旅客, 飞机, 加拿大航空公司, 机场, 达美航空公司, 票价, 德国汉莎航空公司, 行李 |
| 5 | 原子核, 质子, 能量, 电子, 氢原子, 有点像, 原子弹, 氢离子, 行星, 粒子 |
| 6 | 疾病, 传染病, 疫情, 细菌, 研究, 病毒, 病原体, 蚊子, 感染者, 真菌 |
| 7 | 细胞, cella, 小房间, cell, 生物体, 显微镜, 单位, 生物, 最小, 科学家 |
| 8 | 卫星, 望远镜, 太空, 火箭, 地球, 飞机, 科学家, 卫星电话, 电话, 巨型 |
| 9 | 猫科动物, 动物, 猎物, 狮子, 狮群, 啮齿动物, 鸟类, 狼群, 行为, 吃 |
| 10 | 感染, 禽流感, 医院, 病毒, 鸟类, 土耳其, 病人, h5n1, 家禽, 医护人员 |
| 11 | 抗议, 酒店, 白厅, 抗议者, 人群, 警察, 保守党, 广场, 委员会, 政府 |
| 12 | 旅行者, 文化, 耐心, 国家, 目的地, 适应, 人们, 水, 旅行社, 国外 |
| 13 | 速度, 英里, 半英里, 跑步, 公里, 跑, 耐力, 月球, 变焦镜头, 镜头 |
| 14 | 原子, 物质, 光子, 微小, 粒子, 宇宙, 辐射, 组成, 亿, 而光 |
| 15 | 游客, 对, 地区, 自然, 地方, 旅游, 时间, 非洲, 开车, 商店 |
| 16 | 互联网, 网站, 节目, 大众传播, 电台, 传播, toginetradio, 广播剧, 广播, 内容 |
| 17 | 运动, 运动员, 美国, 体操, 协会, 支持, 奥委会, 奥运会, 发现, 安全 |
| 18 | 火车, metroplus, metro, metrorail, 车厢, 开普敦, 通勤, 绕城, 城内, 三等舱 |
| 19 | 投票, 投票箱, 信封, 选民, 投票者, 法国, 候选人, 签名, 透明, 箱内 |

As you can see, we have already gained a sensible overview of what is in our corpus! The topics are quite distinct. Some of them are concerned with scientific subjects, such as astronomy (8), chemistry (5) or animal behaviour (9), while others are oriented towards leisure (e.g. 0, 1, 12) or politics (19, 11).
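Beyond reading keyword lists, it can help to check how many documents each topic actually dominates. The sketch below uses a hypothetical helper, `dominant_topics`, which is not part of Turftopic. It takes a dense document-topic matrix, such as the `document_topic_matrix` returned by `fit_transform` above, and assigns each document its highest-weighted topic. It then counts documents per topic. The toy matrix is made up for illustration.

```python
import numpy as np


def dominant_topics(document_topic_matrix):
    """Return each document's highest-weighted topic and document counts per topic."""
    doc_topic = np.asarray(document_topic_matrix)
    dominant = doc_topic.argmax(axis=1)
    counts = np.bincount(dominant, minlength=doc_topic.shape[1])
    return dominant, counts


# Toy document-topic matrix: 4 documents, 3 topics (made-up weights)
toy = np.array([
    [0.9, 0.1, 0.0],
    [0.2, 0.7, 0.1],
    [0.0, 0.3, 0.6],
    [0.8, 0.0, 0.2],
])
dominant, counts = dominant_topics(toy)
print(dominant, counts)
```

On the real model you would call `dominant_topics(document_topic_matrix)`. Note that `argmax` assigns topic 0 to a document whose row is all zeros, so such documents may be worth filtering out first.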

Visualization

To gain further insight into the results, we can use the topicwizard library to visually inspect the topic model’s parameters.

Since topicwizard uses wordclouds, we will need to tell the library to use a font that is compatible with Chinese. I took a font from the ChineseWordCloud repo, which we will download and then pass to topicwizard.

```python
import urllib.request

import topicwizard

# Download a font that can render Chinese characters in the wordclouds
urllib.request.urlretrieve(
    "https://github.com/shangjingbo1226/ChineseWordCloud/raw/refs/heads/master/fonts/STFangSong.ttf",
    "./STFangSong.ttf",
)

topicwizard.visualize(
    corpus=corpus, model=model, wordcloud_font_path="./STFangSong.ttf"
)
```

This will open the topicwizard web app in a notebook or in your browser, where you can interactively inspect your topic model.

In this article, we have looked at what KeyNMF is, how it works, what motivates it and how it can be used to discover high-quality topics in Chinese text. We have also covered how to visualize and interpret your results. I hope this tutorial will prove useful to those who wish to explore Chinese textual data.

For further information on the models and how to improve your results, I encourage you to check out our Documentation. If you have any questions or encounter problems, feel free to submit an issue on GitHub, or reach out in the comments :))

All figures presented in the article were produced by the author.