In the age of GPT-4 and Gemini 1.5, running an LLM on a smartphone feels almost… outdated. And yet, today I ran TinyLlama-1.1B on my phone, and it worked. No cloud. No GPU. Just an on-device neural network producing thoughtful responses in real time.

This post isn’t just about what I did; it’s about why that matters.

Most people interact with large language models through APIs (OpenAI, Google, Anthropic), which hide the heavy lifting behind paywalls and server farms.

But relying on cloud APIs creates a few key limitations:

- Privacy: Every prompt is sent to a remote server.

- Latency: Responses depend on network conditions.

- Cost: API calls add up fast in production.

- Dependence: Your app becomes tethered to external providers.

That’s where local LLMs enter the scene: tiny, quantized models you can run directly on your phone or laptop, stored in formats like GGUF and executed by runtimes like llama.cpp and MLC.

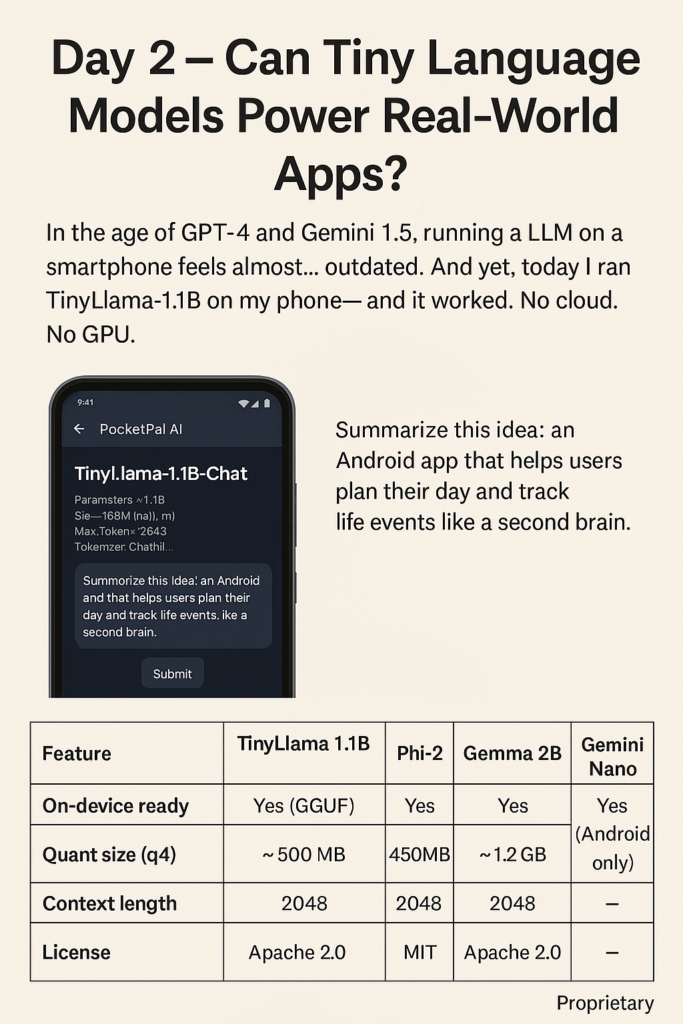

I downloaded an app called PocketPal AI from the Play Store. It supports GGUF-format models and uses GGML under the hood to run them on-device. Here is the TinyLlama-1.1B setup I tested:

- Parameters: ~1.1 billion

- Quantized size: ~500MB (q4_k_m)

- Context size: 2048 tokens

- Tokenizer: ChatML-compatible

- Hardware: Mid-range Android phone (Snapdragon 778G, 8GB RAM)
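
As a rough sanity check on the quantized size listed above, here is a back-of-the-envelope sketch in Python. It assumes the weights dominate the file and that every weight takes the same number of bits. That is a simplification: real q4_k_m files can come out somewhat different, because k-quant schemes also store scaling data and keep some tensors at higher precision. The helper name `quantized_size_mb` is made up for this illustration.

```python
def quantized_size_mb(n_params: float, bits_per_weight: float) -> float:
    """Approximate weight storage in megabytes (1 MB = 10**6 bytes).

    Assumes a uniform bit width per weight and ignores file metadata.
    """
    return n_params * bits_per_weight / 8 / 1e6


N_PARAMS = 1.1e9  # parameter count from the list above

# Compare a few uniform bit widths (illustrative, not exact GGUF sizes).
for label, bits in [("fp16", 16.0), ("8-bit", 8.0), ("4-bit", 4.0)]:
    print(f"{label:>5}: ~{quantized_size_mb(N_PARAMS, bits):,.0f} MB")
```

At a flat 4 bits per weight this lands at about 550 MB, the same ballpark as the figure above and roughly a quarter of the fp16 size, which is what makes the model practical on a phone with 8GB of RAM.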

I gave it a simple test prompt:

“Summarize this idea: an Android app that helps users plan their day and track life events like a second brain.”

It responded with:

“A personal assistant app that helps users organize tasks, record memories, and improve self-awareness.”

Not groundbreaking, but coherent, on-topic, and fast: around 1.2 tokens/sec on-device. That’s enough for journaling, note summarization, and even prompt rephrasing, all without hitting an API.
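
For anyone curious what a ChatML-compatible model actually receives, here is a minimal sketch of how the test prompt might be wrapped before tokenization. The app presumably applies the chat template for you, so this is only an illustration. The `to_chatml` helper and the system message are hypothetical, not something PocketPal exposes.

```python
def to_chatml(messages: list[dict[str, str]]) -> str:
    """Wrap chat messages in ChatML role markers.

    Each message becomes <|im_start|>{role}\\n{content}<|im_end|>, and the
    string ends with an open assistant turn for the model to complete.
    """
    parts = [
        f"<|im_start|>{m['role']}\n{m['content']}<|im_end|>\n" for m in messages
    ]
    parts.append("<|im_start|>assistant\n")
    return "".join(parts)


messages = [
    # Hypothetical system prompt, added only for illustration.
    {"role": "system", "content": "You are a concise assistant."},
    {
        "role": "user",
        "content": (
            "Summarize this idea: an Android app that helps users plan "
            "their day and track life events like a second brain."
        ),
    },
]

prompt = to_chatml(messages)
print(prompt)
```

The markers count against the 2048-token context window too, so on a model this small it pays to keep system prompts short.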

| Feature | TinyLlama 1.1B | Phi-2 | Gemma 2B | Gemini Nano |
| --- | --- | --- | --- | --- |
| On-device ready | Yes (GGUF) | Yes | Yes | Yes (Android only) |
| Quant size (q4) | ~500MB | ~1.2GB | ~1.5GB | OEM-only |
| Context length | 2048 | 2048 | 8192 | Unknown |
| License | Apache 2.0 | MIT | Apache 2.0 | Proprietary |

TinyLlama shines in minimal memory footprint, open weights, and speed on lower-end phones. However, it lacks reasoning depth and sometimes repeats or stalls on complex prompts. That makes it a poor fit for chatbot use, but great for lightweight tasks.

This one check gave me three insights:

- Local-first is viable for real apps. For journal apps, planners, or prompt engines, you can ship on-device AI with no external cost.

- Model size isn’t everything. TinyLlama performed better than expected. It shows that a well-trained small model can beat a huge model used poorly.

- This is the beginning. If models like TinyLlama are usable now, imagine what we’ll get in 6 months, with MLC, the Metal backend, or Google’s AICore pushing further.

Tomorrow I’ll start building the app shell in Kotlin: no ML yet, just setting up the structure. Eventually, TinyLlama (or a similar model) will power features like:

- Journaling assistant

- Goal-based suggestions

- Memory recall and semantic search

- Summarization and insight generation

But today proved that even a solo dev, on a budget, can build intelligent tools that don’t depend on the cloud.