This is day one of my 90-day full rebuild. I’m learning machine learning from the ground up, not just to pass a course, but to build mastery. Most people learn machine learning by copying code and moving on. I decided to do it the hard way. No co-pilots. No Cursor. No shortcuts. Just raw execution.

This week I started building a portfolio of real ML projects to break into the industry for real. I’m learning everything from first principles, documenting all of it, and sharing the process step by step. This is for anyone who’s curious, or learning ML from scratch and wants to follow along without all the BS.

GitHub repo: https://github.com/heyrobbb/housing-price-predictor

I used the California Housing dataset from sklearn.datasets. It’s based on 1990 US Census data and includes:

- Median Income

- Average Number of Rooms

- Population

- Latitude / Longitude

- Median House Value

It’s great for regression tasks because it’s already clean and structured. No missing values. You get to focus on the modeling, not data cleanup.

In a real-world case, you’d pull data from somewhere like Zillow or Redfin. But you’d have to clean it up, label it, structure it, and make sure nothing’s broken. For this project, I wanted to focus on building the model, so the simplest thing was to use sklearn.

We load the dataset in src/load.py:

from sklearn.datasets import fetch_california_housing

import pandas as pd

# load data

housing = fetch_california_housing(as_frame=True)

df = housing.frame

df.to_csv('../data/housing.csv', index=False)

print(df.head())

We’re using pandas to structure the data into a DataFrame. Think of it like an Excel spreadsheet, but programmable. df.head() prints the first five rows, and describe(), which the EDA script calls, gives us quick stats across all features so we can see what we’re working with.

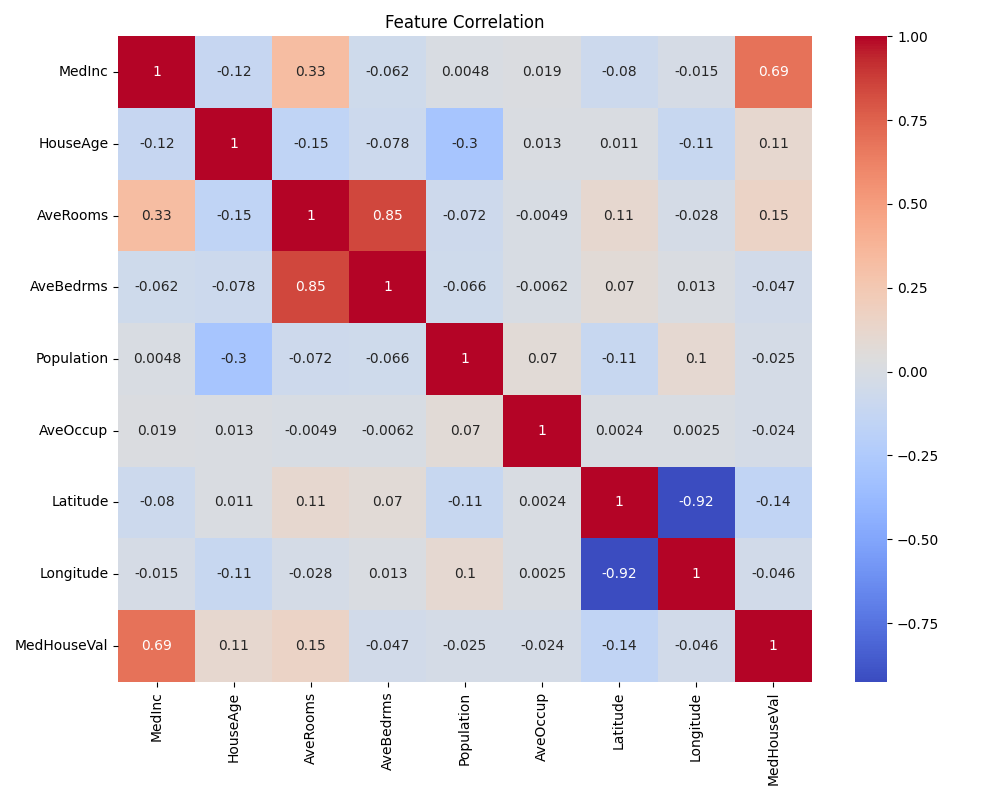

EDA (exploratory data analysis) helps us figure out what’s actually influencing the house prices. For example, median income shows a strong positive correlation with house value. No surprise there.

In src/eda.py:

import pandas as pd

import seaborn as sns

import matplotlib.pyplot as plt

import os

df = pd.read_csv('../data/housing.csv')

print("Data shape:\n", df.shape)

print("Columns:\n", df.columns.tolist())

print("Data Types:\n", df.dtypes)

print("Summary Stats:\n", df.describe())

# create the same directory the plots are saved to below

os.makedirs("../plots", exist_ok=True)

correlation = df.corr(numeric_only=True)

plt.figure(figsize=(10,8))

sns.heatmap(correlation, annot=True, cmap='coolwarm')

plt.title('Feature Correlation')

plt.tight_layout()

plt.savefig('../plots/correlation_heatmap.png')

print('Correlation heatmap saved.')

# Distribution of target

plt.figure(figsize=(8, 6))

sns.histplot(df['MedHouseVal'], bins=30, kde=True)

plt.title('Distribution of Median House Value')

plt.xlabel('Median House Value')

plt.ylabel('Frequency')

plt.tight_layout()

plt.savefig('../plots/target_distribution.png')

print('Target distribution plot saved.')

We’re printing out structure info, setting up a basic plots directory, and saving:

- A correlation heatmap (to show which features connect to price)

- A target distribution plot (to show how prices are spread out across the dataset)

This helps us get an early feel for what’s going to matter when we model.
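Each cell in that heatmap is a Pearson correlation coefficient, which is what df.corr() computes by default. Here is a minimal sketch of the same calculation in plain Python. The pearson helper and the toy income and value numbers are made up for illustration and are not taken from the dataset.

```python
import math


def pearson(xs, ys):
    """Pearson correlation between two equal-length lists of numbers."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of products of deviations from the mean (unnormalized covariance)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    # Sums of squared deviations (unnormalized variances)
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


# Made-up toy values standing in for median income and median house value
income = [1.5, 2.0, 3.5, 5.0, 8.0]
value = [0.9, 1.4, 2.1, 3.0, 4.8]

r = pearson(income, value)
print(f"correlation: {r:.3f}")
```

A value close to 1 means the two columns rise together, close to -1 means one falls as the other rises, and close to 0 means there is no linear relationship.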

In src/train_model.py, we train a linear regression model using scikit-learn.

import pandas as pd

from sklearn.linear_model import LinearRegression

from sklearn.model_selection import train_test_split

from sklearn.metrics import mean_squared_error, r2_score

import joblib

import os

# load dataset

df = pd.read_csv('../data/housing.csv')

# split features and target

# supervised problem

# Capital X is the convention for the input data (your features): rows + columns. It's capital because it's usually a whole matrix

X = df.drop("MedHouseVal", axis=1)

# lowercase y because it's a single column: X holds all the input data, y is what we want to predict

y = df["MedHouseVal"]

# Train/test split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# train model

model = LinearRegression()

model.fit(X_train, y_train)

# Evaluate

y_pred = model.predict(X_test)

mse = mean_squared_error(y_test, y_pred)

r2 = r2_score(y_test, y_pred)

print(f'MSE: {mse:.4f}')

print(f'R2 score: {r2:.4f}')

os.makedirs('../models', exist_ok=True)

joblib.dump(model, '../models/linear_regression_model.pkl')

print('model saved to ../models/linear_regression_model.pkl')

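We split the data into training and test sets (80/20), fit the model, and save it using joblib. MSE (Mean Squared Error) is the average of the squared differences between our predictions and the actual values, so lower is better. The R² score tells us how much of the variation in house values the model explains, with 1.0 being a perfect fit.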
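To see what those two metrics measure, here is a minimal sketch that computes them by hand in plain Python. The helper names mse_by_hand and r2_by_hand and the three toy values are made up for illustration. In the actual script, scikit-learn's mean_squared_error and r2_score do this work.

```python
def mse_by_hand(y_true, y_pred):
    """Average of the squared differences between actual and predicted values."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def r2_by_hand(y_true, y_pred):
    """1 minus (residual sum of squares / total sum of squares)."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1 - ss_res / ss_tot


# Made-up toy values, not real model output
y_true = [3.0, 5.0, 7.0]
y_pred = [2.5, 5.0, 7.5]

print(f"MSE: {mse_by_hand(y_true, y_pred):.4f}")      # MSE: 0.1667
print(f"R2 score: {r2_by_hand(y_true, y_pred):.4f}")  # R2 score: 0.9375
```

Because MSE squares each error, one big miss costs more than several small ones, and the result is in squared units of the target.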

In src/predict.py, we load the saved model and make predictions on new or test data.

import pandas as pd

import joblib

import os

# load model (joblib serializes models to disk and loads them back)

model = joblib.load('../models/linear_regression_model.pkl')

# load dataset

df = pd.read_csv('../data/housing.csv')

# separate features (same as training)

X = df.drop('MedHouseVal', axis=1)

# predict

predictions = model.predict(X)

df['PredictedHouseVal'] = predictions

# Save outcomes

os.makedirs('../output', exist_ok=True)

df.to_csv('../output/housing_with_predictions.csv', index=False)

print('predictions saved to ../output/housing_with_predictions.csv')

This script reloads our trained model and runs predictions on the dataset. From here, we could save these predictions or evaluate them in different ways (visualizations, deployment, etc).

import matplotlib.pyplot as plt

import pandas as pd

df = pd.read_csv('../output/housing_with_predictions.csv')

plt.figure(figsize=(8,6))

plt.scatter(df['MedHouseVal'], df['PredictedHouseVal'], alpha=0.3)

plt.xlabel('Actual Median House Value')

plt.ylabel('Predicted Median House Value')

plt.title('Predicted vs Actual')

plt.grid(True)

plt.tight_layout()

plt.savefig('../plots/predicted_vs_actual.png')

print("Saved predicted vs actual scatter plot.")

This shows how well the model’s predictions align with actual house prices. Ideally, the dots would line up along the diagonal. Because the plot reuses the predictions from predict.py, it covers every row in the dataset, including the rows the model was trained on, so it can look a little better than the model would on unseen data.
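Since predict.py scores every row, a fairer version of this check would use only rows the model never saw during training. Here is a minimal sketch of the idea behind train_test_split(test_size=0.2, random_state=42), written in plain Python. holdout_split is a hypothetical helper, and it will not reproduce scikit-learn's exact shuffle.

```python
import random


def holdout_split(rows, test_size=0.2, seed=42):
    """Shuffle row indices with a fixed seed and hold out a fraction for testing."""
    indices = list(range(len(rows)))
    # A seeded generator makes the split reproducible from run to run
    random.Random(seed).shuffle(indices)
    n_test = max(1, round(len(rows) * test_size))
    test_idx = set(indices[:n_test])
    train = [row for i, row in enumerate(rows) if i not in test_idx]
    test = [row for i, row in enumerate(rows) if i in test_idx]
    return train, test


# Ten placeholder rows standing in for rows of the housing DataFrame
rows = list(range(10))
train_rows, test_rows = holdout_split(rows)
print(len(train_rows), len(test_rows))  # 8 2
```

The fixed seed matters for the same reason random_state=42 does in the training script: rerunning gives the same split, so metric changes come from the model and not from a reshuffle.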

What I took away from day one:

- How linear regression works in models.

- How even a simple dataset can teach core ML concepts like correlation, overfitting risk, and model evaluation
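To make the linear regression point concrete: scikit-learn's LinearRegression fits ordinary least squares across all features at once. With a single feature, that reduces to a closed-form slope and intercept. Here is a minimal sketch in plain Python. fit_line is a hypothetical helper and the toy points are made up so that they lie exactly on y = 2x + 1.

```python
def fit_line(xs, ys):
    """Ordinary least squares for one feature: returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # slope = covariance(x, y) / variance(x)
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    # The fitted line always passes through the point (mean_x, mean_y)
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Toy points chosen to lie exactly on y = 2x + 1
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]

slope, intercept = fit_line(xs, ys)
print(slope, intercept)  # 2.0 1.0
```

With eight features the arithmetic becomes matrix algebra, but the goal is the same: pick the coefficients that minimize the squared error on the training rows.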

This was day one of my rebuild, and it’s been really fun to dive into the real ML world rather than just mess around with LLMs, and to actually get back into real code and understanding.

During this ninety-day rebuild, I write every line of code by hand. No co-pilots, no LLMs. Just me, my MBP and a desk.

We keep pushing.

GitHub Repo: https://github.com/heyrobbb/housing-price-predictor