So you're at a party, and someone mentions they work in "AI." Within minutes, you're drowning in buzzwords: machine learning, deep learning, neural networks, artificial intelligence. Sound familiar? I've been there, nodding along while internally screaming "what's the actual difference between all these things?"

Here's the thing: deep learning is actually a subset of machine learning, which is itself a subset of artificial intelligence. It's like Russian nesting dolls, but with algorithms instead of wooden figures. The confusion is completely understandable, because these terms get thrown around interchangeably, even by people who should know better.

I spent months confused about this distinction when I first started learning about AI. Let me save you that headache and break down exactly what separates deep learning from traditional machine learning, and why it matters for your projects.

Let's start with the family tree. Artificial intelligence is the grandparent: the broad goal of making machines think and act intelligently. Machine learning is the parent: algorithms that learn patterns from data without being explicitly programmed. Deep learning is the child: a specific type of machine learning that uses neural networks with many layers.

Think of it this way:

- AI: Making computers smart

- Machine Learning: Teaching computers to learn from data

- Deep Learning: Teaching computers to learn using brain-inspired networks

The confusion often comes from people using these terms as if they were competing technologies. They're not. Deep learning is just one approach within the broader machine learning toolkit.

Machine learning is like teaching a computer to recognize patterns without giving it explicit instructions. Instead of programming every possible scenario, you feed the algorithm examples and let it figure out the rules.

Classic machine learning algorithms include:

- Linear Regression: finding the best line through data points

- Decision Trees: building a flowchart of yes/no questions

- Random Forest: combining many decision trees

- Support Vector Machines: finding the best boundary between classes

- K-Means Clustering: grouping similar data points together
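To make the first item concrete, here's a minimal sketch of simple linear regression fit with the closed-form least-squares formulas, written in plain Python so it runs without any libraries. The function names and the hours-vs-score data are made up for illustration; in real projects you'd more likely use a library such as scikit-learn.

```python
def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    if n < 2 or n != len(ys):
        raise ValueError("need at least two (x, y) pairs of equal length")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    if var_x == 0:
        raise ValueError("all x values are identical; slope is undefined")
    slope = cov_xy / var_x
    # The least-squares line always passes through (mean_x, mean_y)
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(slope, intercept, x):
    return slope * x + intercept


# Toy data (made up): hours studied vs. exam score
hours = [1, 2, 3, 4, 5]
scores = [52, 55, 61, 64, 68]
slope, intercept = fit_line(hours, scores)
print(f"score = {slope:.2f} * hours + {intercept:.2f}")
```

On this toy data it prints a slope of 4.10 and an intercept of 47.70. Nobody told the algorithm that rule; it was derived from the examples.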

The typical ML workflow:

- Collect and clean your data

- Manually engineer features (decide what's important)

- Choose an algorithm

- Train the model

- Evaluate and tune
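Here's a compressed, library-free sketch of those five steps on a tiny, made-up customer-churn dataset. The field names, the records, and the choice of a decision stump (a decision tree that asks a single yes/no question) are all assumptions for illustration. With only two held-out examples the accuracy figure means nothing statistically; the point is the shape of the workflow.

```python
# Step 1: collect and clean (made-up records; None marks a missing value)
raw_records = [
    {"support_calls": 0, "months_active": 24, "churned": False},
    {"support_calls": 5, "months_active": 3, "churned": True},
    {"support_calls": 1, "months_active": 18, "churned": False},
    {"support_calls": None, "months_active": 7, "churned": True},
    {"support_calls": 4, "months_active": 5, "churned": True},
    {"support_calls": 2, "months_active": 30, "churned": False},
    {"support_calls": 6, "months_active": 2, "churned": True},
    {"support_calls": 0, "months_active": 12, "churned": False},
]
records = [r for r in raw_records if None not in r.values()]


# Step 2: manually engineer features (we decide what matters)
def features(record):
    return {"support_calls": record["support_calls"], "months_active": record["months_active"]}


# Step 3: choose an algorithm: a decision stump, i.e. a one-question decision tree
def train_stump(rows, labels):
    best = None
    for name in rows[0]:
        for threshold in sorted({row[name] for row in rows}):
            for churn_if_above in (True, False):
                preds = [(row[name] > threshold) == churn_if_above for row in rows]
                accuracy = sum(p == y for p, y in zip(preds, labels)) / len(labels)
                if best is None or accuracy > best[0]:
                    best = (accuracy, name, threshold, churn_if_above)
    return best[1:]  # (feature name, threshold, churn_if_above)


def predict(stump, row):
    name, threshold, churn_if_above = stump
    return (row[name] > threshold) == churn_if_above


# Step 4: train on the first five cleaned records, holding the rest out
X = [features(r) for r in records]
y = [r["churned"] for r in records]
stump = train_stump(X[:5], y[:5])

# Step 5: evaluate on held-out data (tuning would mean revisiting steps 2 and 3)
held_out = list(zip(X[5:], y[5:]))
held_out_accuracy = sum(predict(stump, row) == label for row, label in held_out) / len(held_out)
op = ">" if stump[2] else "<="
print(f"Rule: churned if {stump[0]} {op} {stump[1]}; held-out accuracy {held_out_accuracy:.0%}")
```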

The key here is feature engineering: you need to tell the algorithm what to pay attention to. If you're predicting house prices, you decide that square footage, location, and number of bedrooms are the important features.
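As a minimal sketch of what that hand-crafting looks like in code: the listing fields, the location categories, and the derived "square feet per bedroom" feature below are hypothetical choices, which is exactly the point. A human decides what goes into the feature vector and what gets ignored.

```python
# Hypothetical location categories for a one-hot encoding
LOCATIONS = ["downtown", "suburb", "rural"]


def engineer_features(listing):
    """Turn a raw listing into the numeric features we chose by hand."""
    sqft = float(listing["square_feet"])
    bedrooms = float(listing["bedrooms"])
    features = [
        sqft,
        bedrooms,
        # A derived feature: our guess that "roominess" matters
        sqft / bedrooms if bedrooms else sqft,
    ]
    # One-hot encode location so each area can get its own price offset
    features += [1.0 if listing["location"] == loc else 0.0 for loc in LOCATIONS]
    return features


listing = {
    "square_feet": 1500,
    "bedrooms": 3,
    "location": "suburb",
    "agent_name": "Pat",  # ignored: we decided it isn't predictive
}
print(engineer_features(listing))
```

This prints `[1500.0, 3.0, 500.0, 0.0, 1.0, 0.0]`. A location outside the list simply gets all zeros, another decision someone has to make consciously.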

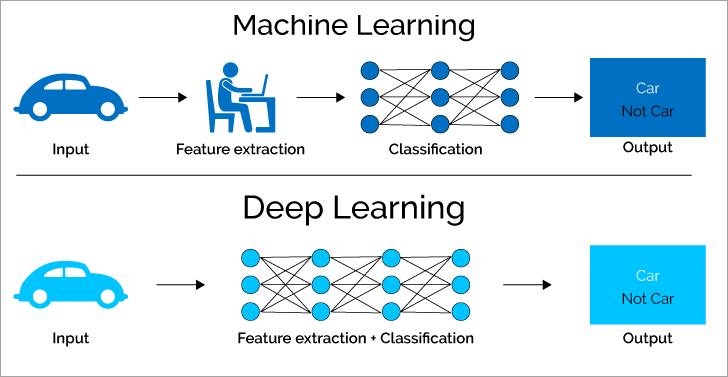

Deep learning flipped the script by automating feature engineering. Instead of you deciding what's important, the neural network figures it out during training. It's like having a really smart assistant who can spot patterns you never even thought to look for.

What makes deep learning "deep":

- Multiple layers of artificial neurons (often three or more hidden layers, though there's no strict cutoff)

- Each layer learns increasingly complex features

- Automatic feature extraction from raw data

- The ability to handle unstructured data like images, text, and audio
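To show what "stacked layers" means mechanically, here's a minimal forward pass through a network with three hidden layers in plain Python. The weights are hand-picked so the arithmetic is easy to follow; in a real network they would be learned during training, and you'd use a framework rather than nested lists.

```python
def relu(vector):
    return [max(0.0, v) for v in vector]


def dense(inputs, weights, biases):
    """One fully connected layer: each output is a weighted sum of all inputs plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b for row, b in zip(weights, biases)]


def forward(inputs, layers):
    """Pass inputs through (weights, biases) layers, applying ReLU after each hidden layer."""
    activation = inputs
    for i, (weights, biases) in enumerate(layers):
        activation = dense(activation, weights, biases)
        if i < len(layers) - 1:  # no ReLU on the output layer
            activation = relu(activation)
    return activation


# 2 inputs -> three hidden layers of 2 neurons each -> 1 output (hand-picked weights)
layers = [
    ([[1, -1], [0.5, 0.5]], [0, 0]),
    ([[1, 1], [-1, 1]], [0, 0.5]),
    ([[2, 0], [0, 1]], [-1, 0]),
    ([[1, 1]], [0]),
]
print(forward([2.0, 1.0], layers))
```

This prints `[5.0]`. Each layer only ever sees the previous layer's output, which is what lets later layers build on features computed by earlier ones.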

The deep learning workflow:

- Collect massive amounts of data

- Design a neural network architecture

- Feed raw data directly into the network

- Let the network learn features automatically

- Fine-tune the architecture and hyperparameters
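Here's a deliberately tiny, shallow stand-in for that workflow: a network with one hidden layer trained by backpropagation on XOR, in plain Python. XOR is a common teaching example because no single straight line separates its classes, so the network has to learn intermediate features in its hidden layer on its own; we never tell it what those features should be. The hidden size, learning rate, epoch count, and seed are arbitrary assumptions. On most seeds the rounded predictions end up as 0, 1, 1, 0, but a network this small can occasionally stall, so treat that as typical rather than guaranteed.

```python
import math
import random


def sigmoid(z):
    # Numerically stable logistic function
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


# XOR: the output is 1 only when exactly one input is 1
DATA = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]


def train_xor(hidden=8, epochs=5000, lr=0.5, seed=0):
    rng = random.Random(seed)
    # Input->hidden weights/biases and hidden->output weights/bias, randomly initialized
    w1 = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(hidden)]
    b1 = [0.0] * hidden
    w2 = [rng.uniform(-1, 1) for _ in range(hidden)]
    b2 = 0.0

    def forward(x):
        h = [sigmoid(row[0] * x[0] + row[1] * x[1] + b) for row, b in zip(w1, b1)]
        p = sigmoid(sum(w * hj for w, hj in zip(w2, h)) + b2)
        return h, p

    def mean_loss():
        # Mean binary cross-entropy over the four examples
        total = 0.0
        for x, y in DATA:
            p = min(max(forward(x)[1], 1e-12), 1 - 1e-12)
            total -= y * math.log(p) + (1 - y) * math.log(1 - p)
        return total / len(DATA)

    initial = mean_loss()
    n = len(DATA)
    for _ in range(epochs):
        gw1 = [[0.0, 0.0] for _ in range(hidden)]
        gb1 = [0.0] * hidden
        gw2 = [0.0] * hidden
        gb2 = 0.0
        for x, y in DATA:
            h, p = forward(x)
            d_out = p - y  # gradient of cross-entropy w.r.t. the output pre-activation
            gb2 += d_out
            for j in range(hidden):
                gw2[j] += d_out * h[j]
                d_h = d_out * w2[j] * h[j] * (1 - h[j])  # chain rule through hidden sigmoid
                gb1[j] += d_h
                gw1[j][0] += d_h * x[0]
                gw1[j][1] += d_h * x[1]
        # Full-batch gradient descent step on every weight
        b2 -= lr * gb2 / n
        for j in range(hidden):
            w2[j] -= lr * gw2[j] / n
            b1[j] -= lr * gb1[j] / n
            w1[j][0] -= lr * gw1[j][0] / n
            w1[j][1] -= lr * gw1[j][1] / n
    preds = [round(forward(x)[1]) for x, _ in DATA]
    return initial, mean_loss(), preds


initial_loss, final_loss, preds = train_xor()
print(f"loss {initial_loss:.3f} -> {final_loss:.3f}, predictions {preds}")
```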

I remember my first "aha" moment with deep learning. I was working on image classification, spending weeks trying to hand-craft features like edge detection and color histograms. Then I tried a simple convolutional neural network, fed it raw pixel values, and it immediately outperformed everything I'd built. That's when I realized the power of automated feature learning.
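Here's a minimal sketch of the hand-crafted side of that story: a Sobel-style vertical-edge kernel slid over a toy image. The operation is a "valid" 2D cross-correlation, which is what many deep learning libraries implement under the name convolution. The key difference in a CNN is that the kernel values are learned during training instead of being picked by a person. The image and helper names here are made up for illustration.

```python
def convolve2d(image, kernel):
    """Valid-mode 2D cross-correlation (what many DL libraries call convolution)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(kernel[i][j] * image[r + i][c + j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# Hand-crafted vertical-edge detector (Sobel-style kernel)
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]

# 4x5 toy grayscale image: dark on the left, bright on the right
image = [
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
]
print(convolve2d(image, SOBEL_X))
```

This prints `[[0, 36, 36], [0, 36, 36]]`: zero response over the flat dark region and a strong response wherever the window straddles the dark-to-bright edge.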

Data requirements:

- Traditional ML: can work with smaller datasets (hundreds to thousands of examples)

- Deep learning: hungry for data (often needs thousands to millions of examples)

This is huge. If you're working with a small dataset, traditional ML algorithms often perform better than deep learning models. Don't let anyone tell you that deep learning is always better; it's not.

Feature engineering:

- Traditional ML: you manually decide which features matter

- Deep learning: the network discovers features automatically

Example: for email spam detection, traditional ML might use features like "number of exclamation marks" or "presence of certain keywords." Deep learning would analyze the raw text and discover these patterns (and many others) automatically.
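On the traditional side, those features are literally functions someone writes. Here's a minimal sketch; the keyword list and the "all-caps words" feature are hypothetical choices, and in practice you'd feed these numbers into a classifier such as logistic regression or a decision tree.

```python
import re

# Hypothetical keyword list; a real system would derive and maintain this from data
SPAM_KEYWORDS = {"free", "winner", "urgent", "prize"}


def spam_features(email_text):
    """Hand-engineered spam features: each one is a human guess about what matters."""
    words = re.findall(r"[a-z']+", email_text.lower())
    return {
        "exclamation_marks": email_text.count("!"),
        "keyword_hits": sum(1 for w in words if w in SPAM_KEYWORDS),
        "all_caps_words": sum(1 for w in email_text.split() if len(w) > 1 and w.isupper()),
        "word_count": len(words),
    }


print(spam_features("URGENT: You are a WINNER! Claim your FREE prize now!!!"))
```

For that message it returns 4 exclamation marks, 4 keyword hits, 3 all-caps words, and 10 words. A deep learning model would skip this function entirely and learn its own representation from the raw text.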

Interpretability:

- Traditional ML: usually easier to understand why a decision was made

- Deep learning: often a "black box," making specific predictions hard to explain

If you need to explain your model's decisions (think medical diagnosis or loan approval), traditional ML might be your better bet. Deep learning models can be notoriously difficult to interpret.
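Here's a minimal sketch of why simple models are easier to explain. With a linear scoring model, each feature's contribution is just weight × value, so you can state exactly what pushed a decision one way or the other. The weights, bias, and applicant below are hypothetical and hand-set for illustration; they are not a real credit model.

```python
# Hypothetical, hand-set weights for illustration only (not a real credit model)
WEIGHTS = {"income_10k": 0.5, "debt_ratio": -4.0, "late_payments": -1.0}
BIAS = -0.5


def score_with_explanation(applicant):
    # Each feature's contribution is weight * value, so it can be read off directly
    contributions = {name: w * applicant[name] for name, w in WEIGHTS.items()}
    score = BIAS + sum(contributions.values())
    return score, contributions


applicant = {"income_10k": 6, "debt_ratio": 0.5, "late_payments": 1}
score, contributions = score_with_explanation(applicant)
print("approve" if score >= 0 else "decline", f"(score {score:+.1f})")
for name, value in sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True):
    print(f"  {name}: {value:+.1f}")
```

Here the applicant is declined with a score of -0.5, and the printout shows income adding +3.0 while debt ratio (-2.0) and late payments (-1.0) pulled the score down. A deep network's millions of weights offer no equally direct reading.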

Computational requirements:

- Traditional ML: often runs fine on a laptop

- Deep learning: often needs GPUs and significant computing power

FYI, training a deep learning model can take hours or days, while traditional ML models often train in minutes. This matters for both development time and deployment costs.

Choose traditional ML when:

- You have limited data (fewer than 10,000 examples)

- You need interpretability (you must explain decisions)

- You're working with structured/tabular data

- You have limited computational resources

- You need fast training and prediction times

Real-world examples:

- Predicting customer churn from sales data

- Medical diagnosis where explanations are crucial

- Fraud detection in financial transactions

- A/B testing analysis

Choose deep learning when:

- You have massive datasets (100,000+ examples)

- You're working with unstructured data (images, text, audio)

- You can afford the computational cost

- You need state-of-the-art performance and interpretability isn't crucial

- You're dealing with complex patterns that are hard to engineer manually

Real-world examples:

- Image recognition and computer vision

- Natural language processing

- Speech recognition

- Autonomous vehicles

- Game playing (like AlphaGo)
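If it helps to see the two lists above as logic, here's a rough rule-of-thumb function. The 10,000 and 100,000 thresholds come from those lists; the function name, its parameters, and the order in which the checks are applied are my own simplifying assumptions, and anything in the gray zone is honestly "try both and compare."

```python
UNSTRUCTURED = {"image", "text", "audio"}


def suggest_approach(n_examples, data_type, must_explain, has_gpu):
    """Rough rule of thumb only; not a substitute for benchmarking both approaches."""
    unstructured = data_type in UNSTRUCTURED
    # Hard constraints first: explainability or limited compute favor traditional ML
    if must_explain or not has_gpu:
        return "traditional ML"
    if unstructured and n_examples >= 100_000:
        return "deep learning"
    if not unstructured and n_examples < 10_000:
        return "traditional ML"
    return "try both and compare"


print(suggest_approach(5_000, "tabular", must_explain=False, has_gpu=True))
print(suggest_approach(500_000, "image", must_explain=False, has_gpu=True))
```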

Here's something most articles don't tell you: the best solution often combines both approaches. In my experience, successful AI projects frequently use traditional ML for some parts and deep learning for others.

Hybrid approaches work great:

- Use deep learning to extract features from images, then traditional ML to classify them

- Apply traditional ML for data preprocessing, then deep learning for the main task

- Combine multiple models (ensemble methods) for better performance
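The last bullet can be as simple as majority voting. Here's a minimal sketch using `collections.Counter`; the three prediction lists are made up, standing in for the outputs of, say, a decision tree, a logistic regression, and a neural network. Voting tends to help most when the models make different mistakes.

```python
from collections import Counter


def majority_vote(predictions_per_model):
    """Combine several models' label predictions by majority vote, example by example."""
    combined = []
    for votes in zip(*predictions_per_model):
        # most_common(1) returns [(label, count)]; ties go to the label encountered first
        combined.append(Counter(votes).most_common(1)[0][0])
    return combined


# Made-up predictions from three different models on the same four emails
tree_preds = ["spam", "ham", "spam", "ham"]
linear_preds = ["spam", "spam", "ham", "ham"]
net_preds = ["ham", "spam", "spam", "ham"]
print(majority_vote([tree_preds, linear_preds, net_preds]))
```

This prints `['spam', 'spam', 'spam', 'ham']`: each model is outvoted on the example where it disagrees with the other two.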

There's a well-known result in machine learning, the "No Free Lunch" theorem, that basically says no single algorithm works best for all problems. This applies perfectly to the deep learning vs. traditional ML debate. The best approach depends entirely on your specific problem, data, and constraints.

I've seen projects where a simple logistic regression outperformed a complex deep learning model. I've also seen cases where only deep learning could solve the problem. The key is understanding your situation and choosing accordingly.

Myth: "Deep learning is always better." Nope. On tabular data with limited samples, traditional ML often wins. Deep learning shines with unstructured data and large datasets.

Myth: "Traditional ML is obsolete." Definitely not. Traditional ML algorithms are still widely used in production systems. They're often more reliable, faster, and easier to maintain than deep learning models.

Myth: "You have to pick one approach." False. The best practitioners use both approaches and know when to apply each one. It's not about loyalty to a particular technique; it's about solving problems effectively.

Myth: "Deep learning is too complex to get started with." Partially true. While the theory can be complex, modern frameworks like TensorFlow and PyTorch make implementation surprisingly accessible. You can build working deep learning models with just a few lines of code.

If you're just starting out, here's what I recommend:

Start with traditional ML to understand core concepts like overfitting, cross-validation, and feature importance. These fundamentals apply to all machine learning approaches.
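Cross-validation is a good first concept to build by hand. Here's a minimal k-fold splitter: every example lands in exactly one validation fold, and comparing training scores against validation scores across folds is how you spot overfitting. The fold sizing (the first `n % k` folds get one extra item) mirrors how scikit-learn's `KFold` sizes folds when shuffling is off; in practice you'd usually shuffle first and use the library version.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, validation_indices) for k contiguous, near-equal folds."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    # The first n_samples % k folds get one extra example
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        validation = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        yield train, validation
        start += size


for train, validation in k_fold_indices(10, 3):
    print("validate on", validation, "train on", len(train), "examples")
```

With 10 examples and 3 folds, the validation sets are `[0, 1, 2, 3]`, `[4, 5, 6]`, and `[7, 8, 9]`.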

Learn about different problem types:

- Supervised vs. unsupervised learning

- Classification vs. regression

- Time series vs. static data

Then explore deep learning for specific use cases where it excels. Don't jump straight to deep learning without understanding the basics; you'll miss crucial insights about why certain approaches work.

Practice with real projects that let you compare both approaches. Nothing beats hands-on experience for learning when to use which tool.

The whole "deep learning vs. machine learning" debate misses the point. They're not competitors; they're tools in the same toolbox. The question isn't which one is better, but which one fits your specific problem.

Traditional machine learning gives you interpretability, works with smaller datasets, and often provides faster, more reliable solutions. Deep learning excels with unstructured data, large datasets, and complex pattern recognition tasks.

The real skill is knowing which tool to reach for when you encounter a new problem. Sometimes it's a hammer (traditional ML), sometimes it's a precision instrument (deep learning), and sometimes you need both.

Don't get caught up in the hype around any particular approach. Focus on understanding your data, defining your problem clearly, and choosing the right solution for your requirements. Whether that's linear regression or a transformer model, the best algorithm is the one that solves your problem effectively.

Remember: good data beats fancy algorithms every time. IMO, spending time understanding your data and your problem will get you further than chasing the latest algorithmic trend. Master the fundamentals, then explore the cutting edge; that's how you build real expertise in this field.