This is the third blog in my DeepSeek model technical analysis series. For the full background, please refer to the first blog of this series, “DeepSeek Technical Analysis — (1) MoE”.

In the last 2 blogs, I explained Mixture-of-Experts (MoE) and Multi-Head Latent Attention (MLA), respectively. MoE reduced the training cost significantly by reducing the number of activated parameters for each token. For example, DeepSeek-V3-671B activates only 37B parameters per token. MLA reduced the KV cache size by 93.3% (compared to the original Multi-Head Attention) and boosted inference speed several times over.

In this blog, I’ll focus on another technique adopted by DeepSeek (starting from V3): Multi-Token Prediction, which can improve the performance (accuracy) of the model.

Large language models such as GPT and Llama are trained with a next-token prediction loss. These models learn from a large text corpus $x_1, \ldots, x_T$ by performing a next-token prediction task. Formally, the learning objective is to minimize the cross-entropy loss:

$$L_1 = -\sum_t \log P_\theta(x_{t+1} \mid x_{t:1})$$

where $P_\theta$ is the large language model under training. Minimizing this loss maximizes the probability of $x_{t+1}$ as the next token, given the history of past tokens $x_{t:1} = x_t, \ldots, x_1$.
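
To make this objective concrete, here is a minimal pure-Python sketch (not code from any real training framework) that sums the per-position cross-entropy terms for one sequence. It assumes the model has already produced a vector of vocabulary logits at every position. The names `log_softmax` and `next_token_loss`, the logits layout, and the toy values are all illustrative assumptions.

```python
import math
from typing import List, Sequence


def log_softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable log-softmax over one vocabulary distribution."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(v - m) for v in logits))
    return [v - log_z for v in logits]


def next_token_loss(logits: Sequence[Sequence[float]], tokens: Sequence[int]) -> float:
    """-sum_t log P(x_{t+1} | x_{t:1}) for one sequence.

    logits[t] (hypothetical layout) is the model output after reading
    tokens[0..t]; it is scored against the next token tokens[t + 1].
    """
    loss = 0.0
    for t in range(len(tokens) - 1):
        loss -= log_softmax(logits[t])[tokens[t + 1]]
    return loss


# Toy example: vocabulary of 3 tokens, a 3-token sequence gives 2 predictions.
tokens = [0, 2, 1]
logits = [
    [0.0, 0.0, 0.0],  # uniform guess for tokens[1]
    [0.0, 0.0, 0.0],  # uniform guess for tokens[2]
]
print(next_token_loss(logits, tokens))  # equals 2 * log(3), about 2.197
```

Real training code computes the same quantity in batched form, typically by shifting the labels one position relative to the logits. The explicit loop here only mirrors the sum over $t$.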

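The paper Better & Faster Large Language Models via Multi-token Prediction (2024) generalized the above by implementing a multi-token prediction task: at each position of the training corpus, the model is instructed to predict n future tokens at once, in parallel, using independent output heads. This translates into the cross-entropy loss:

$$L_n = -\sum_t \log P_\theta(x_{t+n:t+1} \mid x_{t:1}) = -\sum_t \sum_{i=1}^{n} \log P_\theta(x_{t+i} \mid x_{t:1})$$

where the second form follows from the independent output heads, each predicting one future token from the same shared context.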
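
Here is a minimal pure-Python sketch of this n-head loss, as a hypothetical illustration rather than the paper's code. It assumes `head_logits[i][t]` holds head i's vocabulary logits at position t, that all heads read the same context, and that head i is scored against the token i + 1 steps ahead. The per-head cross-entropy terms are summed over all positions and all heads, so with a single head it reduces to ordinary next-token prediction.

```python
import math
from typing import List, Sequence


def log_softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable log-softmax over one vocabulary distribution."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(v - m) for v in logits))
    return [v - log_z for v in logits]


def multi_token_loss(head_logits: Sequence[Sequence[Sequence[float]]],
                     tokens: Sequence[int]) -> float:
    """-sum_t sum_i log P(x_{t+i} | x_{t:1}) with n independent output heads.

    head_logits[i][t] (hypothetical layout) is head i's distribution at
    position t and is scored against tokens[t + i + 1]. Positions whose
    target would fall past the end of the sequence are skipped.
    """
    loss = 0.0
    for i, logits in enumerate(head_logits):  # head i predicts i + 1 steps ahead
        for t in range(len(tokens) - 1 - i):
            loss -= log_softmax(logits[t])[tokens[t + i + 1]]
    return loss


# Toy example: n = 2 heads, vocabulary of 3, uniform predictions everywhere.
tokens = [0, 2, 1]
uniform = [[0.0, 0.0, 0.0]] * 2  # 2 positions x 3 vocabulary logits
print(multi_token_loss([uniform, uniform], tokens))  # 3 * log(3), about 3.296
```

Skipping positions whose target lies beyond the end of the sequence is one simple choice; padding the sequence instead would work as well.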

The paper ran several experiments to find the optimal n (how many future tokens to predict). It also verified that multi-token prediction can improve performance (accuracy) over next-token prediction when training language models for generative or reasoning tasks.

The paper also showed that multi-token prediction leads to qualitative changes in model capabilities and generalization behavior. The likely reason is that multi-token prediction mitigates the distributional discrepancy between training-time teacher forcing and inference-time autoregressive generation.

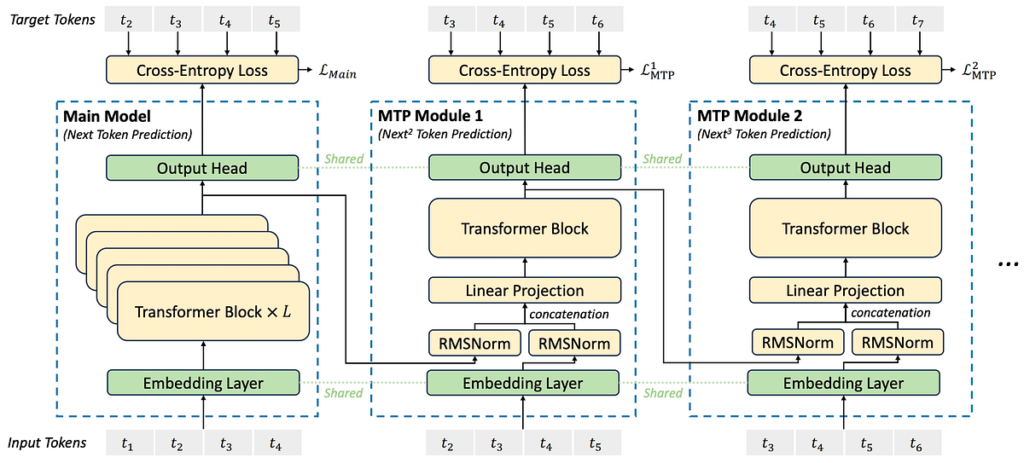

DeepSeek-V3 adopted the main idea of Multi-Token Prediction from the above paper, with one change: it predicts the additional tokens sequentially and keeps the complete causal chain at each prediction depth.

Instead of the parallel structure of the original paper, DeepSeek uses a chain-structured Multi-Token Prediction. The input tokens [t1, t2, t3, t4] go through the main model’s transformer blocks and then through the main model’s output head to predict the next token at each position, ending with t5. Meanwhile, the representation of the input tokens [t1, t2, t3, t4] (the output of the main model’s transformer blocks) is passed to the MTP module and combined with the embeddings of the tokens shifted by one position, [t2, t3, t4, t5], to predict one additional token at each position, ending with t6. During training, these shifted tokens are the ground-truth tokens from the corpus rather than the model’s own predictions. In DeepSeek-V3, each position predicts the next 2 tokens: the exact next token plus one additional token.
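
The index bookkeeping of this chain structure is easy to get wrong, so here is a minimal pure-Python sketch of two pieces of it, written as an illustrative assumption rather than DeepSeek’s code. `mtp_alignment` lists, for each prediction depth k, which token embedding is fed in and which token is the target. `mtp_combine` follows the DeepSeek-V3 report’s description of merging the previous depth’s hidden state $h_i^{k-1}$ with the embedding of token $t_{i+k}$: RMSNorm on both, concatenation, then a linear projection. The per-depth Transformer block and the output head (shared with the main model) are omitted, the RMSNorm here has no learned scale, and all names and toy values are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple

Vector = List[float]


def rms_norm(x: Sequence[float], eps: float = 1e-6) -> Vector:
    """RMSNorm without a learned scale (a simplifying assumption)."""
    rms = math.sqrt(sum(v * v for v in x) / len(x) + eps)
    return [v / rms for v in x]


def mtp_combine(h_prev: Sequence[float], emb_next: Sequence[float],
                proj: Sequence[Sequence[float]]) -> Vector:
    """Merge h_i^{k-1} with Emb(t_{i+k}): proj @ [RMSNorm(h); RMSNorm(emb)].

    proj is a d x 2d matrix, so the result has the hidden size d again and
    would next go through depth k's Transformer block (omitted here).
    """
    concat = rms_norm(h_prev) + rms_norm(emb_next)  # list concatenation, length 2d
    return [sum(w * v for w, v in zip(row, concat)) for row in proj]


def mtp_alignment(tokens: Sequence[str], depth: int) -> Dict[int, List[Tuple[str, str]]]:
    """(embedded token, target token) pairs for depths 0..depth.

    Depth 0 is the main model: position i reads t_i and predicts t_{i+1}.
    Depth k >= 1 combines h_i^{k-1} with Emb(t_{i+k}) and predicts t_{i+k+1}.
    Every depth uses the same positions i, so the causal chain stays aligned.
    """
    n_pos = len(tokens) - depth - 1  # positions that have a target at every depth
    return {k: [(tokens[i + k], tokens[i + k + 1]) for i in range(n_pos)]
            for k in range(depth + 1)}


seq = ["t1", "t2", "t3", "t4", "t5", "t6"]
for k, pairs in mtp_alignment(seq, depth=1).items():
    print(k, pairs)
# 0 [('t1', 't2'), ('t2', 't3'), ('t3', 't4'), ('t4', 't5')]
# 1 [('t2', 't3'), ('t3', 't4'), ('t4', 't5'), ('t5', 't6')]

# Toy hidden size d = 2; M selects RMSNorm(h)[0] and RMSNorm(e)[0].
h = [3.0, 4.0]   # hypothetical h_i^0 from the main model
e = [1.0, -1.0]  # hypothetical Emb(t_{i+1})
M = [[1.0, 0.0, 0.0, 0.0],
     [0.0, 0.0, 1.0, 0.0]]
print(mtp_combine(h, e, M))
```

With depth=1 the two printed rows reproduce the example above: the main model reads [t1, t2, t3, t4] and targets [t2, t3, t4, t5], while the MTP module reads [t2, t3, t4, t5] and targets [t3, t4, t5, t6].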

In the DeepSeek-V3 Technical Report, the authors showed that Multi-Token Prediction improves performance in most cases.

Does Multi-Token Prediction bring improvements in all cases? The paper Better & Faster Large Language Models via Multi-token Prediction (2024) showed that multi-token prediction may cause regressions on multiple-choice and likelihood-based benchmarks. The MMLU (Massive Multitask Language Understanding) regression (67.5 -> 66.6) in DeepSeek’s results with MTP is consistent with this conclusion.