Machine Learning (ML) has driven remarkable breakthroughs in computer vision, natural language processing, and speech recognition, largely due to the abundance of data in these fields. However, many challenges, especially those tied to specific product features or scientific research, suffer from limited data quality and quantity. This guide provides a roadmap for tackling small data problems based on your data constraints, and offers potential solutions to guide your decision making early on.

Raw data is rarely a blocker for ML projects. High-quality labels, on the other hand, are often prohibitively expensive and laborious to collect, because obtaining an expert-labelled “ground truth” requires domain expertise, extensive fieldwork, or specialised knowledge. For instance, your problem might focus on rare events such as endangered species monitoring, extreme climate events, or rare manufacturing defects. Other times, business-specific or scientific questions might be too specialised for off-the-shelf large-scale datasets. Ultimately, this means many projects fail because label acquisition is too expensive.

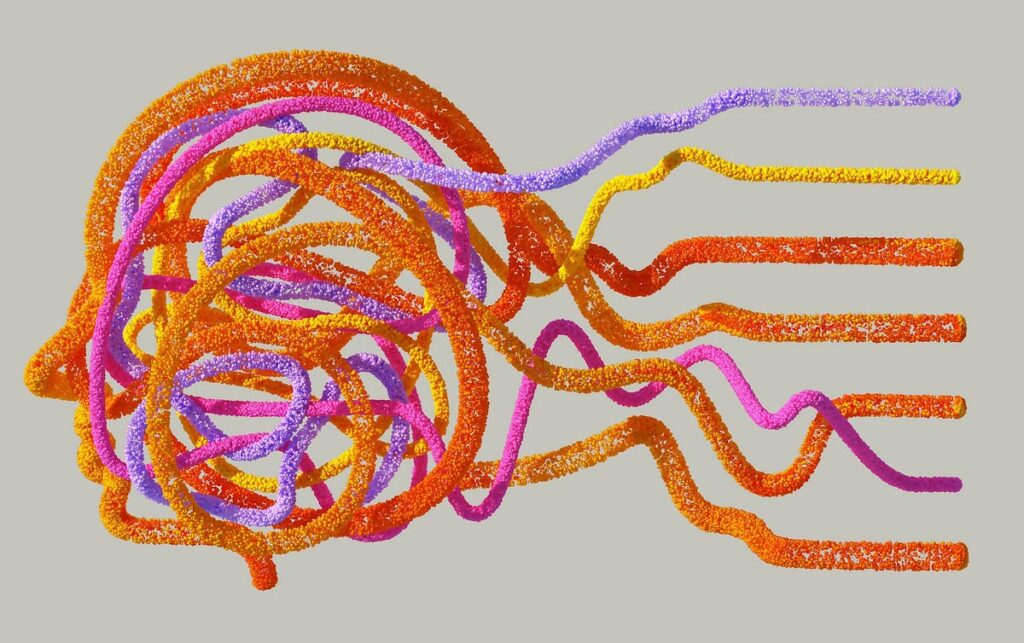

With only a small dataset, any new project starts off with inherent risks. How much of the true variability does your dataset capture? In many ways this question becomes unanswerable as your dataset gets smaller, which makes testing and validation increasingly difficult and leaves a great deal of uncertainty about how well your model actually generalises. Your model doesn’t know what your data doesn’t capture. With potentially only a few hundred samples, both the richness of the features you can extract and the number of features you can use without significant risk of overfitting (which in many cases you can’t measure) decrease. This often leaves you limited to classical ML algorithms (Random Forest, SVM, etc.) or heavily regularised deep learning methods. Class imbalance will only exacerbate your problems, and small datasets are even more sensitive to noise: just a few incorrect labels or faulty measurements can cause havoc and headaches.

For me, working the problem starts with asking a few simple questions about the data, labelling process, and end goals. By framing your problem with a “checklist”, we can clarify the constraints of your data. Have a go at answering the questions below:

Is your dataset fully, partially, or mostly unlabelled?

- Fully labelled: You have labels for (nearly) all samples in your dataset.

- Partially labelled: A portion of the dataset has labels, but there’s a large portion of unlabelled data.

- Mostly unlabelled: You have very few (or no) labelled data points.

How reliable are the labels you do have?

- Highly reliable: Multiple annotators agree on labels, or they’re confirmed by trusted experts or well-established protocols.

- Noisy or weak: Labels may be crowd-sourced, generated automatically, or prone to human or sensor error.

Are you solving one problem, or do you have multiple (related) tasks?

- Single-task: A singular objective, such as a binary classification or a single regression target.

- Multi-task: Multiple outputs or multiple objectives.

Are you dealing with rare events or heavily imbalanced classes?

- Yes: Positive examples are very scarce (e.g., “equipment failure,” “adverse drug reactions,” or “financial fraud”).

- No: Classes are somewhat balanced, or your task doesn’t involve highly skewed distributions.

Do you have expert knowledge available, and if so, in what form?

- Human experts: You can periodically query domain experts to label new data or verify predictions.

- Model-based experts: You have access to well-established simulation or physical models (e.g., fluid dynamics, chemical kinetics) that can inform or constrain your ML model.

- No: No relevant domain expertise is available to guide or correct the model.

Is labelling new data possible, and at what cost?

- Feasible and affordable: You can acquire more labelled examples if necessary.

- Difficult or expensive: Labelling is time-intensive, costly, or requires specialised domain knowledge (e.g., medical diagnosis, advanced scientific measurements).

Do you have prior knowledge or access to pre-trained models relevant to your data?

- Yes: There exist large-scale models or datasets in your domain (e.g., ImageNet for images, BERT for text).

- No: Your domain is niche or specialised, and there aren’t obvious pre-trained resources.

With your answers to the questions above ready, we can move towards establishing a list of potential techniques for tackling your problem. In practice, small dataset problems require hyper-nuanced experimentation, so before implementing the techniques below, give yourself a solid foundation: start with a simple model, get a full pipeline working as quickly as possible, and always cross-validate. This gives you a baseline against which to iteratively apply new techniques based on your error analysis, while focusing on conducting small-scale experiments. It also helps avoid building an overly complicated pipeline that’s never properly validated. With a baseline in place, chances are your dataset will evolve rapidly. Tools like DVC or MLflow help track dataset versions and ensure reproducibility. In a small-data scenario, even a handful of new labelled examples can significantly change model performance, and version control helps you manage that systematically.
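
As a rough illustration of the "simple model plus cross-validation" starting point, here is a minimal sketch using only NumPy. The nearest-centroid classifier, the synthetic two-class data, and all function names are assumptions made for this example rather than anything prescribed above; in a real project you would likely swap in your own data and a library implementation.

```python
import numpy as np

def nearest_centroid_fit(X, y):
    """Return the class labels and the mean feature vector of each class."""
    classes = np.unique(y)
    centroids = np.stack([X[y == c].mean(axis=0) for c in classes])
    return classes, centroids

def nearest_centroid_predict(model, X):
    """Assign each row of X to the class with the closest centroid."""
    classes, centroids = model
    dists = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
    return classes[dists.argmin(axis=1)]

def cross_validate(X, y, n_folds=5, seed=0):
    """Shuffle once, split into folds, and return accuracy on each held-out fold."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(len(X)), n_folds)
    scores = []
    for i in range(n_folds):
        test_idx = folds[i]
        train_idx = np.concatenate([f for j, f in enumerate(folds) if j != i])
        model = nearest_centroid_fit(X[train_idx], y[train_idx])
        preds = nearest_centroid_predict(model, X[test_idx])
        scores.append(float((preds == y[test_idx]).mean()))
    return scores

# Synthetic stand-in for a small dataset: 60 samples, 2 separated classes.
rng = np.random.default_rng(42)
X = np.vstack([rng.normal(0.0, 1.0, (30, 4)), rng.normal(3.0, 1.0, (30, 4))])
y = np.array([0] * 30 + [1] * 30)

scores = cross_validate(X, y)
print("accuracy per fold:", scores)
print(f"mean = {np.mean(scores):.2f}, std = {np.std(scores):.2f}")
```

With so few samples, the spread across folds is often as informative as the mean. Note that this sketch does not stratify the folds, which you would probably want when classes are imbalanced.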

With that in mind, here’s how your answers to the questions above point towards specific techniques described later in this post:

Fully Labelled + Single Task + Sufficiently Reliable Labels:

- Data Augmentation (Section 5.7) to increase effective sample size.

- Ensemble Methods (Section 5.9) if you can afford multiple model training cycles.

- Transfer Learning (Section 5.1) if a pre-trained model in your domain (or a related domain) is available.

Partially Labelled + Labelling is Reliable or Achievable:

- Semi-Supervised Learning (Section 5) to leverage a larger pool of unlabelled data.

- Active Learning (Section 5.6) if you have a human expert who can label the most informative samples.

- Data Augmentation (Section 5.7) where possible.

Rarely Labelled or Mostly Unlabelled + Expert Knowledge Available:

- Active Learning (Section 5.6) to selectively query an expert (especially if the expert is a person).

- Process-Aware (Hybrid) Models (Section 5.10) if your “expert” is a well-established simulation or model.

Rarely Labelled or Mostly Unlabelled + No Expert / No Additional Labels:

- Self-Supervised Learning (Section 5.2) to exploit inherent structure in unlabelled data.

- Few-Shot or Zero-Shot Learning (Section 5.4) if you can rely on meta-learning or textual descriptions to handle novel classes.

- Weakly Supervised Learning (Section 5.5) if your labels exist but are imprecise or high-level.

Multiple Related Tasks:

- Multitask Learning (Section 5.8) to share representations between tasks, effectively pooling “signal” across your entire dataset.

Dealing with Noisy or Weak Labels:

- Weakly Supervised Learning (Section 5.5) which explicitly handles label noise.

- Combine with Active Learning or a small “gold standard” subset to clean up the worst labelling errors.

Highly Imbalanced / Rare Events:

- Data Augmentation (Section 5.7) targeting minority classes (e.g., synthetic minority oversampling).

- Active Learning (Section 5.6) to specifically label more of the rare cases.

- Process-Aware Models (Section 5.10) or domain expertise to confirm rare cases, if possible.

Have a Pre-Trained Model or Domain-Specific Knowledge:

- Transfer Learning (Section 5.1) is often the quickest win.

- Process-Aware Models (Section 5.10) if combining your domain knowledge with ML can reduce data requirements.

Hopefully, the above has provided a starting point for solving your small data problem. It’s worth noting that many of the techniques discussed are complex and resource intensive, so keep in mind you’ll likely need to get buy-in from your team and project managers before starting. This is best done through clear, concise communication of the potential value they might provide. Frame experiments as strategic, foundational work that can be reused, refined, and leveraged for future projects. Focus on demonstrating clear, measurable impact from a short, tightly-scoped pilot.

Despite the relatively simple picture painted of each technique below, it’s important to keep in mind there’s no one-size-fits-all solution, and applying these techniques isn’t like stacking Lego bricks, nor do they work out-of-the-box. To get you started I’ve provided a brief overview of each technique. This is by no means exhaustive, but it offers a starting point for your own research.

Transfer learning is about reusing existing models to solve new related problems. By starting with pre-trained weights, you leverage representations learned from large, diverse datasets and fine-tune the model on your smaller, target dataset.

Why it helps:

- Leverages powerful features learnt from larger, often diverse datasets.

- Fine-tuning pre-trained models typically leads to higher accuracy, even with limited samples, while reducing training time.

- Ideal when compute resources or project timelines prevent training a model from scratch.

Tips:

- Select a model aligned with your problem domain or a large general-purpose “foundation model” like Mistral (language) or CLIP/SAM (vision), available on platforms like Hugging Face. These models often outperform domain-specific pre-trained models due to their general-purpose capabilities.

- Freeze layers that capture general features while fine-tuning only a few layers on top.

- To counter the risk of overfitting to your small dataset, try pruning. Here, less important weights or connections are removed, reducing the number of trainable parameters and increasing inference speed.

- If interpretability is required, large black-box models may not be ideal.

- Without access to the pre-trained model’s source dataset, you risk reinforcing sampling biases during fine-tuning.

A nice example of transfer learning is described in the following paper, where leveraging a pre-trained ResNet model enabled better classification of chest X-ray images for detecting COVID-19. Supported by the use of dropout and batch normalisation, the researchers froze the initial layers of the ResNet base model while fine-tuning later layers to capture task-specific, high-level features. This proved to be a cost-effective method for achieving high accuracy with a small dataset.

Self-supervised learning is a pre-training technique where artificial tasks (“pretext tasks”) are created to learn representations from broad unlabelled data. Examples include predicting masked tokens for text, or rotation prediction and colourisation for images. The result is general-purpose representations you can later pair with transfer learning (Section 5.1) or semi-supervised learning (Section 5) and fine-tune with your smaller dataset.

Why it helps:

- Pre-trained models serve as a strong initialisation point, reducing the risk of future overfitting.

- Learns to represent data in a way that captures intrinsic patterns and structures (e.g., spatial, temporal, or semantic relationships), making the representations more effective for downstream tasks.

Tips:

- Pretext tasks like cropping, rotation, colour jitter, or noise injection are excellent for visual tasks. However, it’s a balance, as excessive augmentation can distort the distribution of small data.

- Ensure unlabelled data is representative of the small dataset’s distribution to help the model learn features that generalise well.

- Self-supervised methods can be compute-intensive, often requiring enough unlabelled data to truly benefit and a large computation budget.

LEGAL-BERT is a prominent example of self-supervised learning. LEGAL-BERT is a domain-specific variant of the BERT language model, pre-trained on a large dataset of legal documents to improve its understanding of legal language, terminology, and context. The key is the use of unlabelled data, where techniques such as masked language modelling (the model learns to predict masked words) and next sentence prediction (learning the relationships between sentences, and determining if one follows another) remove the requirement for labelling. This text embedding model can then be used for more specific legal ML tasks.

Semi-supervised learning leverages a small labelled dataset together with a larger unlabelled set. The model iteratively refines predictions on unlabelled data to generate task-specific predictions that can be used as “pseudo-labels” for further iterations.

Why it helps:

- Labelled data guides the task-specific objective, while the unlabelled data is used to improve generalisation (e.g., via pseudo-labelling, consistency regularisation, or other techniques).

- Improves decision boundaries and can boost generalisation.

Tips:

- Consistency regularisation is a method that assumes model predictions should be consistent across small perturbations (noise, augmentations) made to unlabelled data. The idea is to “smooth” the decision boundary in sparsely populated high-dimensional space.

- Pseudo-labelling allows you to train an initial model with a small dataset and use its predictions on unlabelled data as “pseudo” labels for further training, with the aim of generalising better and reducing overfitting.
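
To make the pseudo-labelling loop concrete, here is a minimal sketch under stated assumptions: the "model" is a toy nearest-centroid classifier, confidence is a softmax over negative distances, and the `threshold` and `n_rounds` values are arbitrary illustrative choices. None of these come from a specific paper or library.

```python
import numpy as np

def fit_centroids(X, y, n_classes):
    """Toy model: one mean vector per class."""
    return np.stack([X[y == c].mean(axis=0) for c in range(n_classes)])

def predict_proba(centroids, X):
    """Softmax over negative distances, used as a crude confidence score."""
    d = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
    logits = -d
    logits = logits - logits.max(axis=1, keepdims=True)
    p = np.exp(logits)
    return p / p.sum(axis=1, keepdims=True)

def pseudo_label(X_lab, y_lab, X_unlab, n_classes, threshold=0.9, n_rounds=5):
    """Repeatedly add confidently predicted unlabelled points to the training set."""
    X_train, y_train = X_lab.copy(), y_lab.copy()
    remaining = X_unlab.copy()
    for _ in range(n_rounds):
        if len(remaining) == 0:
            break
        centroids = fit_centroids(X_train, y_train, n_classes)
        proba = predict_proba(centroids, remaining)
        confident = proba.max(axis=1) >= threshold
        if not confident.any():
            break
        # Treat confident predictions as labels for the next round.
        X_train = np.vstack([X_train, remaining[confident]])
        y_train = np.concatenate([y_train, proba[confident].argmax(axis=1)])
        remaining = remaining[~confident]
    n_added = len(y_train) - len(y_lab)
    return fit_centroids(X_train, y_train, n_classes), n_added

# Synthetic example: 6 labelled points, 200 unlabelled points.
rng = np.random.default_rng(0)
X_unlab = np.vstack([rng.normal(0.0, 1.0, (100, 2)), rng.normal(4.0, 1.0, (100, 2))])
y_hidden = np.array([0] * 100 + [1] * 100)  # only used to evaluate the sketch
X_lab = np.vstack([rng.normal(0.0, 1.0, (3, 2)), rng.normal(4.0, 1.0, (3, 2))])
y_lab = np.array([0, 0, 0, 1, 1, 1])

centroids, n_added = pseudo_label(X_lab, y_lab, X_unlab, n_classes=2)
accuracy = (predict_proba(centroids, X_unlab).argmax(axis=1) == y_hidden).mean()
print(f"pseudo-labelled points added: {n_added}, accuracy: {accuracy:.2f}")
```

The confidence threshold is the main design choice: set it too low and early mistakes are reinforced in later rounds, set it too high and very little unlabelled data is ever used.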

Financial fraud detection is a problem that naturally lends itself to semi-supervised learning, with very little real labelled data (confirmed fraud cases) and a large set of unlabelled transaction data. The following paper proposes a neat solution by modelling transactions, users, and devices as nodes in a graph, where edges represent relationships such as shared accounts or devices. The small set of labelled fraudulent data is then used to train the model by propagating fraud signals across the graph to the unlabelled nodes. For example, if a fraudulent transaction (labelled node) is connected to several unlabelled nodes (e.g., related users or devices), the model learns patterns and connections that might indicate fraud.

Few-shot and zero-shot learning refer to a broad collection of techniques designed to tackle very small datasets head on. Generally, these methods train a model to identify “novel” classes unseen during training, with a small labelled dataset used primarily for testing.

Why it helps:

- These approaches enable models to quickly adapt to new tasks or classes without extensive retraining.

- Useful for domains with rare or unique categories, such as rare diseases or niche object detection.

Tips:

- Probably the most common technique, known as similarity-based learning, trains a model to compare pairs of items and decide if they belong to the same class. By learning a similarity or distance measure, the model can generalise to unseen classes by comparing new instances to class prototypes (built from your small set of labelled data) during testing. This approach requires a good way to represent different types of input (an embedding), often created using Siamese neural networks or similar models.

- Optimisation-based meta-learning aims to train a model to quickly adapt to new tasks or classes using only a small amount of training data. A popular example is model-agnostic meta-learning (MAML), where a “meta-learner” is trained on many small tasks, each with its own training and testing examples. The goal is to teach the model to start from a good initial state, so when it encounters a new task, it can quickly learn and adjust with minimal additional training. These are not simple methods to implement.

- A more classical technique, one-class classification, is where a binary classifier (like a one-class SVM) is trained on data from only one class and learns to detect outliers during testing.

- Zero-shot approaches, such as CLIP or large language models with prompt engineering, enable classification or detection of unseen categories using textual cues (e.g., “a photo of a new product type”).

- In zero-shot cases, combine with active learning (human in the loop) to label the most informative examples.
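
The similarity-based tip above can be sketched as prototype matching in an embedding space. In this minimal example the embeddings are hand-made three-dimensional vectors standing in for the output of a trained encoder (for instance a Siamese network), and the class names are hypothetical; only the comparison step is shown, not the training of the encoder.

```python
import numpy as np

def build_prototypes(support_embeddings, support_labels):
    """Average the support embeddings of each class into one prototype per class."""
    classes = sorted(set(support_labels))
    labels = np.array(support_labels)
    protos = np.stack([support_embeddings[labels == c].mean(axis=0) for c in classes])
    return classes, protos

def cosine_similarity(a, b):
    """Pairwise cosine similarity between the rows of a and the rows of b."""
    a = a / np.linalg.norm(a, axis=1, keepdims=True)
    b = b / np.linalg.norm(b, axis=1, keepdims=True)
    return a @ b.T

def classify(query_embeddings, classes, protos):
    """Label each query with the class of its most similar prototype."""
    sims = cosine_similarity(query_embeddings, protos)
    return [classes[i] for i in sims.argmax(axis=1)]

# Two labelled "shots" per class (hypothetical class names and embeddings).
support = np.array([
    [1.0, 0.1, 0.0],
    [0.9, 0.0, 0.1],
    [0.0, 1.0, 0.1],
    [0.1, 0.9, 0.0],
])
support_labels = ["angelfish", "angelfish", "wrasse", "wrasse"]

classes, protos = build_prototypes(support, support_labels)
queries = np.array([[0.8, 0.2, 0.0], [0.1, 0.7, 0.2]])
predictions = classify(queries, classes, protos)
print(predictions)  # ['angelfish', 'wrasse']
```

Adding a new class only requires computing one more prototype from its few labelled examples, which is why this family of methods adapts without retraining.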

It’s important to maintain realistic expectations when implementing few-shot and zero-shot methods. Often, the aim is to achieve usable or “good enough” performance. As a direct comparison with traditional deep-learning (DL) methods, the following study compares both DL and few-shot learning (FSL) for classifying 20 coral reef fish species from underwater images, with applications for detecting rare species with limited available data. It should come as no surprise that the best model tested was a DL model based on ResNet. With ~3500 examples for each species the model achieved an accuracy of 78%. However, collecting this amount of data for rare species is beyond practical. Therefore, the number of samples was reduced to 315 per species, and the accuracy dropped to 42%. In contrast, the FSL model achieved similar results with as few as 5 labelled images per species, and better performance beyond 10 shots. Here, the Reptile algorithm was used, which is a meta-learning-based FSL approach. This was trained by repeatedly solving small classification problems (e.g., distinguishing a few classes) drawn from the MiniImageNet dataset (a useful benchmark dataset for FSL). During fine-tuning, the model was then trained using a few labelled examples (1 to 30 shots per species).

Weakly supervised learning describes a set of techniques for building models with noisy, inaccurate or limited sources to label large quantities of data. We can split the topic into three: incomplete, inexact, and inaccurate supervision, distinguished by the confidence in the labels. Incomplete supervision occurs when only a subset of examples has ground-truth labels. Inexact supervision involves coarse-grained labels, like labelling an MRI image as “lung cancer” without specifying detailed attributes. Inaccurate supervision arises when labels are biased or incorrect due to human error.

Why it helps:

- Partial or inaccurate data is often simpler and cheaper to get hold of.

- Enables models to learn from a larger pool of information without the need for extensive manual labelling.

- Focuses on extracting meaningful patterns or features from data that can amplify the value of any existing well-labelled examples.

Tips:

- Use a small subset of high-quality labels (or an ensemble) to correct systematic labelling errors.

- For scenarios where coarse-grained labels are available (e.g., image-level labels but not detailed instance-level labels), multi-instance learning can be employed, focusing on bag-level classification since instance-level inaccuracies are less impactful.

- Label filtering, correction, and inference techniques can mitigate label noise and minimise reliance on expensive manual labels.

The primary goal of this technique is to estimate more informative or higher dimensional data from limited information. For example, this paper presents a weakly supervised learning approach to estimating 3D human poses. The method relies on 2D pose annotations, avoiding the need for expensive 3D ground-truth data. Using an adversarial reprojection network (RepNet), the model predicts 3D poses and reprojects them into 2D views to compare with 2D annotations, minimising reprojection error. This approach leverages adversarial training to enforce plausibility of 3D poses and showcases the potential of weakly supervised methods for complex tasks like 3D pose estimation with limited labelled data.

Active learning seeks to optimise labelling efforts by identifying unlabelled samples that, once labelled, will provide the model with the most informative data. A common approach is uncertainty sampling, which selects samples where the model’s predictions are least certain. This uncertainty is often quantified using measures such as entropy or margin sampling. The process is highly iterative; each round influences the model’s next set of predictions.
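
As a small sketch of uncertainty sampling, the snippet below scores a batch of predicted class probabilities by entropy and by margin, then picks the samples to send for labelling. The probability matrix is made up for illustration and the function names are assumptions; in practice the probabilities would come from your current model.

```python
import numpy as np

def entropy_scores(proba):
    """Shannon entropy per row; higher means the model is less certain."""
    p = np.clip(proba, 1e-12, 1.0)
    return -(p * np.log(p)).sum(axis=1)

def margin_scores(proba):
    """Gap between the two most probable classes; smaller means less certain."""
    top2 = np.sort(proba, axis=1)[:, -2:]
    return top2[:, 1] - top2[:, 0]

def select_for_labelling(proba, n_queries, method="entropy"):
    """Return indices of the n_queries most uncertain samples."""
    if method == "entropy":
        order = np.argsort(-entropy_scores(proba))
    elif method == "margin":
        order = np.argsort(margin_scores(proba))
    else:
        raise ValueError(f"unknown method: {method}")
    return order[:n_queries]

# Made-up predictions for four unlabelled samples over three classes.
proba = np.array([
    [0.98, 0.01, 0.01],  # confident
    [0.40, 0.35, 0.25],  # spread over all classes
    [0.50, 0.49, 0.01],  # torn between two classes
    [0.70, 0.20, 0.10],
])

entropy_pick = select_for_labelling(proba, 2, method="entropy")
margin_pick = select_for_labelling(proba, 2, method="margin")
print("entropy:", entropy_pick)  # [1 3]
print("margin: ", margin_pick)   # [2 1]
```

The two criteria disagree here: entropy favours predictions spread across all classes, while margin favours predictions torn between the top two.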

Why it helps:

- Optimises expert time; you label fewer samples overall.

- Quickly identifies edge cases that improve model robustness.

Tips:

- Diversity sampling is an alternative selection approach that focuses on diverse areas of the feature space. For instance, clustering can be used to select a few representative samples from each cluster.

- Try to use several selection methods to avoid introducing bias.

- Introducing an expert human in the loop can be logistically difficult, as you must manage their availability alongside a labelling workflow that can be slow or expensive.

This technique has been widely used in chemical analysis and materials research, where large databases of real and simulated molecular structures and their properties have been collected over decades. These databases are particularly useful for drug discovery, where simulations like docking are used to predict how small molecules (e.g., potential drugs) interact with targets such as proteins or enzymes. However, the computational cost of performing these types of calculations over millions of molecules makes brute force studies impractical. This is where active learning comes in. One such study showed that by training a predictive model on an initial subset of docking results and iteratively selecting the most uncertain molecules for further simulations, researchers were able to drastically reduce the number of molecules tested while still identifying the best candidates.

Artificially increase the size of your dataset by applying transformations to existing examples, such as flipping or cropping images, translation or synonym replacement for text, and time shifts or random cropping for time-series. Alternatively, upsample underrepresented data with ADASYN (Adaptive Synthetic Sampling) or SMOTE (Synthetic Minority Over-sampling Technique).

Why it helps:

- The model focuses on more general and meaningful features rather than specific details tied to the training set.

- Instead of collecting and labelling more data, augmentation provides a cost-effective alternative.

- Improves generalisation by increasing the diversity of training data, helping the model learn robust and invariant features rather than overfitting to specific patterns.

Tips:

- Keep transformations domain-relevant (e.g., flipping images vertically might make sense for flower images, less so for medical X-rays).

- Take care that augmentations don’t distort the original data distribution, so the underlying patterns are preserved.

- Explore GANs, VAEs, or diffusion models to produce synthetic data, but note this often requires careful tuning, domain-aware constraints, and enough initial data.

- Synthetic oversampling (like SMOTE) can introduce noise or spurious correlations if the classes or feature space are complex and not well understood.
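
To show what synthetic minority oversampling does mechanically, here is a simplified sketch of the interpolation idea behind SMOTE: each synthetic point lies on the line segment between a minority sample and one of its nearest minority neighbours. This is an illustration written for this post's context, not the reference implementation; the function name, the choice of `k`, and the random data are assumptions.

```python
import numpy as np

def smote_like_oversample(X_minority, n_new, k=3, seed=0):
    """Create n_new synthetic points by interpolating towards nearest neighbours."""
    rng = np.random.default_rng(seed)
    n = len(X_minority)
    k = min(k, n - 1)  # cannot have more neighbours than other samples
    # Pairwise distances between minority samples, ignoring self-distances.
    d = np.linalg.norm(X_minority[:, None, :] - X_minority[None, :, :], axis=2)
    np.fill_diagonal(d, np.inf)
    neighbours = np.argsort(d, axis=1)[:, :k]
    synthetic = np.empty((n_new, X_minority.shape[1]))
    for i in range(n_new):
        base = rng.integers(n)                 # pick a random minority sample
        nb = neighbours[base, rng.integers(k)]  # pick one of its k neighbours
        gap = rng.random()                      # position along the segment
        synthetic[i] = X_minority[base] + gap * (X_minority[nb] - X_minority[base])
    return synthetic

# Eight minority-class samples with two features (random stand-in data).
rng = np.random.default_rng(1)
X_minority = rng.normal(size=(8, 2))
synthetic = smote_like_oversample(X_minority, n_new=20)
print(synthetic.shape)  # (20, 2)
```

Because every synthetic point is a blend of two real ones, the method cannot create genuinely new modes of variation, and it can blur class boundaries when minority samples sit close to the majority class.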

Data augmentation is an incredibly broad topic, with numerous surveys exploring the current state-of-the-art across various fields, including computer vision (review paper), natural language processing (review paper), and time-series data (review paper). It has become an integral component of most machine learning pipelines due to its ability to enhance model generalisation. This is particularly important for small datasets, where augmenting input data by introducing variations, such as transformations or noise, and removing redundant or irrelevant features can significantly improve a model’s robustness and performance.

In multitask learning, we train one model to solve several tasks simultaneously. This improves how well models perform by encouraging them to find patterns or features that work well for several goals at the same time. Lower layers capture general features that benefit all tasks, even if you have limited data for some.

Why it helps:

- Shared representations are learned across tasks, effectively increasing sample size.

- The model is less likely to overfit, as it must account for patterns relevant to all tasks, not just one.

- Knowledge learned from one task can provide insights that improve performance on another.

Tips:

- Tasks need some overlap or synergy to meaningfully share representations; otherwise this method will hurt performance.

- Adjust per-task weights carefully to avoid letting one task dominate training.

The scarcity of data for many practical applications of ML makes sharing both data and models across tasks an attractive proposition. This is enabled by multitask learning, where tasks benefit from shared knowledge and correlations in overlapping domains. However, it requires a large, diverse dataset that integrates multiple related properties. Polymer design is one example where this has been successful. Here, a hybrid dataset of 36 properties across 13,000 polymers, covering a mix of mechanical, thermal, and chemical characteristics, was used to train a deep-learning-based MTL architecture. The multitask model outperformed single-task models for every polymer property, particularly for underrepresented properties.

Ensembles aggregate predictions from multiple base models to improve robustness. Generally, ML algorithms can be limited in a variety of ways: high variance, high bias, and low accuracy. This manifests as different uncertainty distributions for different models during predictions. Ensemble methods limit the variance and bias errors associated with a single model; for example, bagging reduces variance without increasing the bias, while boosting reduces bias.

Why it helps:

- Diversifies “opinions” across different model architectures.

- Reduces variance, mitigating overfitting risk.

Tips:

- Avoid complex base models which can easily overfit small datasets. Instead, use regularised models such as shallow trees or linear models with added constraints to control complexity.

- Bootstrap aggregating (bagging) methods like Random Forest can be particularly useful for small datasets. By training multiple models on bootstrapped subsets of the data, you can reduce overfitting while increasing robustness. This is effective for algorithms prone to high variance, such as decision trees.

- Combine different base model types (e.g., SVM, tree-based models, and logistic regression) with a simple meta-model like logistic regression to combine predictions.
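
The bagging tip can be sketched in a few lines. This example assumes binary labels (0/1) and uses depth-one decision trees ("stumps") as a deliberately simple base model; the helper names, the number of models, and the synthetic data are illustrative assumptions, and a library Random Forest would normally replace all of this.

```python
import numpy as np

def fit_stump(X, y):
    """Find the single feature/threshold split with the lowest training error."""
    best = (np.inf, 0, 0.0, 1)
    for j in range(X.shape[1]):
        for t in np.unique(X[:, j]):
            for polarity in (1, -1):
                pred = (polarity * (X[:, j] - t) > 0).astype(int)
                err = (pred != y).mean()
                if err < best[0]:
                    best = (err, j, t, polarity)
    return best[1:]  # (feature index, threshold, polarity)

def predict_stump(stump, X):
    j, t, polarity = stump
    return (polarity * (X[:, j] - t) > 0).astype(int)

def fit_bagged(X, y, n_models=25, seed=0):
    """Train each stump on a bootstrap sample (drawn with replacement)."""
    rng = np.random.default_rng(seed)
    models = []
    for _ in range(n_models):
        idx = rng.integers(0, len(X), size=len(X))
        models.append(fit_stump(X[idx], y[idx]))
    return models

def predict_bagged(models, X):
    """Majority vote across all base models."""
    votes = np.mean([predict_stump(m, X) for m in models], axis=0)
    return (votes >= 0.5).astype(int)

# Small synthetic dataset: 40 samples, classes differ along the first feature.
rng = np.random.default_rng(3)
X = np.vstack([rng.normal(0.0, 1.0, (20, 2)),
               rng.normal(0.0, 1.0, (20, 2)) + np.array([4.0, 0.0])])
y = np.array([0] * 20 + [1] * 20)

models = fit_bagged(X, y)
accuracy = (predict_bagged(models, X) == y).mean()
print(f"bagged training accuracy: {accuracy:.2f}")
```

Using an odd number of base models avoids tied votes in the binary case. The accuracy printed here is on the training data, so it should be read as a sanity check rather than an estimate of generalisation.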

For example, the following paper highlights ensemble learning as a method to improve the classification of cervical cytology images. In this case, three pre-trained neural networks (Inception v3, Xception, and DenseNet-169) were used. The diversity of these base models ensured the ensemble benefits from each model’s unique strengths and feature extraction capabilities. This, combined with the fusion of model confidences via a method that rewards confident, accurate predictions while penalising uncertain ones, maximised the utility of the limited data. Combined with transfer learning, the final predictions were robust to the errors of any particular model, despite the small dataset used.

Process-aware (hybrid) models integrate domain-specific knowledge or physics-based constraints into ML models. This embeds prior knowledge, reducing the model’s reliance on large data to infer patterns. One example is using partial differential equations alongside neural networks for fluid dynamics.

Why it helps:

- Reduces the data needed to learn patterns that are already well understood.

- Acts as a form of regularisation, guiding the model to plausible solutions even when the data is sparse or noisy.

- Improves interpretability and trust in domain-critical contexts.

Tips:

- Regularly verify that model outputs make physical/biological sense, not just numerical sense.

- Keep domain constraints separate, but feed them as inputs or constraints in your model’s loss function.

- Be careful to balance domain-based constraints with your model’s ability to learn new phenomena.

- In practice, bridging domain-specific knowledge with data-driven methods often involves serious collaboration, specialised code, or hardware.
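
One way to picture a constraint in the loss function is the toy sketch below. It assumes a known first-order decay law, dy/dt = -k·y, as the "physics", and adds a penalty for violating it to an ordinary data-fit term. The decay law, the `weight` parameter, and the candidate curves are all assumptions chosen for illustration; real process-aware models are considerably more involved.

```python
import numpy as np

def physics_informed_loss(y_pred, y_obs, obs_idx, t, decay_rate, weight=1.0):
    """Data misfit on observed points plus a penalty for violating dy/dt = -k*y."""
    data_loss = np.mean((y_pred[obs_idx] - y_obs) ** 2)
    dydt = np.gradient(y_pred, t)           # finite-difference derivative
    residual = dydt + decay_rate * y_pred   # zero when the physics is satisfied
    physics_loss = np.mean(residual ** 2)
    return data_loss + weight * physics_loss

# Time grid and a sparse set of three "measurements" of a decaying quantity.
t = np.linspace(0.0, 5.0, 51)
k = 0.8
obs_idx = np.array([0, 25, 50])
y_obs = np.exp(-k * t[obs_idx])

# Candidate A follows the decay law; candidate B just joins the observations
# with straight lines. Both fit the three observed points exactly.
candidate_a = np.exp(-k * t)
candidate_b = np.interp(t, t[obs_idx], y_obs)

loss_a = physics_informed_loss(candidate_a, y_obs, obs_idx, t, k)
loss_b = physics_informed_loss(candidate_b, y_obs, obs_idx, t, k)
print(f"physically consistent curve: {loss_a:.5f}")
print(f"straight-line interpolation: {loss_b:.5f}")
```

With only three observations the data term cannot tell the two candidates apart; the physics term is what separates them, which is the sense in which prior knowledge reduces the data a model needs.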

Constraining a model in this way requires a deep understanding of your problem domain, and is often applied to problems where the environment the model operates in is well understood, such as physical systems. An example of this is lithium-ion battery modelling, where domain knowledge of battery dynamics is integrated into the ML process. This allows the model to capture complex behaviours and uncertainties missed by traditional physical models, ensuring physically consistent predictions and improved performance under real-world conditions like battery ageing.

For me, projects constrained by limited data are some of the most interesting projects to work on. Despite the higher risk of failure, they offer an opportunity to explore the state-of-the-art and experiment. These are tough problems! However, systematically applying the techniques covered in this post can greatly improve your odds of delivering a robust, effective model. Embrace the iterative nature of these problems: refine labels, employ augmentations, and analyse errors in quick cycles. Short pilot experiments help validate each technique’s impact before you invest further.