How many times have you spent months evaluating automation projects, enduring multiple vendor assessments, navigating lengthy RFPs, and managing complex procurement cycles, only to face underwhelming results or outright failure? You're not alone.

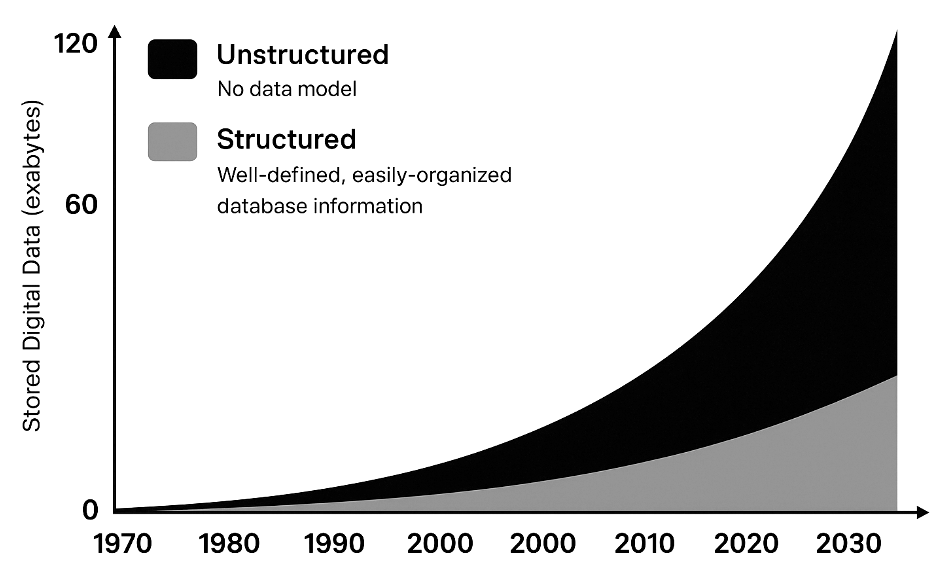

Many enterprises struggle to scale automation. The cause is not a lack of tools but data that isn't ready. In theory, AI agents and RPA bots could handle countless tasks. In practice, they fail when fed messy or unstructured inputs. Studies show that 80–90% of all enterprise data is unstructured: emails, PDFs, invoices, images, audio, and so on. This pervasive unstructured data is the real bottleneck. No matter how advanced your automation platform is, it can't reliably process what it cannot properly read or understand. In short, low automation levels are usually a data problem, not a tool problem.

Why Agents and RPA Require Structured Data

Automation tools like Robotic Process Automation (RPA) excel with structured, predictable data, neatly organized in databases, spreadsheets, or standardized forms. They falter with unstructured inputs. A typical RPA bot is essentially a rules-based engine (a "digital worker") that follows explicit instructions. If the input is a scanned document or a free-form text field, the bot doesn't inherently know how to interpret it. RPA cannot directly handle unstructured datasets, so the data must first be converted into structured form using additional techniques. In other words, an RPA bot needs a clean table of data, not a pile of documents.
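To make the contrast concrete, here is a minimal, hypothetical sketch of a single rules-based "digital worker" step. It assumes invoices arrive as dictionaries with `vendor` and `total` keys. The function name, field names, and approval limit are illustrative and are not taken from any RPA product. Given a structured record, the rule fires deterministically. Given a raw string, the bot has nothing to apply the rule to.

```python
from collections.abc import Mapping

# Hypothetical business rule: invoices at or below this amount are auto-approved.
AUTO_APPROVE_LIMIT = 5_000.00


def rpa_approve_step(record: object) -> str:
    """One rules-based bot step that can only act on named fields."""
    if not isinstance(record, Mapping):
        # A free-form string (e.g. OCR text of a scanned page) has no fields to test.
        return "exception: unstructured input, convert to fields first"
    missing = [key for key in ("vendor", "total") if key not in record]
    if missing:
        return "exception: missing fields " + ", ".join(missing)
    # The explicit rule the bot was configured with.
    if float(record["total"]) <= AUTO_APPROVE_LIMIT:
        return "approved"
    return "routed for manager approval"


print(rpa_approve_step({"vendor": "Acme", "total": 1200.0}))  # approved
print(rpa_approve_step("Invoice from Acme, total due: 1,200 USD"))
# exception: unstructured input, convert to fields first
```

The bot logic itself is trivial. All the difficulty lies in producing the dictionary.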

"RPA is best when processes involve structured, predictable data. In practice, many business documents such as invoices are unstructured or semi-structured, making automated processing difficult." Unstructured data now accounts for roughly 80% of enterprise data, which explains why so many RPA projects stall.

The same holds true for AI agents and workflow automation: they only perform as well as the data they receive. An AI customer service agent that draws answers from disorganized logs and unlabeled files will likely give wrong answers. The foundation of any successful automation or AI agent is "AI-ready" data: clean, well-organized, and ideally structured. This is why organizations that invest heavily in tools but neglect data preparation often see disappointing automation ROI.

Challenges with Traditional Data Structuring Methods

If unstructured data is the issue, why not just convert it to structured form? That is easier said than done. Traditional ways of structuring data, such as OCR, ICR, and ETL, face significant challenges:

- OCR and ICR: OCR and ICR have long been used to digitize documents, but they fall apart in real-world scenarios. Classic OCR is just pattern matching and struggles with varied fonts, layouts, tables, images, or signatures. Even top engines reach only 80–90% accuracy on semi-structured documents. That creates 1,000–2,000 errors per 10,000 documents and forces manual review on 60%+ of files. Handwriting makes it worse: ICR barely manages 65–75% accuracy on cursive. Most systems are also template-based, demanding endless rule updates for every new invoice or form format. OCR and ICR can pull text, but they can't understand context or structure at scale, which makes them unreliable for enterprise automation.

- Conventional ETL Pipelines: ETL works well for structured databases but falls apart with unstructured data. With no fixed schema, high variability, and messy inputs, traditional ETL tools need heavy custom scripting to parse natural language or images. The result? Errors, duplicates, and inconsistencies pile up. Data engineers end up spending 80% of their time cleaning and prepping data, leaving only 20% for actual analysis or AI modeling. ETL was built for rows and columns, not for today's messy, unstructured data lakes, and this slows automation and AI adoption considerably.

- Rule-Based Approaches: Older automation solutions often tried to handle unstructured information with brute-force rules. Examples include regex patterns that find keywords in text and decision rules written for specific document layouts. These approaches are extremely brittle: the moment the input differs from what was expected, the rules fail. As a result, companies end up with fragile pipelines that break every time a vendor changes an invoice format or a new text pattern appears. Maintaining these rule systems becomes a heavy burden.
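Here is a minimal sketch of the rule-based approach described above and of why it breaks. The regex, the sample texts, and `extract_total` are all hypothetical. The rule matches one vendor's `Total: 1,234.56` layout exactly. A second vendor writes `Amount due` with a European decimal comma, and that document silently falls through to manual handling.

```python
import re
from typing import Optional

# A hypothetical rule written for one vendor's layout, e.g. "Total: 1,234.56".
TOTAL_RULE = re.compile(r"Total:\s*([\d,]+\.\d{2})")


def extract_total(text: str) -> Optional[float]:
    """Apply the hard-coded rule; return None when the layout doesn't match."""
    match = TOTAL_RULE.search(text)
    if match is None:
        return None  # the rule did not fire, so a person has to handle this document
    return float(match.group(1).replace(",", ""))


vendor_a = "Invoice 1042\nTotal: 1,234.56\nThank you for your business"
# Same amount, but a new label and a comma as the decimal separator.
vendor_b = "Invoice 1043\nAmount due: EUR 1.234,56"

print(extract_total(vendor_a))  # 1234.56
print(extract_total(vendor_b))  # None
```

Each new layout requires another pattern, and that is the maintenance burden described above.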

All these factors explain why so many organizations still rely on armies of data entry staff or manual review. McKinsey observes that current document extraction tools are often "cumbersome to set up" and fail to deliver high accuracy over time, forcing companies to invest heavily in manual exception handling. In other words, even with OCR or ETL in place, you need people in the loop to fix everything the automation couldn't figure out. This cuts into the efficiency gains and also dampens employee enthusiasm, since staff are stuck correcting machine errors or doing low-value data clean-up. It's a frustrating status quo: the automation technology exists, but without clean, structured data its potential goes unrealized.
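The OCR accuracy figures quoted above translate directly into exception-handling volume. The sketch below assumes accuracy is measured per document, which the cited figures don't state explicitly. Under that assumption, the expected number of documents with an error is (1 − accuracy) × N, and this reproduces the 1,000–2,000 range for 10,000 documents. The 60%+ manual-review share is a separate claim that this arithmetic does not derive.

```python
def expected_errors(num_documents: int, accuracy: float) -> int:
    """Expected documents with an extraction error, assuming per-document accuracy."""
    if not 0.0 <= accuracy <= 1.0:
        raise ValueError("accuracy must be between 0 and 1")
    # round() absorbs floating-point noise such as 1999.9999999999995
    return round(num_documents * (1.0 - accuracy))


for acc in (0.80, 0.90):
    print(f"{acc:.0%} accuracy -> {expected_errors(10_000, acc):,} documents with errors")
# 80% accuracy -> 2,000 documents with errors
# 90% accuracy -> 1,000 documents with errors
```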

Foundational LLMs Are Not a Silver Bullet for Unstructured Data

With the rise of large language models, one might hope that they could simply "read" all the unstructured data and magically output structured records. Modern foundation models (like GPT-4) are indeed very good at understanding language and can even interpret images. However, general-purpose LLMs are not purpose-built for the three parts of the enterprise unstructured data problem: scale, accuracy, and integration. There are several reasons for this:

- Scale Limitations: Out-of-the-box LLMs cannot ingest millions of documents or entire data lakes in one go. Enterprise data often spans terabytes, far beyond what an LLM can hold in its context window at any given time. Chunking the data into smaller pieces helps, but then the model loses the "big picture" and can easily mix up or miss details. LLMs are also relatively slow and computationally expensive for processing very large volumes of text, so using them naively to parse every document can become cost-prohibitive and latency-prone.

- Lack of Reliability and Structure: LLMs generate outputs probabilistically, which means they may "hallucinate" facts or fill in gaps with plausible-sounding but incorrect data. Critical fields like an invoice total or a date need 100% precision, and a made-up value is unacceptable. Foundational LLMs don't guarantee consistent, structured output unless they are heavily constrained. Nor do they inherently know which parts of a document are important or which field labels those parts correspond to, unless they are trained or prompted in a very specific way. As one research study noted, "sole reliance on LLMs is not viable for many RPA use cases." The study's reasons are that LLMs are expensive to train, require lots of data, and are prone to errors and hallucinations without human oversight. In essence, a chatty general-purpose AI might summarize an email for you, but trusting it to extract every invoice line item with perfect accuracy, every time, is risky.

- Not Trained on Your Data: By default, foundation models learn from internet-scale text (books, web pages, etc.), not from your company's proprietary forms and vocabulary. They may not understand the specific jargon on a form or the layout conventions of your industry's documents. Fine-tuning them on your data is possible but costly and complex, and even then they remain generalists rather than specialists in document processing. As a Forbes Tech Council insight put it, an LLM on its own "doesn't know your company's data" and lacks the context of internal knowledge. You often need additional systems, such as retrieval-augmented generation or knowledge graphs, to ground the LLM in your actual data, which effectively adds a structured layer back in.
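One common safeguard against probabilistic output is to parse it strictly and check it against business rules before anything downstream consumes it. The sketch below assumes the model was prompted to return JSON with `invoice_number`, `date`, `total`, and `line_items` fields, where each line item has an `amount`. These field names and the "line items must sum to the total" cross-check are illustrative assumptions, not any vendor's schema. Any problem sends the document to a person instead of letting a hallucinated value pass silently.

```python
import json
from datetime import date
from decimal import Decimal, InvalidOperation
from typing import List

REQUIRED_FIELDS = ("invoice_number", "date", "total", "line_items")


def validate_invoice(raw_output: str) -> List[str]:
    """Return a list of problems; an empty list means the record can flow downstream."""
    try:
        record = json.loads(raw_output)
    except json.JSONDecodeError:
        return ["output is not valid JSON"]
    if not isinstance(record, dict):
        return ["output is not a JSON object"]
    problems = [f"missing field: {name}" for name in REQUIRED_FIELDS if name not in record]
    if problems:
        return problems
    try:
        date.fromisoformat(record["date"])
    except (TypeError, ValueError):
        problems.append("date is not ISO 8601 (YYYY-MM-DD)")
    try:
        # Decimal avoids float rounding when comparing money amounts.
        total = Decimal(str(record["total"]))
        items_sum = sum(
            (Decimal(str(item["amount"])) for item in record["line_items"]), Decimal("0")
        )
    except (InvalidOperation, KeyError, TypeError):
        problems.append("total or line item amounts are not numeric")
    else:
        # Cross-field check: a hallucinated total rarely matches its own line items.
        if items_sum != total:
            problems.append(f"line items sum to {items_sum}, but total is {total}")
    return problems


bad = ('{"invoice_number": "INV-1", "date": "03/01/2024", "total": 1250,'
       ' "line_items": [{"amount": 700}, {"amount": 500}]}')
print(validate_invoice(bad))
```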

In summary, foundation models are powerful, but they aren't a plug-and-play solution for parsing all of an enterprise's unstructured data into neat rows and columns. They augment intelligent data pipelines rather than replace them. Gartner analysts have also cautioned that many organizations aren't even ready to leverage GenAI on their unstructured data because of governance and quality issues. Using LLMs without fixing the underlying data is putting the cart before the horse.

Structuring Unstructured Data: Why Purpose-Built Models Are the Answer

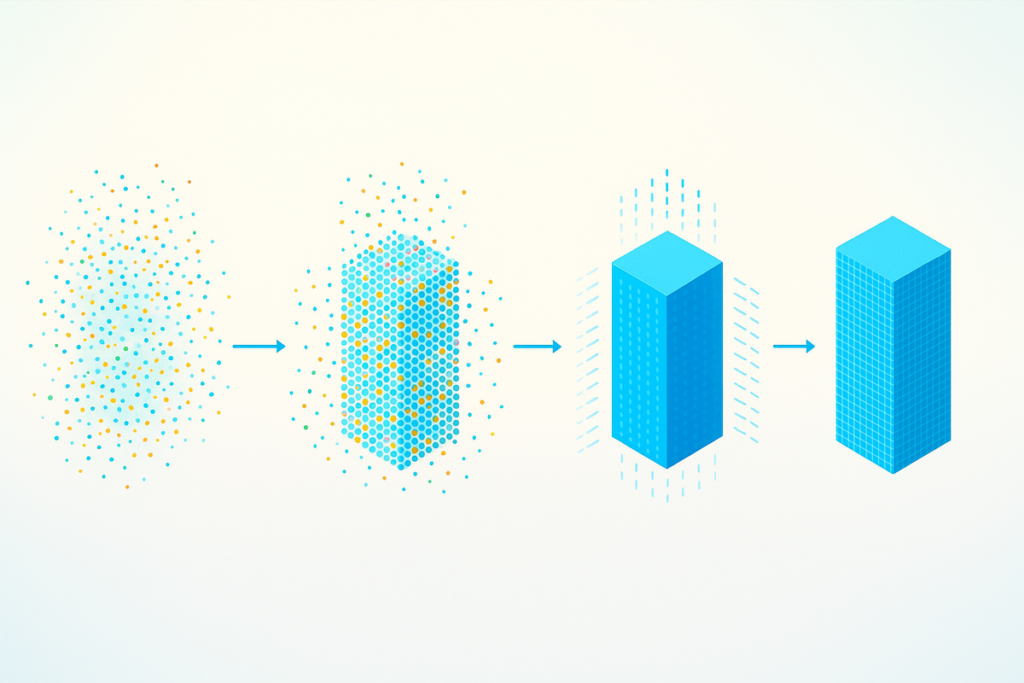

Today, Gartner and other leading analysts point to a clear shift. Traditional IDP, OCR, and ICR solutions are becoming obsolete, and large language models (LLMs) fine-tuned specifically for data extraction are replacing them. Unlike their predecessors, these purpose-built LLMs excel at interpreting the context of varied and complex documents, free of static templates and limited pattern matching.

Fine-tuned, extraction-focused LLMs use deep learning to understand document context, recognize subtle variations in structure, and consistently output high-quality, structured data. They can classify documents and extract specific fields such as contract numbers, customer names, policy details, dates, and transaction amounts. They can also validate the extracted data with high accuracy, even from handwriting, low-quality scans, or unfamiliar layouts. Crucially, these models continue to learn and improve as they process more examples, significantly reducing the need for ongoing human intervention.
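Many extraction systems report a confidence score for each field. A simple way to reduce human intervention without trusting every value blindly is to auto-accept high-confidence fields and queue only the rest for review. This sketch assumes a per-field score between 0 and 1 and a single threshold of 0.9. The `ExtractedField` shape, the sample values, and the threshold are all hypothetical, and real platforms expose their own formats.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class ExtractedField:
    name: str
    value: str
    confidence: float  # model confidence in [0, 1]; format assumed for illustration


def route_fields(
    fields: List[ExtractedField], threshold: float = 0.9
) -> Tuple[Dict[str, str], List[ExtractedField]]:
    """Split fields into auto-accepted values and a human review queue."""
    accepted: Dict[str, str] = {}
    review_queue: List[ExtractedField] = []
    for item in fields:
        if item.confidence >= threshold:
            accepted[item.name] = item.value
        else:
            review_queue.append(item)
    return accepted, review_queue


fields = [
    ExtractedField("contract_number", "C-2291", 0.98),
    ExtractedField("customer_name", "Acme Ltd", 0.95),
    ExtractedField("amount", "12,400.00", 0.62),  # e.g. read from a smudged scan
]
accepted, review_queue = route_fields(fields)
print(accepted)                        # {'contract_number': 'C-2291', 'customer_name': 'Acme Ltd'}
print([f.name for f in review_queue])  # ['amount']
```

As a model improves, fewer fields fall below the threshold, and the review queue shrinks without any change to the routing logic.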

McKinsey notes that organizations adopting these LLM-driven solutions see substantial improvements in accuracy, scalability, and operational efficiency compared with traditional OCR/ICR methods. These LLM-based extraction systems integrate into enterprise workflows and let RPA bots, AI agents, and automation pipelines work effectively on the 80% of enterprise data that was previously inaccessible because it was unstructured.

As a result, industry leaders emphasize that enterprises must pivot toward fine-tuned, extraction-optimized LLMs as a central pillar of their data strategy. Using these models to treat unstructured data with the same rigor as structured data unlocks significant value. It finally enables true end-to-end automation and lets organizations realize the full potential of GenAI technologies.

Real-World Examples: Enterprises Tackling Unstructured Data with Nanonets

How are leading enterprises solving their unstructured data challenges today? Many forward-thinking companies have deployed AI-driven document processing platforms like Nanonets with great success. The following examples show that with the right tools (and the right data mindset), even legacy, paper-heavy processes can become streamlined and autonomous:

- Asian Paints (Manufacturing): Asian Paints, one of the largest paint companies in the world, handled thousands of vendor invoices and purchase orders. It used Nanonets to automate its invoice processing workflow and cut Accounts Payable processing time by 90%. This freed up about 192 hours of manual work per month for the finance team. The AI model extracts all key fields from invoices and integrates with the company's ERP, so staff no longer spend time typing in details or correcting errors.

- JTI (Japan Tobacco International), Ukraine operations: JTI's regional team faced a very long tax refund claim process that involved shuffling large amounts of paperwork between departments and government portals. After implementing Nanonets, the team brought turnaround time down from 24 weeks to just 1 week, a 96% improvement in efficiency. A multi-month ordeal of data entry and verification became a largely automated pipeline, which dramatically sped up cash flow from tax refunds.

- Suzano (Pulp & Paper Industry): Suzano, a global pulp and paper producer, processes purchase orders from clients around the world. By integrating Nanonets into its order management, Suzano reduced the time per purchase order from about 8 minutes to 48 seconds, roughly a 90% reduction in handling time per order. Nanonets achieves this by automatically reading incoming purchase documents, which arrive in different formats, and populating Suzano's system with the required data. The result is faster order fulfillment and a lighter manual workload.

- SaltPay (Fintech): SaltPay needed to manage a vast network of 100,000+ vendors, each submitting invoices in different formats. Nanonets simplified SaltPay's vendor invoice management, reportedly saving 99% of the time previously spent on the process. A task that was once overwhelming and error-prone is now handled by AI with minimal oversight.
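The percentage reductions quoted for Suzano and JTI follow from their before-and-after figures and are easy to check. Reduction is (before − after) / before, with both values in the same unit. The helper name below is illustrative.

```python
def percent_reduction(before: float, after: float) -> float:
    """Percentage reduction from `before` to `after` (both in the same unit)."""
    if before <= 0:
        raise ValueError("before must be positive")
    return (before - after) / before * 100


# Suzano: about 8 minutes (480 s) down to 48 s per purchase order
print(f"Suzano: {percent_reduction(8 * 60, 48):.1f}%")  # Suzano: 90.0%
# JTI Ukraine: 24 weeks down to 1 week
print(f"JTI: {percent_reduction(24, 1):.1f}%")  # JTI: 95.8%
```

The JTI figure of 95.8% rounds to the 96% cited.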

These cases share a common theme: organizations that use AI-driven data extraction can supercharge their automation efforts. They save time and labor costs and also improve accuracy and scalability; one case reported 99% extraction accuracy. Employees can be redeployed to more strategic work instead of typing or verifying data all day. The automation tooling wasn't the differentiator. The key was getting the data pipeline in order with the help of specialized AI models. Once the data became accessible and clean, existing automation tools (workflows, RPA bots, analytics, etc.) could finally deliver their full value.

Clean Data Pipelines: The Foundation of the Autonomous Enterprise

In a "truly autonomous enterprise", processes run with minimal human intervention, and reaching that state requires a clean, well-structured data pipeline. Such an enterprise doesn't just need better tools; it needs better data. Automation and AI are only as good as the information they consume, and when that fuel is messy or unstructured, the engine sputters. Garbage in, garbage out is the single biggest reason automation projects underdeliver.

Forward-thinking leaders now treat data readiness as a prerequisite, not an afterthought. Many enterprises spend two to three months upfront cleaning and organizing data before starting AI projects, because skipping this step leads to poor results. In a clean data pipeline, raw inputs such as documents, sensor feeds, and customer queries are systematically collected, cleansed, and transformed into a single source of truth. That pipeline is the foundation that lets automation scale. Once it is in place, new use cases can plug into existing data streams without reinventing the wheel.
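The collect, cleanse, and transform sequence can be sketched in a few lines. This minimal example assumes two hypothetical sources that spell invoice IDs, vendor names, dates, and amounts differently. It normalizes each field into one canonical form and then deduplicates on a (vendor, invoice ID) key, so downstream tools see a single consistent record per invoice. The field names, the accepted date formats (including the day-first reading of `01/03/2024`), and the dedup key are illustrative assumptions.

```python
from datetime import datetime
from decimal import Decimal
from typing import Dict, Iterable, List, Tuple

DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%d %b %Y")  # formats assumed for this sketch


def parse_date(text: str) -> str:
    """Normalize a date string to ISO 8601 by trying each known format."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date: {text!r}")


def cleanse(record: Dict[str, str]) -> Dict[str, str]:
    """Map one raw record onto canonical field formats."""
    return {
        "invoice_id": record["invoice_id"].strip().upper(),
        "vendor": " ".join(record["vendor"].split()).title(),  # collapse spaces, fix case
        "date": parse_date(record["date"]),
        "amount": str(Decimal(record["amount"].replace(",", "").strip())),
    }


def build_single_source(raw_records: Iterable[Dict[str, str]]) -> List[Dict[str, str]]:
    """Cleanse every record and keep the first row seen per (vendor, invoice_id)."""
    unique: Dict[Tuple[str, str], Dict[str, str]] = {}
    for raw in raw_records:
        clean = cleanse(raw)
        unique.setdefault((clean["vendor"], clean["invoice_id"]), clean)
    return list(unique.values())


raw = [
    {"invoice_id": "inv-001", "vendor": "acme  ltd", "date": "2024-03-01", "amount": "1,200.00"},
    {"invoice_id": "INV-001 ", "vendor": "ACME Ltd", "date": "01/03/2024", "amount": "1200.00"},
    {"invoice_id": "inv-002", "vendor": "Globex", "date": "5 Mar 2024", "amount": "310.5"},
]
for row in build_single_source(raw):
    print(row)
```

The first two raw records describe the same invoice from different sources. After cleansing they collapse into one row, and the output has two rows instead of three.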

In contrast, organizations with siloed, inconsistent data remain trapped in partial automation and constantly rely on humans to patch gaps and fix errors. True autonomy requires clean, consistent, and accessible data across the enterprise, much as self-driving cars need proper roads before they can operate at scale.

The takeaway: the tools for automation are more powerful than ever, but data determines success. AI and RPA don't fail for lack of capability; they fail for lack of clean, structured data. Solve that, and the path opens to the autonomous enterprise and the next wave of productivity.

Sources: