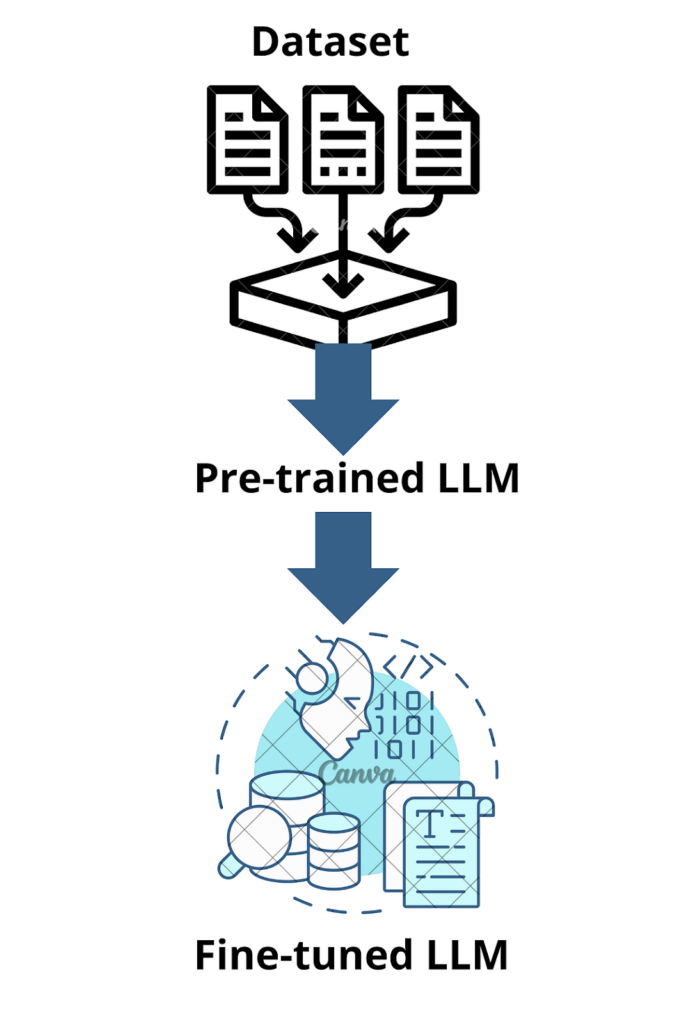

Fine-tuning is the process of taking an LLM and training it on a smaller, specialized dataset to improve its performance on a particular task, as opposed to training a model from scratch.

Domain-Specific Adaptation: General LLMs are trained on diverse datasets but may lack expertise in specific fields (e.g., legal, medical, financial).

Performance Improvement: Fine-tuning helps improve accuracy and coherence in responses.

Customization: Helps align the model’s responses with a company’s tone, branding, or specific needs.

How LLMs Work

Transformer Architecture: LLMs are based on transformers, which use self-attention mechanisms to understand context and the relationships between words.
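To make self-attention a little more concrete, here is a minimal sketch of scaled dot-product attention for a single head, written in plain Python. The toy vectors and the function names are illustrative assumptions; real models add learned query/key/value projections, many heads, and run on tensors rather than lists.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention over a short sequence (one head)."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this token's query with every token's key
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Each output is a weighted average of all value vectors
        mixed = [sum(w * v[j] for w, v in zip(weights, values))
                 for j in range(len(values[0]))]
        outputs.append(mixed)
    return outputs

# Toy 3-token sequence with 2-dimensional embeddings (made-up numbers)
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
attended = self_attention(tokens, tokens, tokens)
for row in attended:
    print([round(x, 3) for x in row])
```

Each output row blends information from every token in the sequence, which is how a transformer lets one word "look at" the others.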

Pre-training vs Fine-Tuning:

Pre-training: the model learns from massive datasets (e.g., books, Wikipedia, code).

Fine-tuning: the model adjusts its weights using a task-specific dataset.

Types of Fine-Tuning

- Full Fine-Tuning: Updating all layers of the model, which requires high computational power.

- Parameter-Efficient Fine-Tuning: Updating only a subset of parameters (e.g., LoRA, adapters) to reduce resource usage.

- Prompt Engineering & Few-Shot Learning: No fine-tuning at all; the model is guided with well-structured prompts that describe what we need.
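The saving from parameter-efficient methods is easy to see with a little arithmetic. The sketch below compares the trainable parameters of one weight matrix under full fine-tuning with those of a LoRA-style update, which keeps the original matrix frozen and trains two small low-rank matrices instead. The layer size (4096 x 4096) and the rank (8) are assumed values chosen for illustration, not figures from any particular model.

```python
def full_params(d_in, d_out):
    """Trainable weights when the whole d_out x d_in matrix is updated."""
    return d_in * d_out

def lora_params(d_in, d_out, rank):
    """Trainable weights for a low-rank update B (d_out x rank) @ A (rank x d_in)."""
    return rank * (d_in + d_out)

d_in = d_out = 4096  # hypothetical layer size
rank = 8             # hypothetical LoRA rank

full = full_params(d_in, d_out)
lora = lora_params(d_in, d_out, rank)
print(f"full: {full:,}  lora: {lora:,}  ratio: {lora / full:.2%}")
# full: 16,777,216  lora: 65,536  ratio: 0.39%
```

Under these assumptions the low-rank update trains well under 1% of the weights of that layer, which is why it fits on much smaller hardware.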

Dataset Collection

- Use existing datasets from Hugging Face or Kaggle …, or scrape the data that you need.

Dataset Cleaning and Formatting

- Remove duplicate records and special characters.

- Convert the data into formats like JSON, CSV, or Parquet.

- Structure your dataset (pair each input with its expected output).
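The cleaning steps above can be sketched with the standard library alone. This is a minimal example under stated assumptions: the allow-list of characters, the `input`/`output` field names, and the sample pairs are all made up for illustration, and a real pipeline would tune each of them to its data.

```python
import json
import re

def clean_text(text):
    """Drop characters outside a simple allow-list and collapse whitespace."""
    text = re.sub(r"[^\w\s.,?!'-]", "", text)
    return re.sub(r"\s+", " ", text).strip()

def build_records(pairs):
    """Clean each (input, output) pair and drop exact duplicates, keeping order."""
    seen = set()
    records = []
    for prompt, answer in pairs:
        record = (clean_text(prompt), clean_text(answer))
        if record in seen or not all(record):
            continue  # skip duplicates and pairs that ended up empty
        seen.add(record)
        records.append({"input": record[0], "output": record[1]})
    return records

# Hypothetical raw data with a noisy duplicate and stray symbols
raw_pairs = [
    ("What is  fine-tuning?##", "Adapting a pre-trained model to a task."),
    ("What is fine-tuning?", "Adapting a pre-trained model to a task."),
    ("Define LoRA ***", "A parameter-efficient fine-tuning method."),
]

records = build_records(raw_pairs)
jsonl = "\n".join(json.dumps(r) for r in records)  # one JSON object per line
print(jsonl)
```

Writing one JSON object per line (JSON Lines) keeps the file easy to stream and to load with common dataset tools.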

Dataset Splitting

Training data: for updating model parameters.

Validation data: for hyperparameter tuning.

Test data: for final evaluation.
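A three-way split can be sketched in a few lines. The 80/10/10 proportions, the fixed seed, and the function name are assumptions for illustration; the right ratios depend on how much data you have.

```python
import random

def split_dataset(examples, val_fraction=0.1, test_fraction=0.1, seed=42):
    """Shuffle once with a fixed seed, then carve out test, validation, and train."""
    items = list(examples)
    random.Random(seed).shuffle(items)  # seeded so the split is reproducible
    n_test = int(len(items) * test_fraction)
    n_val = int(len(items) * val_fraction)
    test_set = items[:n_test]
    val_set = items[n_test:n_test + n_val]
    train_set = items[n_test + n_val:]
    return train_set, val_set, test_set

train_set, val_set, test_set = split_dataset(range(100))
print(len(train_set), len(val_set), len(test_set))  # 80 10 10
```

Shuffling before slicing matters: if the raw data is ordered by topic or date, an unshuffled split would give the three sets different distributions.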

Hardware Requirements

Local Machines: GPUs (e.g., NVIDIA A100, RTX 4090); which one you need depends on the size of your model.
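A rough back-of-the-envelope estimate helps when matching a model to a GPU. The sketch below uses two common rules of thumb, both assumptions rather than exact figures: about 2 bytes per parameter to hold fp16 weights, and about 16 bytes per parameter for full fine-tuning with an Adam-style optimizer in mixed precision (weights, gradients, and optimizer states). Activation memory is ignored, and 7 billion is a round number standing in for a "7B" model.

```python
def weight_memory_gb(num_params, bytes_per_param):
    """Approximate memory in GB for a given per-parameter byte budget."""
    return num_params * bytes_per_param / 1e9

num_params = 7_000_000_000  # round figure for a "7B" model

inference_fp16 = weight_memory_gb(num_params, 2)   # fp16 weights only
full_finetune = weight_memory_gb(num_params, 16)   # weights + grads + optimizer states

print(f"fp16 weights:     {inference_fp16:.0f} GB")  # 14 GB
print(f"full fine-tuning: {full_finetune:.0f} GB")   # 112 GB
```

Under these assumptions, the fp16 weights of a 7B model fit on a 24 GB card such as the RTX 4090, while full fine-tuning does not, which is one reason parameter-efficient methods are popular.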

Cloud Services: Google Colab, Hugging Face Spaces, AWS, RunPod…

Installing Dependencies

- pip install transformers datasets torch accelerate peft

- If using the Hugging Face Trainer API, also install evaluate for performance metrics.

Load the Pre-Trained Model

from transformers import AutoModelForCausalLM, AutoTokenizer

# Specify the name of the pre-trained model

model_name = "mistralai/Mistral-7B-v0.1"

# Load the pre-trained causal language model

model = AutoModelForCausalLM.from_pretrained(model_name)

# Load the corresponding tokenizer

tokenizer = AutoTokenizer.from_pretrained(model_name)

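Preprocess the Data

# Some causal-LM tokenizers, Mistral's among them, define no pad token; reuse EOS so padding works

tokenizer.pad_token = tokenizer.eos_token

# Define a preprocessing function to tokenize the input text

def preprocess(example):

    tokens = tokenizer(example["text"], truncation=True, padding="max_length", max_length=512)  # 512 is an assumed cap

    tokens["labels"] = tokens["input_ids"].copy()  # causal LM: the labels are the inputs themselves

    return tokens

# Apply the preprocessing function (`dataset` is assumed to be a DatasetDict with a "text" column, loaded earlier)

dataset = dataset.map(preprocess)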
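To show what `truncation=True` and `padding="max_length"` do to each example, here is a toy sketch that works on plain lists of token IDs. It is not the tokenizer's actual implementation: the pad ID of 0, right-side padding, and the function name are assumptions for illustration.

```python
def pad_or_truncate(token_ids, max_length, pad_id=0):
    """Return (input_ids, attention_mask), both exactly max_length long."""
    ids = token_ids[:max_length]        # truncation: cut off anything too long
    mask = [1] * len(ids)               # 1 marks a real token
    padding = max_length - len(ids)     # padding: fill up anything too short
    return ids + [pad_id] * padding, mask + [0] * padding

short_ids, short_mask = pad_or_truncate([5, 6, 7], max_length=5)
long_ids, long_mask = pad_or_truncate([5, 6, 7, 8, 9, 10], max_length=5)

print(short_ids, short_mask)  # [5, 6, 7, 0, 0] [1, 1, 1, 0, 0]
print(long_ids, long_mask)    # [5, 6, 7, 8, 9] [1, 1, 1, 1, 1]
```

The attention mask lets the model ignore the padded positions, so every example in a batch can share the same length without the filler affecting the result.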

Define Training Arguments

from transformers import TrainingArguments

# Define training parameters

training_args = TrainingArguments(

    output_dir="./results", # Directory to save training results and checkpoints

    eval_strategy="epoch", # Evaluate at the end of each epoch (older transformers releases call this evaluation_strategy)

    learning_rate=5e-5, # Learning rate for optimization

    per_device_train_batch_size=4, # Batch size for training per device (GPU/CPU)

    per_device_eval_batch_size=4, # Batch size for evaluation per device

    num_train_epochs=3, # Number of training epochs

)
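It can help to estimate how many optimizer steps these settings imply before starting a long run. The sketch below is an approximation, not the Trainer's own bookkeeping; the dataset size of 10,000 examples and the `num_devices` and `grad_accum_steps` parameters are assumptions added for illustration.

```python
import math

def total_optimizer_steps(num_examples, per_device_batch_size, num_epochs,
                          num_devices=1, grad_accum_steps=1):
    """Approximate number of weight updates over the whole training run."""
    effective_batch = per_device_batch_size * num_devices * grad_accum_steps
    steps_per_epoch = math.ceil(num_examples / effective_batch)
    return steps_per_epoch * num_epochs

# Batch size 4 and 3 epochs as configured above; 10,000 examples is hypothetical
steps = total_optimizer_steps(num_examples=10_000, per_device_batch_size=4, num_epochs=3)
print(steps)  # 7500
```

Knowing the step count makes it easier to choose logging, checkpointing, and warmup intervals that fit the length of the run.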

Train the Model

from transformers import Trainer

trainer = Trainer(

    model=model, # The pre-trained model

    args=training_args, # Training configuration

    train_dataset=dataset["train"], # Training data

    eval_dataset=dataset["validation"], # Validation data

)

# Start the training process

trainer.train()

Metrics to Evaluate

Perplexity (PPL): Measures how well the model predicts text. Lower is better.

Accuracy/F1 Score: For classification tasks.

BLEU/ROUGE Scores: Measure similarity between generated and reference texts.
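Perplexity is the exponential of the average negative log-likelihood per token, so it can be computed directly from the probabilities a model assigns to the correct tokens. The sketch below uses made-up probabilities purely to illustrate the formula; in practice the log-probabilities come from the model's evaluation loss.

```python
import math

def perplexity(token_log_probs):
    """exp of the average negative log-likelihood per token."""
    avg_nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(avg_nll)

# Hypothetical probabilities assigned to the correct next token
confident = [math.log(0.9), math.log(0.8), math.log(0.95)]
unsure = [math.log(0.2), math.log(0.1), math.log(0.25)]

print(round(perplexity(confident), 2))  # close to 1: the model is rarely surprised
print(round(perplexity(unsure), 2))     # much higher: the model is often surprised
```

A model that assigned probability 0.5 to every correct token would have a perplexity of exactly 2, which is a handy way to read the number: roughly how many equally likely options the model is choosing between.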

Testing the Model

# Input text

input_text = "What's the capital of France?"

# Tokenize the input text and convert it into PyTorch tensors

inputs = tokenizer(input_text, return_tensors="pt")

# Generate a response

output = model.generate(**inputs, max_length=50)

# Decode the generated output back into human-readable text, removing special tokens

print(tokenizer.decode(output[0], skip_special_tokens=True))

Saving the Model

# Save the fine-tuned model to a directory

model.save_pretrained("./fine_tuned_model")

# Save the tokenizer to the same directory

tokenizer.save_pretrained("./fine_tuned_model")

Deployment Options

Hugging Face Spaces: Deploy using Gradio or Streamlit.

FastAPI: Build an API for inference.

ONNX / TorchScript: Optimize for fast inference. …

Reducing Model Size and Cost

- Quantization: Reduce precision from fp32 to int8 or int4.

- LoRA: Fine-tune small low-rank adapter matrices instead of the whole model.

- Distillation: Train a small model using the knowledge of a larger LLM.
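To illustrate the idea behind quantization, here is a minimal sketch of symmetric "absmax" int8 quantization on a handful of made-up weights. This is one simple scheme chosen for clarity, not necessarily what any given library does; production tools typically use per-channel or block-wise variants.

```python
def quantize_int8(weights):
    """Symmetric absmax quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0  # one float scale shared by all weights
    quantized = [round(w / scale) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate float weights from the stored integers."""
    return [q * scale for q in quantized]

weights = [0.12, -0.5, 0.33, 0.9, -0.07]  # made-up values
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print(q)
print(f"max round-trip error: {max_error:.4f}")
```

Each weight now needs one byte instead of four, at the cost of a small rounding error bounded by half the scale.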

Fine-Tuning Challenges

- Overfitting: Use dropout or other regularization techniques.

- Bias in Data: Ensure diverse data and unbiased training.

- Computational Limits: Use parameter-efficient methods when GPUs are limited.

Fine-tuning is essential for customizing LLMs.

Choose the right dataset, model, and tuning method based on your resources.

Deployment and optimization are crucial for production-ready models.