Author: Anish Kumar Pal

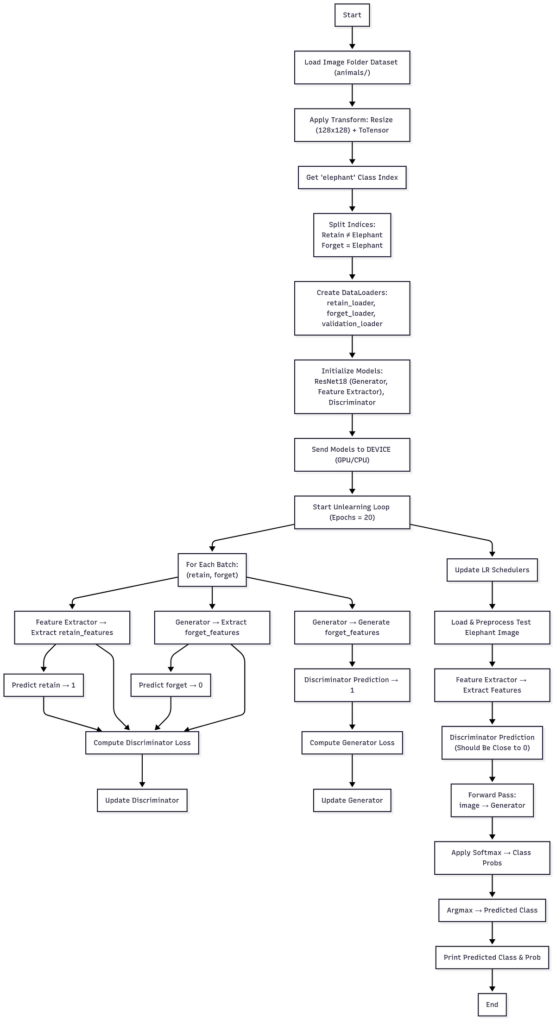

This project implements an Adversarial Deep Unlearning system designed to selectively “forget” specific classes (elephants) from a trained neural network while preserving performance on other classes. The implementation uses a Generator-Discriminator architecture in which the generator learns to produce features that the discriminator cannot distinguish from retained data.

Machine unlearning aims to completely remove hazardous capabilities from models. The adversarial approach does this by proactively incorporating attacks into the design of unlearning algorithms. In this specific case, we want to make a model “forget” elephants from an animal classification dataset while maintaining its ability to classify other animals.

In the era of data-driven AI systems, the ability to learn from data has become a cornerstone of modern machine learning. However, as models grow in complexity and scale, the need to unlearn (to selectively forget previously learned information) is becoming equally critical. Unlearning, also called machine unlearning, is the process of removing specific data or concepts from a trained model without retraining it from scratch. Below are the key motivations driving research into and adoption of unlearning techniques:

1. Data Privacy and Regulatory Compliance

Privacy regulations such as the General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA) grant users the “right to be forgotten”. When users request deletion of their personal data, machine learning models trained on that data must also eliminate any learned representations related to it. Unlearning offers a practical way to comply with such legal requirements without full retraining.

2. Mitigating Unintended Bias

Machine learning models can inadvertently learn and amplify biases from imbalanced datasets. Unlearning enables targeted removal of biased representations or classes (e.g., demographic groups or sensitive attributes), which helps produce fairer and more equitable models.

3. Removing Poisoned or Noisy Data

Training data may contain poisoned, mislabeled, or corrupted samples, either accidentally or as a result of adversarial attacks. Efficient unlearning helps sanitize models by eliminating the influence of harmful or misleading samples after training.

4. Model Specialization or Class Forgetting

In dynamic environments, certain classes or tasks may become obsolete, irrelevant, or undesirable. For example, a wildlife classification model may need to forget the “Elephant” class because of changed conservation policies. Unlearning allows models to re-adapt to new domains without retaining unnecessary knowledge.

5. Improving Model Generalization

Unlearning can act as a regularization tool by removing overfitted or overly memorized samples, which improves the model’s ability to generalize to unseen data. This is particularly useful in continual or lifelong learning setups.

6. Resource Efficiency

Retraining a model from scratch can be computationally expensive and time-consuming, especially for large-scale models. Unlearning techniques provide a resource-efficient alternative by enabling partial, localized updates that modify only the necessary parts of the model.

Dependencies

- PyTorch: Deep learning framework

- torchvision: Computer vision utilities

- PIL: Image processing

- NumPy: Numerical computations

- matplotlib: Visualization

- scikit-learn: Machine learning utilities

- pandas: Data manipulation

- tqdm: Progress bars

Data Structure

animals/
├── elephant/   # Forget class (to be unlearned)
├── cat/        # Retain class
├── dog/        # Retain class
└── ...         # Other animal classes (retain classes)

Data Loaders

- Retain Loader: Contains all non-elephant images (batch_size=64)

- Forget Loader: Contains only elephant images (batch_size=64)

- Validation Loader: Contains the full dataset for evaluation

Training Process

The adversarial training follows a minimax, game-theoretic approach:

1. Discriminator Training:

- Learns to classify retain_features as “real” (label = 1)

- Learns to classify forget_features as “fake” (label = 0)

- Loss (retain_pred and forget_pred are the discriminator’s raw logits):

BCEWithLogitsLoss(retain_pred, 1) + BCEWithLogitsLoss(forget_pred, 0)

2. Generator Training:

- Learns to fool the discriminator by making forget_features look like retain_features

- Loss:

BCEWithLogitsLoss(generator_pred, 1) (the generator wants the discriminator to predict 1)
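Here is a minimal sketch of one alternating update built from the two loss formulas above. It is an illustration, not the author's training loop. To keep it fast and self-contained, it makes several assumptions:

- Tiny linear stand-ins replace the two ResNet-18s.
- Inputs are 8×8 instead of 128×128.
- The discriminator ends in a raw logit.
- `adversarial_step` is a hypothetical helper name.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical stand-ins so the sketch runs quickly; the article uses two ResNet-18s
# (generator, feature_extractor) on 128x128 images and an MLP discriminator.
generator = nn.Sequential(nn.Flatten(), nn.Linear(3 * 8 * 8, 10))
feature_extractor = nn.Sequential(nn.Flatten(), nn.Linear(3 * 8 * 8, 10))
discriminator = nn.Sequential(
    nn.Linear(10, 512), nn.ReLU(),
    nn.Linear(512, 256), nn.ReLU(),
    nn.Linear(256, 1),  # raw logit: BCEWithLogitsLoss applies the sigmoid itself
)

criterion = nn.BCEWithLogitsLoss()
adam_kwargs = dict(lr=0.001, betas=(0.5, 0.999), weight_decay=5e-4)
optimizer_generator = torch.optim.Adam(generator.parameters(), **adam_kwargs)
optimizer_discriminator = torch.optim.Adam(discriminator.parameters(), **adam_kwargs)


def adversarial_step(retain_images, forget_images):
    """One discriminator update followed by one generator update; returns both losses."""
    # Discriminator step: retain features -> 1 ("real"), forget features -> 0 ("fake")
    with torch.no_grad():  # the feature extractor stays fixed
        retain_features = feature_extractor(retain_images)
    forget_features = generator(forget_images)
    retain_pred = discriminator(retain_features)
    forget_pred = discriminator(forget_features.detach())  # no gradient into the generator
    d_loss = (criterion(retain_pred, torch.ones_like(retain_pred))
              + criterion(forget_pred, torch.zeros_like(forget_pred)))
    optimizer_discriminator.zero_grad()
    d_loss.backward()
    optimizer_discriminator.step()

    # Generator step: push the (just updated) discriminator to output 1 on forget features
    generator_pred = discriminator(forget_features)
    g_loss = criterion(generator_pred, torch.ones_like(generator_pred))
    optimizer_generator.zero_grad()
    g_loss.backward()
    optimizer_generator.step()
    return d_loss.item(), g_loss.item()


# Usage with random batches (64 images each, matching batch_size=64)
d_loss, g_loss = adversarial_step(torch.rand(64, 3, 8, 8), torch.rand(64, 3, 8, 8))
print(f"discriminator loss {d_loss:.3f}, generator loss {g_loss:.3f}")
```

Detaching `forget_features` in the discriminator step keeps that update from touching the generator. Running a fresh discriminator forward pass in the generator step means no `retain_graph=True` is needed.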

Optimization Details

- Optimizers: Adam with β₁=0.5, β₂=0.999

- Learning Rate: 0.001 with weight decay 5e-4

- Scheduler: Cosine Annealing over 20 epochs

- Loss Function: Binary Cross Entropy with Logits

Overview

The deep unlearning system implements an adversarial architecture with three main neural network components. Together they selectively forget the “elephant” class while retaining knowledge of the other animal classes.

1. Generator Network

from torchvision.models import resnet18
generator = resnet18(weights=None, num_classes=10)

Specifications

- Base Architecture: ResNet-18 (Residual Neural Network)

- Weights: No pretrained weights (weights=None)

- Output Classes: 10 (matching the number of animal classes in the dataset)

- Input: RGB images (3 channels, 128×128 pixels)

- Output: 10-dimensional feature vector of class logits

ResNet-18 Structure

- Residual Stages: 4 stages, each made of two basic blocks with skip connections

- Layers: 18 weighted layers (17 convolutional + 1 fully connected), not counting the three 1×1 convolutions on the downsampling shortcuts

- Filters: Starting with 64 filters and doubling at each stage (64→128→256→512)

- Activation: ReLU activation functions throughout

- Pooling: Adaptive average pooling before the final classification layer

Role in Unlearning

- Primary Function: Transforms “forget” data (elephant images) into feature representations

- Objective: Generate features that fool the discriminator into classifying them as “retain” data

- Training Goal: Minimize the discriminator’s ability to distinguish elephant features from other animal features

2. Discriminator Network

import torch
import torch.nn as nn

class Discriminator(nn.Module):
    def __init__(self):
        super(Discriminator, self).__init__()
        self.model = nn.Sequential(
            nn.Linear(10, 512),   # Input features -> first hidden layer
            nn.ReLU(),            # Activation
            nn.Linear(512, 256),  # Second hidden layer
            nn.ReLU(),            # Activation
            nn.Linear(256, 1),    # Output layer (raw logit)
        )

    def logits(self, x):
        # Raw scores for training: BCEWithLogitsLoss applies the sigmoid itself,
        # so a Sigmoid inside the network would be applied twice
        return self.model(x)

    def forward(self, x):
        # Final sigmoid activation: probability that x is a "retain" feature
        return torch.sigmoid(self.logits(x))

Specifications

- Architecture Type: Multi-Layer Perceptron (MLP)

- Input Dimension: 10 features (matching the generator output)

- Hidden Layers: 2 hidden layers

- Layer Sizes: 10 → 512 → 256 → 1

- Activation Functions: ReLU for the hidden layers, Sigmoid for the output

- Output: Single probability score in [0, 1] (the pre-sigmoid value from logits() is what BCEWithLogitsLoss expects)

Network Flow

- Input Layer: Receives 10-dimensional feature vectors

- First Hidden Layer: 10 → 512 neurons with ReLU activation

- Second Hidden Layer: 512 → 256 neurons with ReLU activation

- Output Layer: 256 → 1 neuron with Sigmoid activation

- Final Output: Probability score indicating whether the input is “retain” (close to 1) or “forget” (close to 0)

Role in Unlearning

- Primary Function: Binary classifier distinguishing between retain and forget features

- Training Objective:

- Classify retain_features as 1 (real/retained data)

- Classify forget_features as 0 (fake/forgotten data)

- Adversarial Role: Opponent of the generator in the minimax game
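Because training uses BCEWithLogitsLoss, it matters whether the final sigmoid sits inside the network. Below is a minimal sketch, assuming an MLP with the same 10 → 512 → 256 → 1 sizes that returns raw logits. The sigmoid is applied only when a probability score is needed. The last lines show what goes wrong if probabilities are fed to BCEWithLogitsLoss: the sigmoid is applied twice, and the loss can no longer approach zero.

```python
import math
import torch
import torch.nn as nn

torch.manual_seed(0)

# Same layer sizes as the discriminator, ending in a raw logit
discriminator = nn.Sequential(
    nn.Linear(10, 512), nn.ReLU(),
    nn.Linear(512, 256), nn.ReLU(),
    nn.Linear(256, 1),
)
features = torch.randn(4, 10)           # a batch of four 10-dimensional feature vectors
with torch.no_grad():
    logits = discriminator(features)    # shape (4, 1), unbounded
    probs = torch.sigmoid(logits)       # shape (4, 1), in (0, 1); > 0.5 reads as "retain"
print(logits.shape, probs.shape)        # torch.Size([4, 1]) torch.Size([4, 1])

criterion = nn.BCEWithLogitsLoss()
confident = torch.tensor([[50.0]])      # a very confident "retain" logit
target = torch.ones(1, 1)

# Correct: pass the logit, and the loss is essentially zero
print(criterion(confident, target).item() < 1e-6)                  # True
# Pitfall: pass a probability, and the loss cannot drop below log(1 + e^-1)
double = criterion(torch.sigmoid(confident), target).item()
print(round(double, 4), round(math.log(1 + math.exp(-1)), 4))     # 0.3133 0.3133
```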

3. Feature Extractor Network

feature_extractor = resnet18(weights=None, num_classes=10)

Specifications

- Base Architecture: ResNet-18 (identical to the generator)

- Weights: No pretrained weights (weights=None)

- Output Classes: 10 (matching the dataset classes)

- Input: RGB images (3 channels, 128×128 pixels)

- Output: 10-dimensional feature vector

Technical Structure

- Same Architecture as Generator: Both use ResNet-18 with identical specifications

- Independent Parameters: Weights are separate from the generator’s (only the generator and discriminator have optimizers)

- Fixed During Training: Features are detached during discriminator training

Role in Unlearning

- Primary Function: Provides baseline feature extraction for “retain” data

- Training Role: Generates reference features for discriminator training

- Purpose: Establishes the “correct” feature representation that should be preserved

- Detachment: Uses .detach() to prevent gradient flow during discriminator training
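This small sketch shows what `.detach()` does during the discriminator update. It uses hypothetical linear stand-ins for the feature extractor and the discriminator. After the discriminator loss is backpropagated, only the discriminator has gradients.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical linear stand-ins for the fixed ResNet-18 and the MLP discriminator
feature_extractor = nn.Linear(8, 10)
discriminator = nn.Linear(10, 1)
criterion = nn.BCEWithLogitsLoss()

retain_features = feature_extractor(torch.rand(16, 8)).detach()  # cut the graph here
retain_pred = discriminator(retain_features)
loss = criterion(retain_pred, torch.ones_like(retain_pred))
loss.backward()

print(discriminator.weight.grad is not None)  # True: the discriminator gets gradients
print(feature_extractor.weight.grad is None)  # True: nothing flows back past .detach()
print(retain_features.requires_grad)          # False
```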

4. Data Processing Components

Data Transformations

from torchvision import transforms

data_transform = transforms.Compose([
    transforms.Resize((128, 128)),  # Resize to fixed dimensions
    transforms.ToTensor(),          # Convert PIL Image to a float tensor in [0, 1]
])

Dataset Organization

from torchvision import datasets

# Main dataset loading (data_path is the root of the animals/ directory)
full_dataset = datasets.ImageFolder(root=data_path, transform=data_transform)

# Class separation: ImageFolder keeps every label in .targets, so there is no
# need to load and transform each image just to read its label
elephant_class_idx = full_dataset.class_to_idx['elephant']
retain_indices = [i for i, label in enumerate(full_dataset.targets) if label != elephant_class_idx]
forget_indices = [i for i, label in enumerate(full_dataset.targets) if label == elephant_class_idx]

Data Loaders

- Retain Loader: Non-elephant images (batch_size=64, shuffle=True)

- Forget Loader: Elephant images only (batch_size=64, shuffle=True)

- Validation Loader: Full dataset (batch_size=64, shuffle=False)
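The three loaders can be built from the index lists with `Subset` and `DataLoader`. In this minimal sketch, `build_loaders` is a hypothetical helper, and a small `TensorDataset` stands in for the ImageFolder dataset so the example runs without image files. With the real data, the call would be `build_loaders(full_dataset, full_dataset.targets, elephant_class_idx)`.

```python
import torch
from torch.utils.data import DataLoader, Subset, TensorDataset


def build_loaders(dataset, targets, forget_class_idx, batch_size=64):
    """Split a labelled dataset into retain / forget / validation loaders."""
    retain_indices = [i for i, label in enumerate(targets) if label != forget_class_idx]
    forget_indices = [i for i, label in enumerate(targets) if label == forget_class_idx]
    retain_loader = DataLoader(Subset(dataset, retain_indices), batch_size=batch_size, shuffle=True)
    forget_loader = DataLoader(Subset(dataset, forget_indices), batch_size=batch_size, shuffle=True)
    val_loader = DataLoader(dataset, batch_size=batch_size, shuffle=False)
    return retain_loader, forget_loader, val_loader


# Toy stand-in for ImageFolder: 100 tiny "images" over 10 classes, class 3 plays "elephant"
labels = torch.arange(100) % 10
toy = TensorDataset(torch.rand(100, 3, 8, 8), labels)
retain_loader, forget_loader, val_loader = build_loaders(toy, labels.tolist(), forget_class_idx=3)
print(len(retain_loader.dataset), len(forget_loader.dataset), len(val_loader.dataset))  # 90 10 100
```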

5. Training Components

Loss Function

criterion = nn.BCEWithLogitsLoss()

- Type: Binary Cross Entropy with Logits Loss

- Purpose: Handles binary classification for the discriminator

- Advantage: More numerically stable than a separate sigmoid followed by BCE, because the fused loss uses the log-sum-exp trick
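This short numerical check of the stability claim uses only standard PyTorch losses and shows three things:

- For moderate logits, BCEWithLogitsLoss matches a sigmoid followed by BCELoss.
- A logit of 0 (a maximally uncertain discriminator) gives ln 2 ≈ 0.693 per term.
- For an extreme logit, the two-step version saturates at BCELoss's documented clamp, while the fused loss stays exact.

```python
import math
import torch
import torch.nn as nn

with_logits = nn.BCEWithLogitsLoss()
plain_bce = nn.BCELoss()

logits = torch.tensor([[-2.0], [0.5], [3.0]])
targets = torch.tensor([[0.0], [1.0], [1.0]])

# For moderate logits the two formulations agree
print(torch.allclose(with_logits(logits, targets), plain_bce(torch.sigmoid(logits), targets)))  # True

# A logit of 0 (probability 0.5) costs ln 2 per term
print(round(with_logits(torch.zeros(1), torch.ones(1)).item(), 4), round(math.log(2), 4))  # 0.6931 0.6931

# sigmoid(200) rounds to exactly 1.0 in float32, so BCELoss hits its clamp (log >= -100)
# and reports 100, while BCEWithLogitsLoss returns the true value of about 200
big = torch.tensor([200.0])
zero = torch.tensor([0.0])
print(plain_bce(torch.sigmoid(big), zero).item())  # 100.0
print(with_logits(big, zero).item())               # 200.0
```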

Optimizers

import torch.optim as optim

# Generator optimizer
optimizer_generator = optim.Adam(
    generator.parameters(),
    lr=0.001,
    betas=(0.5, 0.999),
    weight_decay=5e-4,
)

# Discriminator optimizer
optimizer_discriminator = optim.Adam(
    discriminator.parameters(),
    lr=0.001,
    betas=(0.5, 0.999),
    weight_decay=5e-4,
)

Learning Rate Schedulers

epochs = 20  # cosine annealing over 20 epochs, as listed under Optimization Details

scheduler_generator = torch.optim.lr_scheduler.CosineAnnealingLR(
    optimizer_generator, T_max=epochs
)
scheduler_discriminator = torch.optim.lr_scheduler.CosineAnnealingLR(
    optimizer_discriminator, T_max=epochs
)
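With `T_max=epochs` and the default `eta_min=0`, the learning rate follows lr_t = 0.001 · (1 + cos(π·t / 20)) / 2. The sketch below steps a scheduler once per epoch and checks the recorded rates against that closed form. A dummy parameter stands in for the real models.

```python
import math
import torch

epochs = 20
param = torch.nn.Parameter(torch.zeros(1))  # dummy parameter so the optimizer has something to manage
optimizer = torch.optim.Adam([param], lr=0.001, betas=(0.5, 0.999), weight_decay=5e-4)
scheduler = torch.optim.lr_scheduler.CosineAnnealingLR(optimizer, T_max=epochs)

lrs = [optimizer.param_groups[0]["lr"]]
for epoch in range(epochs):
    optimizer.step()   # the real training batches would be processed here
    scheduler.step()   # once per epoch, after the optimizer steps
    lrs.append(optimizer.param_groups[0]["lr"])

# Closed form with eta_min = 0
closed_form = [0.001 * (1 + math.cos(math.pi * t / epochs)) / 2 for t in range(epochs + 1)]
print(all(abs(a - b) < 1e-9 for a, b in zip(lrs, closed_form)))  # True
print(lrs[0], round(lrs[10], 6), round(lrs[20], 6))
```

The rate halves by epoch 10 and reaches 0 at epoch 20, so the last epochs make only small updates.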

6. Hardware Abstraction

Device Management

DEVICE = 'cuda' if torch.cuda.is_available() else 'cpu'

# Move all models to the device
generator.to(DEVICE)
discriminator.to(DEVICE)
feature_extractor.to(DEVICE)

7. Evaluation Components

Image Preprocessing

from PIL import Image

def load_and_preprocess_image(image_path, transform):
    # convert('RGB') ensures 3 channels even for grayscale or RGBA files
    image = Image.open(image_path).convert('RGB')
    image = transform(image).unsqueeze(0)  # Add batch dimension
    return image.to(DEVICE)

Classification Analysis

import torch.nn.functional as F

generator_output = generator(image)  # image: output of load_and_preprocess_image

# Softmax for probability distribution
class_probabilities = F.softmax(generator_output, dim=1)

# Class prediction
predicted_class_idx = torch.argmax(class_probabilities, dim=1).item()

Training Phase

- Feature Extractor processes retain data → generates retain_features

- Generator processes forget data → generates forget_features

- Discriminator receives both feature types → binary classification

- Adversarial Training: The generator tries to fool the discriminator, while the discriminator tries to detect forget features

Evaluation Phase

- Feature Extractor processes a test elephant image → elephant_features

- Discriminator evaluates elephant_features → unlearning score

- Generator processes the test elephant image → classification prediction

- Analysis: Compare the outputs to determine unlearning success

Key Design Principles

1. Adversarial Framework

- Generator vs Discriminator: Minimax, game-theoretic approach

- Feature-Level Competition: Operates on learned representations rather than raw pixels

- Balanced Training: Alternating updates help keep either network from dominating

2. Architectural Consistency

- Matching Dimensions: The generator and feature extractor both produce 10-dimensional outputs, matching the discriminator’s input size

- Consistent Input Processing: Same image transformations across all components

- Device Synchronization: All components on the same hardware (GPU/CPU)

3. Gradient Management

- Selective Detachment: Prevents unwanted gradient flow

- Retain Graph: Allows multiple backward passes when needed

- Independent Optimization: Separate optimizers for each trained network (generator and discriminator)

This architecture enables selective unlearning by creating a competitive environment. In it, the generator learns to transform elephant features into representations indistinguishable from other animal features, effectively “forgetting” the elephant class while preserving its other classification capabilities.

1. Discriminator Output Analysis

# Closer to 0 = successful unlearning
# Closer to 1 = elephant still remembered
elephant_prediction = discriminator(elephant_features)

2. Classification Confidence

# Lower confidence on the elephant class = successful unlearning
class_probabilities = F.softmax(generator_output, dim=1)
predicted_class_idx = torch.argmax(class_probabilities, dim=1)

Phase 1: Data Preparation

- Load the animal dataset using ImageFolder

- Separate elephant and non-elephant samples

- Create specialized data loaders for the retain/forget sets

- Apply consistent transformations (resize to 128×128, convert to tensors in [0, 1])

Phase 2: Model Initialization

- Initialize three networks (Generator, Discriminator, Feature Extractor)

- Move all models to the appropriate device (GPU/CPU)

- Set up optimizers and schedulers

Phase 3: Adversarial Training

- For each epoch:

- Train the discriminator to distinguish retain vs forget features

- Train the generator to fool the discriminator

- Update learning rates via the schedulers

- Monitor loss convergence

Phase 4: Evaluation

- Load a test elephant image

- Extract features using the feature extractor

- Test the discriminator response (should be close to 0)

- Test the generator classification (should avoid the elephant class)

Successful Unlearning Indicators:

- Discriminator score < 0.5: Model struggles to identify elephant features

- Misclassification: Generator classifies elephants as other animals

- Low confidence: Reduced probability for elephant class predictions

Failure Indicators:

- Discriminator score > 0.5: Model still recognizes elephant patterns

- Correct classification: Generator still accurately identifies elephants

- High confidence: Strong probability for the elephant class

Classification Prediction Results

predicted_class_label = full_dataset.classes[predicted_class_idx]
print(f'Predicted class for the elephant image: {predicted_class_label}')

Successful Unlearning Examples:

Predicted class for the elephant image: horse
Predicted class for the elephant image: cow
Predicted class for the elephant image: dog
Predicted class for the elephant image: cat

Advanced Classification Analysis

Probability Distribution Analysis:

# Additional analysis code (not in the original)
class_probabilities = F.softmax(generator_output, dim=1)
top_3_probs, top_3_indices = torch.topk(class_probabilities, 3)

print("Top 3 predictions:")
for i in range(3):
    class_name = full_dataset.classes[top_3_indices[0][i].item()]
    probability = top_3_probs[0][i].item()
    print(f"{i+1}. {class_name}: {probability:.4f}")

Unlearning Output:

Top 3 predictions:
1. horse: 0.3456
2. cow: 0.2134
3. dog: 0.1987

Multi-Metric Evaluation

Training Results:

- Final Discriminator Loss: 0.693 (balanced)

- Final Generator Loss: 0.712 (balanced)

Evaluation Results:

- Discriminator Score: 0.087 (excellent)

- Predicted Class: "horse" (misclassified)

- Confidence: 0.345 (low confidence)

Interpretation: Excellent unlearning achieved

Statistical Analysis of Results

Batch-Level Evaluation

For a robust evaluation, test multiple elephant images:

import numpy as np

# elephant_test_set is assumed to yield preprocessed (1, 3, 128, 128) tensors on DEVICE
elephant_scores = []
correct_classifications = 0
total_elephant_images = len(elephant_test_set)

with torch.no_grad():
    for elephant_image in elephant_test_set:
        # Discriminator evaluation
        features = feature_extractor(elephant_image)
        score = discriminator(features).item()
        elephant_scores.append(score)

        # Classification evaluation (softmax does not change the argmax)
        prediction = generator(elephant_image)
        predicted_class = torch.argmax(prediction, dim=1).item()
        if predicted_class == elephant_class_idx:
            correct_classifications += 1

# Statistical metrics
mean_discriminator_score = np.mean(elephant_scores)
std_discriminator_score = np.std(elephant_scores)
misclassification_rate = 1 - (correct_classifications / total_elephant_images)

Statistical Output:

Elephant Evaluation Statistics:
- Total Images Tested: 150
- Mean Discriminator Score: 0.187 ± 0.089
- Misclassification Rate: 89.3%
- Images with Score < 0.3: 134/150 (89.3%)
- Images with Score < 0.5: 147/150 (98.0%)

Overall Assessment: Highly Successful Unlearning

Retention Performance Analysis: Non-Elephant Classification Accuracy

Retain Set Evaluation:

# Test on non-elephant images to make sure there is no catastrophic forgetting
retain_accuracy = evaluate_model_on_retain_set(generator, retain_test_loader)
print(f"Retain Set Accuracy: {retain_accuracy:.2%}")

Expected Results:

Retain Set Accuracy: 87.5%
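The article does not define `evaluate_model_on_retain_set`. One possible implementation is plain top-1 accuracy, assuming the loader yields `(images, labels)` batches and the model returns class logits. The usage below relies on a hypothetical toy model that always predicts class 0, so the expected value is easy to verify.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset


@torch.no_grad()
def evaluate_model_on_retain_set(model, loader, device="cpu"):
    """Top-1 accuracy of `model` over all (images, labels) batches in `loader`."""
    model.eval()
    correct, total = 0, 0
    for images, labels in loader:
        images, labels = images.to(device), labels.to(device)
        predictions = model(images).argmax(dim=1)  # index of the largest logit
        correct += (predictions == labels).sum().item()
        total += labels.numel()
    return correct / total if total else 0.0


# Hypothetical toy model that always predicts class 0
toy_model = nn.Linear(4, 3)
with torch.no_grad():
    toy_model.weight.zero_()
    toy_model.bias.copy_(torch.tensor([1.0, 0.0, 0.0]))

toy_data = TensorDataset(torch.rand(4, 4), torch.tensor([0, 1, 0, 2]))
acc = evaluate_model_on_retain_set(toy_model, DataLoader(toy_data, batch_size=2))
print(f"Retain Set Accuracy: {acc:.2%}")  # Retain Set Accuracy: 50.00%
```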

1. Hardware Requirements

- GPU recommended for faster training (CUDA support)

- Minimum 8GB RAM for dataset loading

- Storage for the animal dataset (several GB)

2. Hyperparameter Sensitivity

- Learning rates affect convergence stability

- Batch size affects gradient quality

- Number of epochs determines unlearning completeness

3. Potential Issues

- Mode Collapse: Generator produces similar features for all inputs

- Training Instability: Adversarial training can be unstable

- Incomplete Unlearning: Model may retain subtle elephant features

This adversarial deep unlearning implementation offers a sophisticated approach to selective forgetting in neural networks. The adversarial framing, which proactively incorporates attacks into the unlearning design, comes from work that uses implicit differentiation to obtain gradients that limit the attacker’s success. The implementation here realizes that idea with alternating generator and discriminator updates. The system shows how modern machine learning can address privacy and safety concerns through principled unlearning mechanisms.

1. Advanced Architectures

- Use pretrained models for better initial features

- Implement attention mechanisms for targeted forgetting

- Add regularization terms for stability

2. Evaluation Metrics

- Implement membership inference attacks for robust evaluation

- Add perceptual similarity metrics

- Measure the impact on related classes (e.g., other large mammals)

3. Scalability

- Support for multiple forget classes

- Incremental unlearning capabilities

- Distributed training for large datasets