The Titanic dataset has become a rite of passage for anyone diving into machine learning. Its balance of categorical and numerical features makes it ideal for exploring data preprocessing, model evaluation, and deployment strategies without being overwhelmingly large or complex.

In this project, I set out to build a classification model that predicts whether a passenger would survive the Titanic disaster, using features like age, gender, fare, passenger class, and port of embarkation. But I didn’t want to stop at a notebook full of metrics; I wanted to create something that anyone could interact with.

That’s where Streamlit came in. By combining my machine learning model with Streamlit’s intuitive UI components, I turned the model into a real-time web application. Now, users can enter passenger details and instantly see whether that person would have survived, with probabilities to back it up.

Before building any model, I began by thoroughly cleaning and preparing the Titanic dataset.

- Missing Value Handling: The `Embarked` column had some missing values, which I filled using the mode. This ensured that no categorical gaps disrupted model training.

- Column Cleanup & Renaming: Several columns had inconsistent or uninformative names (like `2urvived`, `zero.1`, etc.), which I dropped or renamed to improve clarity and avoid confusion in downstream tasks.

- One-Hot Encoding: Categorical features like `Embarked` were converted into binary columns using `pd.get_dummies()`. This allowed my model to interpret ports of embarkation as numerically meaningful inputs while avoiding multicollinearity with `drop_first=True`.

- Feature Selection: I retained key numerical and encoded features: `['Age', 'Fare', 'Sex', 'SibSp', 'Parch', 'Pclass', 'Embarked_1.0', 'Embarked_2.0']`. These variables provide both demographic and journey-related insights into survival likelihood.
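The four steps above can be sketched roughly as follows. This is a minimal illustration on a few made-up rows, not the exact notebook code: the sample values, the choice to rename `2urvived` to `Survived`, and the decision to drop `zero.1` are assumptions made for the example.

```python
import pandas as pd

# Made-up rows standing in for the real CSV; only the column names follow the article.
df = pd.DataFrame({
    "Age": [22.0, 38.0, 26.0, 35.0, 54.0],
    "Fare": [7.25, 71.28, 7.92, 53.10, 51.86],
    "Sex": [0, 1, 1, 1, 0],
    "SibSp": [1, 1, 0, 1, 0],
    "Parch": [0, 0, 0, 0, 0],
    "Pclass": [3, 1, 3, 1, 1],
    "Embarked": [2.0, 2.0, None, 0.0, 1.0],
    "zero.1": [0, 0, 0, 0, 0],
    "2urvived": [0, 1, 1, 1, 0],
})

# 1. Fill missing ports with the most frequent value (the mode).
df["Embarked"] = df["Embarked"].fillna(df["Embarked"].mode()[0])

# 2. Drop an uninformative column and give the target a readable name
#    ("Survived" is an assumed name chosen for this sketch).
df = df.drop(columns=["zero.1"]).rename(columns={"2urvived": "Survived"})

# 3. One-hot encode the port; drop_first=True drops the first category
#    so the remaining dummy columns are not perfectly collinear.
df = pd.get_dummies(df, columns=["Embarked"], drop_first=True)

# 4. Keep the feature set listed in the article.
features = ["Age", "Fare", "Sex", "SibSp", "Parch", "Pclass",
            "Embarked_1.0", "Embarked_2.0"]
X = df[features]
y = df["Survived"]

print(X.head())
```

Because `Embarked` is stored as a float here, the dummy columns come out as `Embarked_1.0` and `Embarked_2.0`, which matches the feature names used later in the app.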

I trained two models:

- Logistic Regression (as a simple, interpretable baseline)

- Decision Tree Classifier (for comparison)

After initial testing, I used GridSearchCV to optimize the logistic model by tuning hyperparameters like C, solver, and max_iter. This resulted in a better-performing version, which I then used for deployment.
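A minimal sketch of that training and tuning flow is shown below. It runs on synthetic data from `make_classification` so it is self-contained; the grid values, the 80/20 split, the 5-fold cross-validation, and the accuracy scoring are all assumptions for illustration, not the settings used in the project.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.preprocessing import StandardScaler
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for the eight Titanic features.
X, y = make_classification(n_samples=400, n_features=8, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42, stratify=y
)

# Fit the scaler on the training split only, then reuse it on the test split.
scaler = StandardScaler()
X_train_scaled = scaler.fit_transform(X_train)
X_test_scaled = scaler.transform(X_test)

# Two untuned models for comparison.
baseline = LogisticRegression(max_iter=1000).fit(X_train_scaled, y_train)
tree = DecisionTreeClassifier(random_state=42).fit(X_train_scaled, y_train)

# Hypothetical grid over the hyperparameters named in the article.
param_grid = {
    "C": [0.01, 0.1, 1, 10],
    "solver": ["liblinear", "lbfgs"],
    "max_iter": [200, 500],
}
search = GridSearchCV(LogisticRegression(), param_grid, cv=5, scoring="accuracy")
search.fit(X_train_scaled, y_train)
best_model = search.best_estimator_

baseline_acc = baseline.score(X_test_scaled, y_test)
tree_acc = tree.score(X_test_scaled, y_test)
tuned_acc = best_model.score(X_test_scaled, y_test)
print("baseline:", baseline_acc, "tree:", tree_acc, "tuned:", tuned_acc)
print("best params:", search.best_params_)
```

`best_model` and `scaler` are the two objects that get pickled later, so they are named the same way here.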

To better understand and communicate how the model was performing, I generated three visuals:

The first visual, a confusion matrix, shows how the untuned logistic regression model performed on the test set.

It breaks down predictions into:

- True Positives (Survived correctly predicted as Survived)

- True Negatives (Died correctly predicted as Died)

- False Positives (Predicted Survived but actually Died)

- False Negatives (Predicted Died but actually Survived)

This early result helped identify the model’s bias toward predicting non-survival, highlighting areas for tuning.
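To make the four cells concrete, here is a small sketch using scikit-learn's `confusion_matrix` on hand-written labels. The labels are invented for the example (1 = survived, 0 = died) and are not the project's test set.

```python
from sklearn.metrics import confusion_matrix

# Invented labels: 1 = survived, 0 = died.
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 0, 1, 0, 0, 1, 0, 0]

# With labels=[0, 1], rows are actual classes and columns are predicted classes,
# so ravel() yields the cells in the order TN, FP, FN, TP.
tn, fp, fn, tp = confusion_matrix(y_true, y_pred, labels=[0, 1]).ravel()

print("true negatives: ", tn)
print("false positives:", fp)
print("false negatives:", fn)
print("true positives: ", tp)
```

In this toy case the model misses two of the four survivors, which is the kind of lean toward "died" that the untuned matrix revealed.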

The second visual is a ROC (Receiver Operating Characteristic) curve, which plots True Positive Rate vs. False Positive Rate across thresholds.

The closer the curve arcs toward the top-left corner, the better. My model’s curve showed promising separation from the random classifier baseline, indicating it wasn’t simply guessing but learning useful patterns from the data.
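As a rough illustration of where the curve's points come from, the sketch below computes them with scikit-learn's `roc_curve` and summarizes them with `roc_auc_score`. The four labels and scores are toy values, not the model's real outputs.

```python
from sklearn.metrics import roc_auc_score, roc_curve

# Toy ground truth and predicted probabilities for the positive class.
y_true = [0, 0, 1, 1]
y_score = [0.1, 0.4, 0.35, 0.8]

# Each threshold yields one (false positive rate, true positive rate) point.
fpr, tpr, thresholds = roc_curve(y_true, y_score)
auc = roc_auc_score(y_true, y_score)

for f, t in zip(fpr, tpr):
    print(f"FPR={f:.2f}  TPR={t:.2f}")
print("AUC:", auc)  # a random classifier would score about 0.5
```

Here the AUC is 0.75, because three of the four (survivor, non-survivor) pairs are ranked in the right order. Plotting `fpr` against `tpr` gives the curve itself.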

The third visual is a second confusion matrix, this time for the predictions made by the best-tuned logistic regression model.

Here, the number of true positives (174) significantly outweighed false negatives (15), meaning the model got much better at recognizing survivors: it caught 174 of 189 actual survivors, a recall of about 92%. This validated that tuning hyperparameters via GridSearchCV had tangible benefits, especially in reducing the number of incorrectly classified survivors.

Each chart helps spotlight a different part of the model’s performance:

- Confusion matrices give you a clear view of what the model gets right and wrong, class by class.

- ROC curves help evaluate how well your model balances sensitivity vs. specificity, especially in imbalanced datasets like this one.

They don’t just look good; they help you explain and justify your model’s strengths and limitations in a visual, accessible way.

After selecting the best-performing model via GridSearchCV, I needed a way to preserve and reuse it without retraining every time the app runs. That’s where Python’s pickle module comes in.

I saved both my logistic regression model and the fitted StandardScaler object using:

    import pickle

    # Save best model
    with open("titanic_best_model.pkl", "wb") as f:
        pickle.dump(best_model, f)

    # Save scaler
    with open("scaler.pkl", "wb") as f:
        pickle.dump(scaler, f)

Once these files were saved, I could simply load them in any environment and use them directly for predictions, even from a lightweight Streamlit frontend.
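The loading side is the mirror image of the saving code. The sketch below is a self-contained round trip: it trains a throwaway model on random data (an assumption made only so the example runs on its own), writes both artifacts to a temporary folder, loads them back, and checks that the predictions are unchanged.

```python
import os
import pickle
import tempfile

import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.preprocessing import StandardScaler

# Throwaway data and model, standing in for the tuned Titanic model.
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 8))
y = (X[:, 0] > 0).astype(int)
scaler = StandardScaler().fit(X)
best_model = LogisticRegression().fit(scaler.transform(X), y)

# Save both artifacts into a temporary directory.
workdir = tempfile.mkdtemp()
model_path = os.path.join(workdir, "titanic_best_model.pkl")
scaler_path = os.path.join(workdir, "scaler.pkl")
with open(model_path, "wb") as f:
    pickle.dump(best_model, f)
with open(scaler_path, "wb") as f:
    pickle.dump(scaler, f)

# Load them back, as the app would do at startup.
with open(model_path, "rb") as f:
    loaded_model = pickle.load(f)
with open(scaler_path, "rb") as f:
    loaded_scaler = pickle.load(f)

# The reloaded pair should reproduce the original predictions exactly.
same = np.array_equal(
    best_model.predict(scaler.transform(X)),
    loaded_model.predict(loaded_scaler.transform(X)),
)
print("predictions match after reload:", same)
```

Two practical cautions: pickled scikit-learn objects are best loaded with the same library version that created them, and pickle files should only be loaded from sources you trust.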

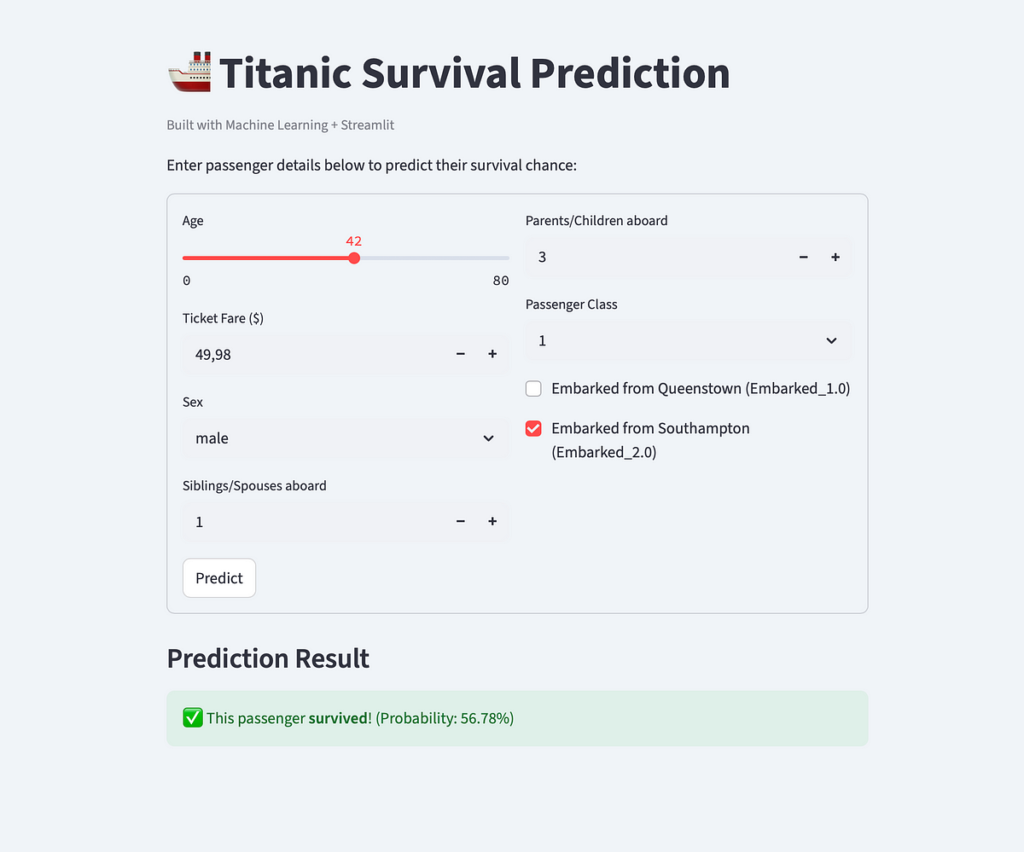

To create an interactive interface, I built a Streamlit app where users can enter passenger details and receive a real-time survival prediction. The UI includes:

- A title and description to guide users

- Form fields for each feature (`Age`, `Fare`, `Pclass`, etc.)

- A predict button that triggers model inference

- A result display with survival probability

The magic happens when user input is transformed using the scaler and passed into the model like this:

    input_scaled = scaler.transform(input_df)
    prediction = model.predict(input_scaled)[0]
    probability = model.predict_proba(input_scaled)[0][1]

This allows the app to deliver an instant prediction with visual feedback.
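For readers who want to see the whole inference path in one place, here is a hedged sketch of how form values could be turned into `input_df` and scored. The helper name `predict_survival`, the example passenger, and the stand-in model trained on random numbers are all hypothetical; only the feature order and the three inference lines come from the article.

```python
import numpy as np
import pandas as pd
from sklearn.linear_model import LogisticRegression
from sklearn.preprocessing import StandardScaler

FEATURES = ["Age", "Fare", "Sex", "SibSp", "Parch", "Pclass",
            "Embarked_1.0", "Embarked_2.0"]


def predict_survival(model, scaler, passenger):
    """Hypothetical helper: score one passenger given already-encoded values."""
    # Build a single-row frame in the exact column order used during training.
    input_df = pd.DataFrame([passenger])[FEATURES]
    input_scaled = scaler.transform(input_df)
    prediction = int(model.predict(input_scaled)[0])
    probability = float(model.predict_proba(input_scaled)[0][1])
    return prediction, probability


# Stand-in model fitted on random numbers so the sketch runs on its own;
# its output carries no meaning about real passengers.
rng = np.random.default_rng(0)
train = pd.DataFrame(rng.normal(size=(100, len(FEATURES))), columns=FEATURES)
labels = (train["Fare"] > 0).astype(int)
scaler = StandardScaler().fit(train)
model = LogisticRegression().fit(scaler.transform(train), labels)

# Example input; the 0/1 encodings for Sex and the ports are assumed here.
passenger = {"Age": 29, "Fare": 50.0, "Sex": 1, "SibSp": 0, "Parch": 0,
             "Pclass": 1, "Embarked_1.0": 0, "Embarked_2.0": 1}
prediction, probability = predict_survival(model, scaler, passenger)
print("prediction:", prediction, "probability of survival:", round(probability, 3))
```

In the Streamlit app, the dictionary values would come from widgets such as `st.number_input` and `st.selectbox`, and the call would sit behind an `st.button` so inference only runs when the user clicks predict.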

This visual gives readers a clear idea of what the deployed app looks like and how intuitive it is to use: no installation, just click and explore.

Once the model and interface were ready, it was time to share the app with the world. I chose a combination of GitHub for version control and code hosting, and Streamlit Cloud for deployment.

This way, anyone can access the app directly without cloning the repo or installing Python.

Explore the full codebase on GitHub: https://github.com/semaozylmz/titanic-survival-predictor

The project is organized into a clean and minimal structure:

    titanic/
    ├── titanic_app.py           # Streamlit application (main Python script)
    ├── titanic_best_model.pkl   # Trained Logistic Regression model
    ├── scaler.pkl               # Fitted StandardScaler object
    ├── requirements.txt         # Project dependencies
    ├── README.md                # Project overview & instructions
    └── data/
        └── titanic.csv          # (Optional) original dataset for local use

To ensure reproducibility, I listed my project’s dependencies in requirements.txt. This file is automatically picked up by Streamlit Cloud when setting up the deployment environment.

    streamlit
    pandas
    scikit-learn

- Push the code to GitHub

- Navigate to streamlit.io/cloud

- Connect your GitHub account and select the repo

- Set `titanic_app.py` as the main file

- (Optional) Customize your app URL, e.g. `titanic-survival-predictor-app`

- Hit Deploy

The app is built in seconds and becomes fully shareable with a public link.

Try it yourself: https://titanic-survival-predictor-app.streamlit.app

Accessible from any browser. No installs needed. Just enter the passenger details and find out if they’d have made it.

This project was more than just an exercise in building a classifier. It challenged me to think like a product developer: to not only train a model, but also package it, communicate it, and put it in front of users through a real interface.

Here’s what I walked away with:

- Streamlit accelerated my ability to share machine learning insights interactively, removing the friction of full-stack deployment.

- I deepened my understanding of model evaluation with tools like confusion matrices and ROC curves, not just for internal checks, but to explain decisions externally.

- I improved my ML pipeline design, from data cleaning and feature engineering to storing trained artifacts and serving them in production.

- I got to share my work in a way that’s accessible, visually understandable, and shareable with one link.

But perhaps most importantly, I realized that building real-world machine learning apps doesn’t require enormous infrastructure. With the right tools, and a bit of persistence, you can go from CSV to cloud in a weekend.

I’m currently working on deploying more projects involving NLP, classification challenges, and model interpretability. I’d also like to explore:

- Adding version control and branching for larger-scale ML apps

- Integrating user input logging for future analytics (privacy-respectful)

- Building a mini portfolio site to centralize all my apps in one place

If you’ve got feedback, ideas, or want to build something together, don’t hesitate to reach out!