Imagine Googling “how to fix a leaky faucet” and getting results like “how to repair a dripping tap” instead of just articles containing the exact words “leaky faucet.” That’s the power of semantic search.

In this post, we’ll explore what semantic search is, how it differs from traditional keyword search, and how modern AI models like BERT, Siamese networks, and sentence transformers are making search systems smarter. We’ll also walk through a practical Python example that implements semantic search using embeddings.

Semantic search refers to the process of retrieving information based on its meaning rather than merely matching keywords.

Traditional search engines rely on keyword frequency and placement. In contrast, semantic search tries to capture the intent and context behind your query.

For instance:

Query: “What’s the capital of India?”

Keyword Search Result: Pages with “capital” and “India” appearing together.

Semantic Search Result: “New Delhi”, even if the phrase “capital of India” isn’t used directly.

Traditional search engines often fail when:

- Synonyms are used (e.g., “car” vs. “automobile”)

- Questions are asked in a conversational tone

- Context is needed to disambiguate meaning (e.g., “python” the snake vs. “Python” the language)

Semantic search addresses these challenges by leveraging Natural Language Understanding (NLU).
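To make the synonym problem concrete, here is a minimal sketch of naive keyword matching. The `keyword_score` function and the sample texts are illustrative assumptions, not how any real search engine scores pages; the point is only that word overlap drops to zero when a document uses different words for the same idea.

```python
import re

def keyword_score(query: str, document: str) -> int:
    """Count how many distinct query words also appear in the document."""
    def tokenize(text):
        return set(re.findall(r"[a-z0-9]+", text.lower()))
    return len(tokenize(query) & tokenize(document))

query = "cheap car repair"
documents = [
    "cheap car repair near me",         # shares the exact words
    "affordable automobile servicing",  # same meaning, different words
]

scores = [keyword_score(query, doc) for doc in documents]
print(scores)  # [3, 0]
```

The second document is arguably just as relevant, but it scores zero because none of its words match literally.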

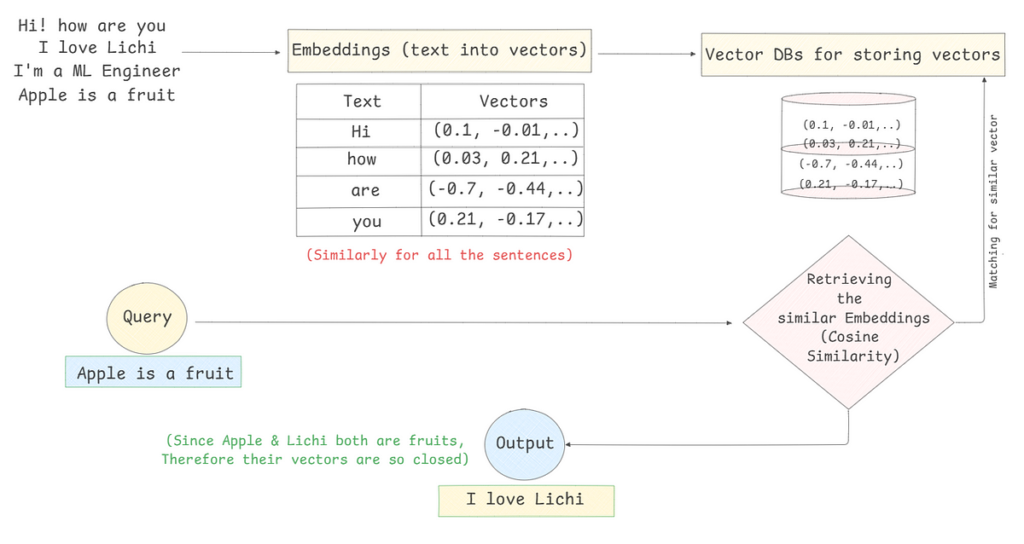

Under the hood, semantic search typically involves three steps:

- Convert queries and documents to vectors using language models.

- Store document embeddings in a vector database or index.

- Find the embeddings most similar to the query using similarity metrics (like cosine similarity).

1. From Text to Vectors: Embeddings

Using models like BERT, RoBERTa, or sentence-transformers, sentences are converted into high-dimensional vectors.

Instance:

- “How to fix a leaking faucet?” → [0.23, -0.47, ..., 0.19] (768-dim vector)

These embeddings capture semantic properties of the text. Semantically similar texts lie closer together in this vector space.

2. Storing the Vectors in Vector Databases

Pinecone, Weaviate, Qdrant, etc., are vector databases that store the embeddings so they can be retrieved easily whenever required.
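To give a feel for what such a store does, here is a toy in-memory stand-in. The class `InMemoryVectorIndex`, its method names, and the three-dimensional vectors are all made up for illustration; real vector databases expose their own APIs and use approximate nearest-neighbor indexes rather than a linear scan.

```python
import math

class InMemoryVectorIndex:
    """Toy stand-in for a vector database: keeps (id, vector) pairs in a list."""

    def __init__(self):
        self._items = []  # list of (doc_id, unit-length vector)

    @staticmethod
    def _normalize(vector):
        norm = math.sqrt(sum(x * x for x in vector))
        if norm == 0:
            raise ValueError("cannot index or query a zero vector")
        return [x / norm for x in vector]

    def add(self, doc_id, vector):
        # Store unit vectors so a plain dot product equals cosine similarity.
        self._items.append((doc_id, self._normalize(vector)))

    def search(self, query_vector, top_k=3):
        # Brute-force scan: score every stored vector, highest score first.
        q = self._normalize(query_vector)
        scored = [(doc_id, sum(a * b for a, b in zip(q, v)))
                  for doc_id, v in self._items]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:top_k]

index = InMemoryVectorIndex()
index.add("faucet-guide", [0.9, 0.1, 0.0])     # hypothetical embeddings
index.add("python-tutorial", [0.0, 0.2, 0.9])
index.add("tap-repair", [0.8, 0.3, 0.1])

results = index.search([1.0, 0.2, 0.0], top_k=2)
print([doc_id for doc_id, _ in results])  # ['faucet-guide', 'tap-repair']
```

A linear scan like this is fine for a few thousand vectors; dedicated vector databases exist because it stops scaling well beyond that.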

3. Retrieving Similar Embeddings

To compare how similar two texts are, we calculate the distance or angle between their vectors. Common similarity/distance metrics include:

a. Cosine Similarity (Most Common for NLP)

What it measures:

The cosine of the angle between two vectors (i.e., how similar their directions are).

Formula:

Cosine Similarity = (A • B) / (||A|| * ||B||)

the place:

- A • B is the dot product of vectors A and B

- ||A|| is the magnitude (size) of vector A

- ||B|| is the magnitude of vector B

Range: -1 to 1

- 1 → Vectors point in the same direction (very similar)

- 0 → Vectors are orthogonal (unrelated)

- -1 → Vectors point in opposite directions (rare in practice with text embeddings)

Why it’s useful:

Cosine similarity ignores magnitude and focuses on direction, which makes it well suited to comparing sentence or word embeddings.
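The formula translates almost line for line into code. The sketch below uses only the standard library, and the example vectors are arbitrary values chosen to hit the three landmark cases; in practice you would normally rely on a library implementation.

```python
import math

def cosine_similarity(a, b):
    """(A . B) / (||A|| * ||B||) for two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same number of dimensions")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)

a = [1.0, 2.0, 3.0]
scaled = [10.0, 20.0, 30.0]  # same direction as a, ten times longer

same = cosine_similarity(a, scaled)                       # approximately 1.0
unrelated = cosine_similarity([1.0, 0.0], [0.0, 1.0])     # 0.0
opposite = cosine_similarity([1.0, 0.0], [-1.0, 0.0])     # -1.0
print(round(same, 6), unrelated, opposite)
```

Note that scaling a vector by ten leaves the score unchanged, which is the "ignores magnitude" property in action.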

b. Euclidean Distance

What it measures:

The straight-line distance between two vectors in space.

Formula:

Euclidean Distance = square root of the sum of squared differences across all dimensions

= sqrt( (A1 - B1)² + (A2 - B2)² + … + (An - Bn)² )

Interpretation:

- Lower distance = more similar

- Higher distance = more different

Why it’s used less in NLP:

It’s sensitive to vector magnitude and not ideal when you’re only interested in direction or semantic closeness.
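A small sketch shows this magnitude sensitivity. The vectors are arbitrary illustrative values: one points in exactly the same direction as `a` but is ten times longer, the other points somewhere else but has a similar length.

```python
import math

def euclidean_distance(a, b):
    """Straight-line (L2) distance between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same number of dimensions")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def normalize(v):
    """Scale a non-zero vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

a = [1.0, 2.0, 3.0]
same_direction = [10.0, 20.0, 30.0]  # a scaled by 10
other_direction = [3.0, 2.0, 1.0]

raw_same = euclidean_distance(a, same_direction)    # about 33.67
raw_other = euclidean_distance(a, other_direction)  # about 2.83

# After normalizing to unit length, the same-direction pair collapses to ~0.
unit_same = euclidean_distance(normalize(a), normalize(same_direction))
print(round(raw_same, 2), round(raw_other, 2), round(unit_same, 6))
```

On the raw vectors, the same-direction pair looks far apart purely because of length. Normalizing the vectors first removes that effect, which is why Euclidean distance on unit vectors ranks results the same way cosine similarity does.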

c. Manhattan Distance (also called L1 distance)

What it measures:

The sum of absolute differences across all dimensions.

Formula:

Manhattan Distance = |A1 - B1| + |A2 - B2| + … + |An - Bn|

Use case:

Useful in high-dimensional or sparse data scenarios. Not very common with dense text embeddings.
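For completeness, the Manhattan formula is a one-liner in code. This is a minimal standard-library sketch with arbitrary example vectors.

```python
def manhattan_distance(a, b):
    """Sum of absolute per-dimension differences (L1 distance)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same number of dimensions")
    return sum(abs(x - y) for x, y in zip(a, b))

a = [1.0, -2.0, 3.0]
b = [4.0, 0.0, -1.0]

distance = manhattan_distance(a, b)
print(distance)  # 9.0  (|1-4| + |-2-0| + |3-(-1)| = 3 + 2 + 4)
```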

Commonly used tools for building semantic search:

- BERT & Sentence-BERT: Pretrained models that generate contextual embeddings.

- FAISS: Facebook’s library for efficient similarity search.

- Pinecone, Weaviate, Qdrant: Vector databases.

- Hugging Face Transformers: For generating embeddings from models.

# Install dependencies

pip install sentence-transformers

# Sample documents

docs = [

"How to learn Python programming?",

"Best ways to stay healthy during winter",

"Tips for fixing a leaky faucet",

"Introduction to machine learning",

"How to repair a dripping tap"

]

# Create embeddings

from sentence_transformers import SentenceTransformer, util

model = SentenceTransformer('all-MiniLM-L6-v2')

doc_embeddings = model.encode(docs, convert_to_tensor=True)

# Query & search

query = "How can I repair a leaking faucet?"

query_embedding = model.encode(query, convert_to_tensor=True)

# Score every document against the query with cosine similarity

scores = util.pytorch_cos_sim(query_embedding, doc_embeddings)

# Pick the index of the highest-scoring document

best_match_index = int(scores.argmax())

print(f"Best match: {docs[best_match_index]}")

# This should be the output:

# Best match: How to repair a dripping tap

[Figure: visual representation of the above code snippet]
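The snippet above returns only the single best match. If you want a ranked list instead, one simple option is to sort the documents by score. The sketch below is plain Python; `top_k` is a hypothetical helper, and the scores are made-up placeholder numbers rather than real model output. With the real `scores` tensor from above, something like `scores[0].tolist()` should give you an equivalent list of floats.

```python
def top_k(documents, scores, k=3):
    """Return the k highest-scoring (document, score) pairs, best first."""
    if len(documents) != len(scores):
        raise ValueError("need exactly one score per document")
    ranked = sorted(zip(documents, scores), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]

docs = [
    "How to learn Python programming?",
    "Best ways to stay healthy during winter",
    "Tips for fixing a leaky faucet",
    "Introduction to machine learning",
    "How to repair a dripping tap",
]

# Placeholder similarity scores, invented for illustration only.
example_scores = [0.10, 0.05, 0.78, 0.08, 0.81]

ranking = top_k(docs, example_scores, k=2)
for doc, score in ranking:
    print(f"{score:.2f}  {doc}")
```

Returning a few top results rather than one is usually more forgiving, since the second or third hit is often just as useful to the person searching.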

Typical applications of semantic search include:

- E-commerce: “Affordable laptop for students” → Results that include budget notebooks

- Customer Support: Match tickets to relevant knowledge base articles

- Recruitment Platforms: Match resumes to job descriptions

- Chatbots: Retrieve context-aware answers from documentation

Semantic search isn’t just a buzzword; it’s transforming the way we find information. Whether you’re building a smart search feature in your app or optimizing your content for voice search, understanding semantics is now essential.

As language models grow more powerful, the line between “search” and “understanding” continues to blur. And that’s exactly what makes this space so exciting.