Now that our dataset is clean and preprocessed (thanks to Milestone 1), it's time to build machine learning models to predict song popularity based on features like streams, playlists, and audio characteristics.

We need to predict whether a song is popular (a hit) or not.

- We define popularity based on the number of streams.

- We convert `streams` into a binary classification target (popular vs. not popular).

- We drop any missing values to clean the dataset.

The code labels the top 10% most-streamed songs as popular/hit songs.
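The labeling step can be sketched with pandas as below. This is a minimal illustration, not the post's actual code: the function name `add_hit_label` and the toy data are hypothetical, and the cutoff is a `quantile` parameter because the post describes both a top-10% cutoff (`quantile=0.9`) and a median split (`quantile=0.5`).

```python
import pandas as pd


def add_hit_label(df: pd.DataFrame, quantile: float = 0.9) -> pd.DataFrame:
    """Drop rows with missing values and add a binary 'hit' column.

    quantile=0.9 marks the top 10% most-streamed songs as hits;
    quantile=0.5 would split at the median instead.
    """
    # In case streams was read as text, coerce it to numbers (bad entries become NaN)
    df = df.assign(streams=pd.to_numeric(df["streams"], errors="coerce"))
    df = df.dropna().copy()
    threshold = df["streams"].quantile(quantile)
    df["hit"] = (df["streams"] > threshold).astype(int)
    return df


# Hypothetical toy data: 11 songs, one with a missing stream count
songs = pd.DataFrame({
    "streams": [120, 5_000, 800, None, 30_000, 2_500, 640, 90, 15_000, 1_100, 410],
    "bpm": [100, 120, 95, 130, 128, 110, 90, 85, 124, 105, 99],
})

labeled = add_hit_label(songs, quantile=0.9)
print(len(labeled), labeled["hit"].sum())  # 10 rows remain, 1 of them is a hit
```

With `quantile=0.5` the same 10 songs would yield 5 hits, so the choice of cutoff also determines how imbalanced the two classes are.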

Features are then selected for use in predicting popularity.

✅ Now we have a classification problem:

- 1 → Popular song/Hit song (above median streams)

- 0 → Not popular song/Not a hit (below median streams)

Before training, we split our dataset into a training set (80%) and a test set (20%).

Stratified sampling ensures that the training and test sets keep the same proportion of popular and non-popular songs as the full dataset. (It preserves the class ratio; it does not make the classes equal in size.)
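A minimal sketch of the stratified 80/20 split, assuming scikit-learn's `train_test_split` (the post does not name its library). The data here is synthetic, with roughly 10% hits, purely to show that the hit rate is preserved in both splits.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Synthetic stand-in data: 1,000 songs, 4 features, roughly 10% hits
rng = np.random.default_rng(42)
X = rng.normal(size=(1000, 4))
y = (rng.random(1000) < 0.1).astype(int)

# stratify=y keeps the hit/non-hit ratio the same in both splits
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=42
)

print(f"hit rate overall: {y.mean():.3f}")
print(f"hit rate train:   {y_train.mean():.3f}")
print(f"hit rate test:    {y_test.mean():.3f}")
```

Without `stratify=y`, a small test set could by chance contain noticeably more or fewer hits than the full dataset, which would distort the evaluation metrics.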

Since this is a classification problem, we chose to try the following models:

✅ Logistic Regression: simple and interpretable.

✅ Random Forest: can handle complex relationships.

We used Logistic Regression to predict whether a song is popular based on features like BPM, danceability, energy, and acousticness.
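The sketch below shows one way to train such a model with scikit-learn. The column names (`bpm`, `danceability`, `energy`, `acousticness`) are hypothetical and the data and labels are synthetic, generated so that danceable, energetic songs are more likely to be hits. `streams` is deliberately left out of the features, since the hit label is derived from it.

```python
import numpy as np
import pandas as pd
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

FEATURES = ["bpm", "danceability", "energy", "acousticness"]  # hypothetical column names

rng = np.random.default_rng(0)
n = 1000
songs = pd.DataFrame({
    "bpm": rng.uniform(60, 180, n),
    "danceability": rng.uniform(0, 100, n),
    "energy": rng.uniform(0, 100, n),
    "acousticness": rng.uniform(0, 100, n),
})
# Synthetic label: higher danceability and energy raise the chance of a hit
logit = 0.05 * (songs["danceability"] - 50) + 0.04 * (songs["energy"] - 50) - 1.5
songs["hit"] = (rng.random(n) < 1 / (1 + np.exp(-logit))).astype(int)

X_train, X_test, y_train, y_test = train_test_split(
    songs[FEATURES], songs["hit"], test_size=0.2, stratify=songs["hit"], random_state=42
)

# Scaling puts BPM (60-180) and the 0-100 features on comparable scales
log_reg = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))
log_reg.fit(X_train, y_train)

print("test accuracy:", round(log_reg.score(X_test, y_test), 3))

# Coefficients on standardized features: sign shows direction of the effect
coefs = dict(zip(FEATURES, log_reg[-1].coef_[0]))
print(coefs)
```

Because the features are standardized, the coefficient magnitudes can be compared with each other, which is where the interpretability of Logistic Regression comes from.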

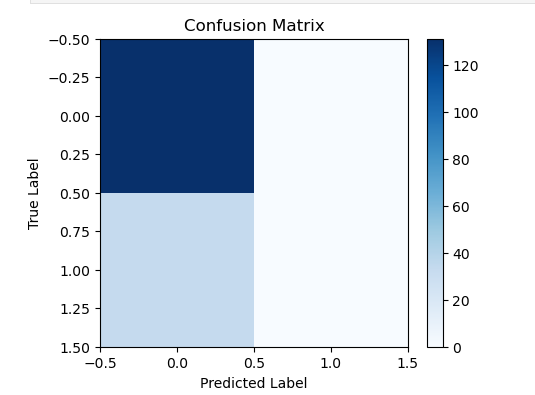

The code for the confusion matrix is at the bottom.

The confusion matrix helps us understand false positives and false negatives.
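To make the false positive / false negative idea concrete, here is a small sketch using scikit-learn's `confusion_matrix` on hypothetical labels for 10 test songs (not results from the post). Rows are true classes and columns are predicted classes, so for labels `[0, 1]` the flattened matrix is `tn, fp, fn, tp`.

```python
from sklearn.metrics import confusion_matrix

# Hypothetical true labels and predictions for 10 test songs (1 = hit)
y_true = [0, 0, 1, 1, 0, 1, 0, 0, 1, 0]
y_pred = [0, 1, 1, 0, 0, 1, 0, 0, 1, 0]

cm = confusion_matrix(y_true, y_pred, labels=[0, 1])
tn, fp, fn, tp = cm.ravel()

print(cm)  # [[5 1]
           #  [1 3]]
print(f"false positives (non-hits predicted as hits): {fp}")  # 1
print(f"false negatives (hits the model missed): {fn}")       # 1
```

To draw the matrix as a heatmap, `sklearn.metrics.ConfusionMatrixDisplay.from_predictions(y_true, y_pred)` plots the same counts with matplotlib.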

We used Random Forest to predict whether a song is a “hit” or “not a hit” based on its features.

Random Forest is an ensemble learning method that trains multiple decision trees and averages their predictions.
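The averaging can be checked directly, assuming scikit-learn's `RandomForestClassifier`: its `predict_proba` is the mean of the class probabilities predicted by the individual trees in `estimators_`. The data below is synthetic, standing in for the song features.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

# Synthetic stand-in for the song features and hit labels
X, y = make_classification(n_samples=500, n_features=6, random_state=0)

forest = RandomForestClassifier(n_estimators=50, random_state=0)
forest.fit(X, y)

# Collect each tree's class probabilities: shape (n_trees, n_samples, n_classes)
per_tree = np.stack([tree.predict_proba(X) for tree in forest.estimators_])
manual_average = per_tree.mean(axis=0)

# The forest's probabilities equal the per-tree average
print(np.allclose(manual_average, forest.predict_proba(X)))
```

Averaging many trees, each trained on a bootstrap sample with random feature subsets, reduces the variance that a single deep decision tree would have.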

The Random Forest code:

1. Makes Predictions on the test set.

2. Evaluates Performance (Accuracy, Classification Report, and Confusion Matrix).

3. Shows Feature Importance (which features contribute most to the model).

4. Visualizes the Confusion Matrix.
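Steps 1 to 3 above might look like the following sketch, assuming scikit-learn. Column names are hypothetical and the data is synthetic, with hits driven mostly by danceability, so the importance ranking here reflects that construction rather than any real finding.

```python
import numpy as np
import pandas as pd
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score, classification_report, confusion_matrix
from sklearn.model_selection import train_test_split

FEATURES = ["bpm", "danceability", "energy", "acousticness"]  # hypothetical column names

# Synthetic stand-in data; the hit label depends mainly on danceability
rng = np.random.default_rng(1)
n = 1000
X = pd.DataFrame({
    "bpm": rng.uniform(60, 180, n),
    "danceability": rng.uniform(0, 100, n),
    "energy": rng.uniform(0, 100, n),
    "acousticness": rng.uniform(0, 100, n),
})
y = (X["danceability"] + rng.normal(0, 10, n) > 75).astype(int)

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=42
)

rf = RandomForestClassifier(n_estimators=200, random_state=42)
rf.fit(X_train, y_train)

# 1. Predictions on the test set
y_pred = rf.predict(X_test)

# 2. Performance: accuracy, per-class report, confusion matrix
acc = accuracy_score(y_test, y_pred)
print(f"accuracy: {acc:.3f}")
print(classification_report(y_test, y_pred, target_names=["not hit", "hit"]))
print(confusion_matrix(y_test, y_pred))

# 3. Feature importance (impurity-based; values sum to 1)
importances = pd.Series(rf.feature_importances_, index=FEATURES).sort_values(ascending=False)
print(importances)
```

For step 4, `sklearn.metrics.ConfusionMatrixDisplay.from_estimator(rf, X_test, y_test)` draws the matrix with matplotlib. Note that impurity-based importances can overstate features with many distinct values; `sklearn.inspection.permutation_importance` is a common cross-check.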

Precision: Of the songs predicted as hits, how many were actually hits?

Recall: How many actual hits were correctly predicted?

F1-score: Balance of precision and recall (their harmonic mean).
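A worked example of these metrics on hypothetical labels for 8 test songs (not the post's results), computed by hand and then compared with scikit-learn's `precision_score`, `recall_score`, and `f1_score`, which by default score the positive class (1 = hit).

```python
from sklearn.metrics import f1_score, precision_score, recall_score

# Hypothetical labels for 8 test songs (1 = hit)
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 1, 0, 1, 0, 1, 1, 0]

pairs = list(zip(y_true, y_pred))
tp = sum(t == 1 and p == 1 for t, p in pairs)  # 3 hits correctly found
fp = sum(t == 0 and p == 1 for t, p in pairs)  # 2 non-hits flagged as hits
fn = sum(t == 1 and p == 0 for t, p in pairs)  # 1 hit missed

precision = tp / (tp + fp)                          # 3 / 5 = 0.6
recall = tp / (tp + fn)                             # 3 / 4 = 0.75
f1 = 2 * precision * recall / (precision + recall)  # harmonic mean, about 0.667

print(precision, precision_score(y_true, y_pred))
print(recall, recall_score(y_true, y_pred))
print(f1, f1_score(y_true, y_pred))
```

The harmonic mean pulls F1 toward the smaller of the two values, so a model cannot score well on F1 by maximizing only precision or only recall.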

- Random Forest is robust and works well with high-dimensional data; combined with class weighting, it can also handle imbalanced data.

- Feature importance helps identify which factors contribute most to predicting a hit.

- If recall for hits (class 1) is low, data balancing or hyperparameter tuning may be needed.
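One way to combine balancing and tuning is sketched below, assuming scikit-learn: `class_weight="balanced"` reweights the rare hit class (a form of balancing; resampling with a separate package such as imbalanced-learn is another option not shown), and `GridSearchCV` searches a few hypothetical hyperparameter values. The data is synthetic with roughly 10% hits. Scoring on `"f1"` rather than `"recall"` is a design choice here: optimizing recall alone can reward a model that labels almost everything a hit.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV, StratifiedKFold

# Synthetic imbalanced stand-in: roughly 10% hits
X, y = make_classification(
    n_samples=1000, n_features=8, n_informative=4, weights=[0.9, 0.1], random_state=0
)

# Hypothetical search space
param_grid = {
    "class_weight": [None, "balanced"],  # "balanced" upweights the rare hit class
    "max_depth": [None, 5],
    "min_samples_leaf": [1, 5],
}

search = GridSearchCV(
    RandomForestClassifier(n_estimators=50, random_state=0),
    param_grid,
    scoring="f1",  # "recall" also works, but may sacrifice precision
    cv=StratifiedKFold(n_splits=5, shuffle=True, random_state=0),
)
search.fit(X, y)

print(search.best_params_)
print(f"best cross-validated F1: {search.best_score_:.3f}")
```

Whichever settings win, the final check should still be on the held-out test set, since the cross-validated score was used to pick the parameters.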

✅ Converted `streams` into a binary classification problem (popular vs. not popular).

✅ Trained two models: Logistic Regression and Random Forest.

✅ Evaluated model performance using accuracy, precision, recall, and F1-score.

Check out our repository on GitHub!