I. Introduction: The Double-Edged Sword of GenAI

The development of enterprise automation through Generative AI (GenAI) enables virtual assistants and chatbots to understand user intent, so they can generate appropriate responses and predictive actions. The promising benefits of continuous, intelligent interaction at scale come with several ethical challenges, including biased outputs, misinformation, regulatory non-compliance, and user mistrust. Deploying GenAI is no longer a question of capability; it has become a matter of responsibility and appropriate implementation. A McKinsey report indicates that more than half of enterprises have started using GenAI tools, primarily for customer service and operational applications. As the technology scales, so do its effects on fairness standards, security measures, and compliance requirements. GenAI chatbots are already transforming private and public interactions, from virtual agents in banking to multilingual government helplines.

II. Enterprise-Grade Chatbots: A New Class of Accountability

Consumer applications can usually tolerate chatbot errors without serious consequences. The risks in enterprise environments such as finance, healthcare and government are much greater. A flawed output can lead to misinformation, compliance violations, or even legal penalties. Ethical behavior isn’t just a social obligation; it’s a business-critical imperative. Enterprises need frameworks to ensure that AI systems respect user rights, comply with regulations, and maintain public trust.

III. From Prompt to Output: Where Ethics Begins

Every GenAI system begins with a prompt, but what happens between input and output is a complex web of training data, model weights, reinforcement logic, and risk mitigation. Ethical concerns can emerge at any step:

- Ambiguous or culturally biased prompts

- Non-transparent decision paths

- Responses based on outdated or inaccurate data

Without robust filtering and interpretability mechanisms, enterprises may unwittingly deploy systems that reinforce harmful biases or fabricate information.

IV. Ethical Challenges in GenAI-Powered Chatbots

- Training on historical data tends to reinforce existing social and cultural biases.

- LLMs can produce responses that contain both factual inaccuracies and fabricated content.

- Unintended model behavior can result in the leakage of sensitive enterprise or user information.

- A lack of multilingual and cross-cultural understanding in GenAI systems alienates users from different cultural backgrounds.

- GenAI systems often lack built-in moderation, which allows them to generate inappropriate or coercive messages.

- Unverified AI-generated content can spread false or misleading information rapidly across regulated sectors.

- Because these models function as black boxes, their lack of auditability makes it difficult to trace the source of a particular output.

These challenges vary in severity and take different forms depending on the industry. Hallucinated data in a retail chatbot might confuse customers; in healthcare, the same error could have fatal consequences.
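One common mitigation for the data-leakage risk listed above is to redact obvious personally identifiable information (PII) from prompts and responses before they are logged or displayed. The sketch below is a minimal, regex-based illustration only: the `redact_pii` helper and its patterns are hypothetical and far from exhaustive, and production systems typically rely on dedicated PII-detection services.

```python
import re

# Hypothetical, deliberately simple patterns; real PII detection needs far broader coverage.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    # 13 to 16 digits, optionally separated by single spaces or dashes, digit at both ends.
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}


def redact_pii(text: str) -> tuple[str, dict[str, int]]:
    """Replace each match with a placeholder tag and count matches per PII type."""
    counts: dict[str, int] = {}
    for label, pattern in PII_PATTERNS.items():
        text, n = pattern.subn(f"[{label}]", text)
        if n:
            counts[label] = n
    return text, counts


if __name__ == "__main__":
    print(redact_pii("Contact jane.doe@example.com about SSN 123-45-6789."))
```

The per-type counts can feed the monitoring and audit tooling discussed later, so that leakage attempts are measured rather than silently masked.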

V. Design Principles for Responsible Chatbot Development

Building ethical chatbots requires embedding values directly into the design process, going beyond basic bug fixing.

- Guardrails & Prompt Moderation: Restrict topics, response tone, and scope

- Human-in-the-Loop: Route sensitive decisions to humans for verification

- Explainability Modules: Enable transparency into how responses are generated

- Diverse Training Data: Include diverse and representative examples to prevent one-dimensional learning

- Audit Logs & Version Control: Ensure traceability of model behavior

- Fairness Frameworks: Tools like IBM’s AI Fairness 360 can help test for unintended bias in NLP outputs

- Real-Time Moderation APIs: Services like OpenAI’s Moderation API or Microsoft Azure AI Content Safety help filter unsafe responses before users see them
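For the audit-log and version-control principle, one lightweight pattern is an append-only log where each entry records the model version and the hash of the previous entry, which makes later tampering detectable. This is a minimal sketch under assumptions: the `AuditLog` class, its field names, and the in-memory storage are hypothetical, and a real deployment would persist entries to write-once storage.

```python
import hashlib
import json
import time
from dataclasses import dataclass, field

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    """SHA-256 over canonical JSON, so any edit to a field changes the digest."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


@dataclass
class AuditLog:
    """Append-only, hash-chained log of chatbot interactions (hypothetical schema)."""
    entries: list[dict] = field(default_factory=list)

    def append(self, model_version: str, prompt: str, response: str, flags: list[str]) -> dict:
        record = {
            "ts": time.time(),
            "model_version": model_version,  # ties each output to an exact model build
            "prompt": prompt,
            "response": response,
            "flags": flags,
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS_HASH,
        }
        record["hash"] = _digest(record)  # computed before the "hash" key exists
        self.entries.append(record)
        return record

    def verify(self) -> bool:
        """Recompute every digest and check each link back to the previous entry."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True
```

Because each hash covers the previous one, editing any historical entry breaks `verify()` for the whole chain from that point on.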

VI. Governance and Policy Integration

All enterprise deployments must comply with both internal organizational policies and external regulatory requirements.

- GDPR/CCPA: Data handling and user consent

- EU AI Act & Algorithmic Accountability Act: Risk classification, impact assessment

- Internal AI Ethics Boards: Periodic review of deployments

- Continuous Compliance Monitoring: Real-time logging, alerting, and auditing tools

Organizations should assign GenAI systems a risk level (low, medium, or high) based on domain, audience, and data type. AI audit checklists and compliance dashboards help document decision trails and reduce liability.
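The tiering rule above can be expressed as a small scoring function. This is an illustrative sketch only: the domain, audience, and data-type weights, the thresholds, and the `assign_risk_tier` name are assumptions, not a regulatory classification (the EU AI Act defines its own risk categories).

```python
from enum import Enum


class RiskTier(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


# Hypothetical weights; each organization would calibrate these with its ethics board.
DOMAIN_RISK = {"retail": 0, "travel": 0, "banking": 2, "insurance": 2, "healthcare": 3, "government": 3}
AUDIENCE_RISK = {"internal": 0, "public": 1, "minors": 2}
DATA_RISK = {"public": 0, "personal": 1, "financial": 2, "health": 2}


def assign_risk_tier(domain: str, audience: str, data_type: str) -> RiskTier:
    """Sum per-dimension scores; unknown values get the highest score (fail safe)."""
    score = (
        DOMAIN_RISK.get(domain, 3)
        + AUDIENCE_RISK.get(audience, 2)
        + DATA_RISK.get(data_type, 2)
    )
    if score >= 4:
        return RiskTier.HIGH
    if score >= 2:
        return RiskTier.MEDIUM
    return RiskTier.LOW
```

Defaulting unknown values to the highest score means a newly added domain is treated as high risk until someone deliberately classifies it.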

VII. A Blueprint Architecture for Ethical GenAI Chatbots

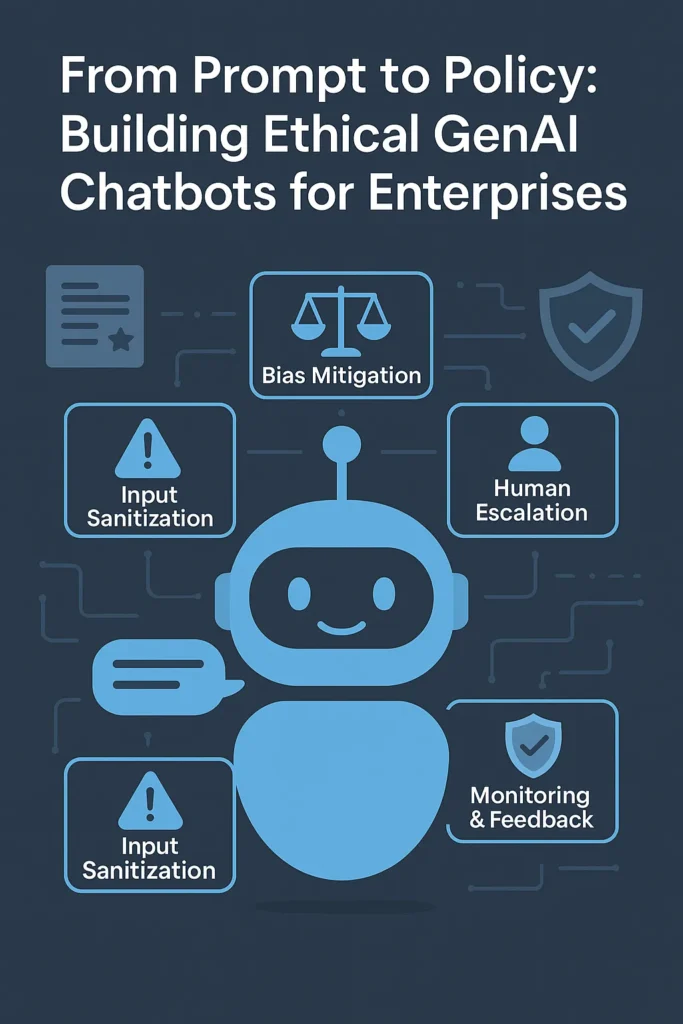

An ethical GenAI chatbot system should include:

- Input Sanitization Layer: Detects offensive, manipulative, or ambiguous prompts

- Prompt-Response Alignment Engine: Ensures responses are consistent with the corporate tone and ethical standards

- Bias Mitigation Layer: Performs real-time checks for gender, racial, or cultural skew in responses

- Human Escalation Module: Routes sensitive conversations to human agents

- Monitoring & Feedback Loop: Learns from flagged outputs and periodically retrains the model

Figure 1: Architecture Blueprint for Ethical GenAI Chatbots (AI-generated for editorial clarity)

Example Flow: A user enters a borderline medical query into an insurance chatbot. The sanitization layer flags it as ambiguous, the alignment engine generates a safe response with a disclaimer, and the escalation module sends the transcript to a live support agent. The monitoring system logs the event and feeds it into retraining datasets.
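The example flow can be traced through a minimal orchestration sketch. Everything in it is hypothetical: the keyword sets stand in for real classifiers, `generate_reply` stands in for the actual LLM call, and the escalation queue and event log are plain Python lists rather than a ticketing system or monitoring store.

```python
from dataclasses import dataclass, field

# Hypothetical stand-ins for trained classifiers.
BLOCKED_TERMS = {"ignore previous instructions"}          # manipulative prompt markers
SENSITIVE_TOPICS = {"diagnosis", "symptom", "medication"}  # terms that trigger escalation
DISCLAIMER = "This is general information, not medical advice. A specialist will follow up."


@dataclass
class ChatPipeline:
    escalation_queue: list[dict] = field(default_factory=list)
    event_log: list[dict] = field(default_factory=list)

    def sanitize(self, prompt: str) -> str:
        """Input sanitization: block manipulative prompts, flag sensitive ones."""
        text = prompt.lower()
        if any(term in text for term in BLOCKED_TERMS):
            return "blocked"
        if any(topic in text for topic in SENSITIVE_TOPICS):
            return "flagged"
        return "ok"

    def generate_reply(self, prompt: str) -> str:
        # Placeholder for the model call; a real system would invoke the LLM here.
        return "Here is what your policy generally covers."

    def handle(self, user_id: str, prompt: str) -> str:
        status = self.sanitize(prompt)
        if status == "blocked":
            reply = "I can't help with that request."
        else:
            reply = self.generate_reply(prompt)
            if status == "flagged":
                # Alignment step: attach a disclaimer, then hand off to a human agent.
                reply = f"{reply} {DISCLAIMER}"
                self.escalation_queue.append({"user": user_id, "transcript": [prompt, reply]})
        # Monitoring: every non-ok event is logged for review and future retraining data.
        if status != "ok":
            self.event_log.append({"user": user_id, "status": status, "prompt": prompt})
        return reply
```

Keeping each stage as a separate method makes it straightforward to swap the keyword checks for a moderation service or classifier without changing the escalation and logging logic.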

VIII. Real-World Use Cases and Failures

- Microsoft Tay: The chatbot was corrupted within 24 hours by unmoderated interactions

- Meta’s BlenderBot: Criticized for producing offensive content and spreading false information

- Salesforce’s Einstein GPT: Implemented human review and compliance modules to support enterprise adoption

These examples show that ethical breakdowns happen in real operational environments. The question is not whether failures will occur, but when they will happen and whether organizations have response mechanisms in place.

IX. Metrics for Ethical Performance

Enterprises need to establish new measurement criteria that go beyond accuracy.

- Trust Scores: Based on user feedback and moderation frequency

- Fairness Metrics: Distribution of performance across demographic groups

- Transparency Index: How explainable the outputs are

- Safety Violation Count: Instances of inappropriate or escalated outputs

- Retention vs. Compliance Trade-off: Weighing user experience against ethical enforcement

Real-time enterprise dashboards display these metrics, providing immediate snapshots of ethical health and highlighting potential intervention points. Organizations are now incorporating ethical metrics into quarterly performance reviews alongside CSAT, NPS, and average handling time, establishing ethics as a primary KPI for CX transformation.
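As an illustration of the fairness and safety metrics, the sketch below aggregates interaction records into per-group resolution rates, the gap between the best- and worst-served groups, and a safety-violation count and rate. The record fields (`group`, `resolved`, `violation`) and the `ethics_snapshot` name are hypothetical; "resolution rate" is just one possible per-group performance measure.

```python
from collections import defaultdict


def ethics_snapshot(records: list[dict]) -> dict:
    """Aggregate hypothetical interaction records into dashboard-style metrics."""
    if not records:
        raise ValueError("no interaction records to summarize")
    totals: dict[str, int] = defaultdict(int)
    resolved: dict[str, int] = defaultdict(int)
    violations = 0
    for r in records:
        totals[r["group"]] += 1
        resolved[r["group"]] += int(r["resolved"])
        violations += int(r["violation"])
    # Fairness: resolution rate per demographic group and the largest gap between groups.
    rates = {g: resolved[g] / totals[g] for g in totals}
    return {
        "resolution_rate_by_group": rates,
        "fairness_gap": max(rates.values()) - min(rates.values()),
        "safety_violation_count": violations,
        "safety_violation_rate": violations / len(records),
    }
```

A dashboard could alert when `fairness_gap` or `safety_violation_rate` crosses a threshold agreed on with the ethics board. The thresholds themselves would be organization-specific.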

X. Future Trends: From Compliance to Ethics-by-Design

Tomorrow’s GenAI systems will be value-driven by design rather than merely compliant. The industry expects advances in:

- Next-Generation APIs with Embedded Ethics

- Regulatory Sandboxes for testing AI systems in highly controlled environments

- Sustainability Audits for energy-efficient AI deployment

- Cross-Cultural Simulation Engines for global readiness

Large organizations are creating new roles such as AI Ethics Officers and Responsible AI Architects to monitor unintended consequences and oversee policy alignment.

XI. Conclusion: Building Chatbots Users Can Trust

As GenAI becomes a core enterprise tool, organizations must embrace its capabilities while upholding ethical standards. Every design element of a chatbot, from prompts to policies, must demonstrate a commitment to fairness, transparency, and responsibility. Performance alone does not generate trust; trust is the outcome that has to be earned. The winners of this era will be enterprises that deliver responsible solutions that protect user dignity and privacy and build lasting trust. Developing ethical chatbots requires collaboration among engineers, ethicists, product leaders, and legal advisors. Our ability to create AI systems that benefit everyone depends on working together.

Author Bio:

Satya Karteek Gudipati is a Principal Software Engineer based in Dallas, TX, specializing in scalable enterprise-grade systems, cloud-native architectures, and multilingual chatbot design. With over 15 years of experience building scalable platforms for global clients, he brings deep expertise in Generative AI integration, workflow automation, and intelligent agent orchestration. His work has been featured in IEEE, Springer, and multiple trade publications. Connect with him on LinkedIn.

References

1. McKinsey & Company. (2023). *The State of AI in 2023*. [Link](https://www.mckinsey.com/capabilities/quantumblack/our-insights/the-state-of-ai-in-2023)

2. IBM AI Fairness 360 Toolkit. (n.d.). [Link](https://aif360.mybluemix.net/)

3. EU Artificial Intelligence Act – Proposed Legislation. [Link](https://artificialintelligenceact.eu/)

4. OpenAI Moderation API Overview. [Link](https://platform.openai.com/docs/guides/moderation)

5. Microsoft Azure AI Content Safety. [Link](https://learn.microsoft.com/en-us/azure/ai-services/content-safety/overview)

The post From Prompt to Policy: Building Ethical GenAI Chatbots for Enterprises appeared first on Datafloq.