In the age of artificial intelligence and computer vision, understanding and detecting human body movements in real time has become a fascinating and widely researched topic. In this project, we explore full-body detection using OpenCV and MediaPipe, a powerful combination for pose estimation and real-time application development.

Full-body detection involves identifying various landmarks on the human body, such as facial features, hand positions, and body joints, to analyze posture and movement. Applications of this technology include fitness tracking, gesture recognition, and even virtual reality experiences.

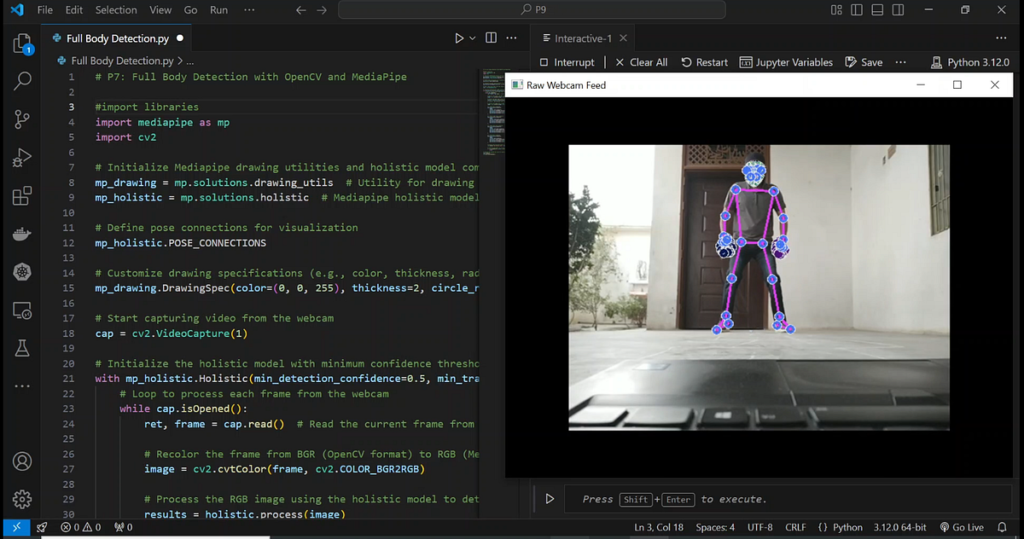

In this article, we’ll walk through the process of building a real-time full-body detection system using MediaPipe’s Holistic solution and OpenCV. We’ll cover:

- Setting up MediaPipe’s Holistic model

- Capturing and processing the webcam feed

- Drawing landmarks and connections for visualization

Let’s dive into the code!

To follow along, make sure you have Python installed along with the required libraries:

    pip install mediapipe opencv-python
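Before running anything, it can help to confirm that both packages are importable. The following is a minimal sketch rather than part of the original script: the helper name `missing_modules` is hypothetical, and it relies only on the standard library's `importlib.util.find_spec`. Note that the `opencv-python` package is imported under the module name `cv2`.

```python
import importlib.util


def missing_modules(names):
    """Return the top-level module names that cannot be found in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    # opencv-python installs the module named "cv2"
    missing = missing_modules(["mediapipe", "cv2"])
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("All required modules are available.")
```

If anything is reported as missing, rerunning the install command in the same Python environment is usually the first thing to try.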

First, import the necessary libraries for our project:

    import mediapipe as mp
    import cv2

We’ll use MediaPipe for pose, face, and hand detection, and OpenCV to handle the video stream and render the output.

MediaPipe provides a Holistic solution that combines pose, face, and hand landmark detection into one model.
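To make the "one model, several outputs" idea concrete, here is a small sketch of how the combined result could be inspected. It assumes the legacy `mp.solutions` behavior, in which the object returned by `process` exposes `pose_landmarks`, `face_landmarks`, `left_hand_landmarks`, and `right_hand_landmarks`, each either `None` or an object with a `landmark` list. The helper `count_landmarks` and the stand-in `fake_results` object are hypothetical and exist only so the snippet runs without a camera.

```python
from types import SimpleNamespace

# Result attributes assumed to be exposed by the Holistic solution
RESULT_FIELDS = (
    "pose_landmarks",
    "face_landmarks",
    "left_hand_landmarks",
    "right_hand_landmarks",
)


def count_landmarks(results):
    """Map each result field to its number of landmarks (0 when nothing was detected)."""
    counts = {}
    for field in RESULT_FIELDS:
        group = getattr(results, field, None)
        counts[field] = len(group.landmark) if group is not None else 0
    return counts


# Stand-in for a real result: a pose and a right hand detected, no face or left hand
fake_results = SimpleNamespace(
    pose_landmarks=SimpleNamespace(landmark=[None] * 33),
    face_landmarks=None,
    left_hand_landmarks=None,
    right_hand_landmarks=SimpleNamespace(landmark=[None] * 21),
)

print(count_landmarks(fake_results))
```

With default settings, a complete detection typically contains 33 pose landmarks, 468 face landmarks, and 21 landmarks per hand.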

    # Initialize MediaPipe drawing utilities and the Holistic model components
    mp_drawing = mp.solutions.drawing_utils
    mp_holistic = mp.solutions.holistic

    # Start capturing video from the webcam
    # (index 1 selects the second camera; use 0 for the default webcam)
    cap = cv2.VideoCapture(1)

Here, mp_drawing is used to visualize the landmarks and connections detected by the model.

Next, we process the webcam feed and use MediaPipe’s Holistic solution to detect landmarks:

    # Initialize the Holistic model with confidence thresholds
    with mp_holistic.Holistic(min_detection_confidence=0.5, min_tracking_confidence=0.5) as holistic:
        while cap.isOpened():
            ret, frame = cap.read()
            # Stop if no frame could be read (camera unavailable or disconnected)
            if not ret:
                break

            # Convert BGR (OpenCV format) to RGB (MediaPipe format)
            image = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)

            # Process the frame with the Holistic model
            results = holistic.process(image)

            # Convert RGB back to BGR for rendering
            image = cv2.cvtColor(image, cv2.COLOR_RGB2BGR)

            # Visualization code (covered in the next step)

            # Display the processed frame
            cv2.imshow('Full Body Detection', image)

            # Exit when the 'q' key is pressed
            if cv2.waitKey(10) & 0xFF == ord('q'):
                break
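The landmarks returned by `process` are normalized: `x` and `y` are expressed relative to the image width and height rather than in pixels. A minimal sketch of the conversion, assuming that normalization and using a hypothetical helper name `to_pixel`, might look like this:

```python
def to_pixel(x_norm, y_norm, width, height):
    """Convert normalized landmark coordinates to integer pixel coordinates.

    Values are clamped to the image bounds, because a landmark that lies
    partly outside the frame can fall slightly outside the 0-1 range.
    """
    x_px = min(max(int(x_norm * width), 0), width - 1)
    y_px = min(max(int(y_norm * height), 0), height - 1)
    return x_px, y_px


# Example: a landmark at the center of a 640x480 frame
print(to_pixel(0.5, 0.5, 640, 480))  # (320, 240)
```

Inside the loop, `width` and `height` would come from `image.shape[1]` and `image.shape[0]`, and the normalized values from a landmark such as `results.pose_landmarks.landmark[0]`, after checking that `results.pose_landmarks` is not `None`.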

MediaPipe provides detailed facial meshes, hand connections, and pose connections. Let’s visualize these on the video feed:

    # Draw face landmarks
    mp_drawing.draw_landmarks(
        image,
        results.face_landmarks,
        mp_holistic.FACEMESH_CONTOURS,
        mp_drawing.DrawingSpec(color=(80, 110, 10), thickness=1, circle_radius=1),
        mp_drawing.DrawingSpec(color=(80, 255, 121), thickness=1, circle_radius=1)
    )

    # Draw right hand landmarks
    mp_drawing.draw_landmarks(
        image,
        results.right_hand_landmarks,
        mp_holistic.HAND_CONNECTIONS,
        mp_drawing.DrawingSpec(color=(80, 22, 10), thickness=2, circle_radius=4),
        mp_drawing.DrawingSpec(color=(80, 44, 121), thickness=2, circle_radius=2)
    )

    # Draw left hand landmarks
    mp_drawing.draw_landmarks(
        image,
        results.left_hand_landmarks,
        mp_holistic.HAND_CONNECTIONS,
        mp_drawing.DrawingSpec(color=(121, 22, 76), thickness=2, circle_radius=4),
        mp_drawing.DrawingSpec(color=(121, 44, 250), thickness=2, circle_radius=2)
    )

    # Draw pose landmarks
    mp_drawing.draw_landmarks(
        image,
        results.pose_landmarks,
        mp_holistic.POSE_CONNECTIONS,
        mp_drawing.DrawingSpec(color=(245, 117, 66), thickness=2, circle_radius=4),
        mp_drawing.DrawingSpec(color=(245, 66, 230), thickness=2, circle_radius=2)
    )

This renders the detected landmarks and connections on the live video feed for easy visualization.
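One detail worth noting: the landmarks are drawn onto the frame after it has been converted back to BGR, so the color tuples passed to `DrawingSpec` are effectively in blue, green, red order. The sketch below is a convenience under that assumption, not part of the original script. The hypothetical helper `safe_bgr` lets you think in RGB and clamps each channel to the 0-255 range of an 8-bit image.

```python
def clamp_channel(value):
    """Clamp a single color channel to the valid 8-bit range 0-255."""
    return max(0, min(255, int(value)))


def safe_bgr(rgb):
    """Convert an (R, G, B) tuple to a clamped (B, G, R) tuple for OpenCV drawing."""
    r, g, b = rgb
    return (clamp_channel(b), clamp_channel(g), clamp_channel(r))


# Example: a pink chosen in RGB, reordered for drawing on a BGR frame
print(safe_bgr((230, 66, 245)))  # (245, 66, 230)
```

The returned tuple could then be passed as the `color` argument of `mp_drawing.DrawingSpec`.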

Finally, release the webcam and close all OpenCV windows when the program exits:

    cap.release()
    cv2.destroyAllWindows()
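If an exception is raised inside the loop, these two cleanup calls are never reached. One way to guard against that is a small context manager. This is a sketch under the assumption that the capture object only needs `release()` called on it; the names `managed_capture` and `FakeCapture` are hypothetical, and `FakeCapture` stands in for `cv2.VideoCapture` so the snippet runs without a camera.

```python
from contextlib import contextmanager


@contextmanager
def managed_capture(cap, on_close=None):
    """Yield a capture object and always release it, even if an error occurs."""
    try:
        yield cap
    finally:
        cap.release()
        if on_close is not None:
            on_close()  # e.g. cv2.destroyAllWindows


class FakeCapture:
    """Stand-in for cv2.VideoCapture, used here only for demonstration."""

    def __init__(self):
        self.released = False

    def release(self):
        self.released = True


fake = FakeCapture()
try:
    with managed_capture(fake) as cap:
        raise RuntimeError("simulated failure inside the loop")
except RuntimeError:
    pass

print(fake.released)  # True
```

With the real script, the equivalent call would be `with managed_capture(cv2.VideoCapture(1), cv2.destroyAllWindows) as cap:`, with the processing loop placed inside the `with` block.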

Here is the full script for your reference:

    import mediapipe as mp
    import cv2

    mp_drawing = mp.solutions.drawing_utils
    mp_holistic = mp.solutions.holistic

    cap = cv2.VideoCapture(1)

    with mp_holistic.Holistic(min_detection_confidence=0.5, min_tracking_confidence=0.5) as holistic:
        while cap.isOpened():
            ret, frame = cap.read()
            # Stop if no frame could be read
            if not ret:
                break

            image = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
            results = holistic.process(image)
            image = cv2.cvtColor(image, cv2.COLOR_RGB2BGR)

            mp_drawing.draw_landmarks(image, results.face_landmarks, mp_holistic.FACEMESH_CONTOURS,
                                      mp_drawing.DrawingSpec(color=(80, 110, 10), thickness=1, circle_radius=1),
                                      mp_drawing.DrawingSpec(color=(80, 255, 121), thickness=1, circle_radius=1))

            mp_drawing.draw_landmarks(image, results.right_hand_landmarks, mp_holistic.HAND_CONNECTIONS,
                                      mp_drawing.DrawingSpec(color=(80, 22, 10), thickness=2, circle_radius=4),
                                      mp_drawing.DrawingSpec(color=(80, 44, 121), thickness=2, circle_radius=2))

            mp_drawing.draw_landmarks(image, results.left_hand_landmarks, mp_holistic.HAND_CONNECTIONS,
                                      mp_drawing.DrawingSpec(color=(121, 22, 76), thickness=2, circle_radius=4),
                                      mp_drawing.DrawingSpec(color=(121, 44, 250), thickness=2, circle_radius=2))

            mp_drawing.draw_landmarks(image, results.pose_landmarks, mp_holistic.POSE_CONNECTIONS,
                                      mp_drawing.DrawingSpec(color=(245, 117, 66), thickness=2, circle_radius=4),
                                      mp_drawing.DrawingSpec(color=(245, 66, 230), thickness=2, circle_radius=2))

            cv2.imshow('Full Body Detection', image)

            if cv2.waitKey(10) & 0xFF == ord('q'):
                break

    cap.release()
    cv2.destroyAllWindows()

This project opens up numerous possibilities:

- Fitness Tracking: Monitor exercise form and posture.

- Gesture Recognition: Build applications for sign language interpretation.

- Virtual Avatars: Map detected landmarks to 3D models for animation.
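As an illustration of the fitness-tracking idea, the angle at a joint can be estimated from three landmarks, for example shoulder, elbow, and wrist. This is a minimal sketch, not part of the original project: the function name `joint_angle` is hypothetical, and it works on plain `(x, y)` pairs such as the coordinates of pose landmarks.

```python
import math


def joint_angle(a, b, c):
    """Return the angle in degrees at point b formed by the segments b-a and b-c."""
    angle_ba = math.atan2(a[1] - b[1], a[0] - b[0])
    angle_bc = math.atan2(c[1] - b[1], c[0] - b[0])
    angle = abs(math.degrees(angle_bc - angle_ba))
    # Report the interior angle, which is always between 0 and 180 degrees
    return 360.0 - angle if angle > 180.0 else angle


# Example: a right angle at the elbow
shoulder, elbow, wrist = (0.0, 1.0), (0.0, 0.0), (1.0, 0.0)
print(round(joint_angle(shoulder, elbow, wrist), 1))  # 90.0
```

Because normalized coordinates are scaled separately by image width and height, converting them to pixel coordinates first should give a truer angle on non-square frames.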

Feel free to experiment with this code and extend its functionality. Happy coding!

🔗 Find the GitHub code here: https://github.com/iamramzan/Full-Body-Detection-with-OpenCV-and-MediaPipe