Prediction models are among the most commonly used machine learning models. The gradient boosting algorithm stands out for its prediction speed and accuracy, particularly on large and complex datasets. It has produced top results in settings ranging from Kaggle competitions to business machine learning solutions. It is a boosting method, and I have covered boosting in more detail in a separate article. It is also known as the Gradient Boosting Machine (GBM). When each tree is fit on a random subsample of the training data, it is called Stochastic Gradient Boosting. In this article, I will discuss the math intuition behind the gradient boosting algorithm.

In this article, you will learn about the gradient boosting regressor, a key component of gradient boosting machines (GBM). You will also see how these powerful algorithms enhance predictive modelling.

Learning Objectives:

- Understand the fundamental concepts of gradient boosting algorithms

- Learn how gradient boosting improves model accuracy through sequential weak learners

- Explore the mathematical principles behind gradient boosting for regression and classification

- Gain practical knowledge of implementing gradient boosting using scikit-learn

Table of Contents

What is Boosting?

What is Gradient Boosting?

What is the AdaBoost Algorithm?

What is a Gradient Boosting Algorithm?

Understanding the Gradient Boosting Algorithm with an Example

What is a Gradient Boosting Classifier?

How Does It Work?

Implementation of GBM Using scikit-learn

Parameter Tuning in Gradient Boosting (GBM) in Python

Conclusion

While studying machine learning, you must have come across the term boosting. It is one of the most misunderstood terms in the field of data science. The principle behind boosting algorithms is simple. First, we build a model on the training dataset. Then, a second model is built to rectify the errors present in the first model. Let me explain what exactly this means and how it works.

Suppose you have n data points and 2 output classes (0 and 1). You want to create a model to detect the class of the test data. We randomly select observations from the training dataset and feed them to model 1 (M1). Initially, all observations carry equal weight, which gives them an equal probability of selection.

Remember that in ensembling techniques, weak learners combine to make a strong model. Here, M1, M2, M3, …, Mn are all weak learners.

Since M1 is a weak learner, it will surely misclassify some observations. Before feeding the observations to M2, we update the weights of the wrongly classified observations. Think of a bag that initially contains 10 balls of different colors. After some time, a kid takes out his favorite colored ball and puts 4 red balls into the bag instead. Now the probability of picking a red ball is higher.


The same thing happens in boosting techniques. When an observation is wrongly classified, we increase its weight, and when it is classified correctly, we decrease its weight. This raises the probability of selecting a wrongly classified observation. As a result, the next model is more likely to be trained on the observations that the previous model misclassified.

The same happens with M2: the weights of the wrongly classified observations are updated, and the observations are then fed to M3. This procedure continues until the errors are minimized and the dataset is predicted correctly. When a new data point comes in (test data), it passes through all the models (weak learners). The class that receives the highest (weighted) vote is the output for that test point.

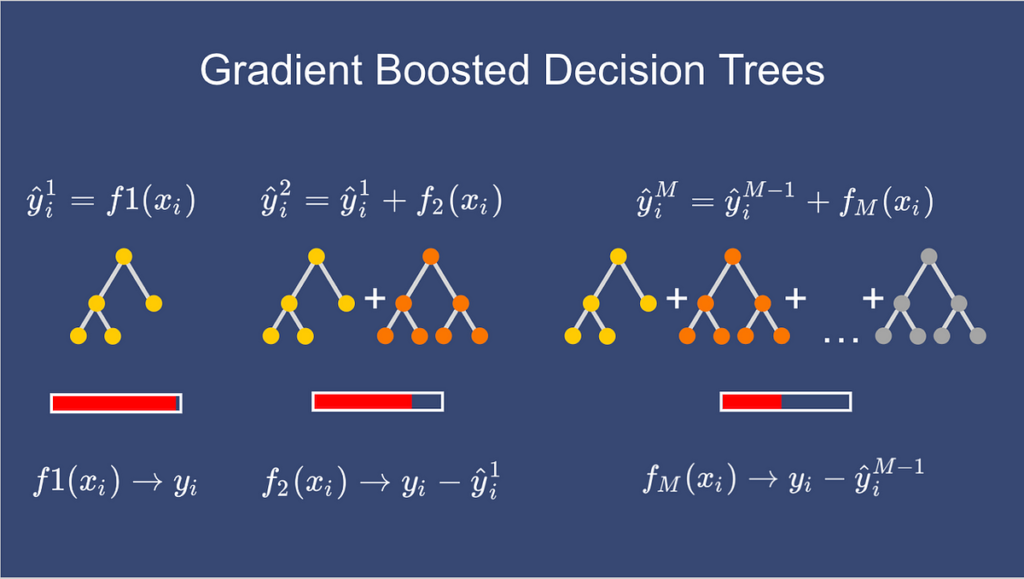

Gradient boosting is a machine learning ensemble technique that sequentially combines the predictions of multiple weak learners, typically decision trees. Each new learner is fitted to the errors left by the previous iterations. This gradually reduces prediction errors and improves the model's accuracy. The technique is widely used for both regression and classification problems.

Before getting into the details of gradient boosting, we should look at the AdaBoost algorithm, another boosting method. AdaBoost starts by building a decision stump and assigning equal weights to all the data points. It then increases the weights of the misclassified points and lowers the weights of the correctly classified ones. A new decision stump is built on these weighted data points. The idea is to improve on the predictions made by the first stump. I have talked more about this algorithm here.
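The reweighting idea can be made concrete with a short example. Below is a minimal NumPy sketch of one round of the classic (discrete) AdaBoost weight update for labels in {-1, +1}. The model weight is alpha = 0.5 * ln((1 - err) / err). The labels, predictions and the function name `adaboost_reweight` are hypothetical. This is the textbook update, not code from this article.

```python
import numpy as np

def adaboost_reweight(weights, y_true, y_pred):
    """One round of discrete AdaBoost reweighting for labels in {-1, +1}."""
    miss = y_true != y_pred
    # Weighted error of the current stump
    err = np.sum(weights[miss]) / np.sum(weights)
    # Importance ("say") of this stump in the final weighted vote
    alpha = 0.5 * np.log((1 - err) / err)
    # Misclassified points (y * pred = -1) are multiplied by e^alpha (> 1),
    # correctly classified points by e^-alpha (< 1)
    new_w = weights * np.exp(-alpha * y_true * y_pred)
    return new_w / new_w.sum(), alpha

# Hypothetical example: 5 points, equal starting weights, the stump misses point 3
w0 = np.full(5, 1 / 5)
y = np.array([1, 1, -1, -1, 1])
pred = np.array([1, 1, 1, -1, 1])
w1, alpha = adaboost_reweight(w0, y, pred)
print(np.round(w1, 3))  # the single misclassified point now holds half the total weight

# "Bag of balls" view: resample the next training set in proportion to the weights
rng = np.random.default_rng(0)
next_sample = rng.choice(len(y), size=len(y), p=w1)
print(next_sample)
```

Some implementations pass the weights directly to the next learner instead of resampling. Both variants raise the influence of the misclassified points.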

The main difference between these two algorithms is the base estimator. Gradient boosting uses a fixed base estimator, decision trees. In AdaBoost, we can change the base estimator to suit our needs.

Errors play a major role in any machine learning algorithm. There are two main types of error: bias error and variance error. The gradient boosting algorithm helps us minimize the model's bias error. The main idea is to build models sequentially, with each new model trying to reduce the errors of the previous one. But how do we do that? How do we reduce the error? We build a new model on the errors, or residuals, of the previous model.

When the target column is continuous, we use a Gradient Boosting Regressor; when it is a classification problem, we use a Gradient Boosting Classifier. The only difference between the two is the loss function. The objective is to minimize this loss function by adding weak learners using gradient descent. Because the method depends on the loss function, regression problems use losses such as mean squared error (MSE). Classification problems use functions such as log-likelihood.

Let's understand the intuition behind the gradient boosting algorithm with an example. Our target column is continuous, so we will use a gradient boosting regressor.

The following is a sample from a random dataset in which we have to predict the car price based on various features. The target column is price, and the other features are independent variables.

Step 1: Build a Base Model

The first step in gradient boosting is to build a base model to predict the observations in the training dataset. For simplicity, we take the average of the target column and use it as the predicted value, as shown below:

Why did I say we take the average of the target column? There is math behind this choice. Mathematically, the first step can be written as:
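In the standard formulation of gradient boosting (as in Friedman's original algorithm), this initialization is usually written as follows. The symbols are explained next:

```latex
F_0(x) = \underset{\gamma}{\arg\min} \sum_{i=1}^{n} L(y_i, \gamma)
```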

This may give you a headache, but don't worry; we will work through what it says.

Here, L is our loss function,

gamma is our predicted value, and

arg min means we have to find the predicted value (gamma) for which the loss function is minimum.

Since the target column is continuous, our loss function will be:
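A common choice, assumed here, is the squared error scaled by one half. The 1/2 only cancels the factor 2 that appears when differentiating, and it does not move the minimum:

```latex
L(y_i, \gamma) = \frac{1}{2}\,(y_i - \gamma)^2
```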

Here, y_i is the observed value, and gamma is the predicted value.

Now, we need to find the value of gamma that makes this loss function minimum. We all learned how to find minima and maxima in 12th grade: differentiate the function and set the derivative equal to 0. We will do the same here.

Let's see how to do this with our example. Remember that y_i is the observed value and gamma is the predicted value. Plugging the values into the above formula, we get:
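In symbols, assuming the halved squared-error loss and n observations, differentiating the total loss and setting it to zero gives:

```latex
\frac{d}{d\gamma} \sum_{i=1}^{n} \frac{1}{2}(y_i - \gamma)^2
  = -\sum_{i=1}^{n} (y_i - \gamma) = 0
\quad\Longrightarrow\quad
\gamma = \frac{1}{n}\sum_{i=1}^{n} y_i
```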

We end up with the average of the observed car prices. This is why I asked you to take the average of the target column and use it as your first prediction.

Hence, the loss function is minimum at gamma = 14500, so this value becomes our prediction for the base model.
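As a quick numerical check of this result, the sketch below evaluates the total squared-error loss over a grid of candidate gamma values. The four prices are made up and are not the article's table. They were chosen only so that their mean is 14500.

```python
import numpy as np

# Hypothetical car prices; their mean is 14500
prices = np.array([12000.0, 15000.0, 16000.0, 15000.0])

def total_loss(gamma, y):
    """Sum of 1/2 * (y_i - gamma)^2 over all observations."""
    return np.sum(0.5 * (y - gamma) ** 2)

candidates = np.arange(10000, 20001, 50)  # candidate constant predictions
losses = [total_loss(g, prices) for g in candidates]
best_gamma = candidates[int(np.argmin(losses))]

print(best_gamma, prices.mean())  # both equal 14500
```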

Step 2: Compute Pseudo-Residuals

The next step is to calculate the pseudo-residuals, which are (observed value - predicted value).

Again, the question arises: why observed minus predicted? There is a mathematical reason for it. Let's see where this formula comes from. This step can be written as:
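In standard notation, the pseudo-residual of observation i at iteration m is the negative gradient of the loss. It is evaluated at the previous model's prediction:

```latex
r_{im} = -\left[\frac{\partial L\big(y_i, F(x_i)\big)}{\partial F(x_i)}\right]_{F(x) = F_{m-1}(x)}
```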

Here, F(x_i) is the previous model, and m is the index of the decision tree (DT) being built.

We are simply taking the derivative of the loss function with respect to the predicted value, and we have already calculated this derivative:

In the residual formula above, the derivative of the loss function is multiplied by a negative sign, so now we get:

The predicted value here is the prediction made by the previous model. In our example, that prediction (the initial base model prediction) is 14500, so the formula for the residuals becomes:
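Assume the halved squared-error loss L = (1/2)(y_i - F(x_i))^2. The derivative, the sign flip and the plug-in of the base prediction 14500 then work out as:

```latex
\frac{\partial L\big(y_i, F(x_i)\big)}{\partial F(x_i)} = -\big(y_i - F(x_i)\big)

r_{im} = -\Big(-\big(y_i - F_{m-1}(x_i)\big)\Big) = y_i - F_{m-1}(x_i)

r_{i1} = y_i - F_0(x_i) = y_i - 14500
```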

Step 3: Build a Model on the Calculated Residuals

In the next step, we build a model on these pseudo-residuals and make predictions. Why do we do this? Because we want to minimize these residuals, and minimizing them will eventually improve the model's accuracy and predictive power. We use the residual as the target and the original features (cylinder number, cylinder height and engine location) to generate new predictions. Note that these predictions will be error values, not car prices, since our target column is now the error.

Let's say h_m(x) is the DT built on these residuals.
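Below is a minimal sketch of steps 2 and 3 using scikit-learn's `DecisionTreeRegressor`. The feature matrix and prices are hypothetical stand-ins for the car dataset, and the column meanings are assumptions. `max_depth=2` is an arbitrary choice that keeps the tree small.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor

# Hypothetical features: cylinder number, cylinder height, engine location (0/1)
X = np.array([
    [4, 48.8, 0],
    [6, 52.4, 1],
    [6, 54.3, 1],
    [4, 50.2, 0],
])
y = np.array([12000.0, 15000.0, 16000.0, 15000.0])  # hypothetical prices

F0 = np.full_like(y, y.mean())   # step 1: the base model predicts the mean
residuals = y - F0               # step 2: pseudo-residuals for squared error

# Step 3: h_1(x) is a regression tree fit to the residuals, not to the prices
h1 = DecisionTreeRegressor(max_depth=2, random_state=0)
h1.fit(X, residuals)

print(residuals)      # the target used for the tree
print(h1.predict(X))  # predicted residuals (errors), not car prices
```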

Step 4: Compute the Decision Tree Output

In this step, we find the output value for each leaf of the decision tree. A leaf may receive more than one residual, so each leaf needs a single final output. To find it, we simply take the average of all the numbers in the leaf, whether there is one number or several.

Let's see why we take the average of all the numbers. Mathematically, this step can be represented as:
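The usual notation writes R_jm for the j-th leaf (terminal region) of the m-th tree. The output of each leaf is then:

```latex
\gamma_{jm} = \underset{\gamma}{\arg\min} \sum_{x_i \in R_{jm}} L\big(y_i,\; F_{m-1}(x_i) + \gamma\big)
```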

Here, h_m(x_i) is the DT built on the residuals, R_jm denotes its j-th leaf, and m is the index of the DT. When m = 1, we are talking about the first DT. When m = M, we are talking about the last DT.

The leaf's output value is the gamma that minimizes the loss function. The left-hand side, gamma, is the output value of a particular leaf. The term inside the loss on the right-hand side, F_{m-1}(x_i) + gamma, is similar to step 1. The difference is that we now include the previous predictions, whereas in step 1 there was no previous prediction.

Example of Calculating the Regression Tree Output

Let's understand this even better with an example. Suppose this is our regression tree:

The 1st residual goes into R1,1, the 2nd and 3rd residuals go into R2,1, and the 4th residual goes into R3,1.

Let's calculate the output for the first leaf, R1,1:

Now we need to find the value of gamma that makes this function minimum. So we take the derivative of this equation with respect to gamma and set it equal to 0.

Hence, the leaf R1,1 has an output value of -2500. Now let's solve for R2,1.

Let's take the derivative to find the value of gamma that makes this function minimum:
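Assume the halved squared-error loss. Write r_2 = y_2 - F_0(x_2) and r_3 = y_3 - F_0(x_3) for the two residuals that fall into R2,1. The derivative then works out as:

```latex
\frac{d}{d\gamma}\left[\frac{1}{2}\big(y_2 - (F_0(x_2) + \gamma)\big)^2 + \frac{1}{2}\big(y_3 - (F_0(x_3) + \gamma)\big)^2\right]
  = -(r_2 - \gamma) - (r_3 - \gamma) = 0
\quad\Longrightarrow\quad
\gamma_{2,1} = \frac{r_2 + r_3}{2}
```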

We end up with the average of the residuals in leaf R2,1. So whenever a leaf holds more than one residual, we can simply take their average, and that is the leaf's final output.
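scikit-learn's `DecisionTreeRegressor`, with its default squared-error criterion, already stores this average as each leaf's value. The sketch below checks this on hypothetical residuals and a single hypothetical feature. `apply` and `tree_.value` are standard members of fitted scikit-learn trees.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor

# Hypothetical single feature and residuals
X = np.array([[48.8], [52.4], [54.3], [50.2], [58.0], [60.1]])
r = np.array([-2500.0, 500.0, 1500.0, 500.0, 2000.0, -2000.0])

tree = DecisionTreeRegressor(max_depth=2, random_state=0).fit(X, r)
leaf_ids = tree.apply(X)  # index of the leaf each sample lands in

for leaf in np.unique(leaf_ids):
    in_leaf = r[leaf_ids == leaf]
    stored = tree.tree_.value[leaf, 0, 0]  # the leaf's output value
    print(leaf, in_leaf, stored)
    assert np.isclose(stored, in_leaf.mean())  # leaf output = mean residual
```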

After calculating the output of all the leaves, we get:

Step 5: Update the Previous Model's Predictions

This is the final step, where we update the predictions of the previous model. They can be updated as:
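Standard notation uses the learning rate nu (introduced below) and the indicator 1(x in R_jm), which marks whether x falls into leaf R_jm. In that notation the update is:

```latex
F_m(x) = F_{m-1}(x) + \nu\, h_m(x),
\qquad
h_m(x) = \sum_{j=1}^{J_m} \gamma_{jm}\, \mathbb{1}\big(x \in R_{jm}\big)
```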

Since we have just started building our model, m = 1. With the new DT, our new predictions will be:
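With m = 1, the base prediction of 14500 and the learning rate nu = 0.1 chosen below, this becomes:

```latex
F_1(x) = F_0(x) + \nu\, h_1(x) = 14500 + 0.1\, h_1(x)
```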

Here, F_{m-1}(x) is the previous prediction. Since m - 1 = 0, this is F_0, our base model, so the previous prediction is 14500.

nu is the learning rate, usually chosen between 0 and 1. It reduces the effect each tree has on the final prediction, which improves accuracy in the long run. Let's take nu = 0.1 in this example.

h_m(x) is the most recent DT built on the residuals.

Let's calculate the new prediction now:

Suppose we want the prediction for our first data point, which has a car height of 48.8. This data point passes through the decision tree. The tree's output is multiplied by the learning rate and then added to the previous prediction.
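This example already gives a base prediction of 14500, a learning rate of 0.1, and an output of -2500 for leaf R1,1, where the first residual landed. With those numbers, the first data point's new prediction works out as:

```latex
F_1(x_1) = F_0(x_1) + \nu\, \gamma_{1,1} = 14500 + 0.1 \times (-2500) = 14250
```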

Now, let's say m = 2, which means we have built 2 decision trees and want to make new predictions.

This time, we add the output of the new DT built on the residuals, scaled by the learning rate, to the previous prediction F1(x). We iterate through these steps again and again until the loss is negligible.
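Below, steps 1 to 5 are combined into a minimal from-scratch sketch of a gradient boosting regressor with squared-error loss. It rests on simplifying assumptions: synthetic data, scikit-learn's `DecisionTreeRegressor` as the weak learner, and a fixed `max_depth=2`. The function names `fit_gbm` and `predict_gbm` are hypothetical. This is an illustration, not any library's implementation.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor

def fit_gbm(X, y, n_trees=100, nu=0.1, max_depth=2):
    """Gradient boosting for squared error: returns the base value and the trees."""
    f0 = y.mean()                       # step 1: constant base model
    pred = np.full(len(y), f0)
    trees = []
    for _ in range(n_trees):
        residuals = y - pred            # step 2: pseudo-residuals
        tree = DecisionTreeRegressor(max_depth=max_depth, random_state=0)
        tree.fit(X, residuals)          # steps 3-4: leaf values = mean residual
        pred = pred + nu * tree.predict(X)  # step 5: shrunken update
        trees.append(tree)
    return f0, trees

def predict_gbm(X, f0, trees, nu=0.1):
    """A new point passes through every tree; outputs are scaled and summed."""
    return f0 + nu * sum(tree.predict(X) for tree in trees)

rng = np.random.default_rng(0)
X = rng.uniform(0, 10, size=(200, 1))
y = 3 * np.sin(X[:, 0]) + rng.normal(0, 0.3, size=200)

f0, trees = fit_gbm(X, y)
mse_base = np.mean((y - f0) ** 2)
mse_boosted = np.mean((y - predict_gbm(X, f0, trees)) ** 2)
print(f"MSE of base model: {mse_base:.3f}, after boosting: {mse_boosted:.3f}")
```

The same `nu` must be used in both functions, because every stored tree's output is scaled by it at prediction time.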

Here is a hypothetical example of how the model predicts for a new data point:

If a new data point comes in, say with height = 1.40, it goes through all the trees, and their outputs are combined into the prediction. Here we have only 2 trees, so the data point goes through those 2 trees, and the final output is F2(x).

A gradient boosting classifier is used when the target column is binary. You apply all the steps explained for the gradient boosting regressor; the only difference is the loss function. Earlier we used mean squared error because the target column was continuous. This time we will use log-likelihood as our loss function.

Let's see how this loss function works.

The loss function for the classification problem is given below:
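Take a binary target y_i in {0, 1} and a predicted probability p_i. The loss usually used here is the negative log-likelihood, also called log loss or binary cross-entropy:

```latex
L(y_i, p_i) = -\big[\, y_i \log(p_i) + (1 - y_i)\log(1 - p_i) \,\big]
```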

The first step of the gradient boosting algorithm was to initialize the model with a constant value. For regression we used the average of the target column; here we will use log(odds) to get that constant. The question arises: why log(odds)?

When we differentiate this loss function, we get a function of log(odds). We then need the value of log(odds) that makes the loss function minimum.

Confused? Let's see how it works:

First, let's transform this loss function so that it is a function of log(odds). I will explain later why we do this transformation.

This is now our loss function, and we need to minimize it. To do so, we take its derivative with respect to log(odds) and set it equal to 0:
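Write the loss in terms of log(odds) = log(p / (1 - p)), so that p = e^{log(odds)} / (1 + e^{log(odds)}). The transformed loss, its derivative, and the result of setting the summed derivative to zero are:

```latex
L = -y \cdot \log(\text{odds}) + \log\!\big(1 + e^{\log(\text{odds})}\big)

\frac{\partial L}{\partial \log(\text{odds})} = -y + \frac{e^{\log(\text{odds})}}{1 + e^{\log(\text{odds})}} = -y + p

\sum_{i=1}^{n} (-y_i + p) = 0
\quad\Longrightarrow\quad
p = \frac{1}{n}\sum_{i=1}^{n} y_i,
\qquad
F_0 = \log\frac{p}{1 - p}
```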

Here, 'y' denotes the observed values.

You may be wondering why we transformed the loss function into a function of log(odds). Sometimes the log(odds) form is easier to work with. At other times, the form in terms of the predicted probability "p" is easier.

Transforming the loss function is not required; we did it only to make the calculations easier.

Hence, the log(odds) value that minimizes this loss function becomes our first prediction (the base model prediction).

In the gradient boosting regressor, the next step was calculating the pseudo-residuals by multiplying the derivative of the loss function by -1. We do the same here. The difference is that the loss function has changed and we are now working with the probability of an outcome.

After finding the residuals, we build a decision tree that uses all the independent variables as features and the "residuals" as the target variable.

Once we have our first decision tree, we find the final output of each leaf, because a leaf may receive more than 1 residual. The math behind this step is beyond the scope of this article, so I will give the direct formula for a leaf's output:
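The formula commonly used here comes from a second-order (Newton) approximation of the log-likelihood loss. Let leaf R_jm hold residuals r_im = y_i - p_i, where p_i are the previous predicted probabilities. Then:

```latex
\gamma_{jm} = \frac{\sum_{x_i \in R_{jm}} r_{im}}{\sum_{x_i \in R_{jm}} p_i\,(1 - p_i)}
```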

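Finally, we get new predictions by adding the new tree's output, scaled by the learning rate, to the base model's log(odds) prediction. The result is in log(odds), and the logistic function converts it back into a probability.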
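Below is a minimal sketch of one boosting round for a binary classifier that follows the steps above. It computes the initial log(odds), the residuals y - p, a regression tree on the residuals, the leaf formula sum(r) / sum(p(1 - p)), and a shrunken update in log(odds) space. The data, `nu = 0.1` and `max_depth=2` are hypothetical choices for illustration.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor

def sigmoid(z):
    """Convert log(odds) to a probability."""
    return 1.0 / (1.0 + np.exp(-z))

def log_loss(y, p):
    """Mean negative log-likelihood for binary labels."""
    return -np.mean(y * np.log(p) + (1 - y) * np.log(1 - p))

rng = np.random.default_rng(0)
X = rng.normal(size=(100, 2))
y = (X[:, 0] + 0.5 * X[:, 1] > 0).astype(float)  # hypothetical binary labels

# Step 1: the base prediction is the log(odds) of the positive class
p_mean = y.mean()
log_odds = np.full(len(y), np.log(p_mean / (1 - p_mean)))
p = sigmoid(log_odds)

# Step 2: pseudo-residuals are observed minus predicted probability
residuals = y - p

# Step 3: fit a regression tree to the residuals
tree = DecisionTreeRegressor(max_depth=2, random_state=0).fit(X, residuals)
leaf_ids = tree.apply(X)

# Step 4: leaf output = sum(residuals) / sum(p * (1 - p)) within each leaf
gamma = {}
for leaf in np.unique(leaf_ids):
    in_leaf = leaf_ids == leaf
    gamma[leaf] = residuals[in_leaf].sum() / (p[in_leaf] * (1 - p[in_leaf])).sum()

# Step 5: update in log(odds) space, then convert back to probabilities
nu = 0.1
new_log_odds = log_odds + nu * np.array([gamma[leaf] for leaf in leaf_ids])
p_new = sigmoid(new_log_odds)

print(log_loss(y, p), log_loss(y, p_new))  # the loss should go down
```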

To implement the GBM algorithm, we will use the Income Evaluation dataset. It contains information about an individual's personal life and an income label of >50K or <=50K. The dataset can be found here.

The task is to classify an individual's income based on the given inputs about their personal life.

First, let's import all the required libraries.

# Import all relevant libraries
from sklearn.ensemble import GradientBoostingClassifier
import numpy as np
import pandas as pd
from sklearn.preprocessing import StandardScaler
from sklearn.model_selection import train_test_split
from sklearn.metrics import accuracy_score, confusion_matrix
from sklearn import preprocessing
import warnings
warnings.filterwarnings("ignore")

Now let's read the dataset and look at the columns to understand the data better.

df = pd.read_csv('income_evaluation.csv')

df.head()

I have already done the data preprocessing, and you can see the complete code here. My main aim here is to show you how to implement this in Python. To train and test our model, the data needs to be divided into training and test sets.

We can even scale the information to lie between 0 and 1.

# Split the dataset into train and test sets

X_train, X_test, y_train, y_test = train_test_split(df.drop('income', axis=1), df['income'], test_size=0.2)
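The split above does not include the scaling step mentioned earlier. Below is a minimal sketch of scaling to the [0, 1] range with scikit-learn's `MinMaxScaler`. The scaler is fitted on the training split only, so no test-set information leaks in. The small arrays are hypothetical placeholders for `X_train` and `X_test`. Tree-based models such as GBM are largely insensitive to feature scaling, so this step is optional for them.

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler

# Hypothetical numeric features standing in for X_train / X_test
X_train = np.array([[25, 40], [38, 50], [52, 45], [61, 60]], dtype=float)
X_test = np.array([[30, 35], [45, 55]], dtype=float)

scaler = MinMaxScaler()                         # maps each column to [0, 1]
X_train_scaled = scaler.fit_transform(X_train)  # learn min/max on train only
X_test_scaled = scaler.transform(X_test)        # reuse the train min/max

print(X_train_scaled)
print(X_test_scaled)  # can fall outside [0, 1] if test values exceed the train range
```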

Now, let's define the Gradient Boosting Classifier and its hyperparameters. Next, we will fit the model to the training data.

# Define Gradient Boosting Classifier with hyperparameters
gbc = GradientBoostingClassifier(n_estimators=500, learning_rate=0.05, random_state=100, max_features=5)

# Fit train data to GBC
gbc.fit(X_train, y_train)

The model has been trained, and we can now look at the outputs.

Below is the model's confusion matrix, which reports the number of correct classifications and misclassifications.

# The confusion matrix gives the number of correct and incorrect classifications

print(confusion_matrix(y_test, gbc.predict(X_test)))

The Gradient Boosting Classifier misclassified 1334 observations, compared to 8302 correct classifications. The model has performed decently.

Let's check the accuracy:

# Accuracy of the model
print("GBC accuracy is %2.2f" % accuracy_score(y_test, gbc.predict(X_test)))

Let's check the classification report as well:

from sklearn.metrics import classification_report

pred=gbc.predict(X_test)

print(classification_report(y_test, pred))

The accuracy is 86%, which is quite good. You can improve it by tuning the hyperparameters or by processing the data to remove outliers.

Even so, this example conveys the basic idea behind gradient boosting and how it works.


Tuning n_estimators and Learning Rate

n_estimators is the number of trees (weak learners) to add to the model. There is no single optimal learning rate. Lower values generally work better, provided we train enough trees. However, a large number of trees can be computationally expensive, which is why I have tried only a few tree counts here.
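`staged_predict` offers a way to see how `n_estimators` and `learning_rate` interact without refitting for every tree count. scikit-learn's `GradientBoostingClassifier` provides it to yield predictions after each added tree. Below is a minimal sketch on synthetic data. The dataset and parameter values are hypothetical, not the income data.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

X, y = make_classification(n_samples=1000, n_features=10, random_state=0)
X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.2, random_state=0)

for lr in (0.01, 0.1):
    model = GradientBoostingClassifier(n_estimators=300, learning_rate=lr, random_state=0)
    model.fit(X_tr, y_tr)
    # Accuracy after 1, 2, ..., 300 trees, without refitting
    scores = [accuracy_score(y_te, pred) for pred in model.staged_predict(X_te)]
    best_n = int(np.argmax(scores)) + 1
    print(f"learning_rate={lr}: best accuracy {max(scores):.3f} with {best_n} trees")
```

In practice, pick the number of trees on a separate validation split rather than on the test set, so the final test score stays unbiased.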

from sklearn.model_selection import GridSearchCV

grid = {'learning_rate': [0.01, 0.05, 0.1], 'n_estimators': np.arange(100, 500, 100)}

gb = GradientBoostingClassifier()
gb_cv = GridSearchCV(gb, grid, cv=4)
gb_cv.fit(X_train, y_train)
print("Best Parameters:", gb_cv.best_params_)
print("Best CV Score:", gb_cv.best_score_)  # mean cross-validated accuracy
print("Test Score:", gb_cv.score(X_test, y_test))

After tuning n_estimators and the learning rate, the accuracy increased from 86% to 89%. The true positive and true negative rates also improved.

We can also tune the max_depth parameter, which you have probably seen in decision trees and random forests.

grid = {'max_depth':[2,3,4,5,6,7] }

gb = GradientBoostingClassifier(learning_rate=0.1, n_estimators=400)
gb_cv = GridSearchCV(gb, grid, cv=4)
gb_cv.fit(X_train, y_train)
print("Best Parameters:", gb_cv.best_params_)
print("Best CV Score:", gb_cv.best_score_)  # mean cross-validated accuracy
print("Test Score:", gb_cv.score(X_test, y_test))

The accuracy increased further when we tuned max_depth.

I hope you now understand how the gradient boosting algorithm works under the hood. I have tried to show you the math behind it as simply as possible. Several other gradient boosting implementations can improve model performance further, such as XGBoost, LightGBM and CatBoost. I will cover these in upcoming articles.

I hope you liked the article! The gradient boosting regressor is a powerful tool in machine learning. Gradient boosting machines (GBM) can significantly improve predictive accuracy, which makes them valuable for data analysis.

Key Takeaways:

- Gradient boosting builds models sequentially to reduce the errors of previous iterations.

- The algorithm minimizes a loss function by adding weak learners using gradient descent.

- Pseudo-residuals and decision trees fitted on those residuals are key components of the process.

- Hyperparameter tuning, especially of n_estimators and the learning rate, can significantly improve model performance.