Remember these 4 steps:

- Pick your base model (any) from Hugging Face

- Add your data: JSON, CSV, or an existing dataset on Hugging Face

- Pick the input and output columns

- Choose how many hours you’d like to train for

That’s all you need to do to train a model on Gradients.
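To make those four choices concrete, here is a minimal sketch of the information a training job carries. The `TrainingJob` class, its field names, and the column names are hypothetical illustrations, not the Gradients API; the model and dataset ids are the ones used in the AWS comparison later in this article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainingJob:
    """Hypothetical container for the four choices; NOT the Gradients API."""
    base_model: str      # any Hugging Face model id
    dataset: str         # a JSON/CSV file or a Hugging Face dataset id
    input_column: str    # column the model reads
    output_column: str   # column the model learns to produce
    hours: float         # training time budget

    def validate(self) -> None:
        if not self.base_model or not self.dataset:
            raise ValueError("base_model and dataset are required")
        if self.input_column == self.output_column:
            raise ValueError("input and output columns must differ")
        if self.hours <= 0:
            raise ValueError("hours must be positive")


job = TrainingJob(
    base_model="unsloth/Llama-3.1-8B",
    dataset="euclaise/logician",
    input_column="instruction",  # assumed column name, for illustration only
    output_column="response",    # assumed column name, for illustration only
    hours=4,
)
job.validate()  # raises ValueError if any of the four choices is unusable
```

Everything else (rank, learning rate, scheduler, hardware) is deliberately absent from the sketch, since those are the decisions left to the miners.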

Wondering about all those technical parameters?

- LoRA rank, alpha, dropout, weight decay

- Curriculum learning, batch size, early stopping

- Learning rate, scheduler, optimiser, epoch count

- GPU type and count, quantisation options

Feel that headache coming? What to pick, and will the defaults do?

With Gradients, all of these things are handled for you by a decentralised army of some of the smartest minds in AI. These ‘miners’ are AI experts who run powerful computers and algorithms dedicated to training and optimising models, competing to produce the best results for your specific task. Once the time limit you set is reached, the models the miners trained for your task are compared against each other and you’re delivered the one with the best results.
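The selection step described above can be sketched in a few lines. This is an illustration under stated assumptions, not the network's actual validation code: the `Submission` class and `pick_winner` function are hypothetical names, the loss values are made up, and the sketch assumes every model is scored by loss on the same held-out test set, where lower is better.

```python
from dataclasses import dataclass


@dataclass
class Submission:
    miner_id: str
    test_loss: float  # loss on the shared held-out test set; lower is better


def pick_winner(submissions):
    """Return the submission with the lowest held-out test loss."""
    if not submissions:
        raise ValueError("no submissions to compare")
    return min(submissions, key=lambda s: s.test_loss)


# Made-up example values, for illustration only.
submissions = [
    Submission("miner-a", 0.21),
    Submission("miner-b", 0.06),
    Submission("miner-c", 0.12),
]
winner = pick_winner(submissions)
print(winner.miner_id)
```

Scoring on data the miners never trained on is what makes the comparison meaningful: a model that merely memorised the training set would not win.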

So Jeff gives it a whirl. 4 clicks and 5 minutes later the job is Brrring… he sets the timer and goes for lunch. When he returns, he has a link and a model.

Easier ✅ but is it better?

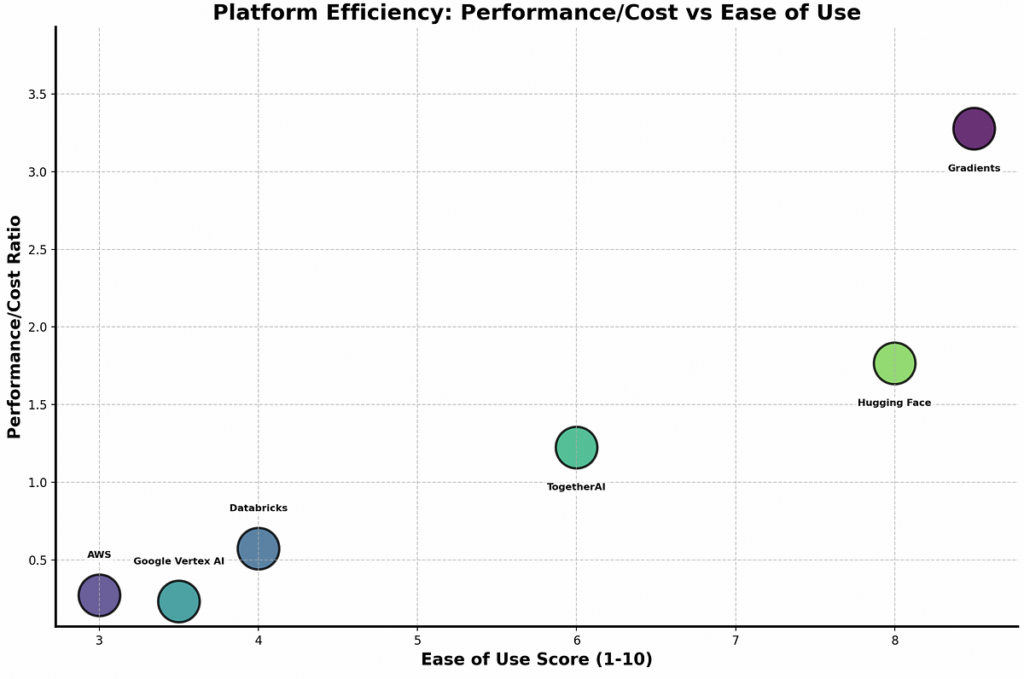

If you were to run Jeff’s job on Google Vertex AI, Hugging Face AutoTrain, Databricks, Together.AI and AWS SageMaker (basically all of the competition), you’d notice two things:

1) They’re much more difficult to set up and run, and would cost much more than running on Gradients, and

2) The model you’d get as output would perform much worse than the one from Gradients.

4 Clicks and a Nap: Gradients Made Jeff’s AI Smarter Than Google’s.

We set out to put Gradients through thorough testing against every major player in the AI fine-tuning space.

For each experiment, we started with the same ingredients everyone had access to:

- Standard datasets from Hugging Face

- Popular foundation models as starting points

- Identical train-test splits for fair comparison

First, we measured how well each base model performed without any training, establishing our baseline. Then came the real test: we handed the same training data and model to every platform that could handle it. AWS SageMaker, Google Vertex AI, Databricks, Hugging Face, TogetherAI: all of them got their shot.

Same data. Same starting model. Same goal.

Train the best model you can that generalises to the unseen test set.

Nearly 100 experiments later, Gradients hasn’t lost once.

Take one AWS comparability, for instance.

Using the unsloth/Llama-3.1-8B base model on the euclaise/logician dataset:

- The base model’s loss: 1.735

- AWS SageMaker’s fine-tuned model loss: 0.189

- Gradients’ fine-tuned model loss: 0.060

That’s more than 3× lower test loss from Gradients compared to AWS, and a 96.5% improvement over the base model, all while being significantly more affordable and user-friendly.
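The two headline figures follow directly from the three losses quoted above. A short check of the arithmetic (the variable names are ours; the numbers are the ones reported in this comparison):

```python
base_loss = 1.735       # base model, no fine-tuning
sagemaker_loss = 0.189  # AWS SageMaker fine-tuned model
gradients_loss = 0.060  # Gradients fine-tuned model

# How many times lower the Gradients loss is than the SageMaker loss.
ratio_vs_sagemaker = sagemaker_loss / gradients_loss

# Relative reduction in loss compared with the untrained base model.
improvement_over_base = (base_loss - gradients_loss) / base_loss * 100

print(f"{ratio_vs_sagemaker:.2f}x lower loss than SageMaker")        # 3.15x
print(f"{improvement_over_base:.1f}% lower loss than the base model")  # 96.5%
```

Both figures are statements about test loss, so "better" here means "lower loss on the unseen test set".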

As you can see in the performance charts below, Gradients delivers more than 65% improvement over baseline models, outperforming every major competitor while costing significantly less.

How Big Tech Turned Fine-Tuning into Flat-Lining

The numbers don’t lie. But they do raise the question: why are these tech giants, with their massive R&D budgets and armies of PhDs, consistently falling short against Gradients’ decentralised approach?

We think the answer lies in the fundamental structural limitations that have plagued traditional fine-tuning platforms from the start:

1. One-size-fits-all algorithms. These platforms apply identical optimisation strategies to wildly different types of data. It’s like performing brain surgery and oil changes with the same wrench: technically possible, but far from optimal. While your geological dataset requires specialised handling, it gets the same generic treatment as everyone else’s.

2. Innovation lag. Their hyperparameter tuning strategies haven’t kept pace with AI research that’s moving at warp speed. By the time they implement a breakthrough technique like DoRA or RSLoRA, it’s already yesterday’s news. Meanwhile, Gradients’ miners are implementing cutting-edge methods within hours of publication.

3. Generalist infrastructure. Big Tech platforms prioritise making things work everywhere rather than performing brilliantly anywhere. They’ve created systems that whisper in every language instead of singing in yours: reliable but mediocre across all domains rather than exceptional in yours.

4. Knowledge silos. Any meaningful improvements they develop remain locked behind corporate doors, taking quarters to roll out. They schedule innovation by roadmaps; our miners compete to outdo each other by breakfast. What’s discovered on Monday is deployed by Tuesday.

This isn’t just about technological differences; it’s about incentive structures. Traditional platforms optimise for predictable business metrics; Gradients optimises for your model’s performance.

Three months later, Jeff sits in the same chair but with a very different expression. The quarterly executive review is showing GeoX’s new AI accuracy metrics on screen:

“Model accuracy: 65% improvement over baseline”

“Performance vs Google Vertex: 4.8% better results at a fraction of the cost”

“Performance vs AWS: 3× better test results on reasoning tasks”

The CTO glances down at his notes. Not only was the AI now correctly identifying which wells contained what resources, but it was delivering consistently better results than models fine-tuned on platforms costing hundreds more.

“What changed?” asks the CEO.

“Well,” Jeff explains. “When you have dozens of specialised miners competing to optimise your model, they find patterns in geological data that generic fine-tuning algorithms miss. The winning miner combined advanced LoRA configurations with adaptive learning strategies that the big platforms haven’t implemented yet.”

His favourite part? He never had to understand what any of that meant. Four clicks, four hours, and the decentralised network handled the rest.

*This scenario illustrates an individual real-world performance improvement measured in our cross-platform comparison tests.

The Gradients Advantage

1. Specialised optimisation:

Every AI task gets bespoke treatment from miners who are competing tooth and nail to deliver the best possible models.

2. Cutting-edge techniques:

The network constantly absorbs the latest breakthroughs in fine-tuning, from advanced LoRA configurations to brand-new optimisation techniques in curriculum learning and adaptive dropout.

3. Parallel experimentation:

Multiple miners tackle different approaches simultaneously, massively expanding the search space. More cooks? Better broth in this case.

4. Economic incentives:

When your model performs better, they earn more. The invisible hand isn’t so invisible when it’s handing out tokens.

Miners aren’t grinding away for a paycheck; they’re in a race where only the best gets the gold.

Like any innovative approach, Gradients comes with its own set of trade-offs. While the performance advantages are undeniable, transparency demands we address the aspects that might not fit every use case:

Public By Default: Your Success Becomes The Community’s

The winning model becomes publicly available on Hugging Face. For many, this is a feature, not a bug: your fine-tuned model joins a growing library of specialised solutions, potentially helping others facing similar challenges.

However, if your data contains proprietary information or your competitive advantage relies on keeping your model secret, this open approach requires careful consideration. Your training data is shared with miners for training purposes, though it’s not publicly released. The resulting fine-tuned model, however, becomes available to the broader community.

The Black Box Brilliance: Surrendering Parameter Control

“Set it and forget it” comes with a price: you’re handing the technical controls to the miners. Those hyperparameters that gave you headaches? You’re not tweaking them yourself.

The upside? Their expertise consistently delivers better results than what most organisations could achieve through manual tuning. Sometimes the best way to maintain control is knowing when to let go.

The Data Threshold: Not For Tiny Datasets

Gradients requires at least 2,500 rows of data. This threshold ensures miners have enough information to meaningfully compete and differentiate their approaches.
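If you want to check a CSV against that threshold before submitting, a minimal sketch could look like the following. The function names and the sample column names are our own, and the sketch assumes one training example per CSV row with a header line; only the 2,500 figure comes from the requirement above.

```python
import csv
import io

MIN_ROWS = 2500  # minimum dataset size stated above


def count_rows(csv_file) -> int:
    """Count data rows (excluding the header) in an open CSV file."""
    return sum(1 for _ in csv.DictReader(csv_file))


def is_large_enough(csv_file, min_rows: int = MIN_ROWS) -> bool:
    """True if the CSV has at least min_rows data rows."""
    return count_rows(csv_file) >= min_rows


# In-memory stand-in for a real file: a header plus 2,499 identical rows.
sample = io.StringIO("question,answer\n" + "q,a\n" * 2499)
print(is_large_enough(sample))  # False: one row short of the threshold
```

For a file on disk, you would pass `open(path, newline="")` instead of the `io.StringIO` stand-in.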

For most business applications, this requirement poses no obstacle. But for niche use cases with limited examples, it’s an important consideration in your AutoML strategy.

The Bottom Line: Power Comes With Perspective

These limitations aren’t defects; they’re conscious design choices that enable Gradients’ remarkable performance advantages. For the vast majority of users seeking state-of-the-art fine-tuning without the technical overhead, these considerations are far outweighed by the 65%+ performance improvements and four-click simplicity that Gradients delivers.

Behind Gradients’ performance lies Bittensor, a decentralised machine learning network that is changing how AI models are trained and deployed.

Bittensor is a blockchain protocol that connects AI systems across a peer-to-peer network, allowing them to exchange value based on the quality of intelligence they provide. Unlike traditional centralised AI systems, Bittensor creates an open market where:

- Miners (AI experts who train your model) compete to deliver the best AI solutions

- Validators evaluate and reward performance based on objective metrics

- Token incentives (TAO) align everyone’s interests towards producing better AI. These TAO tokens have real financial value, creating a genuine economic incentive for miners to continuously improve their algorithms.

For Gradients users, this means access to a global supercomputer optimised specifically for AutoML tasks. When you submit your dataset and model, it isn’t processed by a single algorithm on a single cloud; it’s analysed by a competitive ecosystem of specialised algorithms fighting to deliver you the best possible model.

The economic incentives ensure that only the best-performing miners receive rewards, creating a Darwinian selection process that continuously improves the network’s capabilities. This is why Gradients consistently outperforms centralised alternatives: it harnesses collective intelligence driven by market forces rather than corporate roadmaps.

While most platforms apply a one-size-fits-all approach to fine-tuning, our top-performing miners have developed sophisticated strategies specific to different data domains and model architectures. Although secret sauce is usually kept secret, we’ve been given some nuggets to share:

Adaptive Rank Selection LoRA

Instead of fixed ranks across all adapter matrices, top miners dynamically assign different ranks to different layers based on attention pattern analysis. For language tasks requiring factual precision, they assign higher ranks to middle transformer layers while using minimal adaptation for embedding layers.
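The miners’ actual selection logic is not public, so the sketch below only illustrates the shape of the idea. It swaps the attention pattern analysis for a much simpler positional rule (the middle third of the layers gets a higher rank), and the rank values, module names, and function names are all assumptions made for the example.

```python
def rank_for_layer(layer_idx: int, num_layers: int,
                   low_rank: int = 8, high_rank: int = 64) -> int:
    """Positional stand-in for attention analysis: middle third gets high rank."""
    start, end = num_layers // 3, 2 * num_layers // 3
    return high_rank if start <= layer_idx < end else low_rank


def build_rank_pattern(num_layers: int, modules=("q_proj", "v_proj")) -> dict:
    """Map each adapted module name to its LoRA rank.

    Embedding layers are left out entirely, which corresponds to giving
    them no adapter at all (the minimal-adaptation end of the spectrum).
    """
    return {
        f"layers.{i}.self_attn.{m}": rank_for_layer(i, num_layers)
        for i in range(num_layers)
        for m in modules
    }


pattern = build_rank_pattern(num_layers=32)
print(pattern["layers.0.self_attn.q_proj"])   # 8  (early layer, low rank)
print(pattern["layers.16.self_attn.q_proj"])  # 64 (middle layer, high rank)
```

A name-to-rank mapping like this is the kind of per-layer override that adapter libraries can accept (PEFT’s `LoraConfig` has a `rank_pattern` option for this purpose), though the exact module names depend on the model being tuned.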

Learning Rate Optimisation

One standout miner built a custom learning rate finder that automatically identifies optimal rates for different parameter groups, allowing more aggressive adaptation in task-specific layers while preserving foundational knowledge elsewhere.
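That miner’s finder has not been published, so the following is only a toy illustration of the principle: try several candidate rates per parameter group and keep the one that ends with the lowest loss. Each group is modelled as a one-dimensional quadratic loss with its own curvature; the group names, curvatures, and candidate rates are hypothetical.

```python
def final_loss(curvature: float, lr: float, steps: int = 20, w0: float = 1.0) -> float:
    """Run gradient descent on loss = 0.5 * curvature * w**2; return the final loss."""
    w = w0
    for _ in range(steps):
        w -= lr * curvature * w  # the gradient of the quadratic is curvature * w
    return 0.5 * curvature * w * w


def find_lr(curvature: float, candidates) -> float:
    """Pick the candidate learning rate that reaches the lowest final loss."""
    return min(candidates, key=lambda lr: final_loss(curvature, lr))


candidates = [10 ** e for e in (-4, -3, -2, -1, 0)]

# Hypothetical groups: a flat task head tolerates large steps, while a
# sharply curved backbone diverges unless the rate is kept small.
groups = {"task_head": 1.0, "backbone": 50.0}
best = {name: find_lr(c, candidates) for name, c in groups.items()}
print(best)
```

In this toy setup the task head ends up with a rate 100 times larger than the backbone, which mirrors the pattern described above: aggressive updates where the task is learned, cautious ones where pretrained knowledge lives.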

Scheduler Sophistication

Rather than static training schedules, winning approaches often implement dynamic decay patterns that respond to validation metrics. These sophisticated schedulers help prevent overfitting while ensuring convergence, which is particularly crucial for domain-specific data with limited examples.
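One well-known scheduler of this kind is reduce-on-plateau, which cuts the learning rate when the validation loss stops improving. The sketch below is a minimal pure-Python version with made-up loss values; it shows the general technique, not what any particular miner runs, and the class name and defaults are our own.

```python
class PlateauScheduler:
    """Multiply the learning rate by `factor` after `patience` epochs without improvement."""

    def __init__(self, lr: float, factor: float = 0.5,
                 patience: int = 2, min_lr: float = 1e-6):
        self.lr = lr
        self.factor = factor
        self.patience = patience
        self.min_lr = min_lr
        self.best = float("inf")  # best validation loss seen so far
        self.bad_epochs = 0       # consecutive epochs without a new best

    def step(self, val_loss: float) -> float:
        if val_loss < self.best:
            self.best = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
            if self.bad_epochs >= self.patience:
                self.lr = max(self.lr * self.factor, self.min_lr)
                self.bad_epochs = 0
        return self.lr


sched = PlateauScheduler(lr=1e-3)
val_losses = [0.9, 0.7, 0.71, 0.72, 0.65, 0.66, 0.67]  # made-up values
history = [sched.step(v) for v in val_losses]
print(history)  # the rate halves after epochs 4 and 7, each following two stalled epochs
```

PyTorch ships a production version of the same idea as `torch.optim.lr_scheduler.ReduceLROnPlateau`.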

As AI becomes essential to every industry, the gap between mediocre and exceptional model performance will directly impact companies’ bottom lines. It’s harsh but true: companies that settle for second-rate AutoML are essentially choosing to make worse decisions based on dodgy outputs.

The question isn’t whether you should use AutoML; it’s whether you can afford to use anything less than the best-performing AutoML platform in the world.

Got a challenging dataset you’ve been wanting to fine-tune? We’d love to see what Gradients can do with it.

Gradients: gradients.io

Git: https://github.com/rayonlabs/G.O.D

Discord: https://discord.gg/aXNv6yKg

RayonLabs: https://rayonlabs.ai/