GPT-4o-based agents led by a human collaborated to develop experimentally validated nanobodies against SARS-CoV-2. Discover with me how we’re entering the era of automated reasoning and work with actionable outputs, and let’s imagine how this could go even further when robotic lab technicians can also carry out the wet-lab parts of any research project!

In an era where artificial intelligence continues to reshape how we code, write, and even reason, a new frontier has emerged: AI conducting real scientific research, as several companies (from major established players like Google to dedicated spin-offs) are trying to achieve. And we’re not talking just about simulations, automated summarization, data crunching, or theoretical outputs, but about producing experimentally validated materials, such as biological designs with potential clinical relevance, as in the case I bring you today.

That future just got much closer; very close indeed!

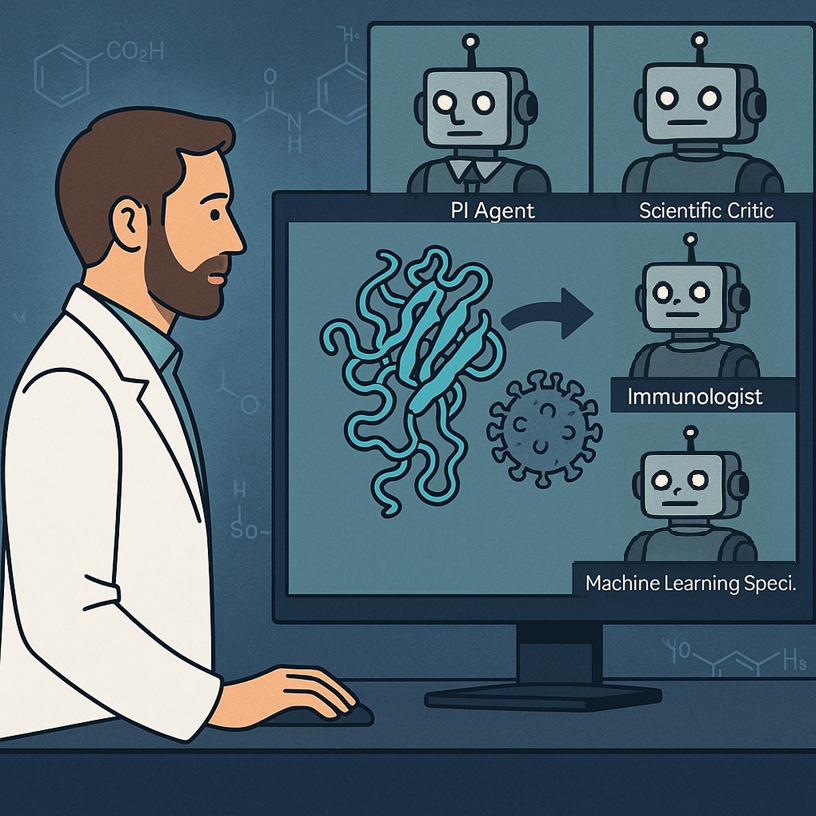

In a groundbreaking paper just published in Nature by researchers from Stanford and the Chan Zuckerberg Biohub, a novel system called the Virtual Lab demonstrated that a human researcher working with a team of large language model (LLM) agents can design new nanobodies (tiny, antibody-like proteins that can bind other proteins to block their function) to target fast-mutating variants of SARS-CoV-2. This was not just a narrow chatbot interaction or a tool-assisted paper; it was an open-ended, multi-phase research process led and executed by AI agents, each with a specialized expertise and role, resulting in real-world validated biological molecules that could perfectly well move on to downstream studies for actual applicability in disease (in this case Covid-19) treatment.

Let’s delve into it to see how this serious, replicable approach to AI-human (and actually AI-AI) collaborative science works.

From “simple” applications to materializing a full-staff AI lab

Although there are some precedents to this, the new system is unlike anything before it. And one of the coolest things is that it isn’t based on a special-purpose trained LLM or tool; rather, it uses GPT-4o instructed with prompts that make it play the role of the different kinds of people usually involved in a research team.

Until recently, the role of LLMs in science was limited to question-answering, summarizing, writing support, coding and perhaps some direct data analysis. Useful, yes, but not transformative. The Virtual Lab presented in this new Nature paper changes that by elevating LLMs from assistants to autonomous researchers that interact with one another and with a human user (who brings the research question, runs experiments when required, and eventually concludes the project) in structured meetings to explore, hypothesize, code, analyze, and iterate.

The core idea at the heart of this work was indeed to simulate an interdisciplinary lab staffed by AI agents. Each agent has a scientific role (say, immunologist, computational biologist, or machine learning specialist) and is instantiated from GPT-4o with a “persona” crafted via careful prompt engineering. These agents are led by a Principal Investigator (PI) Agent and monitored by a Scientific Critic Agent, both virtual agents.

The Critic Agent challenges assumptions and pinpoints errors, acting as the lab’s skeptical reviewer; as the paper explored, this was a key element of the workflow, without which too many errors and oversights occurred, to the detriment of the project.

The human researcher sets high-level agendas, injects domain constraints, and ultimately runs the outputs (notably the wet-lab experiments). But the “thinking” (maybe I should start thinking about removing the quotation marks?) is done by the agents.

How it all worked

The Virtual Lab was tasked with solving a real and urgent biomedical challenge: design new nanobody binders for the KP.3 and JN.1 variants of SARS-CoV-2, which had evolved resistance to existing treatments. Instead of starting from scratch, the AI agents decided (yes, they took this decision by themselves) to mutate existing nanobodies that were effective against the ancestral strain but no longer worked as well. Yes, they chose that!

As all interactions are tracked and documented, we can see exactly how the team moved forward with the project.

First of all, the human defined only the PI and Critic agents. The PI agent then created the scientific team by spawning specialized agents. In this case they were an Immunologist, a Machine Learning Specialist and a Computational Biologist. In a team meeting, the agents debated whether to design antibodies or nanobodies, and whether to design from scratch or mutate existing ones. They chose nanobody mutation, justified by faster timelines and available structural data. They then discussed what tools to use and how to implement them. They went for the ESM protein language model coupled to AlphaFold-Multimer for structure prediction and Rosetta for binding energy calculations. For implementation, the agents decided to go with Python code. Very interestingly, the code needed to be reviewed by the Critic agent multiple times, and was refined through multiple asynchronous meetings.

From the meetings and multiple runs of code, an exact strategy was devised on how to propose the final set of mutations to be tested. Briefly, for the bioinformaticians reading this post, the PI agent designed an iterative pipeline that uses ESM to score all point mutations on a nanobody sequence by log-likelihood ratio, selects top mutants to predict their structures in complex with the target protein using AlphaFold-Multimer, scores the interfaces via ipLDDT, then uses Rosetta to estimate binding energy, and finally combines the scores to come up with a ranking of all proposed mutations. This was actually repeated in a cycle, to introduce more mutations as needed.
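To make the last step of that pipeline more concrete, here is a minimal Python sketch of how three such scores could be combined into one ranking. It assumes the ESM log-likelihood ratio, the AlphaFold-Multimer ipLDDT and the Rosetta binding energy have already been computed elsewhere. The `Candidate` class, the `rank_candidates` function, the min-max normalization, the weights and the example numbers are all hypothetical choices of mine for illustration, not the values or code the agents actually produced.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    mutation: str      # illustrative label only
    esm_llr: float     # ESM log-likelihood ratio, higher is better
    ip_lddt: float     # interface pLDDT from AlphaFold-Multimer, higher is better
    rosetta_dg: float  # Rosetta binding energy, more negative is better


# Hypothetical weights: assumptions for illustration, not the paper's values.
WEIGHTS = {"esm_llr": 0.2, "ip_lddt": 0.5, "rosetta_dg": 0.3}


def min_max(values):
    """Rescale a list of numbers to [0, 1]; constant lists map to 0.5."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.5 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def rank_candidates(candidates):
    """Return (candidate, combined_score) pairs sorted from best to worst."""
    if not candidates:
        return []
    llr = min_max([c.esm_llr for c in candidates])
    lddt = min_max([c.ip_lddt for c in candidates])
    # Negate the binding energy so "more negative" becomes "higher is better".
    dg = min_max([-c.rosetta_dg for c in candidates])
    scored = [
        (c, WEIGHTS["esm_llr"] * a + WEIGHTS["ip_lddt"] * b + WEIGHTS["rosetta_dg"] * d)
        for c, a, b, d in zip(candidates, llr, lddt, dg)
    ]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    demo = [
        Candidate("mutA", esm_llr=1.2, ip_lddt=80.0, rosetta_dg=-40.0),
        Candidate("mutB", esm_llr=0.3, ip_lddt=65.0, rosetta_dg=-25.0),
        Candidate("mutC", esm_llr=2.0, ip_lddt=70.0, rosetta_dg=-35.0),
    ]
    for cand, score in rank_candidates(demo):
        print(f"{cand.mutation}: {score:.3f}")
```

In an iterative setting, the top-ranked candidates from one round would become the starting sequences for the next round of point mutations.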

The computational pipeline generated 92 nanobody sequences that were synthesized and tested in a real-world lab, finding that most of them were actually proteins that can be produced and handled. Two of these proteins gained affinity for the SARS-CoV-2 proteins they were designed to bind, both modern mutants and ancestral forms.

These success rates are similar to those of analogous projects run in the traditional way (that is, executed by humans), but they took much, much less time to achieve. And it hurts to say it, but I’m pretty sure the Virtual Lab entailed much lower costs overall, as it involved far fewer people (hence salaries).

Like in a human team of scientists: meetings, roles, and collaboration

We saw above how the Virtual Lab mimics how human science happens: via structured interdisciplinary meetings. Each meeting is either a “Team Meeting”, where multiple agents discuss broad questions (the PI starts, others contribute, the Critic reviews, and the PI summarizes and decides); or an “Individual Meeting”, where a single agent (with or without the Critic) works on a specific task, e.g., writing code or scoring outputs.
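The turn order of a team meeting can be sketched roughly as follows. This is only an illustration under my own assumptions: the `Agent` class, the `team_meeting` function, the prompt wording and the `echo_llm` stand-in (used so the sketch runs without any API access) are hypothetical and are not taken from the paper’s code.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

# Hypothetical model interface: (system_prompt, conversation_so_far) -> reply.
LLM = Callable[[str, str], str]


@dataclass(frozen=True)
class Agent:
    title: str
    expertise: str

    def system_prompt(self) -> str:
        # The "persona" is just a role description given to the same base model.
        return f"Your role: {self.title}. Your expertise: {self.expertise}."


def team_meeting(agenda: str, pi: Agent, members: List[Agent], critic: Agent,
                 llm: LLM, rounds: int = 1) -> List[Tuple[str, str]]:
    """Run a team meeting and return the transcript as (speaker, text) pairs."""
    transcript: List[Tuple[str, str]] = [("Human", agenda)]

    def speak(agent: Agent, instruction: str) -> None:
        history = "\n".join(f"{who}: {text}" for who, text in transcript)
        reply = llm(agent.system_prompt(), f"{history}\n\n{instruction}")
        transcript.append((agent.title, reply))

    for _ in range(rounds):
        speak(pi, "Open the discussion and pose questions to the team.")
        for member in members:
            speak(member, "Give your view on the agenda.")
        speak(critic, "Point out errors and weak assumptions.")
    # The PI closes the meeting with a summary and a decision.
    speak(pi, "Summarize the meeting and state the decision.")
    return transcript


def echo_llm(system_prompt: str, conversation: str) -> str:
    """Offline stand-in for a real model call."""
    return f"[reply generated under persona: {system_prompt}]"


if __name__ == "__main__":
    pi = Agent("Principal Investigator", "running a research lab")
    team = [Agent("Immunologist", "antibody engineering"),
            Agent("Machine Learning Specialist", "protein language models")]
    critic = Agent("Scientific Critic", "rigorous review")
    for who, text in team_meeting("Design nanobodies", pi, team, critic, echo_llm):
        print(f"{who}: {text}")
```

An individual meeting would be the same loop with a single member and an optional critic turn.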

To avoid hallucinations and inconsistency, the system also uses parallel meetings; that is, the same task is run multiple times with different randomness (i.e. at high “temperature”). Interestingly, the results from these multiple meetings are then condensed in a single low-temperature merge meeting that is much more deterministic and can quite safely decide which conclusions, among all those coming from the various meetings, make more sense.
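The parallel-then-merge idea is simple enough to sketch in a few lines. In the snippet below, the `parallel_then_merge` function, the `ToyLLM` stand-in, the merge prompt and the two temperature values are all my own assumptions for illustration; a real setup would replace `ToyLLM` with an actual model call that accepts a temperature.

```python
from typing import Callable, List, Tuple

# Hypothetical model interface: (prompt, temperature) -> text.
LLM = Callable[[str, float], str]


def parallel_then_merge(task: str, llm: LLM, n_parallel: int = 3,
                        high_temp: float = 0.8,
                        low_temp: float = 0.2) -> Tuple[List[str], str]:
    """Run the same task several times at high temperature, then merge once at low temperature."""
    # Diverse drafts: same prompt, more randomness.
    drafts = [llm(task, high_temp) for _ in range(n_parallel)]
    numbered = "\n".join(f"Draft {i + 1}: {d}" for i, d in enumerate(drafts))
    merge_prompt = (
        f"Task: {task}\n{numbered}\n"
        "Merge the best parts of these drafts into one answer."
    )
    # Single, more deterministic pass that reconciles the drafts.
    merged = llm(merge_prompt, low_temp)
    return drafts, merged


class ToyLLM:
    """Offline stand-in that records every call instead of querying a model."""

    def __init__(self) -> None:
        self.calls: List[Tuple[str, float]] = []

    def __call__(self, prompt: str, temperature: float) -> str:
        self.calls.append((prompt, temperature))
        return f"reply {len(self.calls)} at T={temperature}"


if __name__ == "__main__":
    toy = ToyLLM()
    drafts, merged = parallel_then_merge("Pick a design strategy", toy)
    print(drafts)
    print(merged)
```

The design choice here is to spend randomness where diversity helps (exploring options) and remove it where consistency matters (the final decision).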

Clearly, these ideas can be applied to any other kind of multi-agent interaction, and for any purpose!

How much did the humans do?

Surprisingly little, at least for the computational part, since the experiments can’t be automated much yet (although keep reading to find some reflections about robots in labs!).

In this Virtual Lab round, LLM agents wrote 98.7% of the total words (over 120,000 tokens), while the human researcher contributed just 1,596 words in total across the entire project. The agents wrote all the scripts for ESM, AlphaFold-Multimer post-processing, and Rosetta XML workflows. The human only helped run the code and facilitated the real-world experiments. The Virtual Lab pipeline was built in 1-2 days of prompting and meetings, and the nanobody design computation ran in ~1 week.
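As a quick sanity check on those figures, and assuming that the 98.7% share and the 1,596 human-written words refer to the same overall count, the total and the agents’ contribution work out to roughly:

$$
N_{\text{total}} \approx \frac{1596}{1 - 0.987} = \frac{1596}{0.013} \approx 122{,}800,
\qquad
N_{\text{agents}} \approx 0.987 \times 122{,}800 \approx 121{,}200
$$

That is consistent with the “over 120,000” quoted above, keeping in mind that the percentage is rounded.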

Why this matters (and what comes next)

The Virtual Lab could serve as the prototype for a fundamentally new research model, and actually for a fundamentally new way to work, where everything that can be done on a computer is left automated, with humans only taking the most critical decisions. LLMs are clearly moving from passive tools to active collaborators that, as the Virtual Lab shows, can drive complex, interdisciplinary projects from idea to implementation.

The next ambitious leap? Replace the hands of human technicians, who ran the experiments, with robotic ones. Clearly, the next frontier is in automating the physical interaction with the real world, which is precisely what robots are for. Imagine then the full pipeline as applied to a research lab:

- A human PI defines a high-level biological goal.

- The team researches existing knowledge, scans databases, brainstorms ideas.

- A set of AI agents selects computational tools if required, writes and runs code and/or analyses, and finally proposes experiments.

- Then, robotic lab technicians, rather than human technicians, carry out the protocols: pipetting, centrifuging, plating, imaging, data collection.

- The results flow back into the Virtual Lab, closing the loop.

- Agents analyze, adapt, iterate.

This would make the research process truly end-to-end autonomous. From problem definition to experiment execution to result interpretation, all components would be run by an integrated AI-robotics system with minimal human intervention: just high-level steering, supervision, and global vision.
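The imagined loop above (propose, execute, analyze, repeat) can be written down as a tiny control loop. Everything in this sketch is hypothetical: `closed_loop` and the three `toy_*` stand-ins are names I made up, and the “measurements” are fake integers chosen so the example terminates. In a real system, the propose and analyze steps would be agent meetings and the execute step would be a robotic (or human) lab.

```python
from typing import Callable, Dict, List


def closed_loop(goal: str,
                propose: Callable[[str, List[dict]], List[str]],
                run_experiments: Callable[[List[str]], Dict[str, int]],
                analyze: Callable[[str, Dict[str, int]], dict],
                max_cycles: int = 3) -> List[dict]:
    """Iterate propose -> execute -> analyze until the analysis reports success."""
    history: List[dict] = []
    for cycle in range(1, max_cycles + 1):
        protocols = propose(goal, history)    # agents design the experiments
        results = run_experiments(protocols)  # technicians (robotic or human) execute them
        summary = analyze(goal, results)      # agents interpret the data
        history.append({"cycle": cycle, "protocols": protocols,
                        "results": results, "summary": summary})
        if summary.get("goal_reached"):
            break
    return history


# Toy stand-ins so the sketch runs; none of this models real lab work.
def toy_propose(goal: str, history: List[dict]) -> List[str]:
    return [f"variant-{len(history)}-{i}" for i in range(2)]


def toy_run(protocols: List[str]) -> Dict[str, int]:
    # Fake readout that improves with the cycle index embedded in the name.
    return {p: 40 + 20 * int(p.split("-")[1]) for p in protocols}


def toy_analyze(goal: str, results: Dict[str, int]) -> dict:
    best = max(results.values())
    return {"best": best, "goal_reached": best >= 80}


if __name__ == "__main__":
    for entry in closed_loop("improve binding", toy_propose, toy_run, toy_analyze, max_cycles=5):
        print(entry["cycle"], entry["summary"])
```

The `max_cycles` cap is there on purpose: it is one simple place where a human can keep control over how far the system runs unattended.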

Robotic biology labs are already being prototyped. Emerald Cloud Lab, Strateos, and Transcriptic (now part of Colabra) offer robotic wet-lab-as-a-service. Future House is a non-profit building AI agents to automate research in biology and other complex sciences. In academia, some autonomous chemistry labs exist whose robots can explore chemical space on their own. Biofoundries use programmable liquid handlers and robotic arms for synthetic biology workflows. Adaptyv Bio automates protein expression and testing at scale.

Automated laboratory systems of this kind, coupled to emerging systems like the Virtual Lab, could radically transform how science and technology progress. The intelligent layer drives the project and gives these robots work to do, and their output then feeds back into the thinking tool in a closed-loop discovery engine that could run 24/7 without fatigue or scheduling conflicts, conduct hundreds or thousands of micro-experiments in parallel, and rapidly explore vast hypothesis spaces that are simply not feasible for human labs. Moreover, the labs, virtual labs, and managers don’t even need to be physically together, which allows optimizing how resources are spread.

There are challenges, of course. Real-world science is messy and nonlinear. Robotic protocols must be highly robust. Unexpected errors still need judgment. But as robotics and AI continue to evolve, these gaps will certainly shrink.

Final thoughts

We humans were always confident that technology in the form of smart computers and robots would kick us out of our highly repetitive physical jobs, while creativity and thinking would still be our domain of mastery for decades, perhaps centuries. However, despite quite some automation via robots, the AI of the 2020s has shown us that technology can also be better than us at some of our most brain-intensive jobs.

In the near future, LLMs won’t just answer our questions or help us a little with work. They’ll ask, argue, debate, decide. And sometimes, they will discover!

References and further reads

The Nature paper analyzed here:

https://www.nature.com/articles/s41586-025-09442-9

Other scientific discoveries by AI systems: