To combat the shortcuts and risk-taking, Lorenzo is working on a tool for the San Francisco–based company DroneDeploy, which sells software that creates daily digital models of work progress from videos and images, known in the trade as “reality capture.” The tool, called Safety AI, analyzes each day’s reality capture imagery and flags conditions that violate Occupational Safety and Health Administration (OSHA) rules, with what he claims is 95% accuracy.

That means that for any safety risk the software flags, there is 95% certainty that the flag is correct and relates to a specific OSHA regulation. Launched in October 2024, it is now being deployed on hundreds of construction sites in the US, Lorenzo says, and versions tailored to the building regulations of countries including Canada, the UK, South Korea, and Australia have also been deployed.
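Read this way, the 95% figure is a precision measure: of all the flags the software raises, about 95 in 100 point to a real violation. The minimal sketch below illustrates that arithmetic, assuming the figure is computed as correct flags divided by total flags. The counts are invented for illustration and are not DroneDeploy data. It also shows what precision leaves out: violations the tool never flags, which are measured separately by recall.

```python
def precision(true_positives: int, false_positives: int) -> float:
    """Share of raised flags that point to a real violation."""
    flagged = true_positives + false_positives
    if flagged == 0:
        raise ValueError("no flags were raised")
    return true_positives / flagged


def recall(true_positives: int, false_negatives: int) -> float:
    """Share of real violations that the tool actually flagged."""
    actual = true_positives + false_negatives
    if actual == 0:
        raise ValueError("no actual violations to find")
    return true_positives / actual


# Hypothetical counts (not real data): 200 flags raised, 190 of them correct,
# and 30 real violations that were never flagged at all.
tp, fp, fn = 190, 10, 30
print(f"precision = {precision(tp, fp):.2f}")  # 190 / 200
print(f"recall    = {recall(tp, fn):.2f}")     # 190 / 220
```

With these made-up numbers, precision is 0.95 while recall is only about 0.86, which is one reason a human inspector still has to review the site.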

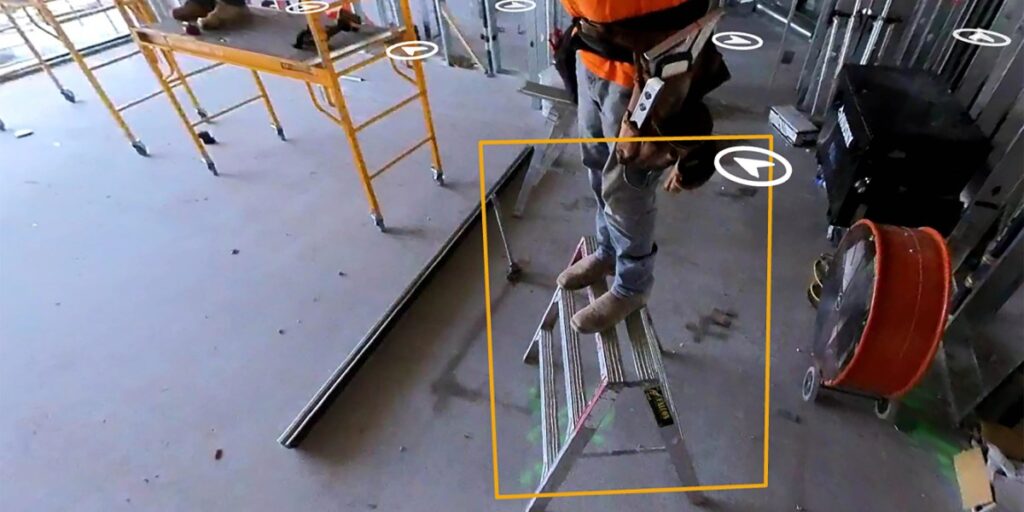

Safety AI is one of several AI construction safety tools that have emerged in recent years, from Silicon Valley to Hong Kong to Jerusalem. Many of these rely on teams of human “clickers,” often in low-wage countries, who manually draw bounding boxes around key objects like ladders in images, labeling large volumes of data to train an algorithm.

Lorenzo says Safety AI is the first such tool to use generative AI to flag safety violations, meaning an algorithm that can do more than recognize objects such as ladders or hard hats. The software can “reason” about what is going on in an image of a site and draw a conclusion about whether there is an OSHA violation. This is a more advanced form of analysis than the object detection that is the current industry standard, Lorenzo claims. But as the 95% success rate suggests, Safety AI is not a flawless, all-knowing intelligence. It requires an experienced safety inspector as an overseer.

A visual language model in the real world

Robots and AI tend to thrive in controlled, largely static environments, like factory floors or shipping terminals. But construction sites are, by definition, changing a little bit every day.

Lorenzo thinks he has built a better way to monitor sites, using a type of generative AI called a visual language model, or VLM. A VLM is an LLM with a vision encoder, which allows it to “see” images of the world and analyze what is going on in the scene.

Using years of reality capture imagery gathered from customers, with their explicit permission, Lorenzo’s team has assembled what he calls a “golden data set” encompassing tens of thousands of images of OSHA violations. Having carefully stockpiled this specific data for years, he is not worried that even a billion-dollar tech giant will be able to “copy and crush” him.

To help train the model, Lorenzo has a smaller team of construction safety pros ask the AI strategic questions. The trainers feed test scenes from the golden data set to the VLM and ask questions that guide the model through breaking down the scene and analyzing it step by step, the way an experienced human would. If the VLM does not generate the correct response (for example, if it misses a violation or registers a false positive), the human trainers go back and tweak the prompts or inputs. Lorenzo says that rather than simply learning to recognize objects, the VLM is taught “how to think in a certain way,” which means it can draw subtle conclusions about what is happening in an image.