Anomaly detection, the task of identifying out-of-the-ordinary events or data points, plays a pivotal role in cybersecurity by flagging potential threats that evade conventional defenses. This blog provides an overview of how machine learning is revolutionizing anomaly detection to bolster cyber defenses. We begin by examining the growing sophistication of cyber attacks and why classical security measures (like signature-based systems) struggle to keep up. We then explain the types of anomalies (point, contextual, collective) and how they are detected using supervised, unsupervised, and semi-supervised learning. Next, we explore advanced deep learning approaches, including Convolutional Neural Networks (CNNs) and Generative Adversarial Networks (GANs), and how they enhance detection accuracy. Real-world applications are discussed, such as their use in Intrusion Detection Systems (IDS) and network monitoring, with case studies including the Target data breach and probing attacks on institutional networks. We address challenges like false positives and the “black-box” nature of complex models, highlighting explainability techniques (LIME, SHAP) that improve analyst trust. Finally, we look ahead to future directions: online learning for adapting to evolving threats, Graph Neural Networks for relational data, federated learning for collaborative security, and incorporating expert knowledge to refine machine learning-driven defenses. The goal is to provide a clear and engaging insight into why machine learning-based anomaly detection is crucial for proactive cybersecurity, balancing technical depth with accessibility.

Cyber threats are growing in both volume and sophistication, outpacing traditional security measures. Modern attackers often employ novel malware, zero-day exploits, and stealthy techniques that blend in with normal user behavior. Conventional signature-based defenses, which rely on known patterns of past attacks, excel at catching known threats but struggle to detect new or unknown attacks [2]. For example, a legacy intrusion detection system might quickly recognize a previously catalogued virus, yet fail to alert on a subtle network infiltration it has never seen before. This gap is increasingly problematic as advanced persistent threats (APTs) and insider threats deliberately mimic legitimate behavior to evade detection [1]. In the face of these evolving dangers, relying solely on static rules or manual monitoring is no longer sufficient. Machine learning (ML) offers a dynamic solution: by learning the baseline of “normal” activity and continuously adapting to new data, ML-based anomaly detection can uncover deviations that may signal a cyberattack in progress. Integrating ML-driven anomaly detection into security operations enables earlier identification of potential incidents, including hard-to-detect attacks that signature-based methods might miss [1, 2]. In short, as cyber threats become more cunning, security teams are turning to machine learning to bolster defenses, shifting from reactive, known-threat detection to proactive discovery of the unexpected.

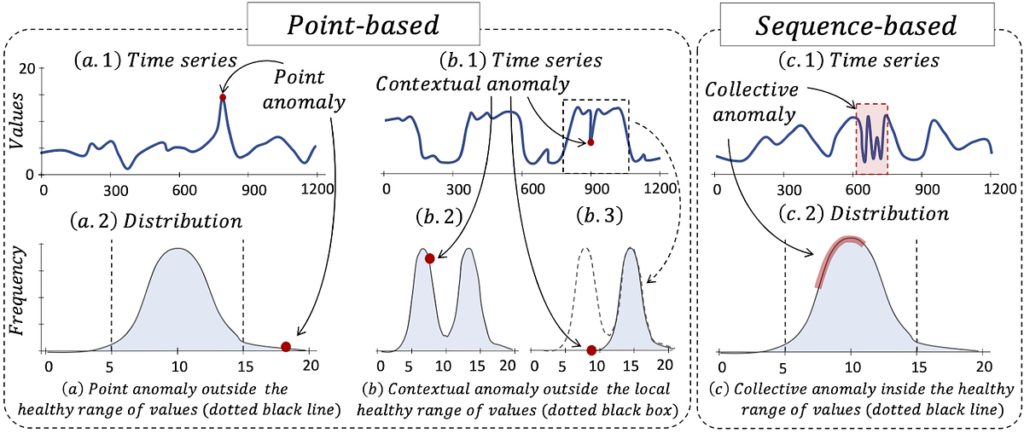

Not all anomalies are equal; they can manifest in different forms. Broadly, we categorize anomalies into point, contextual, and collective anomalies [1].

A point anomaly is an individual data point that deviates sharply from the norm. For instance, a single network traffic spike or an unusual one-time login could be a point anomaly. In cybersecurity, this might be a sudden surge in CPU usage on a server or an outbound connection to an unfamiliar port that stands out from typical patterns [1]. Such an outlier is often indicative of a problem (e.g., a malware process spiking CPU, or data exfiltration causing abnormal traffic volume).

A contextual anomaly is a data point that is only anomalous in a specific context (such as time, location, or sequence). The same event may be normal under other circumstances. For example, a user logging in at 3 A.M. or from an unusual geographic location might be considered a contextual anomaly: the login itself is a valid action, but the timing or context is abnormal [1]. In network terms, a high volume of traffic is expected during business hours, but that same volume in the middle of the night would deviate from the baseline and raise suspicions [13].

A collective anomaly is a set of data points that, considered together, form an unusual pattern, even if each individual point might seem normal. In other words, it is an anomalous sequence or cluster of events [1]. A cybersecurity example is a coordinated attack: numerous login attempts from different IPs might each look benign alone, but together they indicate a brute-force attack. Similarly, a series of network packets that individually appear normal could collectively represent a port scan or a data exfiltration attempt when observed as a whole [1].

Figure 1: Illustrations of point (a), contextual (b), and collective (c) anomalies in a time series, where red markers highlight anomalous values. The point anomaly (a) shows a value outside the normal range; the contextual anomaly (b) falls in a normal range globally but is abnormal given the local context (window); the collective anomaly (c) is a sequence with an unusual pattern (red segment) even though each point may be within the healthy range [11].
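The three anomaly types can be made concrete with a small sketch. This is only an illustration under stated assumptions, not a production detector: the traffic figures, the per-hour grouping, the z-score threshold of 3, and the function names (`fit_baselines`, `classify`, `has_collective_anomaly`) are all invented for this example.

```python
from collections import defaultdict
from statistics import mean, stdev


def fit_baselines(history):
    """Learn a global and a per-hour (mean, std) from (hour, megabytes) samples."""
    per_hour = defaultdict(list)
    for hour, mb in history:
        per_hour[hour].append(mb)
    values = [mb for _, mb in history]
    global_stats = (mean(values), stdev(values))
    hourly_stats = {h: (mean(v), stdev(v)) for h, v in per_hour.items()}
    return global_stats, hourly_stats


def z_score(value, stats):
    mu, sigma = stats
    return abs(value - mu) / sigma if sigma > 0 else 0.0


def classify(hour, mb, global_stats, hourly_stats, thresh=3.0):
    """Label one reading as 'point', 'contextual', or 'normal'.
    Assumes `hour` was present in the training history."""
    if z_score(mb, global_stats) > thresh:
        return "point"        # extreme regardless of context
    if z_score(mb, hourly_stats[hour]) > thresh:
        return "contextual"   # ordinary globally, odd for this hour
    return "normal"


def has_collective_anomaly(failed_logins, window=60, max_failures=5):
    """failed_logins: time-sorted (timestamp_seconds, source_ip) pairs.
    True if any `window`-second span holds more than `max_failures` events,
    even though each single failure looks harmless on its own."""
    start = 0
    for end in range(len(failed_logins)):
        while failed_logins[end][0] - failed_logins[start][0] > window:
            start += 1
        if end - start + 1 > max_failures:
            return True
    return False


# Made-up history: ten days of traffic (MB) at 03:00 and at 14:00.
night = [9, 10, 11, 10, 9, 11, 10, 10, 9, 11]
day = [95, 100, 105, 100, 95, 105, 100, 100, 95, 105]
history = [(3, v) for v in night] + [(14, v) for v in day]
global_stats, hourly_stats = fit_baselines(history)

afternoon_spike = classify(14, 400, global_stats, hourly_stats)
night_transfer = classify(3, 90, global_stats, hourly_stats)
burst = [(t, f"10.0.0.{t}") for t in range(0, 40, 5)]  # 8 failures in 35 s
brute_force = has_collective_anomaly(burst)
```

With this toy data, 90 MB is unremarkable against the whole history but far outside the 03:00 baseline, which is exactly the distinction between a point and a contextual anomaly.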

To detect these anomalies, various machine learning techniques are applied, generally falling into three learning categories: supervised, unsupervised, and semi-supervised anomaly detection [11].

In supervised anomaly detection, the algorithm is trained on a labeled dataset that includes examples of both “normal” instances and “anomalous” instances. Essentially, it is treated as a classification problem: the model learns to distinguish between normal behavior and specific known threats or anomalies. This approach works well when you have many examples of attacks. For instance, a dataset of network traffic may be labeled with classes like “benign” or various attack types, and a supervised model (even a deep CNN classifier) can be trained to recognize them [11]. The drawback is that this requires known anomalies: if a new type of attack emerges that was not in the training data, a purely supervised model might not detect it. Thus, supervised methods shine in scenarios with abundant historical attack data (e.g., malware classification), but are less useful for catching novel threats.

Unsupervised anomaly detection is used when no labeled examples of anomalies are available. In many real-world cases, we may not know what attacks look like, but we can observe normal behavior. Unsupervised methods assume that anomalies are rare and differ significantly from the majority of data. These algorithms (such as clustering, one-class SVMs, or autoencoders) learn the structure of normal data and flag outliers that deviate from that structure [11]. The advantage is the ability to discover unforeseen attacks. For example, an unsupervised model monitoring system calls on a server can learn the typical patterns and then detect an odd sequence of calls that was never observed before, suggesting a potential intrusion. Unsupervised methods do not need a list of known threats [11], making them well suited to uncovering new or evolving anomalies. However, they can sometimes flag benign deviations (leading to false positives), since “unusual” does not always equal “malicious.”
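As a minimal sketch of the unsupervised idea, the snippet below scores each observation by its mean distance to its k nearest neighbours, so points far from the bulk of the data receive high scores. This distance-based scorer is a stand-in chosen for brevity, not one of the specific algorithms cited above; the session features and the helper name `knn_outlier_scores` are assumptions made for illustration.

```python
import math


def knn_outlier_scores(points, k=3):
    """Mean distance from each point to its k nearest neighbours.
    Larger scores mean the point sits farther from the bulk of the data."""
    scores = []
    for i, p in enumerate(points):
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        scores.append(sum(dists[:k]) / k)
    return scores


# Made-up sessions: (logins per hour, MB uploaded). No labels are used.
sessions = [(4, 10), (5, 12), (4, 11), (6, 9), (5, 10), (5, 11), (40, 300)]
scores = knn_outlier_scores(sessions)

# Index of the session that looks least like the others.
most_unusual = max(range(len(sessions)), key=scores.__getitem__)
```

Note that the scorer only says the last session is unusual; whether it is malicious still has to be decided by an analyst or a downstream rule, which is where the false positives mentioned above come from.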

Semi-supervised anomaly detection is a hybrid approach, and often the most practical in cybersecurity. In this setup, the system is trained mostly (or only) on normal data, learning what “good” behavior looks like, and anything sufficiently different is deemed anomalous [11]. This is also known as one-class classification or novelty detection. It assumes we can gather a comprehensive sample of normal operations (which is often easier than anticipating all possible attacks). Many anomaly detection solutions in practice follow this paradigm: for instance, you feed an IDS logs of clean network traffic for weeks to model normal patterns, and then it alerts on deviations. Semi-supervised methods work well because they incorporate domain knowledge of normality provided by experts (without requiring examples of bad events). They tend to produce fewer false alarms than purely unsupervised methods because the definition of normal is informed by real data [11]. However, they may miss anomalies that resemble normal behavior, or suffer if the “normal” training data was contaminated with undetected attacks.

In summary, if you have labels for both normal and attack traffic (the case of known attacks), use supervised learning; if you have no labels at all, unsupervised methods can find outliers; and if you only have normal data, semi-supervised (one-class) approaches are effective [11]. Often in cybersecurity, a combination of these is used: start with unsupervised methods to discover anomalies, then label them (with expert help) to further refine supervised models, creating a continuous improvement loop.

Machine learning for anomaly detection has advanced beyond basic statistical models, with deep learning techniques making a significant impact in recent years. Deep learning (which includes neural network architectures like CNNs, RNNs, etc.) offers the ability to automatically learn complex feature representations from raw data, often outperforming methods that rely on manual feature engineering [5]. In cybersecurity, where data can be high-dimensional and nuanced (think of network traffic logs, system call sequences, or user behavior patterns), deep learning can capture subtle structures that might be missed by simpler algorithms.

Convolutional Neural Networks (CNNs), originally popular for image processing, have been effectively adapted for certain cybersecurity anomaly detection tasks. CNNs use layers of filters to automatically extract features, and while they excel with spatial data like images, they can also be applied to sequential or structured data by treating it like an “image” or matrix. For example, researchers have applied CNNs to network traffic data by structuring traffic features (packet sizes, counts, flags, etc.) into a fixed-size input. The CNN then learns patterns that distinguish normal traffic from attacks. CNN-based models have achieved high accuracy in intrusion detection, often surpassing traditional machine learning models. One study built a CNN-based anomaly detection system for network intrusion and reported that it outperformed classical methods in detecting attacks, achieving high accuracy and recall on benchmark datasets [5]. In a case study focusing on detecting probing attacks (scanning activities) in an institutional network, a CNN model slightly outperformed an ensemble learning model, with both achieving high detection rates [4]. This demonstrates how deep learning can automatically learn discriminative features of cyber attacks (like port scan patterns) that might be hard to encode with manual rules. Moreover, CNNs are computationally efficient at inference time due to their filter mechanism and can operate in near real time on high-volume data streams (with proper optimization).
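To give a feel for what a “filter” does, here is a deliberately tiny sketch of a single 1D convolution followed by ReLU and global max pooling, the basic building blocks of a CNN layer. It is not a trained network: the kernel weights are set by hand to respond to a sudden rise, whereas a real CNN would learn many such kernels from data. The input series and all names are hypothetical.

```python
def conv1d(signal, kernel):
    """Valid-mode 1D cross-correlation (what deep learning libraries call convolution)."""
    width = len(kernel)
    return [
        sum(kernel[k] * signal[i + k] for k in range(width))
        for i in range(len(signal) - width + 1)
    ]


def relu(values):
    """Keep positive responses, zero out the rest."""
    return [max(0, v) for v in values]


# Hypothetical input: connections per second opened by one host.
conn_per_sec = [2, 2, 2, 2, 9, 9, 9]
rising_edge = [-1, 0, 1]   # hand-set here; a CNN would learn such weights

feature_map = relu(conv1d(conn_per_sec, rising_edge))
pooled = max(feature_map)  # global max pooling: strongest response anywhere
```

The filter stays silent on the flat stretches and fires where the connection rate jumps, which is the kind of local pattern a trained CNN picks out of traffic features without hand-written rules.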

Another powerful deep learning approach for anomaly detection is the use of Generative Adversarial Networks (GANs). GANs consist of two neural networks, a generator and a discriminator, locked in a game: the generator tries to produce data that mimics the real data distribution, and the discriminator tries to tell fake (generated) data from real data. This adversarial training can learn very complex data distributions. In cybersecurity, GANs have been leveraged in a few ways to enhance anomaly detection. One use is to improve detection models by generating synthetic examples of attacks. Because cyber attack data is often imbalanced (with far fewer examples of attacks than normal events), a GAN can generate realistic attack-like data to augment training. This can address the class imbalance problem and lead to better detectors. For instance, a GAN was used to create convincing fake network traffic of rare attack types so that a reinforcement learning-based IDS could learn to catch those minority attacks more reliably; the result was a significant improvement in detecting those previously under-represented attacks [6]. The generator in this case learns the distribution of normal traffic and targeted attack traffic, and by fooling the discriminator, it essentially teaches the system what rare traffic might look like. This approach helped the IDS recognize subtle patterns of those attacks that it would otherwise often miss.

GANs can also be used in an unsupervised anomaly detection setting. One popular approach (originally from computer vision) is to train a GAN on only normal data; the generator learns to produce normal-like instances and the discriminator learns to tell real normal data from generated (also normal-looking) data. When you then feed a new instance to this system, if it is normal, the generator should be able to reconstruct or represent it well (and the discriminator will not flag it strongly), but if it is anomalous, the generator fails to capture it and the discriminator easily spots it as fake. Techniques like AnoGAN leverage this idea by using the generator's inability to recreate an input as an anomaly score. In cybersecurity, this could mean training on logs of normal behavior and then detecting anomalies by how poorly they can be “explained” by the generative model. GANs thus offer a way to learn the profile of normality so well that anything deviating stands out. In practice, GAN-based detectors have been applied to domains like malicious domain name detection (e.g., detecting algorithmically generated domains used by malware) by training a GAN to model legitimate domain name patterns, so that unusual domain requests that do not fit the model can be flagged [7]. Similarly, for network intrusion detection, GAN variants have been proposed to generate synthetic traffic for training robust classifiers, or even to directly detect jamming attacks in wireless networks by learning normal channel patterns [7].

It is worth noting that deep learning approaches, while powerful, come with their own challenges (which we discuss later). They typically require a lot of data and computing resources to train, and tuning them for anomaly detection (an inherently imbalanced problem) can be tricky. Nonetheless, the ability of CNNs and GANs (and other deep models like LSTMs or Transformers for sequences) to model complex, high-dimensional data makes them valuable for modern cybersecurity solutions. They enable systems not just to use surface-level features (like thresholds on packet counts), but to learn intricate signatures of attacks or anomalies that might be invisible to human-defined logic. As a result, deep learning-based anomaly detectors have been shown to catch sophisticated attacks with higher accuracy and lower false alarm rates, continually raising the bar for cyber defense mechanisms [4, 5].

Machine learning-driven anomaly detection is being deployed across various cybersecurity domains to great effect. Here we look at a few key applications and examples.

One of the primary uses of anomaly detection is in network intrusion detection. A traditional IDS can be signature-based (detecting known attack patterns) or anomaly-based (detecting deviations from normal behavior). With machine learning, anomaly-based IDS have become far more effective, identifying threats that do not match any known signature. For example, an ML-based IDS might learn the normal range of network connections for a corporate network; if a host suddenly starts making connections to many random external IPs, or an unusual surge of traffic flows at off-peak hours, the IDS will trigger an alert. Many enterprise IDS today incorporate algorithms like one-class SVMs, clustering, or neural networks to model normal network traffic and flag outliers. In practice, a hybrid approach is often used: known malware or exploits are caught by signatures, and anything truly unusual is passed to the anomaly detection engine for investigation [2]. This combination helps security teams catch “low-and-slow” attacks. For instance, a sophisticated attacker who has breached the network and is quietly probing around will create minor anomalies (like a user account querying resources it never accessed before) that an anomaly-based IDS can pick up. The importance of anomaly detection in IDS is underscored by the need to catch novel attacks in real time; as one security blog puts it, an anomaly-based IDS “continuously analyzes data to identify deviations from expected norms” and thereby offers a proactive defense against zero-day and emerging threats [2].

Beyond formal IDS appliances, anomaly detection techniques are applied broadly in monitoring network and system behavior. This can include detecting unusual patterns that might signify distributed denial-of-service (DDoS) attacks, insider misuse, or data breaches. For instance, by analyzing network flow records (NetFlow data) with unsupervised learning, organizations can spot anomalies such as an internal server suddenly sending out significantly more data than normal (which might indicate data exfiltration). Anomaly detection also plays a role in cloud security and IoT networks, where baselines of device behavior are established and deviations (like a normally quiet IoT sensor starting to send frequent large bursts of data) can indicate compromise. User and Entity Behavior Analytics (UEBA) is another area: ML models learn normal user behavior (logins, access patterns) and trigger on anomalies, such as an employee account accessing an unusual volume of files or logging in from an atypical location or IP. These kinds of detections are crucial for catching insider threats or compromised accounts that otherwise blend in. For example, if an attacker somehow obtains valid credentials (so their actions will not match any known malware signature), the only hope of detection might be noticing that the account is behaving strangely compared to its history, which is exactly the kind of insight anomaly detection provides.

One notorious example highlighting the need for anomaly detection is the 2013 Target retail breach. Attackers infiltrated Target's network (via a third-party HVAC contractor) and installed malware on point-of-sale systems, stealing credit card data from millions of customers. Target had deployed a state-of-the-art security system that generated alerts when the attackers began moving data out; specifically, its intrusion detection tools (like FireEye) did flag unusual malware activity and data exfiltration attempts [3]. However, the alerts were overlooked or ignored by the security team [3]. In hindsight, this breach underscored that anomaly detection systems can indeed catch the early signs of an attack (in this case, anomalous malware files and outbound traffic), but organizations need the processes to respond to those alerts. The Target breach is often cited in cybersecurity circles as a lesson: investments in machine learning and advanced detection must be matched by incident response. Had Target's team investigated the initial anomalies (rather than turning off some alerting features due to unfamiliarity [3]), the massive data theft might have been contained. This case shows that ML-driven anomaly detection, while not perfect, was able to detect a highly sophisticated, targeted attack, reinforcing its value in modern security architectures.

Consider a university or enterprise network that is periodically subject to port scans and probing attacks (where an attacker tries many network ports or services to find vulnerabilities). Traditional firewalls might not flag these if they are slow or distributed. Researchers conducted a case study using anomaly-based machine learning models on a university's network to detect such probing attacks [4]. They collected real network traffic data and focused on probe-type intrusions. By training models on normal traffic and known probe patterns, they found that the ML-based anomaly detectors could reliably identify scanning activities with high accuracy. In fact, they tested two approaches, an ensemble of traditional ML algorithms and a deep learning model (CNN), and found both to be effective, with the CNN being slightly more accurate [4]. This experiment demonstrated that anomaly detection can bolster network security by catching reconnaissance activities early. In a real deployment, such a system would alert the network admins that a particular IP is performing an unusual sweep of ports, or that a host is responding in an unusual pattern, allowing them to investigate and block a potential attacker who is in the “casing the joint” phase of an attack. The success of the ML models in this case study, especially when compared to conventional misuse-based detection, shows how machine learning can adapt to subtle changes in attack techniques. A signature-based system might only detect a probe if it matches a known scanning tool's pattern, but the anomaly detection model learned the general characteristics of port scanning (multiple sequential connection attempts, etc.), so even a novel scanning method was flagged because it deviated from normal behavior [4]. This kind of adaptability is crucial as attackers constantly tweak their tactics.

These examples only scratch the surface. Anomaly detection via ML is also used in fraud detection (e.g., detecting anomalous transaction patterns in banking), malware detection (identifying programs with anomalous behavior profiles), and system health monitoring (catching anomalies in system logs that might indicate failures or intrusions). In each case, the core idea is the same: model “normal” and respond when you see something that does not fit. ML has expanded the reach of this idea, allowing detection systems to handle the scale and complexity of modern IT environments. It is important to note, though, that deploying anomaly detection is not a silver bullet: it requires careful tuning and integration with security operations to be effective, as seen in the Target breach. When done right, however, it provides a much-needed safety net, catching the attacks that slip past traditional defenses.

While machine learning greatly improves anomaly detection, it also introduces challenges. Two of the biggest issues are false positives (benign events wrongly flagged as anomalies) and model interpretability (the “black-box” problem). Let's examine these challenges and how the industry is addressing them.

By its nature, anomaly detection is sensitive to anything out of the ordinary, but not every odd event is an attack. A new software update rolling out across the network might generate unusual traffic patterns, or an executive traveling overseas might trigger an anomalous login alert because of a foreign IP. These are legitimate activities that just happen to be unusual. An overly sensitive system can overwhelm analysts with alerts, a problem known as alert fatigue. High false positive rates can erode trust in the system (analysts might start ignoring the alarms) and waste valuable time [1]. In cybersecurity, resources are limited; chasing down false alarms means potentially missing real threats. To combat this, ML models are continuously refined to be more precise. One approach is incorporating context and feedback: the system learns not just from initial training data but also from analysts' responses. For example, if an anomaly is investigated and found benign, that feedback can be used to adjust the model or its thresholds (essentially telling the system “this kind of deviation is OK”). Security teams often employ tiered detection: a strict anomaly detector catches anything suspicious, then a secondary system or an analyst applies rules and domain knowledge to filter out known benign anomalies. Additionally, combining anomaly detection with signature detection can reduce false positives by cross-referencing alerts with threat intelligence (if an anomaly coincides with a known malware signature, it is definitely worth investigating; if not, its priority can perhaps be lowered). Fine-tuning the sensitivity (e.g., requiring a certain magnitude of deviation before alerting) is another practical measure. Ultimately, it is a balancing act: you want to catch the bad stuff without crying wolf too often. Modern systems use techniques like adaptive thresholds (which adjust based on noise levels) and ensemble detectors (multiple models that must agree) to keep false positives at a manageable level [1].
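The two mitigation ideas, adaptive thresholds and ensemble agreement, can be sketched in a few lines. This is one possible reading under stated assumptions, not a description of any particular product: the threshold is taken as median plus k times the median absolute deviation (MAD) of recent scores, the ensemble rule is a simple vote count, and the names `adaptive_threshold` and `ensemble_alert` are invented here.

```python
from statistics import median


def adaptive_threshold(recent_scores, k=3.0):
    """Threshold that rises when recent anomaly scores are noisy.
    Uses median + k * MAD, which is robust to a few extreme values."""
    med = median(recent_scores)
    mad = median([abs(s - med) for s in recent_scores])
    return med + k * mad


def ensemble_alert(scores, thresholds, min_votes=2):
    """Alert only if at least `min_votes` detectors exceed their own threshold."""
    votes = sum(s > t for s, t in zip(scores, thresholds))
    return votes >= min_votes


# Made-up anomaly scores from a calm period and from a noisy one.
quiet_window = [1.0, 1.2, 0.9, 1.1, 1.0]
noisy_window = [1.0, 3.0, 0.5, 2.5, 1.5]
quiet_t = adaptive_threshold(quiet_window)
noisy_t = adaptive_threshold(noisy_window)

# The same score of 2.0 stands out in the calm period but not in the noisy one.
flag_when_quiet = 2.0 > quiet_t
flag_when_noisy = 2.0 > noisy_t

# Three hypothetical detectors; two of them must agree before alerting.
alert = ensemble_alert([2.0, 0.1, 5.0], [quiet_t, 1.0, noisy_t])
```

Requiring agreement trades some sensitivity for fewer false alarms, so `min_votes` is a tuning knob rather than a fixed rule.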

Machine learning models, especially complex ones like deep neural networks, can often be opaque in their decision-making. This lack of transparency is problematic in cybersecurity. If an anomaly detection system flags an event but cannot explain why, security analysts might be hesitant to take action, or they may struggle to investigate the incident. Understanding why an alert was generated is crucial for response: it guides where to look and how to remediate. However, many ML models act as black boxes, outputting an anomaly score without a human-readable rationale [9]. To build trust and make these systems practically useful, explainable AI (XAI) techniques are being adopted. Tools like LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations) are popular for explaining anomaly detection models. These methods provide insight by identifying which features (or parts of the input data) most affected the model's decision [9]. For example, if an IDS powered by a neural network flags a session as malicious, SHAP might show that “unusually high outbound data volume and rare destination IP” were the top contributors to the anomaly score, essentially opening the black box to reveal the important factors. Such an explanation not only helps analysts verify whether it is a true positive (those reasons might clearly indicate data exfiltration, confirming a real threat), but also aids in taking action (e.g., blocking that IP or device). Interpretability techniques can be model-agnostic (treating the ML model as a black box and probing it) or built into the model (for instance, decision-tree-based anomaly detectors are inherently interpretable, albeit less flexible than deep learning). In practice, a lot of research is focused on integrating XAI with security tools [9]. One study noted that applying SHAP and LIME to intrusion detection systems allowed security teams to see which features (like login time, user role, etc.) led to an alert, greatly improving their ability to trust and act on the system's outputs [9]. Moreover, regulatory and compliance concerns (such as GDPR) may require explanations for automated decisions, even in security. By making anomaly detection more transparent, we ensure that the ML is not a magic oracle but a well-understood assistant: analysts remain in control and can validate and refine the system's knowledge. This also helps in tuning the system: if many false positives are caused by a particular feature being weighted too heavily, that insight (via XAI) can lead to retraining the model with adjustments.
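The attribution idea behind SHAP can be illustrated by computing exact Shapley values by brute force for a toy scoring function. This sketch does not use the `shap` library or its API; it enumerates every feature subset, which is only feasible for a handful of features. The scoring function, its weights, the feature names, and the baseline (“typical session”) values are all assumptions made for illustration.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley attribution of model(x) - model(baseline) to each feature.
    Features outside a coalition are replaced by their baseline value."""
    n = len(x)

    def value(coalition):
        mixed = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(mixed)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


def toy_score(features):
    """Hypothetical anomaly score; the weights are made up."""
    outbound_mb, rare_destination, off_hours = features
    return 0.002 * outbound_mb + 1.5 * rare_destination + 0.5 * off_hours


feature_names = ["outbound_mb", "rare_destination", "off_hours"]
flagged_session = [900, 1, 0]   # large upload to a rarely seen IP, in business hours
typical_session = [100, 0, 0]   # assumed baseline for comparison

phi = shapley_values(toy_score, flagged_session, typical_session)
ranked = sorted(zip(feature_names, phi), key=lambda item: -abs(item[1]))
```

The attributions sum to the gap between the flagged score and the baseline score, so an analyst can read `ranked` as “how much each feature pushed this session above a typical one”.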

In summary, the challenges of false positives and interpretability are being addressed through a combination of better algorithms, human-in-the-loop processes, and new tools. Feedback loops where analysts label alerts as benign or malicious help reduce future false alarms [10]. Explainable AI techniques shine light into complex models, turning them from inscrutable boxes into advisory tools that experts can understand and improve. The result is anomaly detection that is not only accurate, but also actionable and trustworthy, an essential requirement for any cybersecurity technology.

The intersection of machine learning and cybersecurity is rapidly evolving. Several advanced techniques and trends are shaping the future of anomaly detection in cybersecurity.

Cyber threats constantly evolve, and static models can become outdated as attackers change tactics. Online learning refers to models that update themselves continuously or frequently as new data comes in. Instead of retraining an entire model from scratch periodically (which can be slow and disruptive), online learning algorithms incorporate new examples on the fly. In anomaly detection, this means the system can adjust its notion of “normal” as the environment changes. For instance, if a company transitions to a remote work model, an online learner will gradually treat VPN logins as normal rather than flagging the surge in remote access as anomalous. This capability is crucial for staying ahead of attackers. It also helps address concept drift (when the statistical properties of normal behavior change over time). We expect to see more cybersecurity solutions with online or incremental learning, enabling models to adapt to new patterns of legitimate behavior and new attack techniques in real time. Such adaptability was highlighted as a necessity in recent research on DDoS detection, where traditional supervised models struggled with changing traffic patterns; the proposed direction was new adaptive mechanisms to catch zero-day and evolving attacks [4].
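A minimal sketch of this behavior is a baseline that updates an exponentially weighted mean and variance with every observation, so a sustained change is flagged at first and then absorbed as the new normal. The class name `OnlineBaseline`, the smoothing factor, the warm-up length, and the login counts are all assumptions for illustration; a real system would also need safeguards so that an attacker cannot slowly “train” the baseline.

```python
class OnlineBaseline:
    """Exponentially weighted running mean/variance with a z-score alert rule."""

    def __init__(self, alpha=0.1, z_thresh=3.0, warmup=10):
        self.alpha = alpha          # how quickly old data is forgotten
        self.z_thresh = z_thresh    # deviations beyond this many std are flagged
        self.warmup = warmup        # observations to see before alerting
        self.mean = None
        self.var = 0.0
        self.n = 0

    def update(self, x):
        """Score x against the current baseline, then fold x into the baseline."""
        if self.mean is None:
            self.mean, self.n = x, 1
            return False
        diff = x - self.mean
        std = self.var ** 0.5
        anomalous = (
            self.n >= self.warmup and std > 0 and abs(diff) > self.z_thresh * std
        )
        # Incremental exponentially weighted updates of mean and variance.
        self.mean += self.alpha * diff
        self.var = (1 - self.alpha) * (self.var + self.alpha * diff * diff)
        self.n += 1
        return anomalous


# Made-up VPN logins per hour: office-based period, then a shift to remote work.
office_period = [9, 11] * 15
remote_period = [49, 51] * 50

detector = OnlineBaseline()
alerts = [detector.update(x) for x in office_period + remote_period]
```

In this toy stream the first remote-work readings trigger alerts, after which the baseline drifts toward the new level and the alerts stop, which is the concept-drift handling described above.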

Many cybersecurity problems involve relational data: think of networks of computers, users accessing resources, or processes interacting on a host. These can be naturally represented as graphs (nodes and edges). Graph Neural Networks (GNNs) are a deep learning approach tailored to graph-structured data, and they have shown exceptional promise in anomaly detection on graphs [12]. For example, in a large enterprise, one can build a graph where nodes are users, devices, and applications, and edges represent interactions (login, data transfer). A GNN-based anomaly detector can learn the complex relationships and detect when a node's behavior in the context of its connections is abnormal (like a normally isolated device suddenly communicating with many new peers). GNNs have been used for malicious insider detection, where the graph of user-resource access is analyzed for odd link patterns, and for detecting malware in program dependency graphs. The strength of GNNs is their ability to capture structure: security incidents often manifest as unusual structures (e.g., a sudden clique of communication between machines that normally do not talk to each other). Research surveys note that GNN-based methods leverage graph structure to achieve superior anomaly detection performance in cybersecurity use cases like detecting fake accounts in social networks or finding compromised hosts in enterprise networks [12]. Going forward, as data sources like authentication logs, network flows, and system calls are integrated, we anticipate GNNs will play a larger role in correlating these into graph-based profiles and spotting anomalies that would be hard to see in isolated data streams.
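The graph view itself can be shown without any neural network. The sketch below is not a GNN: it is a hand-written structural check that compares a baseline communication graph with the current one and counts each node's new peers, the kind of signal a GNN would learn in a richer, data-driven form. Host names, edges, and the cut-off of three new peers are invented for illustration.

```python
def build_adjacency(edges):
    """Undirected adjacency sets from (node_a, node_b) pairs."""
    adjacency = {}
    for a, b in edges:
        adjacency.setdefault(a, set()).add(b)
        adjacency.setdefault(b, set()).add(a)
    return adjacency


def new_peer_counts(baseline_edges, current_edges):
    """For each node seen now, count peers that were absent from the baseline graph."""
    before = build_adjacency(baseline_edges)
    now = build_adjacency(current_edges)
    return {
        node: len(peers - before.get(node, set()))
        for node, peers in now.items()
    }


# Hypothetical communication graph observed during a quiet baseline period.
baseline_edges = [
    ("laptop-1", "fileserver"),
    ("laptop-2", "fileserver"),
    ("printer", "laptop-1"),
]
# Current period: the normally isolated printer starts talking to many hosts.
current_edges = baseline_edges + [
    ("printer", "db-1"),
    ("printer", "db-2"),
    ("printer", "hr-share"),
    ("printer", "laptop-2"),
]

counts = new_peer_counts(baseline_edges, current_edges)
suspects = sorted(node for node, c in counts.items() if c >= 3)
```

A learned model would replace the fixed cut-off with representations that also account for node types and edge attributes, but the input is the same kind of node-and-edge structure.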

Cyber defense can greatly benefit from collaboration across organizations, but sharing raw data (which might include sensitive logs or personal information) is often not possible due to privacy and legal concerns. Federated learning is an emerging approach that trains machine learning models across multiple parties without exchanging raw data. Instead, each organization computes model updates on its local data, and a central server or protocol aggregates these updates into a global model. In cybersecurity, federated learning allows, for example, multiple companies to collaboratively train an anomaly detection model that learns from each of their networks’ experiences, without any of them revealing their internal logs. This is powerful for catching widespread threats: if one company sees a new attack, the model parameters update and others can benefit by being prepared for it, all in near real time. A use case might be federated learning for malware detection on endpoints across different banks: they all train a shared model that quickly learns malware behavior observed anywhere, yet no bank ever shares its customer data directly. According to experts, this collaborative intelligence allows anomalies and threats to be identified faster and more accurately across diverse environments, because the pooled insights create a richer model [8]. We are already seeing federated learning in security products for mobile phones (where each phone contributes to a global anomaly model for detecting malicious apps, without sending user data). In the future, more federated anomaly detection networks may emerge, possibly industry-wide initiatives where members contribute to collective threat models (somewhat like crowdsourced threat intelligence, but through model parameters). This way, organizations can stay ahead of attackers by learning from each other’s detections, all while preserving privacy.
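The aggregation step described above, where each party sends an update rather than its data, can be sketched in a few lines. This is a simplified illustration in the style of federated averaging: the local "training" is reduced to computing per-feature means of each party's own traffic, where a real deployment would run local gradient steps on a shared model. The function names and the toy numbers are assumptions.

```python
def local_update(rows):
    """Runs inside one organization: summarize local data as a parameter
    vector (here, per-feature means) plus the number of samples used.
    The raw rows never leave this function's caller."""
    n = len(rows)
    dims = len(rows[0])
    weights = [sum(row[i] for row in rows) / n for i in range(dims)]
    return weights, n


def federated_average(updates):
    """Runs on the coordinator: combine the parties' parameter vectors,
    weighting each by how many samples produced it."""
    total = sum(n for _, n in updates)
    dims = len(updates[0][0])
    return [
        sum(weights[i] * n for weights, n in updates) / total
        for i in range(dims)
    ]


# Usage with toy data: two banks, features = (logins/hour, MB sent/hour).
bank_a_logs = [[1.0, 10.0], [3.0, 30.0]]
bank_b_logs = [[5.0, 50.0]]
global_model = federated_average([
    local_update(bank_a_logs),
    local_update(bank_b_logs),
])
```

For this particular choice of local statistic, the weighted average equals the mean over the pooled data, even though the coordinator only ever sees two short vectors and two counts. Real systems usually add protections on top, since model updates themselves can leak information about the data they were computed from.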

Despite automation, human insight remains a cornerstone of effective cybersecurity. Future anomaly detection systems will likely use human-in-the-loop approaches more deeply. This includes mechanisms for expert feedback, as well as encoding expert knowledge into the models. For instance, security analysts might provide reinforcement signals, labeling certain alerts as important or false, and an active learning system would retrain the model to adjust its sensitivity accordingly. Over time, the model essentially distills the expert’s knowledge of what is truly important. We might also see more integration of expert-defined rules with ML models (a kind of hybrid system). For example, if experts know that certain ports should never be used, that rule can be baked in so the model doesn’t even need to learn it. Conversely, when the ML flags something, expert systems can annotate it with context (“this server was recently patched, which might explain the unusual behavior”) to help decide if it’s a real threat. The synergy of ML and expert knowledge will also appear in model design: some research is focusing on explainable and interactive ML, where analysts can query the model (“why is this anomalous?”) and then correct the model’s reasoning if needed. In practice, we already see SOC analysts effectively teaching their anomaly detection tools: “With each alert, the team teaches the system what data points are normal and abnormal”, improving the tool’s accuracy over time [10]. The future will amplify this, possibly with user-friendly interfaces for analysts to give feedback or even adjust certain model parameters directly with domain knowledge. By embedding expert knowledge into AI (and vice versa, AI into expert workflows), organizations will refine their cybersecurity defenses continuously: the machine learning catches what humans might miss in the data deluge, and humans ensure the machine is focusing on relevant, correct signals.
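A hybrid of expert rules and analyst feedback might look like the following minimal sketch. It assumes some upstream model already produces an anomaly score between 0 and 1 for each event. The class name, the forbidden port, and the step size are hypothetical, and nudging a threshold is a deliberately simple stand-in for the active-learning retraining described above.

```python
class HybridDetector:
    """Hypothetical hybrid: a hard expert rule plus an ML-score threshold
    that analysts tune through feedback on individual alerts."""

    def __init__(self, forbidden_ports, threshold=0.5, step=0.05):
        self.forbidden_ports = set(forbidden_ports)  # expert-defined rule
        self.threshold = threshold                   # alert if score >= this
        self.step = step                             # size of each adjustment

    def is_alert(self, port, score):
        # Expert knowledge is applied first: the model never has to learn it.
        if port in self.forbidden_ports:
            return True
        return score >= self.threshold

    def feedback(self, score, analyst_label):
        """Adjust sensitivity from an analyst verdict on one scored event.
        analyst_label is 'false_positive' or 'true_threat'."""
        if analyst_label == "false_positive" and score >= self.threshold:
            # We alerted and should not have: become less sensitive.
            self.threshold = min(1.0, self.threshold + self.step)
        elif analyst_label == "true_threat" and score < self.threshold:
            # We stayed quiet and should not have: become more sensitive.
            self.threshold = max(0.0, self.threshold - self.step)
```

Keeping the rule outside the learned part means analyst feedback can never tune it away: traffic on a forbidden port alerts regardless of where the threshold ends up.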

In addition to these, other promising directions include automated feature extraction using transformers (e.g., treating logs as language and using NLP techniques to find anomalies), adversarial robustness (making anomaly detectors resilient against attackers who may try to evade or poison them), and the continued development of standard benchmarks and evaluation methods for cybersecurity anomaly detection (to fairly compare approaches and drive progress). The coming years will likely see more convergence of different AI techniques (imagine federated graph neural networks with online learning, all with expert oversight) as we strive for more proactive and intelligent cybersecurity. The complexity of defending digital systems demands this multi-faceted approach. By combining advanced machine learning with collaboration and human expertise, future anomaly detection systems will be far more accurate, transparent, and responsive, forming a formidable line of defense against cyber adversaries.

Anomaly detection has become an indispensable component of modern cybersecurity strategy, and machine learning is at the heart of its transformation. By moving beyond signature-checking to truly “understand” normal versus abnormal patterns, ML-driven systems can catch emerging threats that would slip past traditional tools. We discussed how point, contextual, and collective anomalies represent different ways something can be “off”; machine learning enables detection in all these cases by modeling data in nuanced ways that humans or legacy systems simply could not. Techniques from basic clustering to deep CNNs and GANs are deployed to sift through massive logs and network flows, teasing out the subtle signs of intrusions or misuse. Real-world examples like the Target breach and university network probes highlight both the potential and the need for these technologies: the clues were there, and with the right ML systems (and proper attention), disasters can be averted by spotting the anomaly early.

Yet successful cybersecurity is more than just deploying a clever algorithm. It requires careful handling of false positives so that alarms mean something, and embracing explainable AI so that security teams trust and act on the alerts. The combination of human intuition with machine consistency creates a powerful feedback loop, each strengthening the other. As we look to the future, approaches like online learning will make anomaly detectors more adaptive, federated learning will make them more collaborative, and graph analysis will make them more context-aware. Perhaps most importantly, the incorporation of expert knowledge will ensure that these advanced tools remain aligned with real-world security needs and scenarios.

In a threat landscape where the only constant is change, machine learning provides a way for cybersecurity defenses to continuously improve and learn in a manner akin to attackers probing for weaknesses. This proactive stance, detecting the faint footprints of an attacker before they cause damage, is the ultimate goal of anomaly detection. The advances in machine learning are bringing that goal closer within reach by turning oceans of data and events into actionable security insight. By investing in and iterating on these technologies, organizations equip themselves to not just react to breaches, but to anticipate and prevent them. In conclusion, machine learning is not just transforming anomaly detection; it is transforming cybersecurity from a reactive endeavor to a forward-looking, intelligent discipline. The battle between attackers and defenders may never end, but with smart anomaly detection on our side, we tilt the odds in favor of the defense: catching the hackers by surprise, instead of the other way around.

- Cross, K. (2024, September 6). What is anomaly detection? CrowdStrike. https://www.crowdstrike.com/en-us/cybersecurity-101/next-gen-siem/anomaly-detection/#:~:text=,a%20group%20of%20related%20data.

- Hariharasubramanian, N. (2025, January 16). What is anomaly based detection system? Fidelis Security. https://fidelissecurity.com/cybersecurity-101/learn/anomaly-based-detection-system/#:~:text=Anomaly,to%20unknown%20or%20emerging%20threats.

- Shu, X., Tian, K., Ciambrone, A., Yao, D. (2017, January 18). Breaking the Target: An Analysis of Target Data Breach and Lessons Learned. IEEE Access. https://arxiv.org/abs/1701.04940.

- Tufan, E., Tezcan, C., Acartürk, C. (2021, January 1). Anomaly-Based Intrusion Detection by Machine Learning: A Case Study on Probing Attacks to an Institutional Network. IEEE Access. https://open.metu.edu.tr/handle/11511/89925#:~:text=anomaly,model%20being%20slightly%20more%20accurate.

- Al-Turaiki, I., Altwaijry, N. (2021, June 16). A Convolutional Neural Network for Improved Anomaly-Based Network Intrusion Detection. Big Data. https://liebertpub.com/doi/full/10.1089/big.2020.0263.

- Benaddi, H., Jouhari, M., Ibrahimi, K., Othman, J.B., Amhoud, E.M. (2022, October 22). Anomaly Detection in Industrial IoT Using Distributional Reinforcement Learning and Generative Adversarial Networks. Sensors. https://pmc.ncbi.nlm.nih.gov/articles/PMC9656136/#:~:text=IDS%20system%20using%20Distributional%20Reinforcement,based%20on%20anomaly%20detection%20datasets.

- Ohaba, E. (2024, March 18). Federated Learning for Cybersecurity: Collaborative Intelligence for Threat Detection. Tripwire. https://www.tripwire.com/state-of-security/federated-learning-cybersecurity-collaborative-intelligence-threat-detection#:~:text=Traditional%20threat%20detection%20often%20struggles,threats%20faster%20and%20more%20accurately.

- Mohale, V.Z., Obagbuwa, I.C. (2025, January 27). A systematic review on the integration of explainable artificial intelligence in intrusion detection systems to enhancing transparency and interpretability in cybersecurity. Frontiers in Artificial Intelligence. https://www.frontiersin.org/journals/artificial-intelligence/articles/10.3389/frai.2025.1526221/full#:~:text=The%20rise%20of%20sophisticated%20cyber,transparency%20and%20interpretability%20in%20cybersecurity.

- Brown, S. (2024, June 10). What Is Anomaly Detection? Algorithms, Examples, and More. StrongDM. https://www.strongdm.com/blog/anomaly-detection#:~:text=system%20at%20work,be%20time%20and%20resource%20intensive.

- Boniol, P., Palpanas, T. (2024, July 16). Time Series Anomaly Detection. ACM SIGMOD Blog. https://wp.sigmod.org/?p=3739#:~:text=Formally%2C%20we%20define%20three%20types,b%29%29%20but.

- Wang, S., Yu, P.S. (2022). Graph Neural Networks in Anomaly Detection. Springer Singapore. https://graph-neural-networks.github.io/gnnbook_Chapter26.html#:~:text=applied%20to%20numerous%20high,and%20been%20used%20in%20anomaly.

- Kampakis, S. (2022, March 4). 3 Types of Anomalies in Anomaly Detection. Hackernoon. https://hackernoon.com/3-types-of-anomalies-in-anomaly-detection.