Training a neural network might sound like teaching a machine to think. But when you really get into it, it’s less about magic and more about math: specifically, minimizing a function.

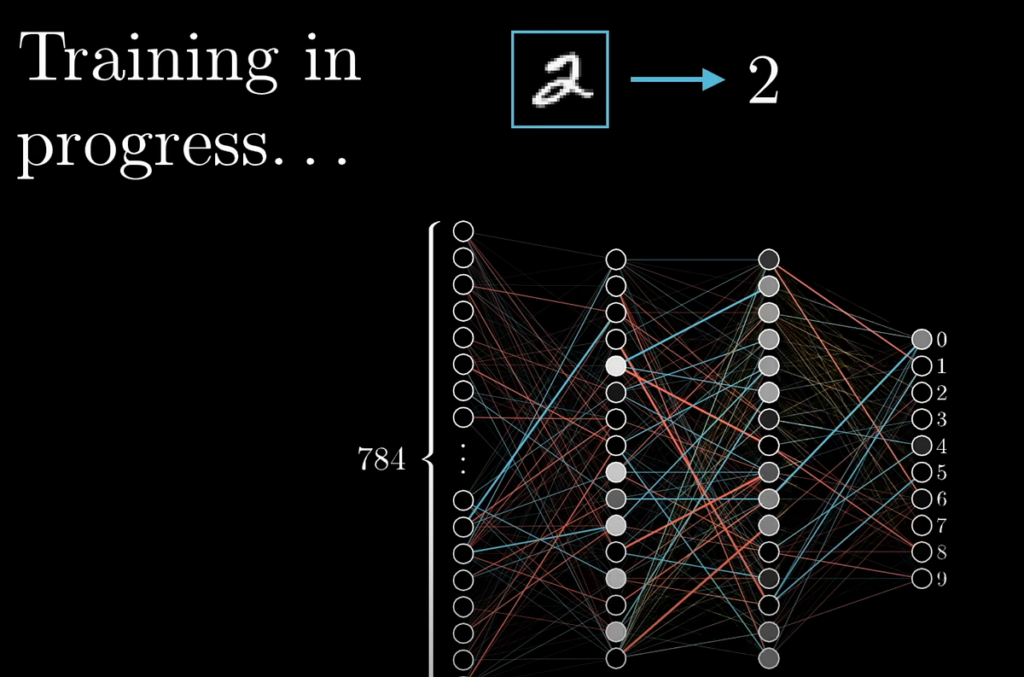

At the heart of training is this goal: we want an algorithm that can adjust the thousands of parameters, roughly 13,000 weights and biases in a typical small neural network (as seen in the previous article), so that it gets better and better at classifying things like handwritten digits.

We feed the network training data: lots of images of handwritten digits, each labeled with the correct number. The idea is that by adjusting the parameters based on this data, the network won’t just memorize the examples; it’ll learn patterns that generalize to new, unseen data.

To test whether this learning works, we evaluate the network on a separate test set: images it hasn’t seen before. If it classifies them correctly, that’s a sign the network isn’t just guessing and has actually learned something.

This is where the famous MNIST dataset comes in: tens of thousands of labeled handwritten digits, perfect for training and testing simple neural nets. It’s become the “Hello World” of machine learning.

But how does the network actually learn from this data?

Once we’ve set up our neural network with its structure in place, neurons layered together, connected by weights and tweaked by biases, we hit an interesting moment: we initialize everything randomly.

That means the network starts off as a total mess.

Feed in an image of a “3” and the network’s prediction is basically gibberish. The output might activate all the wrong neurons, because the weights and biases haven’t learned anything yet; they’re just throwing darts in the dark.

To guide the network toward better predictions, we give it feedback in the form of a cost function.

Think of the cost function as the network’s “report card.” It takes the network’s output for a given input image and compares it to what the answer should have been. If it’s spot on, the cost is low. If it’s wildly off, the cost is high.

The classic way to measure this is by taking the difference between each output activation and its target value, squaring it, and summing up all these squared differences. For one training example, that gives us the cost.

- If the image is a “3” and the network lights up the “3” neuron with a strong activation (close to 1) while keeping the others close to 0, the cost is low, and that’s good.

- If it activates a bunch of neurons randomly, including “7” and “2,” the cost will be high because that’s not what we want.

Then we average this cost over the entire training set, tens of thousands of examples. This gives us a single number that reflects how well (or how poorly) the network is doing overall.
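The squared-difference cost described above can be sketched in a few lines of NumPy. This is only an illustration: the function name `example_cost` and the two output vectors are made up for the example, and the "training set" here is just two hand-written predictions rather than real network outputs.

```python
import numpy as np

def example_cost(output, target_digit):
    """Sum of squared differences between the 10 output activations
    and a target that is 1 for the correct digit and 0 elsewhere."""
    target = np.zeros(10)
    target[target_digit] = 1.0
    return float(np.sum((output - target) ** 2))

# Hypothetical outputs for an image of a "3".
# A confident, correct prediction: only the "3" neuron is lit.
good = np.array([0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0])
# A scattered prediction that also lights up "2" and "7".
bad = np.array([0.1, 0.0, 0.8, 0.3, 0.0, 0.0, 0.1, 0.9, 0.0, 0.2])

cost_good = example_cost(good, 3)  # low cost
cost_bad = example_cost(bad, 3)    # high cost

# Averaging over all examples gives the single overall number.
average_cost = (cost_good + cost_bad) / 2
print(cost_good, cost_bad, average_cost)
```

The scattered prediction is penalized both for the weak “3” activation and for every wrong neuron that fired.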

Here’s where it gets a bit meta.

We already know the network itself is a function:

It takes 784 pixel values and produces 10 outputs (activations for digits 0 through 9).

But the cost function is a new function that sits on top of the network. It takes all 13,002 weights and biases as input, runs the network on every training example, and produces one number: how bad things are.

So, in a sense, we’re not just evaluating how the network processes a single input; we’re evaluating the performance of the entire network configuration.

That’s a huge space to explore: roughly 13,000 dimensions!
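For readers wondering where 13,002 comes from, here is a quick count. It assumes the architecture used in this series: 784 inputs, two hidden layers of 16 neurons each, and 10 outputs, with every neuron connected to every neuron in the previous layer. The variable names are just for illustration.

```python
# Layer sizes: input, hidden 1, hidden 2, output.
layer_sizes = [784, 16, 16, 10]

# One weight for every connection between consecutive layers.
weights = sum(n_in * n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))

# One bias for every neuron outside the input layer.
biases = sum(layer_sizes[1:])

total = weights + biases
print(weights, biases, total)  # 12960 42 13002
```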

Just pointing out that the network is doing badly isn’t useful unless we also know how to fix it.

To make things simpler, let’s step back and imagine a basic one-dimensional function, something with a single input and a single output. How would you find the input value that minimizes the output?

If you’ve studied calculus, you know the trick:

Use the slope of the function to figure out which direction to move.

- If the slope is positive, go left.

- If the slope is negative, go right.

By taking small steps in the right direction and checking the slope at each point, we inch closer to a local minimum, a valley in the curve where the function is lower than at any nearby point.

This process is known as gradient descent. It’s how we train our network.
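Here is a minimal sketch of that one-dimensional procedure. The function `f`, its slope, the starting point, and the step size are all made up for illustration; nothing here is specific to neural networks yet.

```python
def f(x):
    # A simple curve with a single valley at x = 2.
    return (x - 2.0) ** 2 + 1.0

def slope(x):
    # Derivative of f: positive to the right of the valley, negative to the left.
    return 2.0 * (x - 2.0)

x = -3.0         # arbitrary starting point
step_size = 0.1  # how far to move per step (an assumed value)

for _ in range(100):
    # Positive slope -> move left; negative slope -> move right.
    x -= step_size * slope(x)

print(x, f(x))
```

Because each step is proportional to the slope, the steps shrink automatically as the curve flattens out near the bottom of the valley.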

Of course, with a network, we’re not dealing with one input; we’re dealing with 13,002. So instead of a slope, we calculate a gradient: the slope in every dimension at once.

So far, we’ve looked at how a neural network uses a cost function to judge how bad its predictions are, and how it tries to adjust its weights and biases to do better next time. But how, exactly, does it know how to adjust them?

That’s where gradient descent comes in.

Imagine a simple function with two inputs instead of one, like a surface floating over an XY plane. The height of this surface at any point tells you the function’s output. You’re now looking for the lowest point on this bumpy surface, aka the minimum of the function.

But in this two-dimensional space, there’s not just a “slope”; there’s a direction that points downhill the fastest. You’re asking: Which way should I step in the XY plane to decrease the function most quickly?

This is exactly what the gradient tells you. It’s a vector, a direction that shows you the steepest path uphill. So if you want to go downhill, you follow the negative gradient.

And the length of that vector tells you how steep the slope is.

You don’t need to be a multivariable calculus wizard to understand the rest; just know this:

If you can compute the gradient of a function, then you can take small steps in the opposite direction (downhill), and eventually land in a valley, a local minimum.
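The same idea with two inputs can be sketched as follows. The bowl-shaped surface `height`, its hand-derived `gradient`, the starting point, and the step size are all assumptions chosen to keep the example small; a real cost surface would be far bumpier.

```python
import numpy as np

def height(p):
    # A made-up surface over the XY plane with its lowest point at (0, 0).
    x, y = p
    return x ** 2 + 3.0 * y ** 2

def gradient(p):
    # Partial derivatives of height with respect to x and y.
    # This vector points in the direction of steepest ascent.
    x, y = p
    return np.array([2.0 * x, 6.0 * y])

p = np.array([4.0, -2.0])  # arbitrary starting point
start_height = height(p)
step_size = 0.1            # assumed value

for _ in range(200):
    # Step against the gradient to go downhill.
    p = p - step_size * gradient(p)

print(p, height(p))
```

Nothing in the loop depends on there being exactly two inputs, which is why the same update carries over to thousands of parameters.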

Now replace that 2D input space with a 13,002-dimensional space.

That’s how many weights and biases we’re adjusting in our network.

We can think of these parameters as a giant column vector, and the negative gradient is another vector pointing in the direction that reduces the cost function the fastest.

So what does a gradient step look like here?

It’s basically saying:

- “Nudge this weight up just a little.”

- “Push this bias down a bit more.”

- “Leave that other one mostly unchanged; it doesn’t matter much.”

And we do this over and over and over.

Each component of the gradient vector tells us two things:

- Direction: Should this weight or bias go up or down?

- Importance: How much does changing this specific parameter actually matter for reducing the cost?

Some weights matter more than others. A tweak to one connection might drastically improve performance, while another might barely affect anything. The relative size of each gradient component reflects that.
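To make the “direction and importance” reading concrete, here is a toy example with three parameters. The parameter values, the gradient values, and the learning rate are all hypothetical numbers picked for illustration, not values from a trained network.

```python
import numpy as np

names = ["weight A", "bias B", "weight C"]
params = np.array([0.50, -1.20, 0.30])

# Hypothetical gradient of the cost with respect to each parameter.
grad = np.array([-3.0, 0.5, 0.01])

learning_rate = 0.1            # assumed step size
step = -learning_rate * grad   # move against the gradient
new_params = params + step

for name, g, s in zip(names, grad, step):
    direction = "up" if s > 0 else "down"
    print(f"{name}: gradient {g:+.2f} -> move {direction} by {abs(s):.3f}")
```

The sign of each component sets the direction of the nudge, and its magnitude sets how large the nudge is: “weight A” moves the most, while “weight C” is left almost unchanged.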

One subtle but important thing to understand is that for gradient descent to work well, the cost function must be smooth. That way, the tiny nudges we take using the gradient can consistently lead us downward.

This is actually one reason artificial neurons use smooth activation functions like sigmoid (or continuous, piecewise-linear ones like ReLU) instead of behaving like binary on/off switches the way biological neurons do. Smooth outputs mean smooth cost functions, and that makes optimization possible.
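A small comparison shows why an on/off neuron gives gradient descent nothing to work with. The input value `z` and the size of the nudge are arbitrary choices for this sketch.

```python
import math

def step_activation(z):
    # Binary on/off neuron: fires fully or not at all.
    return 1.0 if z > 0 else 0.0

def sigmoid(z):
    # Smooth neuron: output changes gradually with its input.
    return 1.0 / (1.0 + math.exp(-z))

z = 0.5       # arbitrary weighted input to the neuron
nudge = 0.01  # a tiny change, like one small gradient step

step_change = step_activation(z + nudge) - step_activation(z)
sigmoid_change = sigmoid(z + nudge) - sigmoid(z)

print(step_change, sigmoid_change)
```

The step neuron’s output does not move at all, so the cost would not register the nudge. The sigmoid’s output shifts slightly, which is exactly the kind of feedback the gradient relies on.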

So what does it actually mean for the network to learn? It means this:

The network adjusts its weights and biases, over and over, following the negative gradient of the cost function, making small improvements with each step.

That’s gradient descent in action.

Eventually, the network lands in some valley in that huge 13,000-dimensional space, where the cost function is as low as it can get (at least locally). And at that point, it’s not just making random guesses but making decisions based on the patterns it has recognized in the data.

After walking through the entire process, random initialization, cost functions, and gradient descent, you might be wondering: Okay, but does it actually work?

Surprisingly, yes.

Let’s take the simple neural network described so far: two hidden layers, each with 16 neurons. This structure was chosen mostly for simplicity and visualization purposes and not because it’s optimal. And yet, this modest network can correctly classify about 96% of new, unseen digit images from the MNIST test set.

That’s not bad at all.

And to be fair, when you look at some of the images it does get wrong, you can almost sympathize with the network. Some digits are so messy or ambiguous that even a human might hesitate.

With a bit of tuning, say, adjusting the hidden layer sizes or experimenting with activations, you can push that accuracy up to 98%. That’s pretty impressive, considering how little information we actually give the model.

But even at 96%, there’s something magical going on. This network is managing to generalize to completely new images, despite never being explicitly told what features to look for.

It’s never been told: “Look for curves for a 6” or “Straight lines for a 1.”

It just learns, and that’s worth pausing to appreciate.

You might remember how we initially justified the layered structure of the network:

- Maybe the first hidden layer picks up on edges.

- The second hidden layer combines those into shapes, like loops or lines.

- The output layer then identifies full digits.

That’s a nice story. A very intuitive one.

But… it turns out to be not true, at least not for this specific setup.

You can actually visualize what each neuron in the second layer (the first hidden layer) is “looking for” by examining the weights of the connections from the input layer to that neuron. Think of each neuron’s incoming weights as a tiny 28×28 pixel image, a filter that’s trying to detect certain patterns.
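Turning a neuron’s incoming weights into an image is just a reshape. The sketch below uses random numbers as a stand-in for trained weights, and it assumes the 784 pixels were flattened row by row; the array name `first_layer_weights` is made up for the example.

```python
import numpy as np

rng = np.random.default_rng(0)

# Stand-in for trained weights: one row of 784 incoming weights
# for each of the 16 neurons in the first hidden layer.
first_layer_weights = rng.normal(size=(16, 784))

neuron_index = 0

# Assuming pixels were flattened row by row, reshaping recovers
# the 28x28 layout of the input image.
weight_image = first_layer_weights[neuron_index].reshape(28, 28)

print(weight_image.shape)
```

With a plotting library available, something like `matplotlib.pyplot.imshow(weight_image)` would display the result, with large positive and large negative weights showing up as contrasting colors.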

If our neat theory about edge detection were right, we’d expect these weight visualizations to look like edges, corners, or shapes.

But they don’t.

Instead, they often appear random, with maybe a few vague blobs of pattern in the middle.

So what’s going on?

Basically, in the enormous 13,000-dimensional space of possible weights and biases, the network has found some local minimum that works well enough; it gets the answer right most of the time. But that doesn’t mean it learned the patterns we hoped it would learn.

It’s doing its job… just not in the way we imagined.

To really hammer home this point, try feeding the network a random image, just noise.

You might expect the network to say, “Uh… I don’t know,” or at least spread its guesses across all 10 digits.

But instead, it confidently spits out a digit. Sometimes it insists that this mess of static is a “5” just as confidently as it would recognize a real 5.

This reveals something important:

Even though the network has learned to classify digits well, it has no idea what a digit actually is.

Why? Because it was never trained to be uncertain. The training setup was extremely narrow and controlled. All the network has ever seen are centered, unmoving handwritten digits in a tiny 28×28 grid. It never had to deal with variation, randomness, or out-of-distribution inputs.

From the network’s perspective, that is the whole universe.

Given all that, why did we start off with the intuitive story of edge detectors and shape recognizers?

Because while this simple network doesn’t behave that way, the idea still serves an important purpose. It’s a stepping stone to understanding more advanced models that do build up patterns in a structured way, like convolutional neural networks (CNNs), which are specifically designed to detect local patterns and visual features.

This basic network setup, sometimes called a “vanilla feedforward neural network”, was heavily researched in the 1980s and 90s. It’s not the cutting edge anymore, but it’s foundational.

And despite its simplicity, it works.

It shows us that even without explicit rules, even without telling it what patterns to find, a network can stumble its way into competent behavior. It’s not truly “intelligent,” but it’s doing something meaningful and forms the bedrock of everything that’s come since.

To wrap up, let’s step back from our example network and take a broader look at how modern neural networks behave, especially when the models become huge and the datasets get more complex.

This brings us to an insightful conversation 3Blue1Brown had with Leisha Lee, a deep learning researcher with a PhD in the field. She shared thoughts on a couple of recent papers that challenge and refine our understanding of how deep networks learn, especially when it comes to image recognition.

The first paper discussed in their chat explored what happens when you take a high-performing image classification model and completely shuffle the labels of the training data before training begins. That means, for example, an image of a cat might now be labeled as a toaster, and a dog might be labeled as a mountain.

As expected, when the model was later tested on real, correctly labeled data, it performed no better than random chance; after all, it had learned nonsense labels.

But here’s the surprising part: the network still achieved 100% training accuracy, just as it would have on correctly labeled data.

Despite the labels being random, the model managed to memorize the entire dataset, mapping each image to its (nonsensical) assigned label.
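The label-shuffling step itself is simple to sketch. This is not the paper’s code: the label array below is a small made-up stand-in with ten classes, used only to show what shuffling does to the relationship between images and labels.

```python
import numpy as np

rng = np.random.default_rng(0)

# Stand-in for the true labels of 1,000 training images (10 classes).
labels = np.array([0, 1, 2, 3, 4, 5, 6, 7, 8, 9] * 100)

# Shuffle the labels while leaving the images in their original order,
# so each image is now paired with an essentially arbitrary label.
shuffled_labels = rng.permutation(labels)

# Fraction of images that happen to keep their correct label.
agreement = float(np.mean(shuffled_labels == labels))
print(agreement)
```

With ten equally common classes, only about one image in ten keeps its correct label by coincidence, so any pattern linking image content to label is destroyed while the overall label counts stay the same.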

That raises an uncomfortable question:

If these networks can simply memorize arbitrary data, then is training a model really about learning meaningful structure in the data, or are we just watching a giant memorization engine at work?

This experiment cast doubt on the deeper learning narrative many of us like to believe: that the model is finding edges, textures, and semantic concepts. Maybe it’s just brute-forcing memorization when we give it enough parameters and compute.

Leisha then pointed to a follow-up paper, presented about six months later at ICML, that revisited this issue.

This new work didn’t directly refute the original findings, but instead offered a more nuanced view.

It compared how training progresses in two different scenarios:

- When trained on random labels

- When trained on correct, structured labels

Here’s what they found:

- In the case of random labels, the accuracy improves slowly, almost in a linear fashion. This suggests the network is struggling to find a good configuration of weights, searching hard for that local minimum.

- On the other hand, when trained on correct labels, the network may wobble a bit at the beginning, but then quickly converges to a high-accuracy solution.

That means:

It’s easier for a network to learn from structured, meaningful data than from random noise.

This difference in how quickly the network converges reveals something important: while the model can memorize random data, it’s not necessarily designed to do so efficiently. When there’s real structure in the dataset, the model learns much more quickly, suggesting that it’s doing more than blind memorization.

Leisha also brought up an earlier paper that adds another piece to the puzzle. It looked at the optimization landscape of deep neural networks, the vast, complex “terrain” that gradient descent moves through as it searches for low-cost configurations.

One interesting finding was this:

Many of the local minima found by these networks are of roughly equal quality.

In other words, it doesn’t matter exactly which local minimum you land in; if your dataset is well-structured, any of them might lead to good performance.

This means that:

- We don’t necessarily need to land in the global minimum.

- The landscape itself is often shaped in a way that helps gradient descent land somewhere useful, as long as the data has real, meaningful structure.

All of this brings us to a more mature understanding of what neural networks are doing during training:

- Yes, with enough capacity, they can memorize.

- But when trained on structured data, they seem to be doing something smarter, something that leverages the patterns and relationships inherent in the input.

And crucially, gradient descent doesn’t need to be perfect. It just needs to guide the network into one of the many good-enough valleys in this high-dimensional terrain.

It’s a beautiful, messy mix of brute-force power, elegant mathematics, and emergent behavior. And although our simple network with two hidden layers might not capture edges like we hoped, the tools we’re using (cost functions, gradient descent, optimization) are the same ones driving state-of-the-art systems today.