Using knowledge graphs and AI to retrieve, filter, and summarize medical journal articles

The accompanying code for the app and notebook is here.

Knowledge graphs (KGs) and Large Language Models (LLMs) are a match made in heaven. My previous posts discuss the complementarities of these two technologies in more detail, but the short version is, “some of the main weaknesses of LLMs, that they are black-box models and struggle with factual knowledge, are some of KGs’ greatest strengths. KGs are, essentially, collections of facts, and they are fully interpretable.”

This article is all about building a simple Graph RAG app. What is RAG? RAG, or Retrieval-Augmented Generation, is about retrieving relevant information to augment a prompt that is sent to an LLM, which generates a response. Graph RAG is RAG that uses a knowledge graph as part of the retrieval portion. If you’ve never heard of Graph RAG, or want a refresher, I’d watch this video.

The basic idea is that, rather than sending your prompt directly to an LLM, which was not trained on your data, you can supplement your prompt with the relevant information needed for the LLM to answer your prompt accurately. The example I use often is copying a job description and my resume into ChatGPT to write a cover letter. The LLM is able to provide a much more relevant response to my prompt, ‘write me a cover letter,’ if I give it my resume and the description of the job I’m applying for. Since knowledge graphs are built to store knowledge, they are a perfect way to store internal data and supplement LLM prompts with additional context, improving the accuracy and contextual understanding of the responses.
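As a rough illustration of that idea, here is a minimal sketch of prompt augmentation in plain Python. The function name `build_augmented_prompt` and the prompt wording are assumptions made for this example, not part of the app; the point is only that retrieved context is placed ahead of the question before anything is sent to an LLM.

```python
def build_augmented_prompt(question, context_docs):
    """Prepend retrieved context to the user's question (hypothetical helper)."""
    # Number each context document so the model (and the reader) can refer to it.
    context = "\n\n".join(
        f"[{i}] {doc}" for i, doc in enumerate(context_docs, start=1)
    )
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


# Toy stand-ins for the resume and job description from the cover letter example.
resume = "Resume: five years of experience as a data engineer."
job = "Job description: senior data engineer, healthcare analytics."

prompt = build_augmented_prompt("Write me a cover letter.", [resume, job])
print(prompt)
```

In a Graph RAG app, the list passed as `context_docs` would come from the retrieval step rather than being typed in by hand.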

This technology has many, many applications, such as customer service bots, drug discovery, automated regulatory report generation in life sciences, talent acquisition and management for HR, legal research and writing, and wealth advisor assistants. Because of the wide applicability and the potential to improve the performance of LLM tools, Graph RAG (that’s the term I’ll use here) has been blowing up in popularity. Here is a graph showing interest over time based on Google searches.

Graph RAG has experienced a surge in search interest, even surpassing terms like knowledge graphs and retrieval-augmented generation. Note that Google Trends measures relative search interest, not absolute number of searches. The spike in July 2024 for searches of Graph RAG coincides with the week Microsoft announced that their GraphRAG application would be available on GitHub.

The excitement around Graph RAG is broader than just Microsoft, however. Samsung acquired Oxford Semantic Technologies, the knowledge graph company behind RDFox, in July of 2024. The article announcing that acquisition did not mention Graph RAG explicitly, but in this article in Forbes published in November 2024, a Samsung spokesperson stated, “We plan to develop knowledge graph technology, one of the main technologies of personalized AI, and organically connect with generated AI to support user-specific services.”

In October 2024, Ontotext, a leading graph database company, and Semantic Web Company, the maker of PoolParty, a knowledge graph curation platform, merged to form Graphwise. According to the press release, the merger aims to “democratize the evolution of Graph RAG as a category.”

While some of the buzz around Graph RAG may come from the broader excitement surrounding chatbots and generative AI, it reflects a genuine evolution in how knowledge graphs are being applied to solve complex, real-world problems. One example is that LinkedIn applied Graph RAG to improve their customer service technical support. Because the tool was able to retrieve the relevant data (like previously solved similar tickets or questions) to feed the LLM, the responses were more accurate and the mean resolution time dropped from 40 hours to 15 hours.

This post will go through the construction of a pretty simple, but I think illustrative, example of how Graph RAG can work in practice. The end result is an app that a non-technical user can interact with. Like my last post, I will use a dataset consisting of medical journal articles from PubMed. The idea is that this is an app that someone in the medical field could use to do literature review. The same principles can be applied to many use cases, however, which is why Graph RAG is so exciting.

The structure of the app, along with this post, is as follows:

Step zero is preparing the data. I’ll explain the details below, but the overall goal is to vectorize the raw data and, separately, turn it into an RDF graph. As long as we keep URIs tied to the articles before we vectorize, we can navigate across a graph of articles and a vector space of articles. Then, we can:

- Search Articles: use the power of the vector database to do an initial search of relevant articles given a search term. I will use vector similarity to retrieve articles with the most similar vectors to that of the search term.

- Refine Terms: explore the Medical Subject Headings (MeSH) biomedical vocabulary to select terms to use to filter the articles from step 1. This controlled vocabulary contains medical terms, alternative names, narrower concepts, and many other properties and relationships.

- Filter & Summarize: use the MeSH terms to filter the articles to avoid ‘context poisoning’. Then send the remaining articles to an LLM along with an additional prompt like, “summarize in bullets.”
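The filtering in the third step can be sketched in a few lines. This is a toy version under stated assumptions: the articles are plain dictionaries, the `mesh_terms` field is already a Python list, and the helper name `filter_by_mesh` is hypothetical. It keeps an article only if it carries at least one of the selected MeSH terms, which is what keeps off-topic context out of the prompt.

```python
def filter_by_mesh(articles, selected_terms):
    """Keep articles tagged with at least one selected MeSH term (hypothetical helper)."""
    selected = {term.lower() for term in selected_terms}
    kept = []
    for article in articles:
        tags = {tag.lower() for tag in article["mesh_terms"]}
        if selected & tags:  # non-empty intersection means a matching tag
            kept.append(article)
    return kept


# Toy results standing in for the articles returned by the vector search.
retrieved = [
    {"title": "Article A", "mesh_terms": ["Humans", "Mouth Neoplasms"]},
    {"title": "Article B", "mesh_terms": ["Humans", "Dental Caries"]},
    {"title": "Article C", "mesh_terms": ["Mouth Neoplasms", "Smoking"]},
]

filtered = filter_by_mesh(retrieved, ["Mouth Neoplasms"])
print([article["title"] for article in filtered])
```

Only the articles in `filtered` would then be passed to the LLM together with the user's extra prompt.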

Some notes on this app and tutorial before we get started:

- This set-up uses knowledge graphs exclusively for metadata. This is only possible because each article in my dataset has already been tagged with terms that are part of a rich controlled vocabulary. I am using the graph for structure and semantics and the vector database for similarity-based retrieval, ensuring each technology is used for what it does best. Vector similarity can tell us “esophageal cancer” is semantically similar to “mouth cancer”, but knowledge graphs can tell us the details of the relationship between “esophageal cancer” and “mouth cancer.”

- The data I used for this app is a collection of medical journal articles from PubMed (more on the data below). I chose this dataset because it is structured (tabular) but also contains text in the form of abstracts for each article, and because it is already tagged with topical terms that are aligned with a well-established controlled vocabulary (MeSH). Because these are medical articles, I have called this app ‘Graph RAG for Medicine.’ But this same structure can be applied to any domain and is not specific to the medical field.

- What I hope this tutorial and app demonstrate is that you can improve the results of your RAG application in terms of accuracy and explainability by incorporating a knowledge graph into the retrieval step. I will show how KGs can improve the accuracy of RAG applications in two ways: by giving the user a way of filtering the context to ensure the LLM is only being fed the most relevant information; and by using domain-specific controlled vocabularies with dense relationships that are maintained and curated by domain experts to do the filtering.

- What this tutorial and app don’t directly showcase are two other significant ways KGs can enhance RAG applications: governance, access control, and regulatory compliance; and efficiency and scalability. For governance, KGs can do more than filter content for relevancy to improve accuracy: they can enforce data governance policies. For instance, if a user lacks permission to access certain content, that content can be excluded from their RAG pipeline. On the efficiency and scalability side, KGs can help ensure RAG applications don’t die on the shelf. While it’s easy to create an impressive one-off RAG app (that’s literally the purpose of this tutorial), many companies struggle with a proliferation of disconnected POCs that lack a cohesive framework, structure, or platform. That means many of those apps are not going to survive long. A metadata layer powered by KGs can break down data silos, providing the foundation needed to build, scale, and maintain RAG applications effectively. Using a rich controlled vocabulary like MeSH for the metadata tags on these articles is a way of ensuring this Graph RAG app can be integrated with other systems and reducing the risk that it becomes a silo.

The code to prepare the data is in this notebook.

As mentioned, I’ve again decided to use this dataset of 50,000 research articles from the PubMed repository (License CC0: Public Domain). This dataset contains the title of the articles, their abstracts, as well as a field for metadata tags. These tags are from the Medical Subject Headings (MeSH) controlled vocabulary thesaurus. The PubMed articles are really just metadata on the articles: there are abstracts for each article but we don’t have the full text. The data is already in tabular format and tagged with MeSH terms.

We can vectorize this tabular dataset directly. We could turn it into a graph (RDF) before we vectorize, but I didn’t do that for this app and I don’t know that it would help the final results for this kind of data. The most important thing about vectorizing the raw data is that we add Uniform Resource Identifiers (URIs) to each article first. A URI is a unique ID for navigating RDF data and it is necessary for us to go back and forth between vectors and entities in our graph. Additionally, we will create a separate collection in our vector database for the MeSH terms. This will allow the user to search for relevant terms without having prior knowledge of this controlled vocabulary. Below is a diagram of what we are doing to prepare our data.

We have two collections in our vector database to query: articles and terms. We also have the data represented as a graph in RDF format. Since MeSH has an API, I am just going to query the API directly to get alternative names and narrower concepts for terms.

Vectorize data in Weaviate

First import the necessary packages and set up the Weaviate client:

import weaviate
from weaviate.util import generate_uuid5
from weaviate.classes.init import Auth
import os
import json
import pandas as pd

client = weaviate.connect_to_weaviate_cloud(
    cluster_url="XXX",  # Replace with your Weaviate Cloud URL
    auth_credentials=Auth.api_key("XXX"),  # Replace with your Weaviate Cloud key
    headers={'X-OpenAI-Api-key': "XXX"}  # Replace with your OpenAI API key
)

Read in the PubMed journal articles. I am using Databricks to run this notebook so you may need to change this, depending on where you run it. The goal here is just to get the data into a pandas DataFrame.

df = spark.sql("SELECT * FROM workspace.default.pub_med_multi_label_text_classification_dataset_processed").toPandas()

If you’re running this locally, just do:

df = pd.read_csv("PubMed Multi Label Text Classification Dataset Processed.csv")

Then clean the data up a bit:

import numpy as np

# Replace infinity values with NaN and then fill NaN values
df.replace([np.inf, -np.inf], np.nan, inplace=True)
df.fillna('', inplace=True)

# Convert columns to string type
df['Title'] = df['Title'].astype(str)
df['abstractText'] = df['abstractText'].astype(str)
df['meshMajor'] = df['meshMajor'].astype(str)

Now we need to create a URI for each article and add that in as a new column. This is important because the URI is the way we can connect the vector representation of an article with the knowledge graph representation of the article.

import re
import urllib.parse
from urllib.parse import quote
from rdflib import Graph, RDF, RDFS, Namespace, URIRef, Literal

# Function to create a valid URI
def create_valid_uri(base_uri, text):
    if pd.isna(text):
        return None
    # Encode text to be used in URI
    sanitized_text = urllib.parse.quote(text.strip().replace(' ', '_').replace('"', '').replace('<', '').replace('>', '').replace("'", "_"))
    return URIRef(f"{base_uri}/{sanitized_text}")

# Function to create a valid URI for Articles
def create_article_uri(title, base_namespace="http://example.org/article/"):
    """
    Creates a URI for an article by replacing non-word characters with underscores and URL-encoding.

    Args:
        title (str): The title of the article.
        base_namespace (str): The base namespace for the article URI.

    Returns:
        URIRef: The formatted article URI.
    """
    if pd.isna(title):
        return None
    # Replace non-word characters with underscores
    sanitized_title = re.sub(r'\W+', '_', title.strip())
    # Condense multiple underscores into a single underscore
    sanitized_title = re.sub(r'_+', '_', sanitized_title)
    # URL-encode the term
    encoded_title = quote(sanitized_title)
    # Concatenate with base_namespace without adding underscores
    uri = f"{base_namespace}{encoded_title}"
    return URIRef(uri)

# Add a new column to the DataFrame for the article URIs
df['Article_URI'] = df['Title'].apply(lambda title: create_valid_uri("http://example.org/article", title))
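To make the URI step concrete, here is a small standalone sketch that mirrors the sanitizing logic above using only the standard library. It returns a plain string instead of an rdflib `URIRef`, and the helper name `article_uri` and the sample title are made up for illustration.

```python
import urllib.parse


def article_uri(base_uri, title):
    """Build an article URI from a title (standalone, string-only sketch)."""
    cleaned = title.strip().replace(" ", "_")
    # Drop characters that are awkward in URIs, as the code above does.
    for ch in ('"', "<", ">"):
        cleaned = cleaned.replace(ch, "")
    cleaned = cleaned.replace("'", "_")
    # Percent-encode whatever is left (for example, non-ASCII letters).
    return f"{base_uri}/{urllib.parse.quote(cleaned)}"


uri = article_uri("http://example.org/article", "Café study of <oral> cancer")
print(uri)  # http://example.org/article/Caf%C3%A9_study_of_oral_cancer
```

Because the URI is derived only from the title, the same title always yields the same URI, which is what lets the vector store and the graph refer to the same article. The flip side is that two articles with identical titles would collide, so a dataset with duplicate titles may need an extra disambiguating field.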

We also want to create a DataFrame of all of the MeSH terms that are used to tag the articles. This will be helpful later when we want to search for similar MeSH terms.

# Function to clean and parse MeSH terms
def parse_mesh_terms(mesh_list):
    if pd.isna(mesh_list):
        return []
    return [
        term.strip().replace(' ', '_')
        for term in mesh_list.strip("[]'").split(',')
    ]

# Function to create a valid URI for MeSH terms
def create_valid_uri(base_uri, text):
    if pd.isna(text):
        return None
    sanitized_text = urllib.parse.quote(
        text.strip()
        .replace(' ', '_')
        .replace('"', '')
        .replace('<', '')
        .replace('>', '')
        .replace("'", "_")
    )
    return f"{base_uri}/{sanitized_text}"

# Extract and process all MeSH terms
all_mesh_terms = []
for mesh_list in df["meshMajor"]:
    all_mesh_terms.extend(parse_mesh_terms(mesh_list))

# Deduplicate terms
unique_mesh_terms = list(set(all_mesh_terms))

# Create a DataFrame of MeSH terms and their URIs
mesh_df = pd.DataFrame({
    "meshTerm": unique_mesh_terms,
    "URI": [create_valid_uri("http://example.org/mesh", term) for term in unique_mesh_terms]
})

# Display the DataFrame
print(mesh_df)
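One thing worth checking when parsing the `meshMajor` column is what a comma-based split does to the quotes and to terms that contain commas. The sketch below assumes the column holds a Python-style list literal stored as a string (the app later calls `ast.literal_eval` on it, which suggests as much). It compares the split-based approach with an `ast.literal_eval` variant; the function names and the sample value are made up for this comparison.

```python
import ast


def parse_mesh_terms_split(mesh_list):
    """Split-based parsing, mirroring the approach used above."""
    return [t.strip().replace(" ", "_") for t in mesh_list.strip("[]'").split(",")]


def parse_mesh_terms_literal(mesh_list):
    """Alternative: parse the string as a Python list literal first."""
    return [t.strip().replace(" ", "_") for t in ast.literal_eval(mesh_list)]


sample = "['Humans', 'Mouth Neoplasms', 'Carcinoma, Squamous Cell']"

print(parse_mesh_terms_split(sample))
# ["Humans'", "'Mouth_Neoplasms'", "'Carcinoma", 'Squamous_Cell']

print(parse_mesh_terms_literal(sample))
# ['Humans', 'Mouth_Neoplasms', 'Carcinoma,_Squamous_Cell']
```

The split version leaves stray quote characters and breaks a term with an internal comma into two pieces, while the literal version keeps each term intact. Whether that matters depends on how the terms are matched later, so treat this as something to verify against your own copy of the data rather than a required change.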

Vectorize the articles DataFrame:

from weaviate.classes.config import Configure

# Define the collection
articles = client.collections.create(
    name="Article",
    vectorizer_config=Configure.Vectorizer.text2vec_openai(),  # If set to "none" you must always provide vectors yourself. Could be any other "text2vec-*" also.
    generative_config=Configure.Generative.openai(),  # Ensure the `generative-openai` module is used for generative queries
)

# Add objects
articles = client.collections.get("Article")

with articles.batch.dynamic() as batch:
    for index, row in df.iterrows():
        batch.add_object({
            "title": row["Title"],
            "abstractText": row["abstractText"],
            "Article_URI": row["Article_URI"],
            "meshMajor": row["meshMajor"],
        })

Now vectorize the MeSH terms:

# Define the collection
terms = client.collections.create(
    name="term",
    vectorizer_config=Configure.Vectorizer.text2vec_openai(),  # If set to "none" you must always provide vectors yourself. Could be any other "text2vec-*" also.
    generative_config=Configure.Generative.openai(),  # Ensure the `generative-openai` module is used for generative queries
)

# Add objects
terms = client.collections.get("term")

with terms.batch.dynamic() as batch:
    for index, row in mesh_df.iterrows():
        batch.add_object({
            "meshTerm": row["meshTerm"],
            "URI": row["URI"],
        })

You can, at this point, run semantic search, similarity search, and RAG directly against the vectorized dataset. I won’t go through all of that here but you can look at the code in my accompanying notebook to do that.

Turn data into a knowledge graph

I am just using the same code we used in the last post to do this. We are basically turning every row in the data into an “Article” entity in our KG. Then we are giving each of these articles properties for title, abstract, and MeSH terms. We are also turning every MeSH term into an entity as well. This code also adds random dates to each article for a property called date published and a random number between 1 and 10 to a property called access. We won’t use these properties in this demo. Below is a visual representation of the graph we are creating from the data.

Here is how to iterate through the DataFrame and turn it into RDF data:

from rdflib import Graph, RDF, RDFS, Namespace, URIRef, Literal
from rdflib.namespace import SKOS, XSD
import pandas as pd
import urllib.parse
import random
from datetime import datetime, timedelta
import re
from urllib.parse import quote

# --- Initialization ---
g = Graph()

# Define namespaces
schema = Namespace('http://schema.org/')
ex = Namespace('http://example.org/')
prefixes = {
    'schema': schema,
    'ex': ex,
    'skos': SKOS,
    'xsd': XSD
}
for p, ns in prefixes.items():
    g.bind(p, ns)

# Define classes and properties
Article = URIRef(ex.Article)
MeSHTerm = URIRef(ex.MeSHTerm)
g.add((Article, RDF.type, RDFS.Class))
g.add((MeSHTerm, RDF.type, RDFS.Class))

title = URIRef(schema.name)
abstract = URIRef(schema.description)
date_published = URIRef(schema.datePublished)
access = URIRef(ex.access)

g.add((title, RDF.type, RDF.Property))
g.add((abstract, RDF.type, RDF.Property))
g.add((date_published, RDF.type, RDF.Property))
g.add((access, RDF.type, RDF.Property))

# Function to clean and parse MeSH terms
def parse_mesh_terms(mesh_list):
    if pd.isna(mesh_list):
        return []
    return [term.strip() for term in mesh_list.strip("[]'").split(',')]

# Enhanced convert_to_uri function
def convert_to_uri(term, base_namespace="http://example.org/mesh/"):
    """
    Converts a MeSH term into a standardized URI by replacing spaces and special characters with underscores,
    ensuring it starts and ends with a single underscore, and URL-encoding the term.

    Args:
        term (str): The MeSH term to convert.
        base_namespace (str): The base namespace for the URI.

    Returns:
        URIRef: The formatted URI.
    """
    if pd.isna(term):
        return None  # Handle NaN or None terms gracefully

    # Step 1: Strip leading and trailing non-word characters (such as quotes and spaces)
    stripped_term = re.sub(r'^\W+|\W+$', '', term)

    # Step 2: Replace runs of non-word characters with an underscore
    formatted_term = re.sub(r'\W+', '_', stripped_term)

    # Step 3: Replace multiple consecutive underscores with a single underscore
    formatted_term = re.sub(r'_+', '_', formatted_term)

    # Step 4: URL-encode the term to handle any remaining special characters
    encoded_term = quote(formatted_term)

    # Step 5: Add single leading and trailing underscores
    term_with_underscores = f"_{encoded_term}_"

    # Step 6: Concatenate with base_namespace without adding an extra underscore
    uri = f"{base_namespace}{term_with_underscores}"

    return URIRef(uri)

# Function to generate a random date within the last 5 years
def generate_random_date():
    start_date = datetime.now() - timedelta(days=5*365)
    random_days = random.randint(0, 5*365)
    return start_date + timedelta(days=random_days)

# Function to generate a random access value between 1 and 10
def generate_random_access():
    return random.randint(1, 10)

# Function to create a valid URI for Articles
def create_article_uri(title, base_namespace="http://example.org/article"):
    """
    Creates a URI for an article by replacing spaces with underscores, dropping quotes and angle brackets, and URL-encoding.

    Args:
        title (str): The title of the article.
        base_namespace (str): The base namespace for the article URI.

    Returns:
        URIRef: The formatted article URI.
    """
    if pd.isna(title):
        return None
    # Encode text to be used in URI
    sanitized_text = urllib.parse.quote(title.strip().replace(' ', '_').replace('"', '').replace('<', '').replace('>', '').replace("'", "_"))
    return URIRef(f"{base_namespace}/{sanitized_text}")

# Loop through each row in the DataFrame and create RDF triples
for index, row in df.iterrows():
    article_uri = create_article_uri(row['Title'])
    if article_uri is None:
        continue

    # Add Article instance
    g.add((article_uri, RDF.type, Article))
    g.add((article_uri, title, Literal(row['Title'], datatype=XSD.string)))
    g.add((article_uri, abstract, Literal(row['abstractText'], datatype=XSD.string)))

    # Add random datePublished and access
    random_date = generate_random_date()
    random_access = generate_random_access()
    g.add((article_uri, date_published, Literal(random_date.date(), datatype=XSD.date)))
    g.add((article_uri, access, Literal(random_access, datatype=XSD.integer)))

    # Add MeSH Terms
    mesh_terms = parse_mesh_terms(row['meshMajor'])
    for term in mesh_terms:
        term_uri = convert_to_uri(term, base_namespace="http://example.org/mesh/")
        if term_uri is None:
            continue

        # Add MeSH Term instance
        g.add((term_uri, RDF.type, MeSHTerm))
        g.add((term_uri, RDFS.label, Literal(term.replace('_', ' '), datatype=XSD.string)))

        # Link Article to MeSH Term
        g.add((article_uri, schema.about, term_uri))

# Path to save the file
file_path = "/Workspace/PubMedGraph.ttl"

# Save the file
g.serialize(destination=file_path, format='turtle')
print(f"File saved at {file_path}")

OK, so now we have a vectorized version of the data, and a graph (RDF) version of the data. Each vector has a URI associated with it, which corresponds to an entity in the KG, so we can go back and forth between the data formats.
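Here is a toy sketch of what "going back and forth" means in practice. It uses plain Python tuples as stand-ins for RDF triples and dictionaries as stand-ins for vector search hits, so nothing here calls Weaviate or rdflib; the sample URIs, the `schema:about` shorthand, and the helper `mesh_terms_for` are all illustrative assumptions.

```python
# Toy vector-search hits: each one carries the URI stored alongside its vector.
hits = [
    {"title": "Article A", "article_URI": "http://example.org/article/Article_A", "distance": 0.12},
    {"title": "Article B", "article_URI": "http://example.org/article/Article_B", "distance": 0.19},
]

# Toy graph: (subject, predicate, object) tuples standing in for RDF triples.
triples = {
    ("http://example.org/article/Article_A", "schema:about", "http://example.org/mesh/_Mouth_Neoplasms_"),
    ("http://example.org/article/Article_A", "schema:about", "http://example.org/mesh/_Humans_"),
    ("http://example.org/article/Article_B", "schema:about", "http://example.org/mesh/_Dental_Caries_"),
}


def mesh_terms_for(article_uri, graph):
    """Look up the MeSH term URIs linked to an article URI in the toy graph."""
    return sorted(o for s, p, o in graph if s == article_uri and p == "schema:about")


# The URI on each vector hit is the key into the graph.
for hit in hits:
    print(hit["title"], mesh_terms_for(hit["article_URI"], triples))
```

The shared URI is the only thing the two representations need to agree on, which is why it has to be generated the same way in both steps.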

I decided to use Streamlit to build the interface for this Graph RAG app. Like the last blog post, I have kept the user flow the same.

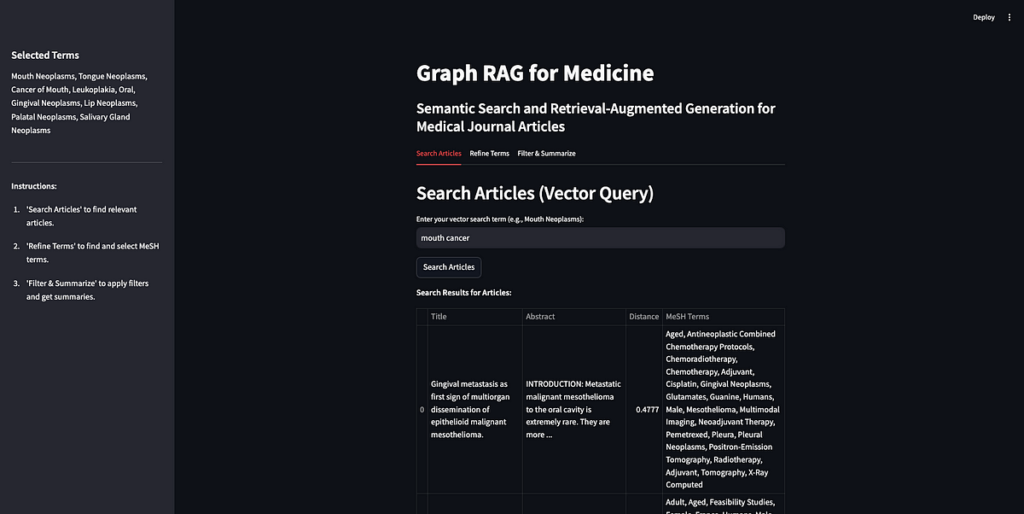

- Search Articles: First, the user searches for articles using a search term. This relies exclusively on the vector database. The user’s search term(s) is sent to the vector database and the ten articles nearest the term in vector space are returned.

- Refine Terms: Second, the user decides the MeSH terms to use to filter the returned results. Since we also vectorized the MeSH terms, we can have the user enter a natural language prompt to get the most relevant MeSH terms. Then, we allow the user to expand these terms to see their alternative names and narrower concepts. The user can select as many terms as they want for their filter criteria.

- Filter & Summarize: Third, the user applies the selected terms as filters to the original ten journal articles. We can do this since the PubMed articles are tagged with MeSH terms. Finally, we let the user enter an additional prompt to send to the LLM along with the filtered journal articles. This is the generative step of the RAG app.
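The "expand to narrower concepts" part of the second step can be pictured with a small in-memory vocabulary. The sketch below is an assumption-heavy stand-in: the `NARROWER` dictionary is a tiny hand-written subset used only for illustration (it is not fetched from the MeSH API), and `expand_term` is a hypothetical helper that walks the hierarchy depth-first.

```python
# Hand-written toy hierarchy; a real app would get this from the MeSH vocabulary.
NARROWER = {
    "Mouth Neoplasms": ["Gingival Neoplasms", "Lip Neoplasms", "Tongue Neoplasms"],
}


def expand_term(term, narrower):
    """Return the term followed by every narrower concept beneath it."""
    expanded, stack = [], [term]
    while stack:
        current = stack.pop()
        if current in expanded:
            continue  # guard against cycles or repeated entries
        expanded.append(current)
        # Reverse so children are visited in their listed order.
        stack.extend(reversed(narrower.get(current, [])))
    return expanded


print(expand_term("Mouth Neoplasms", NARROWER))
```

Selecting a broad term and expanding it this way gives the user a larger, but still controlled, set of tags to filter on.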

Let’s go through these steps one at a time. You can see the full app and code on my GitHub, but here is the structure:

-- app.py (a Python file that drives the app and calls other functions as needed)
-- query_functions (a folder containing Python files with queries)
   -- rdf_queries.py (Python file with RDF queries)
   -- weaviate_queries.py (Python file containing Weaviate queries)
-- PubMedGraph.ttl (the PubMed data in RDF format, stored as a ttl file)

Search Articles

The first thing we want to do is implement Weaviate’s vector similarity search. Since our articles are vectorized, we can send a search term to the vector database and get similar articles back.

The main function that searches for relevant journal articles in the vector database is in app.py:

# --- TAB 1: Search Articles ---
with tab_search:
    st.header("Search Articles (Vector Query)")
    query_text = st.text_input("Enter your vector search term (e.g., Mouth Neoplasms):", key="vector_search")

    if st.button("Search Articles", key="search_articles_btn"):
        try:
            client = initialize_weaviate_client()
            article_results = query_weaviate_articles(client, query_text)

            # Extract URIs here
            article_uris = [
                result["properties"].get("article_URI")
                for result in article_results
                if result["properties"].get("article_URI")
            ]

            # Store article_uris in the session state
            st.session_state.article_uris = article_uris

            st.session_state.article_results = [
                {
                    "Title": result["properties"].get("title", "N/A"),
                    "Abstract": (result["properties"].get("abstractText", "N/A")[:100] + "..."),
                    "Distance": result["distance"],
                    "MeSH Terms": ", ".join(
                        ast.literal_eval(result["properties"].get("meshMajor", "[]"))
                        if result["properties"].get("meshMajor") else []
                    ),
                }
                for result in article_results
            ]
            client.close()
        except Exception as e:
            st.error(f"Error during article search: {e}")

    if st.session_state.article_results:
        st.write("**Search Results for Articles:**")
        st.table(st.session_state.article_results)
    else:
        st.write("No articles found yet.")

This function uses the queries stored in weaviate_queries to establish the Weaviate client (initialize_weaviate_client) and search for articles (query_weaviate_articles). Then we display the returned articles in a table, along with their abstracts, distance (how close they are to the search term), and the MeSH terms that they are tagged with.

The function to query Weaviate in weaviate_queries.py looks like this:

from weaviate.classes.query import MetadataQuery

# Function to query Weaviate for Articles
def query_weaviate_articles(client, query_text, limit=10):
    # Perform vector search on Article collection
    response = client.collections.get("Article").query.near_text(
        query=query_text,
        limit=limit,
        return_metadata=MetadataQuery(distance=True)
    )

    # Parse response
    results = []
    for obj in response.objects:
        results.append({
            "uuid": obj.uuid,
            "properties": obj.properties,
            "distance": obj.metadata.distance,
        })
    return results

As you can see, I put a limit of ten results here just to make it simpler, but you can change that. This is just using vector similarity search in Weaviate to return relevant results.
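For intuition about the distance value returned with each result, here is a pure-Python sketch of a nearest-neighbor lookup. It assumes cosine distance (one minus cosine similarity), which is a common choice for text embeddings; the metric your collection actually uses depends on its configuration. The two-dimensional vectors and the helper names are invented for this example, since real embeddings have far more dimensions.

```python
import math


def cosine_distance(a, b):
    """Cosine distance: 0 means same direction, 1 means orthogonal."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def nearest(query_vec, items, limit=10):
    """Return up to `limit` (distance, name) pairs, closest first."""
    scored = [(cosine_distance(query_vec, vec), name) for name, vec in items.items()]
    return sorted(scored)[:limit]


# Toy embeddings standing in for article vectors.
articles = {
    "oral cancer study": [0.9, 0.1],
    "tongue tumor review": [0.8, 0.3],
    "knee surgery outcomes": [0.0, 1.0],
}

for distance, name in nearest([1.0, 0.0], articles, limit=2):
    print(f"{distance:.3f}  {name}")
```

A vector database does the same ranking, but with approximate indexes so that it does not have to compare the query against every stored vector.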

The end result in the app looks like this: