Sentiment analysis can be a useful tool for organizations to identify and address their customers’ pain points. In a Repustate case study, a bank in South Africa noticed that many customers had stopped doing business with them, and they were concerned as to why this was happening. To gain further clarity on this issue, they collected social media data to understand what their customers were saying about them.

The bank realized that many of their clients were dissatisfied with the customer service: long waiting times (especially during lunch and peak hours) and even the operating hours were inconvenient. By performing sentiment analysis on over 2 million pieces of text data, they not only identified the problem, but also learned how to resolve it.

Management improved the operating hours and increased the number of tellers in each branch. They also made sure teller stations were never left unmanned during lunchtime or peak hours so that customers were served on time. As a result, there was a significant drop in customer churn rates and a rise in the number of new clients.

Essentially, sentiment analysis is the process of mining text data to extract the underlying emotion behind it in order to add value and pinpoint critical issues in a business. In this tutorial, I’ll show you how to build your own sentiment analysis model in Python, step by step.

- How to Perform Sentiment Analysis in Python?

- Python Pre-Requisites

- Reading the Dataset

- Data Preprocessing

- TF-IDF Transformation

- Building and Evaluating the ML Model

- Sentiment Analysis with Python: Next Steps

You’re probably already familiar with Python, but if not, it’s a powerful programming language with an intuitive syntax. It’s also one of the most popular choices across the data science community, which makes it ideal for our tutorial.

We will use the Trip Advisor Hotel Reviews Kaggle dataset for this analysis, so make sure you have it downloaded before you start so that you can code along.

First things first: installing the necessary tools. You need a Python IDE; I recommend using Jupyter. (If you don’t already have it, follow this Jupyter Notebook tutorial to set it up on your machine.)

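Make sure you have the following libraries installed as well: NumPy, pandas, Matplotlib, seaborn, and scikit-learn. We will also use regular expressions, but the re module ships with Python and needs no separate installation.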

Let’s start by loading the dataset into Python and reading the head of the data frame:

import pandas as pd

df = pd.read_csv('tripadvisor_hotel_reviews.csv')

df.head()

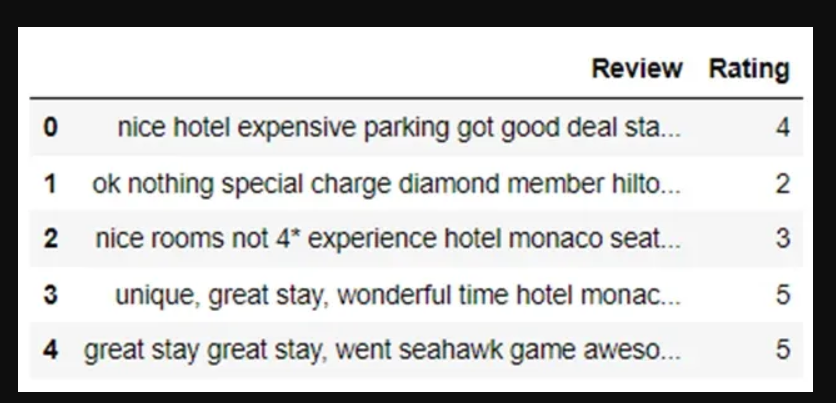

The code above ought to render the next output:

This dataset has only 2 variables: “Review”, which contains guests’ impressions of the hotel, and “Rating”, the corresponding numerical evaluation (or, in simpler terms, the number of stars they’ve left).

Now, let’s take a look at the number of rows in the data frame:

len(df.index) # 20491

We learn that it contains 20,491 reviews.

As we already know, the TripAdvisor dataset has 2 variables: user reviews and ratings, which range from 1 to 5. We will use “Rating” to create a new variable called “Sentiment.” In it, we’ll add 3 categories of sentiment as follows:

- 1 and 2 will be encoded as -1 as they indicate negative sentiment

- 3 will be labeled as 0 as it has a neutral sentiment

- 4 and 5 will be labeled as +1 as they indicate positive sentiment

Let’s create a Python function to accomplish this categorization:

import numpy as np

def create_sentiment(rating):

    if rating == 1 or rating == 2:

        return -1 # negative sentiment

    elif rating == 4 or rating == 5:

        return 1 # positive sentiment

    else:

        return 0 # neutral sentiment

# apply the function to every rating to build the target column

df['Sentiment'] = df['Rating'].apply(create_sentiment)

Now, let’s take a look at the head of the data frame again:

Notice that we have a new column called “Sentiment”; this will be our target variable. We will train a machine learning model to predict the sentiment of each review.
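As a possible alternative to labeling row by row with `apply`, the same mapping can be sketched in vectorized form with NumPy’s `np.select`. The small `ratings` frame below is made-up illustration data standing in for the real file, and the counts it prints say nothing about the TripAdvisor dataset.

```python
import numpy as np
import pandas as pd

# Toy ratings standing in for df['Rating'] (illustrative values only)
ratings = pd.DataFrame({'Rating': [1, 2, 3, 4, 5, 5]})

conditions = [
    ratings['Rating'] <= 2,   # 1 or 2 stars
    ratings['Rating'] >= 4,   # 4 or 5 stars
]
labels = [-1, 1]

# A rating that matches neither condition (3 stars) falls back to 0
ratings['Sentiment'] = np.select(conditions, labels, default=0)

# Count how many rows landed in each sentiment class
class_counts = ratings['Sentiment'].value_counts().to_dict()
print(class_counts)
```

Checking the class counts on the real data frame in the same way is a quick test of whether one sentiment dominates the others, which matters later when reading the accuracy score.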

First, however, we need to preprocess the “Review” column in order to remove punctuation, special characters, and digits. The code looks like this:

import re

def clean_data(review):

    # remove every character that is not a word character or whitespace

    no_punc = re.sub(r'[^\w\s]', '', review)

    # remove digits

    no_digits = ''.join([i for i in no_punc if not i.isdigit()])

    return no_digits

In this way, we’ll eliminate unnecessary noise and retain only the information that’s valuable to the final sentiment analysis.

Let’s take a look at the first review in the data frame to see what kind of punctuation we’d be removing:

df['Review'][0]

Notice that it contains commas. The preprocessing function will deal with these. Apply it to this column and let’s look at the review again:

df['Review'] = df['Review'].apply(clean_data)

df['Review'][0]

All the commas are gone and we’re left with clean text data.
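To see what the cleaning step does without loading the dataset, here is a minimal standalone sketch of the same function applied to a made-up review string (the `sample` text is an assumption for illustration, not a row from the file).

```python
import re

def clean_data(review):
    # Drop every character that is neither a word character nor whitespace
    no_punc = re.sub(r'[^\w\s]', '', review)
    # Drop digits, keeping all other characters in order
    return ''.join(ch for ch in no_punc if not ch.isdigit())

sample = "nice hotel, 4 stars!"   # made-up review text
cleaned = clean_data(sample)
print(repr(cleaned))
```

Removing the digit leaves two consecutive spaces behind. That should be harmless for the next step, since a tokenizer that splits on word boundaries ignores extra whitespace. Note also that `\w` matches underscores, so those survive the cleaning.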

Now, we need to convert this text data into a numeric representation so that it can be ingested into the ML model. We will do this with the help of scikit-learn’s TfidfVectorizer class.

TF-IDF stands for “term frequency-inverse document frequency”, a statistical measure that tells us how relevant a word is to a document in a collection. In simpler terms, it converts each document into a vector of numbers in which every word has its own numeric weight.

TF-IDF is calculated based on 2 metrics:

- Term frequency

- Inverse document frequency

Let’s look at each individually.

Term Frequency

It’s really what it says on the tin: how many times a term is repeated in a single document. Words that appear more frequently in a piece of text are considered to carry a lot of importance. For example, in this sentiment analysis tutorial, we repeat the words “sentiment” and “analysis” multiple times, so a model looking at term frequency would consider them highly relevant.

Inverse Document Frequency

Rather than focusing on an individual document, inverse document frequency looks at how many documents in the whole collection contain a word. And, opposite to the previous metric, here the higher the frequency, the lower the relevance. This helps the algorithm downweight naturally occurring words such as “a”, “the”, “and”, etc., as they will appear frequently across all documents in a corpus.
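The two metrics can be worked through by hand on a tiny corpus. The sketch below is plain Python on three made-up sentences. It assumes the smoothed IDF variant, ln((1 + n) / (1 + df)) + 1, which scikit-learn documents as its default, and it skips the row normalization that TfidfVectorizer applies afterwards, so the numbers are illustrative rather than identical to the library’s output.

```python
import math
from collections import Counter

# Three made-up "documents"
corpus = [
    "the room was clean",
    "the staff was rude",
    "the pool was closed",
]
docs = [doc.split() for doc in corpus]
n_docs = len(docs)

# Document frequency: in how many documents does each word occur?
doc_freq = Counter(word for doc in docs for word in set(doc))

def idf(word):
    # Smoothed inverse document frequency (assumed variant, see lead-in)
    return math.log((1 + n_docs) / (1 + doc_freq[word])) + 1

def tfidf(word, doc):
    # Term frequency here is the raw count of the word in this document
    return doc.count(word) * idf(word)

print(round(idf("the"), 3), round(idf("clean"), 3))
print(round(tfidf("clean", docs[0]), 3), round(tfidf("the", docs[0]), 3))
```

“the” occurs in every document, so it receives the lowest possible IDF, while “clean” occurs in only one and is weighted higher, even though both appear exactly once in the first sentence.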

Now that you understand how TF-IDF works, let’s use this algorithm to vectorize our data:

from sklearn.feature_extraction.text import TfidfVectorizer

tfidf = TfidfVectorizer(strip_accents=None,

                        lowercase=False,

                        preprocessor=None)

# learn the vocabulary and turn every review into a TF-IDF vector

X = tfidf.fit_transform(df['Review'])

We have successfully transformed the reviews in our dataset into a matrix of TF-IDF vectors, one row per review, that can be fed into a machine learning algorithm!

We can now train our algorithm on the review data to classify its sentiment into 3 categories: negative, neutral, and positive.

First, let’s perform a train-test split:

from sklearn.model_selection import train_test_split

y = df['Sentiment'] # target variable

X_train, X_test, y_train, y_test = train_test_split(X,y)
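By default the split is random, so results change from run to run. A hedged variation is to fix `random_state` and pass `stratify` so that each sentiment class keeps the same proportion in both parts. The arrays below are toy data and the seed value 42 is an arbitrary choice, both assumptions for illustration.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Toy feature matrix and imbalanced labels (illustrative only)
X_demo = np.arange(40).reshape(20, 2)
y_demo = np.array([-1] * 4 + [0] * 4 + [1] * 12)

X_tr, X_te, y_tr, y_te = train_test_split(
    X_demo, y_demo,
    test_size=0.25,      # 25% of the rows go to the test set
    stratify=y_demo,     # keep class proportions the same in both parts
    random_state=42,     # arbitrary seed, makes the split repeatable
)

# Class labels and their counts in the test set
print(np.unique(y_te, return_counts=True))
```

With stratification, the test set mirrors the 20/20/60 class mix of the toy labels instead of depending on the luck of the draw.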

Now, fit a logistic regression classifier on the training dataset and use it to make predictions on the test data:

from sklearn.linear_model import LogisticRegression

lr = LogisticRegression(solver='liblinear')

lr.fit(X_train,y_train) # fit the model

preds = lr.predict(X_test) # make predictions

Finally, evaluate the performance:

from sklearn.metrics import accuracy_score

accuracy_score(y_test, preds) # 0.86

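Our model has an accuracy of approximately 0.86, meaning it assigns the correct sentiment to roughly 86% of the test reviews. The exact figure will vary slightly between runs because the train-test split is random.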
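Accuracy is a single number, and it can hide weak performance on a class with few examples. One way to look closer, sketched here on made-up labels rather than the tutorial’s real predictions, is a confusion matrix together with scikit-learn’s per-class report.

```python
from sklearn.metrics import (accuracy_score, classification_report,
                             confusion_matrix)

# Made-up true labels and predictions (not results from the tutorial)
y_true = [1, 1, 1, 1, 1, 1, 0, 0, -1, -1]
y_pred = [1, 1, 1, 1, 1, 1, 1, 1, -1, 1]

acc = accuracy_score(y_true, y_pred)

# Rows are true classes, columns are predicted classes, ordered -1, 0, 1
cm = confusion_matrix(y_true, y_pred, labels=[-1, 0, 1])

print(acc)
print(cm)
# zero_division=0 avoids a warning for classes that are never predicted
print(classification_report(y_true, y_pred, labels=[-1, 0, 1],
                            zero_division=0))
```

In this toy example the overall accuracy looks acceptable even though the neutral class is never predicted correctly, which is exactly the kind of problem the per-class view exposes.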

And that concludes our tutorial! For a better understanding of the concept, here is the complete sentiment analysis Python code I’ve used:

import pandas as pd

import numpy as np

import re

from sklearn.feature_extraction.text import TfidfVectorizer

from sklearn.model_selection import train_test_split

from sklearn.linear_model import LogisticRegression

from sklearn.metrics import accuracy_score

df = pd.read_csv('tripadvisor_hotel_reviews.csv')

def create_sentiment(rating):

    res = 0 # neutral sentiment

    if rating == 1 or rating == 2:

        res = -1 # negative sentiment

    elif rating == 4 or rating == 5:

        res = 1 # positive sentiment

    return res

df['Sentiment'] = df['Rating'].apply(create_sentiment)

def clean_data(review):

    # remove every character that is not a word character or whitespace

    no_punc = re.sub(r'[^\w\s]', '', review)

    # remove digits

    no_digits = ''.join([i for i in no_punc if not i.isdigit()])

    return no_digits

df['Review'] = df['Review'].apply(clean_data)

tfidf = TfidfVectorizer(strip_accents=None,

lowercase=False,

preprocessor=None)

X = tfidf.fit_transform(df['Review'])

y = df['Sentiment']

X_train, X_test, y_train, y_test = train_test_split(X,y)

lr = LogisticRegression(solver='liblinear')

lr.fit(X_train,y_train)

preds = lr.predict(X_test)

accuracy_score(y_test, preds)
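As one possible next step, the vectorizer and the classifier can be bundled into a single scikit-learn pipeline. This is a sketch of a different arrangement from the script above, not the code used in this tutorial: the pipeline learns the TF-IDF vocabulary only from the data passed to `fit`, and it can score raw text directly. The training reviews and labels below are made up for illustration.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import make_pipeline

# Made-up training reviews with negative (-1) and positive (1) labels
train_reviews = [
    "great lovely stay",
    "great clean room",
    "awful dirty room",
    "awful rude staff",
]
train_labels = [1, 1, -1, -1]

# Vectorizer and classifier are fitted together, in order
model = make_pipeline(
    TfidfVectorizer(lowercase=False),
    LogisticRegression(solver='liblinear'),
)
model.fit(train_reviews, train_labels)

# Raw text goes in; the pipeline vectorizes it before predicting
new_reviews = ["great stay", "awful staff"]
new_preds = model.predict(new_reviews)
print(new_preds)
```

Fitting the pipeline on the training portion only keeps information from the test reviews out of the vocabulary and IDF weights.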