GPUs serve as the fundamental computational engines for AI. However, in large-scale training environments, overall performance is often limited not by processing speed, but by the speed of the network communication between GPUs.

Large language models are trained on thousands of GPUs, which creates an enormous amount of cross-GPU traffic. In these systems, even the smallest delays compound. A microsecond lag when GPUs share data can cause a chain reaction that adds hours to the training job. Therefore, these systems require a specialized network that is designed to transfer large amounts of data with minimal delay.

The traditional approach of routing GPU data through the CPU created a severe bottleneck at scale. To fix this bottleneck, technologies like RDMA and GPUDirect were invented to essentially build a bypass around the CPU. This creates a direct path for GPUs to talk to one another.

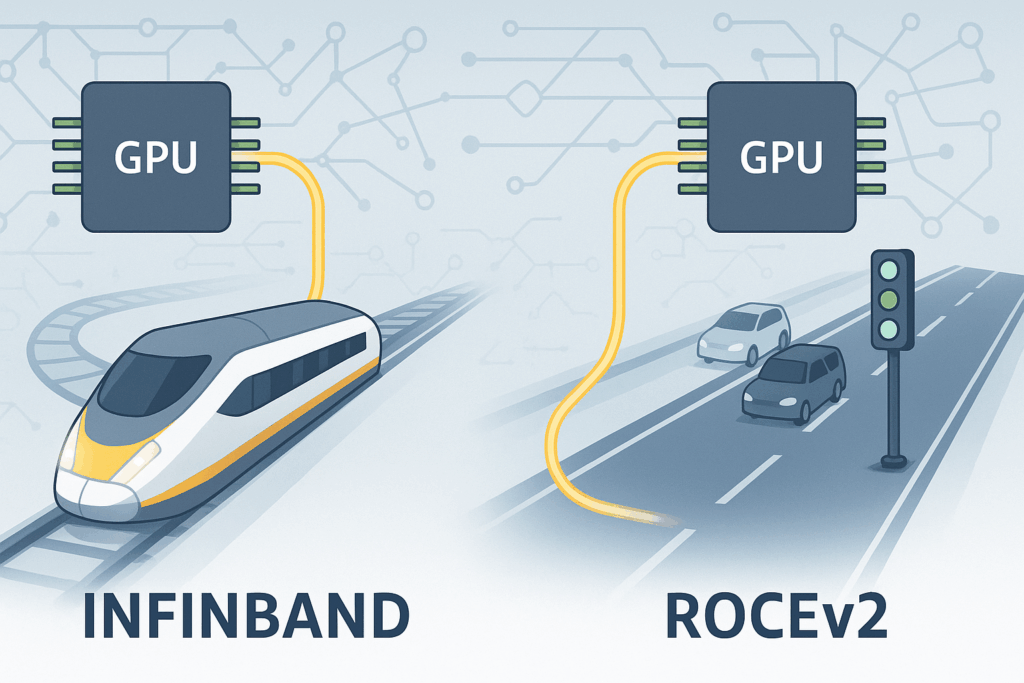

This direct communication method needs a network that can handle the speed. The two main choices available today are InfiniBand and RoCEv2.

So, how do you choose between InfiniBand and RoCEv2? It's a big decision, forcing you to balance raw speed against budget and how much hands-on tuning you're willing to do.

Let's take a closer look at each technology to see its strengths and weaknesses.

Basic Concepts

Before we compare InfiniBand and RoCEv2, let's first understand how traditional communication works and introduce some basic concepts like RDMA and GPUDirect.

Traditional Communication

In traditional systems, most of the data movement between machines is handled by the CPU. When a GPU finishes its computation and needs to send data to a remote node, the transfer follows these steps:

- The GPU writes the data to the system (host) memory

- The CPU copies that data into a buffer used by the network card

- The NIC (Network Interface Card) sends the data over the network

- On the receiving node, the NIC delivers the data to the CPU

- The CPU writes it into system memory

- The GPU reads it from system memory

This approach works well for small systems, but it doesn't scale for AI workloads. As more data gets copied around, the delays start to add up, and the network struggles to keep up.
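To make the cost of this path concrete, here is a minimal sketch that models the six steps above as hops and counts how many of them involve a CPU. The `Hop` class, the hop labels, and the 1 MB payload are illustrative assumptions, not measurements from a real system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hop:
    src: str
    dst: str
    cpu_involved: bool  # True when a CPU has to touch the data on this hop


# The six steps listed above, expressed as hops (labels are illustrative).
TRADITIONAL_PATH = [
    Hop("GPU memory (sender)", "host memory (sender)", False),
    Hop("host memory (sender)", "NIC buffer (sender)", True),
    Hop("NIC (sender)", "NIC (receiver)", False),
    Hop("NIC (receiver)", "CPU (receiver)", True),
    Hop("CPU (receiver)", "host memory (receiver)", True),
    Hop("host memory (receiver)", "GPU memory (receiver)", False),
]


def summarize(path, payload_bytes):
    """Return (number of hops, bytes that a CPU has to handle) for one transfer."""
    cpu_hops = sum(1 for hop in path if hop.cpu_involved)
    return len(path), cpu_hops * payload_bytes


hops, cpu_bytes = summarize(TRADITIONAL_PATH, payload_bytes=1_000_000)
print(f"{hops} hops, {cpu_bytes} bytes handled by CPUs")
```

In this toy model, a single 1 MB message makes the CPUs handle three times the payload size, which hints at why the approach gets expensive once thousands of GPUs exchange data continuously.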

RDMA

Remote Direct Memory Access (RDMA) allows a local machine to access the memory of a remote machine directly, without involving the CPU in the data transfer. In this architecture, the network interface card handles the memory operations independently, reading from or writing to remote memory regions without creating intermediate copies of the data. This direct memory access removes the bottlenecks associated with CPU-mediated data transfers and reduces overall system latency.

RDMA proves particularly valuable in AI training environments, where thousands of GPUs must share gradient information efficiently. By bypassing operating system overhead and the delays it adds to network transfers, RDMA enables the high-throughput, low-latency communication essential for distributed machine learning.

GPUDirect RDMA

GPUDirect is NVIDIA's way of letting GPUs talk directly to other hardware through PCIe connections. Normally, when a GPU needs to transfer data to another device, it has to take the long way around. The data goes from GPU memory to system memory first, then the receiving device grabs it from there. GPUDirect skips that detour through the CPU and system memory entirely. Data moves directly from one GPU to another.

GPUDirect RDMA extends this to network transfers by allowing the NIC to access GPU memory directly over PCIe.

Now that we understand concepts like RDMA and GPUDirect, let's look at InfiniBand and RoCEv2, the infrastructure technologies that support GPUDirect RDMA.

InfiniBand

InfiniBand is a high-performance networking technology designed specifically for data centers and supercomputing environments. While Ethernet was built to handle general traffic, InfiniBand is designed to deliver the high speed and low latency that workloads such as AI training demand.

It's like a high-speed bullet train where both the train and the tracks are designed to maintain the speed. InfiniBand follows the same idea: everything, including the cables, network cards, and switches, is designed to move data fast and avoid delays.

How does it work?

InfiniBand works completely differently from regular Ethernet. It doesn't use the regular TCP/IP protocol. Instead, it relies on its own lightweight transport layers designed for speed and low latency.

At the core of InfiniBand is RDMA, which allows one server to directly access the memory of another without involving the CPU. InfiniBand supports RDMA in hardware, so the network card, called a Host Channel Adapter or HCA, handles data transfers directly without interrupting the operating system or creating extra copies of data.

InfiniBand also uses a lossless communication model. It avoids dropping packets even under heavy traffic by using credit-based flow control. The sender transmits data only when the receiver has enough buffer space available.
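The credit idea can be illustrated with a small simulation. This is a toy model under stated assumptions, not how an HCA implements it: the `Receiver` class, the `run` function, and the tick-based draining are all hypothetical, and real InfiniBand tracks credits per link and per virtual lane in hardware.

```python
from collections import deque


class Receiver:
    """Receiver with a fixed number of buffer slots (hypothetical model)."""

    def __init__(self, buffer_slots):
        self.buffer_slots = buffer_slots
        self.buffer = deque()

    def accept(self, packet):
        # With correct credit accounting this never overflows.
        if len(self.buffer) >= self.buffer_slots:
            raise OverflowError("sender transmitted without a credit")
        self.buffer.append(packet)

    def drain(self, count):
        """Consume up to `count` packets; each freed slot becomes a credit."""
        freed = min(count, len(self.buffer))
        for _ in range(freed):
            self.buffer.popleft()
        return freed


def run(num_packets, buffer_slots, drain_per_tick):
    if buffer_slots <= 0 or drain_per_tick <= 0:
        raise ValueError("buffer_slots and drain_per_tick must be positive")
    receiver = Receiver(buffer_slots)
    credits = buffer_slots  # one credit per free receive slot
    sent = ticks = max_occupancy = 0
    while sent < num_packets:
        # Transmit only while credits remain; otherwise the sender waits.
        while credits > 0 and sent < num_packets:
            receiver.accept(sent)
            credits -= 1
            sent += 1
        max_occupancy = max(max_occupancy, len(receiver.buffer))
        # The receiver processes some packets and returns the freed credits.
        credits += receiver.drain(drain_per_tick)
        ticks += 1
    return sent, ticks, max_occupancy


sent, ticks, max_occupancy = run(num_packets=10, buffer_slots=4, drain_per_tick=2)
print(sent, ticks, max_occupancy)
```

Because the sender never holds more credits than there are free slots, the receive buffer cannot overflow, so nothing has to be dropped. The price is that the sender stalls whenever credits run out.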

In large GPU clusters, InfiniBand switches move data between nodes with extremely low latency, often under one microsecond. And because the entire system is built for this purpose, everything from the hardware to the software works together to deliver consistent, high-throughput communication.

Let's walk through a simple GPU-to-GPU transfer over InfiniBand:

- GPU 1 hands off data to its HCA, skipping the CPU

- The HCA initiates an RDMA write to the remote GPU

- Data is transferred over the InfiniBand switch

- The receiving HCA writes the data directly to GPU 2's memory

Strengths

- Fast and predictable – InfiniBand delivers ultra-low latency and high bandwidth, keeping large GPU clusters running efficiently without hiccups.

- Built for RDMA – It handles RDMA in hardware and uses credit-based flow control to avoid packet drops, even under heavy load.

- Scalable – Since all parts of the system are designed to work together, performance holds up well as more nodes are added to the cluster.

Weaknesses

- Expensive – Hardware is costly and mostly tied to NVIDIA, which limits flexibility.

- Harder to manage – Setup and tuning require specialized skills. It's not as simple as Ethernet.

- Limited interoperability – It doesn't play well with standard IP networks, making it less flexible for general-purpose environments.

RoCEv2

RoCEv2 (RDMA over Converged Ethernet version 2) brings the benefits of RDMA to standard Ethernet networks. It takes a different approach than InfiniBand. Instead of needing a custom network fabric, it simply uses your regular IP network with UDP for transport.

Think of it like upgrading a regular highway with an express lane just for critical data. You don't need to rebuild the entire road system. You just need to reserve the fast lane and tune the traffic signals. RoCEv2 follows the same idea: it delivers high-speed, low-latency communication using the existing Ethernet infrastructure.

How does it work?

RoCEv2 brings RDMA to standard Ethernet by running over UDP and IP. It works across regular Layer 3 networks without needing a dedicated fabric. It uses commodity switches and routers, making it more accessible and cost-effective.

Like InfiniBand, RoCEv2 enables direct memory access between machines. The key difference is that while InfiniBand handles flow control and congestion in a closed, tightly controlled environment, RoCEv2 relies on enhancements to Ethernet, such as:

- Priority Flow Control (PFC) – Prevents packet loss by pausing traffic at the Ethernet layer based on priority.

- Explicit Congestion Notification (ECN) – Marks packets instead of dropping them when congestion is detected.

- Data Center Quantized Congestion Notification (DCQCN) – A congestion control protocol that reacts to ECN signals to manage traffic more smoothly.

To make RoCEv2 work well, the underlying Ethernet network needs to be lossless or close to it. Otherwise, RDMA performance drops. This requires careful configuration of switches, queues, and flow control mechanisms throughout the data center.
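The interplay between ECN marking and sender-side rate control can be sketched with a toy simulation. This is a deliberately simplified model and not the actual DCQCN algorithm: the `simulate` function, the single fixed marking threshold, the halve-on-mark rule, and all the numbers are assumptions chosen for illustration.

```python
def simulate(ticks, link_capacity, mark_threshold, start_rate):
    """Toy model: one sender, one switch queue, ECN-style marking."""
    rate = start_rate  # packets the sender offers per tick
    queue = 0          # packets waiting in the switch queue
    history = []
    for _ in range(ticks):
        # The switch forwards up to link_capacity packets; the rest queue up.
        queue = max(0, queue + rate - link_capacity)
        # ECN idea: signal congestion by marking instead of dropping.
        marked = queue > mark_threshold
        if marked:
            rate = max(1, rate // 2)  # back off when congestion is signaled
        else:
            rate += 1                 # cautiously probe for more bandwidth
        history.append((rate, queue, marked))
    return history


history = simulate(ticks=50, link_capacity=10, mark_threshold=20, start_rate=30)
for rate, queue, marked in history[:8]:
    print(f"rate={rate:3d} queue={queue:3d} marked={marked}")
```

Even in this crude model, the tuning problem is visible: a threshold that is too high lets the queue grow before the sender reacts, while one that is too low throttles the sender more often than needed.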

Let's walk through a simple GPU-to-GPU transfer with RoCEv2:

- GPU 1 hands off data to its NIC, skipping the CPU.

- The NIC wraps the RDMA write in UDP/IP and sends it over Ethernet.

- Data flows through standard Ethernet switches configured with PFC and ECN.

- The receiving NIC writes the data directly to GPU 2's memory.
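The "wraps the RDMA write in UDP/IP" step can be sketched by packing the headers by hand. This is a simplified illustration: in practice the NIC builds these packets in hardware, and the sketch leaves out the Ethernet and IP headers, the RDMA extended header (remote address, key, and length), and the trailing invariant CRC. The helper names, queue pair number, and source port are hypothetical. It uses UDP destination port 4791, the port registered for RoCEv2, and the 12-byte InfiniBand Base Transport Header layout.

```python
import struct

ROCEV2_UDP_PORT = 4791            # UDP destination port registered for RoCEv2
OPCODE_RC_RDMA_WRITE_ONLY = 0x0A  # reliable connection, single-packet RDMA write


def build_bth(opcode, dest_qp, psn, pkey=0xFFFF):
    """Pack a 12-byte Base Transport Header (flag bits left at zero)."""
    flags = 0  # solicited event, migration, pad count, version: simplified to 0
    return struct.pack(
        "!BBHII",
        opcode,
        flags,
        pkey,                # partition key (0xFFFF is the default partition)
        dest_qp & 0xFFFFFF,  # 24-bit destination queue pair, top byte reserved
        psn & 0xFFFFFF,      # 24-bit packet sequence number, top byte flags
    )


def build_udp_header(src_port, payload_len):
    """Pack an 8-byte UDP header; checksum left at zero for simplicity."""
    return struct.pack("!HHHH", src_port, ROCEV2_UDP_PORT, 8 + payload_len, 0)


payload = b"gradient-bytes"
bth = build_bth(OPCODE_RC_RDMA_WRITE_ONLY, dest_qp=0x12, psn=1)
datagram = build_udp_header(49152, len(bth) + len(payload)) + bth + payload
print(len(datagram), datagram[:8].hex())
```

Because the RDMA headers travel inside an ordinary UDP datagram, any IP router can forward the packet, which is what lets RoCEv2 cross Layer 3 boundaries.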

Strengths

- Cost-effective – RoCEv2 runs on standard Ethernet hardware, so you don't need a specialized network fabric or vendor-locked components.

- Easier to deploy – Since it uses familiar IP-based networking, it's easier for teams already managing Ethernet data centers to adopt.

- Flexible integration – RoCEv2 works well in mixed environments and integrates easily with existing Layer 3 networks.

Weaknesses

- Requires tuning – To avoid packet loss, RoCEv2 depends on careful configuration of PFC, ECN, and congestion control. Poor tuning can hurt performance.

- Less deterministic – Unlike InfiniBand's tightly controlled environment, Ethernet-based networks can introduce variability in latency and jitter.

- Complex at scale – As clusters grow, maintaining a lossless Ethernet fabric with consistent behavior becomes increasingly difficult.

Conclusion

In a large-scale GPU cluster, compute power is worthless if the network can't handle the load. Network performance becomes just as vital as the GPUs because it holds the whole system together. Technologies like RDMA and GPUDirect RDMA help cut out the usual slowdowns by eliminating unnecessary interruptions and CPU copying, letting GPUs talk directly to each other.

Both InfiniBand and RoCEv2 speed up GPU-to-GPU communication, but they take different approaches. InfiniBand builds its own dedicated network fabric. It provides excellent speed and low latency, but at a very high cost. RoCEv2 provides more flexibility by using the existing Ethernet infrastructure. It's easier on the budget, but it needs proper tuning of PFC and ECN to work well.

At the end of the day, it's a classic trade-off. Go with InfiniBand if your top priority is getting the best possible performance and budget is less of a concern. But if you want a more flexible solution that works with your existing network gear and costs less upfront, RoCEv2 is the way to go.