In this article, let’s dive into the research paper on InfiniteHiP, a new LLM framework for handling much longer context lengths in LLMs, and we will also walk through an implementation with Ollama models in Python.

Research Paper Link: https://arxiv.org/pdf/2502.08910

GitHub Repository Link: https://github.com/DeepAuto-AI/hip-attention

Here’s what we are going to learn in this article:

- Introduction

- Related Works

- Working of InfiniteHiP

- Implementation with Ollama Models

- Conclusion

Large Language Models (LLMs) have transformed AI-driven applications, from chatbots to document analysis and code generation. However, a major limitation of these models is context length. Most modern LLMs, including those used in retrieval-augmented generation (RAG), legal document analysis, chatbots, and AI-assisted research, struggle to process long inputs because of quadratic attention complexity (each new token attends to all previous tokens, so the total cost grows quadratically with the input sequence length) and memory constraints (the cost of storing the key-value cache). Longer context windows matter in several areas:

- Chatbots: Longer memory retention improves contextual understanding, leading to more coherent conversations.

- Document Processing: Efficiently handling thousands of pages is crucial for summarization, extraction, and analysis.

- AI Research: Keeping extensive reference material within context improves the accuracy and relevance of responses.

The challenge arises because traditional Transformer-based architectures need computational resources that grow quadratically as context length increases. In addition, the key-value (KV) cache, which stores the previously computed attention keys and values, grows linearly with context size and quickly consumes the available GPU memory.
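To put the linear KV-cache growth into numbers, here is a back-of-the-envelope calculation. The model shape is an assumption (a Llama-3-8B-like configuration with 32 layers, 8 KV heads of dimension 128, and bf16 storage), and `kv_cache_bytes` is a hypothetical helper, not part of any library.

```python
def kv_cache_bytes(num_tokens, num_layers, num_kv_heads, head_dim, bytes_per_elem=2):
    """Bytes needed to cache keys and values for every layer and KV head."""
    # Factor 2: one tensor for keys plus one for values, per layer.
    return 2 * num_layers * num_kv_heads * head_dim * num_tokens * bytes_per_elem

# Assumed Llama-3-8B-like shape: 32 layers, 8 KV heads (grouped-query attention),
# head dimension 128, bf16 storage (2 bytes per element).
config = dict(num_layers=32, num_kv_heads=8, head_dim=128)

for tokens in (8_192, 128 * 1024, 1_000_000, 3_000_000):
    gib = kv_cache_bytes(tokens, **config) / 2**30
    print(f"{tokens:>9,} tokens -> {gib:7.1f} GiB of KV cache")
```

Under these assumptions every token costs 128 KiB of cache, so 8K tokens need 1 GiB, 128K tokens need 16 GiB, and 3 million tokens would need roughly 366 GiB, far more than a single GPU holds. Closing that gap is what KV-cache offloading is for.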

InfiniteHiP addresses these issues by enabling models to process up to 3 million tokens on a single GPU while maintaining efficiency and accuracy. This breakthrough lets LLMs handle significantly longer contexts without additional fine-tuning or excessive memory consumption.

Let’s discuss some of the earlier approaches that have been proposed to reduce the high cost of attention mechanisms:

- FlashAttention and FlashAttention-2 (Source)

FlashAttention, introduced by Tri Dao et al., is an algorithm designed to reduce the memory and computational overhead of the attention mechanism in Transformers. It achieves this by reorganizing the computation to make better use of the GPU memory hierarchy, leading to significant speedups without approximations. Building on this, FlashAttention-2 further improves performance through better parallelism and work partitioning, achieving up to a 2x speedup over the original FlashAttention and reaching 50–73% of the theoretical maximum FLOPs/s on A100 GPUs.

- StreamingLLM (Source)

StreamingLLM addresses two major challenges in deploying LLMs for streaming applications: high memory consumption from storing key-value (KV) caches, and limited generalization to texts longer than those seen during training.

To solve this, attention sinks (initial tokens that attract strong attention scores) are kept in memory, allowing the model to maintain context without storing all past tokens. StreamingLLM further improves efficiency by using a sliding-window KV cache to manage memory, and relative positional encoding to improve coherence. This enables LLMs to handle infinitely long sequences without fine-tuning.

- H₂O (Heavy-Hitter Oracle) Framework (Source)

The H₂O (Heavy-Hitter Oracle) framework tackles the KV-cache memory problem by identifying and retaining “heavy-hitter” tokens (those that significantly influence attention scores) within the KV cache. This dynamic eviction policy balances the retention of recent tokens and heavy hitters, effectively reducing memory usage without compromising performance.

- HiP (Hierarchically Pruned) Attention (Source)

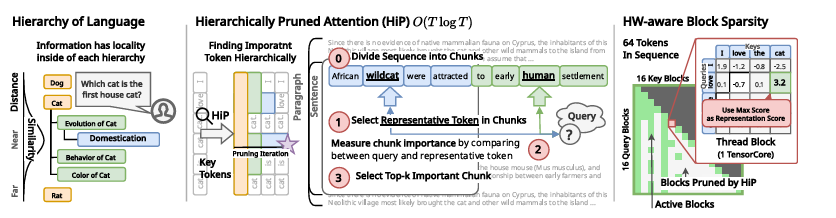

HiP is an innovative approach designed to improve the efficiency of Large Language Models (LLMs) when processing long sequences. Traditional attention mechanisms struggle with long contexts because of their quadratic time and space complexity, which drives up computational cost and memory usage. HiP addresses these issues with a dynamic sparse attention mechanism that reduces time complexity to O(T log T) and space complexity to O(T), where T is the sequence length. It does this with a novel tree-search-like algorithm that generates an attention mask on the fly, identifying the most significant elements for each query without retraining the model. With HiP, pretrained LLMs can scale to millions of tokens on commodity GPUs, significantly reducing latency and memory usage while maintaining high-quality output.

Let’s first look at the basics of HiP, which will help us understand the architecture and working of the InfiniteHiP framework.

HiP: working and architecture

Standard attention requires T² pairwise computations to capture the relationships among a sequence of T tokens. Despite its success, this quadratic complexity limits the long-context applications discussed above. FlashAttention reduces the space complexity to O(T), but the time complexity remains O(T²).
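A quick way to feel the difference between O(T²) and O(T log T) is to see what happens when the context doubles. This sketch deliberately ignores constant factors (such as HiP’s top-k size and block size), so it shows growth rates only, not real run times; the helper names are illustrative.

```python
import math

def dense_cost(t):
    # Dense attention scores every (query, key) pair: T * T dot products.
    return t * t

def hip_cost(t):
    # Asymptotic shape of HiP's mask search, O(T log T); constant factors
    # such as the top-k size and block size are deliberately ignored.
    return t * math.log2(t)

for t in (32_768, 131_072, 1_048_576):
    dense_growth = dense_cost(2 * t) / dense_cost(t)
    hip_growth = hip_cost(2 * t) / hip_cost(t)
    print(f"T={t:>9,} -> 2T: dense x{dense_growth:.2f}, T log T x{hip_growth:.2f}")
```

Doubling the context quadruples dense attention work at every length, while the T log T term grows by only a little more than 2x (about 2.13x at 32K tokens and 2.10x at 1M tokens).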

So, let’s see how HiP reduces this time complexity to O(T log T).

HiP is based on the idea of “attention locality”: tokens (words or symbols) that are close to each other often have similar attention scores. Instead of computing attention for every single token, HiP does the following:

Step 1: Dividing the Sequence into Chunks (Fig. 1 left)

- HiP splits the long sequence into smaller groups (chunks), each containing several tokens.

- It selects a single “representative” token from the middle of each chunk to summarize the importance of the entire chunk.

- This reduces the number of tokens directly considered in attention computations, making the process much faster.
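Step 1 can be sketched in a few lines of NumPy. This is a simplified illustration for a single query vector, not HiP’s GPU kernel; `chunk_representatives`, the chunk size of 64, and the random keys are all assumptions chosen for the example.

```python
import numpy as np

def chunk_representatives(num_keys, chunk_size):
    """Split key positions [0, num_keys) into contiguous chunks.

    Returns (start, end, representative) triples, where the representative
    is the token in the middle of the chunk.
    """
    chunks = []
    for start in range(0, num_keys, chunk_size):
        end = min(start + chunk_size, num_keys)
        chunks.append((start, end, start + (end - start) // 2))
    return chunks

rng = np.random.default_rng(0)
keys = rng.standard_normal((1024, 64))   # 1024 cached keys, head dim 64
query = rng.standard_normal(64)

chunks = chunk_representatives(len(keys), chunk_size=64)
rep_idx = np.array([rep for _, _, rep in chunks])
rep_scores = keys[rep_idx] @ query       # 16 dot products instead of 1024
print(len(chunks), rep_scores.shape)     # 16 (16,)
```

Only one dot product per chunk is needed at this stage, which is where the savings in Step 1 come from.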

Step 2: Approximating the Most Important Tokens (Top-k Estimation) (Fig. 1 middle)

- HiP computes attention scores only for the selected representative tokens instead of every single token.

- It identifies the most important chunks based on these scores.

- It refines its selection step by step, zooming in on the most relevant tokens within each chunk, until it reaches a final set of key tokens for the attention calculation.

- This hierarchical approach (narrowing down in steps) is why it’s called Hierarchically Pruned Attention.
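The hierarchical narrowing can be sketched as follows: start from k chunks, split every surviving chunk in half each round, score each half by its middle token only, and keep the k best halves until single tokens remain. This is a simplified single-query illustration under our own assumptions (halving at every round, 1-D toy keys, the hypothetical name `hierarchical_topk`); HiP’s real kernel works on blocks of tokens and batches of queries on the GPU.

```python
import numpy as np

def hierarchical_topk(query, keys, k):
    """Approximate the k highest-scoring key positions for one query.

    Starts from k equal chunks; each round splits every chunk in half,
    scores each half by its middle key only, and keeps the k best halves,
    until every surviving chunk is a single token.
    """
    t = len(keys)
    edges = np.linspace(0, t, k + 1).astype(int)
    chunks = [(int(s), int(e)) for s, e in zip(edges[:-1], edges[1:]) if e > s]
    while any(e - s > 1 for s, e in chunks):
        candidates = []
        for s, e in chunks:
            if e - s == 1:
                candidates.append((s, e))           # already a single token
            else:
                mid = (s + e) // 2
                candidates += [(s, mid), (mid, e)]  # zoom in: two halves
        reps = np.array([s + (e - s) // 2 for s, e in candidates])
        scores = keys[reps] @ query                 # one score per candidate
        best = np.argsort(-scores, kind="stable")[:k]
        chunks = [candidates[i] for i in sorted(best)]
    return np.array([s for s, _ in chunks])

# Toy keys whose score falls off smoothly around position 150 (attention locality).
positions = np.arange(256)
keys = -np.abs(positions - 150).astype(float)[:, None]   # shape (256, 1)
query = np.array([1.0])

print(hierarchical_topk(query, keys, k=4))   # [148 149 150 151]
```

Six rounds of halving (log2(256/4)) take the 64-token starting chunks down to single tokens, and each round scores only 8 representatives. With keys that lack locality, the same search can miss true top-k tokens, which is why the attention-locality assumption matters.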

Step 3: Efficient Attention Computation

- Once the top-k most important tokens are selected, HiP performs attention calculations only on these key tokens, significantly reducing the computational cost.

- Instead of O(T²), where T is the number of tokens, HiP only requires O(T log T) time to determine which tokens should receive attention.

- The actual sparse attention operation then takes O(T) time (each query attends to a fixed number k of tokens), making it extremely efficient compared to standard attention mechanisms.
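Step 3 in the same single-query NumPy style: once the indices are chosen, attention is an ordinary softmax over just those k keys, so each query costs O(k) instead of O(T). Here a brute-force exact top-64 stands in for HiP’s selection, and the query is deliberately built so its attention is concentrated; both choices are assumptions for illustration.

```python
import numpy as np

def softmax(x):
    e = np.exp(x - x.max())          # subtract the max for numerical stability
    return e / e.sum()

def sparse_attention(query, keys, values, selected):
    """Softmax attention for one query, restricted to the rows in `selected`."""
    scale = 1.0 / np.sqrt(query.shape[-1])
    weights = softmax((keys[selected] @ query) * scale)   # k scores instead of T
    return weights @ values[selected]

def dense_attention(query, keys, values):
    """Reference: standard attention over every key."""
    scale = 1.0 / np.sqrt(query.shape[-1])
    return softmax((keys @ query) * scale) @ values

rng = np.random.default_rng(0)
keys = rng.standard_normal((4096, 64))
values = rng.standard_normal((4096, 64))
query = 4.0 * keys[100]                      # a query whose attention is concentrated

top64 = np.argsort(keys @ query)[-64:]       # brute-force stand-in for HiP's selection
approx = sparse_attention(query, keys, values, top64)
exact = dense_attention(query, keys, values)
print(np.abs(approx - exact).max())          # small when attention is concentrated
```

When the selected set captures almost all of the softmax mass, the sparse result closely matches dense attention while touching only 64 of 4096 keys.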

How Does HiP Optimize GPU Performance?

Computations on modern GPUs are carried out by specialized Matrix Multiplication Units (MMUs) such as Tensor Cores (in NVIDIA GPUs). HiP is designed to take full advantage of these hardware capabilities by:

- Processing attention in “blocks” (instead of individual tokens) to optimize matrix operations and speed up token processing.

- Using a tiled computation strategy to maximize GPU efficiency when computing attention scores.

- Integrating into high-performance LLM serving frameworks like vLLM and SGLang, which are designed for real-time, large-scale model deployment.
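The block-wise idea can be illustrated with a NumPy sketch that computes exact attention one key/value block at a time, keeping a running maximum and running sum per query (the “online softmax” technique used by FlashAttention-style kernels). This shows the general tiling principle under our own simplifications; it is not HiP’s actual kernel, and `blocked_attention` is a hypothetical name.

```python
import numpy as np

def blocked_attention(q, k, v, block_size=64):
    """Exact softmax attention, computed one key/value block at a time.

    q: (Tq, d), k: (Tk, d), v: (Tk, dv). Each block is a small dense
    (Tq x block_size) matrix multiply, the shape matrix units are built for.
    """
    scale = 1.0 / np.sqrt(q.shape[-1])
    row_max = np.full(q.shape[0], -np.inf)        # running max score per query
    row_sum = np.zeros(q.shape[0])                # running softmax denominator
    acc = np.zeros((q.shape[0], v.shape[-1]))     # running weighted sum of values
    for start in range(0, k.shape[0], block_size):
        kb = k[start:start + block_size]
        vb = v[start:start + block_size]
        scores = (q @ kb.T) * scale                       # (Tq, block)
        new_max = np.maximum(row_max, scores.max(axis=1))
        correction = np.exp(row_max - new_max)            # rescale old partial sums
        p = np.exp(scores - new_max[:, None])
        row_sum = row_sum * correction + p.sum(axis=1)
        acc = acc * correction[:, None] + p @ vb
        row_max = new_max
    return acc / row_sum[:, None]

def reference_attention(q, k, v):
    """Standard attention that materializes the full (Tq x Tk) score matrix."""
    s = (q @ k.T) / np.sqrt(q.shape[-1])
    p = np.exp(s - s.max(axis=1, keepdims=True))
    return (p / p.sum(axis=1, keepdims=True)) @ v

rng = np.random.default_rng(0)
q = rng.standard_normal((8, 32))
k = rng.standard_normal((300, 32))   # deliberately not a multiple of block_size
v = rng.standard_normal((300, 16))
out = blocked_attention(q, k, v, block_size=64)
print(out.shape, np.allclose(out, reference_attention(q, k, v)))  # (8, 16) True
```

The full score matrix is never materialized: memory for intermediate scores is bounded by the block size, and every step is a dense block multiply.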

How Does HiP Manage Memory?

- It stores only O(log T) tokens in fast GPU memory, namely the most frequently accessed ones, ensuring quick lookup and retrieval.

- Tokens that aren’t needed immediately are kept in main system memory instead of occupying valuable GPU memory.

- A high cache hit ratio ensures that the most important tokens remain in fast-access memory, improving efficiency.
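To see why the hit ratio matters, here is a toy two-tier cache in plain Python: a small “fast” tier stands in for GPU memory, and a dictionary stands in for host RAM. The class name, capacities, and access pattern are hypothetical, and least-recently-used eviction is an assumption (it is the policy InfiniteHiP uses for offloading), not a claim about HiP’s exact implementation.

```python
from collections import OrderedDict

class TwoTierKVCache:
    """Toy KV offloading: a small fast tier in front of a large slow tier."""

    def __init__(self, fast_capacity):
        self.fast_capacity = fast_capacity
        self.fast = OrderedDict()   # block_id -> data, oldest use first
        self.slow = {}              # every block lives here ("host memory")
        self.hits = 0
        self.misses = 0

    def put(self, block_id, data):
        self.slow[block_id] = data

    def get(self, block_id):
        if block_id in self.fast:
            self.hits += 1
            self.fast.move_to_end(block_id)   # mark as most recently used
            return self.fast[block_id]
        self.misses += 1
        data = self.slow[block_id]            # simulated slow host-to-GPU copy
        self.fast[block_id] = data
        if len(self.fast) > self.fast_capacity:
            self.fast.popitem(last=False)     # evict the least recently used block
        return data

    @property
    def hit_ratio(self):
        total = self.hits + self.misses
        return self.hits / total if total else 0.0

cache = TwoTierKVCache(fast_capacity=8)
for block_id in range(1000):
    cache.put(block_id, f"kv-block-{block_id}")

# Each step touches 4 "hot" blocks plus one block that is never reused.
for step in range(100):
    for block_id in (0, 1, 2, 3, 100 + step):
        cache.get(block_id)

print(cache.hits, cache.misses, cache.hit_ratio)   # 396 104 0.792
```

Even though only 8 of 1000 blocks fit in the fast tier, the hot blocks are never evicted, so almost 80% of lookups avoid the slow path.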

Now that we know about HiP, let’s dive deep into the working and architecture of InfiniteHiP, comparing it with HiP.

How InfiniteHiP improves HiP

Let’s look at a figure to understand the architectural pruning in InfiniteHiP.

- Improving HiP’s Pruning Technique

– Instead of a purely hierarchical pruning approach, InfiniteHiP introduces Modular Sparse Attention.

– It doesn’t just discard less important tokens; it dynamically adjusts the pruning process based on the query.

– This means different parts of the sequence receive different levels of pruning, making the process even more efficient.

- Optimizing Memory Usage

– InfiniteHiP uses an LRU (Least Recently Used) cache eviction policy, meaning it dynamically decides which tokens to offload based on how recently they were accessed.

– Recently accessed tokens stay in GPU memory, while tokens that have gone unused the longest are offloaded first, keeping memory access latency low.

- Out-of-Length (OOL) Generalization: Extending Context Length

– InfiniteHiP adjusts RoPE (Rotary Positional Embeddings) dynamically at inference time, allowing models to process sequences far beyond their training limits.

– This works without any fine-tuning, making InfiniteHiP a training-free solution for extending context length.

– Hence, InfiniteHiP lets any pre-trained Transformer model handle longer contexts without retraining costs.
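The paper’s specific RoPE adjustments are not reproduced here. As a hedged illustration of the property such training-free tricks build on, this NumPy sketch shows that with RoPE the attention logit depends only on the offset between query and key positions, and then applies a hypothetical re-indexing (in the spirit of StreamingLLM’s in-cache positions) that keeps every offset inside an assumed training length. `apply_rope`, `rope_score`, and `TRAINED_MAX` are illustrative names, and the base of 10000 is an assumption.

```python
import numpy as np

def apply_rope(x, pos, base=10000.0):
    """Rotary position embedding for a single vector x at position pos.

    Dimension pairs (x[0], x[1]), (x[2], x[3]), ... are rotated by
    angles pos * theta_i with theta_i = base ** (-2i / d).
    """
    d = x.shape[-1]
    theta = base ** (-np.arange(0, d, 2) / d)
    cos, sin = np.cos(pos * theta), np.sin(pos * theta)
    out = np.empty_like(x)
    out[0::2] = x[0::2] * cos - x[1::2] * sin
    out[1::2] = x[0::2] * sin + x[1::2] * cos
    return out

def rope_score(q, k, q_pos, k_pos):
    """Attention logit between q at q_pos and k at k_pos after RoPE."""
    return apply_rope(q, q_pos) @ apply_rope(k, k_pos)

rng = np.random.default_rng(0)
q, k = rng.standard_normal(64), rng.standard_normal(64)

# 1) The logit depends only on the offset q_pos - k_pos.
far = rope_score(q, k, 3_000_010, 3_000_000)
near = rope_score(q, k, 10, 0)
print(abs(far - near) < 1e-6)   # True

# 2) Hypothetical re-indexing: keys kept from a 3M-token context get compact
#    positions, so every offset the model sees stays inside its trained range.
TRAINED_MAX = 8_192                                       # assumed training length
query_pos = 3_000_000
selected = np.array([12, 50_000, 2_999_990, 2_999_999])   # keys kept by sparse attention
original_offsets = query_pos - selected                   # up to ~3M, never seen in training
new_key_pos = np.arange(len(selected))                    # 0, 1, 2, 3 (order preserved)
new_query_pos = len(selected)                             # query placed right after them
new_offsets = new_query_pos - new_key_pos                 # 4, 3, 2, 1
print(original_offsets.max() > TRAINED_MAX, new_offsets.max() <= TRAINED_MAX)  # True True
```

Compressing positions this way trades exact distance information for offsets the model has seen during training, which is the general kind of trade-off a training-free RoPE adjustment makes.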

Now, let’s see how you can implement HiP in Python. Remember that you’ll need CUDA installed on your device to make it work.

As of now, InfiniteHiP is not licensed for commercial use, so let’s see how we can implement HiP with Ollama models.

First of all, start your Ollama server and use the model of your choice, e.g. Llama 3.2. For a guide on how to do that, refer to this article.

How to run Ollama models on the cloud: https://medium.com/p/3e01fc12512f

Now that our server is running, let’s create an environment and set up HiP. Refer to the official GitHub repository: https://github.com/DeepAuto-AI/hip-attention.

First, create an environment and then run the following commands.

```bash
# Clone the HiP Attention repository
git clone https://github.com/DeepAuto-AI/hip-attention.git
cd hip-attention

# Install dependencies
pip install -e "."
pip install -e ".[sglang]"
pip install torch torchvision torchaudio  # install the build compatible with your CUDA version
```

You might need to restart your kernel after this installation.

```python
import requests
import torch

from hip_attn import hip_attention_12, HiPAttentionArgs12

# Replace this with your Ollama server URL (11434 is Ollama's default port)
OLLAMA_API_URL = "http://localhost:11434/api/chat"
OLLAMA_MODEL = "llama3.2"  # any model you have pulled into Ollama

# Set device
device = "cuda"

# Define model parameters
batch_size = 1
kv_len = 128 * 1024  # Key-Value cache length (128K tokens)
q_len = 32 * 1024  # Query sequence length (32K tokens)
num_heads = 32
num_kv_heads = 8
head_dims = 128
dtype = torch.bfloat16

# Initialize random tensors for Q, K, and V
q = torch.randn((batch_size, q_len, num_heads, head_dims), dtype=dtype, device=device)
k = torch.randn((batch_size, kv_len, num_kv_heads, head_dims), dtype=dtype, device=device)
v = k.clone()

# Run HiP Attention
output, metadata = hip_attention_12(q=q, k=k, v=v, args=HiPAttentionArgs12())
print("HiP Attention Output Shape:", output.shape)


# Function to send a request to the Ollama model
def query_ollama(prompt, model=OLLAMA_MODEL):
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # return a single JSON object instead of a stream
    }
    try:
        response = requests.post(OLLAMA_API_URL, json=payload, timeout=300)
        # Check if the request was successful (status code 200)
        if response.status_code == 200:
            return response.json()["message"]["content"]
        print(f"Failed to get a response. Status code: {response.status_code}")
        return f"Error response: {response.text}"
    except requests.RequestException as e:
        print(f"An error occurred: {e}")
        return None


# Example prompt
response = query_ollama("Explain the role of transformers in LLMs.")
print("Ollama Response:", response)
```

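This runs the HiP attention kernel on random Q, K, and V tensors and then queries your Ollama model. Note that the two parts are independent: Ollama serves the model with its own attention implementation, so HiP is not applied to the Ollama request. For more details, refer to the GitHub link above.

Now, let’s move on to the conclusion.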

InfiniteHiP represents a significant breakthrough in long-context LLM inference, improving computational efficiency, memory usage, and scalability beyond existing frameworks like HiP and FlashAttention-2. By combining Modular Sparse Attention, advanced KV cache offloading, and dynamic RoPE adjustments, InfiniteHiP allows pre-trained LLMs to handle sequences of up to 3 million tokens, all without retraining.

With its highly parallelized and memory-efficient architecture, InfiniteHiP achieves 7.24× faster inference, reduces GPU memory usage to just 3.34% of FA2, and serves as a plug-and-play solution for any Transformer-based model. Its integration with SGLang further makes it well suited for real-world deployments in AI research, document analysis, and conversational AI.

As LLMs continue to evolve, InfiniteHiP paves the way for scalable, efficient, and cost-effective long-context processing, bringing us closer to AI models capable of reasoning over vast amounts of information without constraints.

LinkedIn — https://www.linkedin.com/in/kronikalkodar/

Portfolio — https://mradulv.vercel.app

GitHub — https://github.com/KroNicalKODER