- Why Can’t Our AI Agents Just Get Along?

- What Exactly Is Agent2Agent (A2A)?

- How Does A2A Work Under the Hood?

- A2A vs. MCP: Tools vs. Teammates

- A2A vs. Existing Agent Orchestration Frameworks

- A Hands-On Example: The “Hello World” of Agent2Agent

- Getting Started with A2A in Your Projects

- Conclusion: Towards a More Connected AI Future

- References

Why Can’t Our AI Agents Just Get Along?

Imagine you’ve hired a team of super-smart AI assistants. One is great at data analysis, another writes eloquent reports, and a third handles your calendar. Individually, they’re brilliant. But here’s the rub: they don’t speak the same language. It’s like having coworkers where one speaks only Python, another only JSON, and the third communicates in obscure API calls. Ask them to collaborate on a project, and you get a digital version of the Tower of Babel.

This is exactly the problem Google’s Agent2Agent (A2A) Protocol aims to solve. A2A is a new open standard (announced in April 2025) that gives AI agents a common language, a kind of universal translator, so they can communicate and collaborate seamlessly. It’s backed by an impressively large coalition (50+ tech companies including the likes of Atlassian, Cohere, Salesforce, and more) rallying behind the idea of agents chatting across platforms. In short, A2A matters because it promises to break AI agents out of their silos and let them work together like a well-coordinated team rather than isolated geniuses.

What Exactly Is Agent2Agent (A2A)?

At its core, A2A is a communication protocol for AI agents. Think of it as a standardized common language that any AI agent can use to talk to any other agent, regardless of who built it or what framework it runs on. Today, there’s a “framework jungle” of agent-building tools: LangGraph, CrewAI, Google’s ADK, Microsoft’s AutoGen, you name it. Without A2A, if you tried to make a LangGraph agent directly chat with a CrewAI agent, you’d be in for a world of custom integration pain (picture two software engineers frantically writing glue code so their bots can gossip). Enter A2A: it’s the bridge that lets diverse agents share information, ask each other for help, and coordinate tasks without custom duct-tape code.

In plainer terms, A2A does for AI agents what internet protocols did for computers: it gives them a universal networking language. An agent built in Framework A can send a message or task request to an agent built in Framework B, and thanks to A2A, B will understand it and respond appropriately. They don’t need to know the messy details of each other’s “inner brain” or code; A2A handles the translation and coordination. As Google put it, the A2A protocol lets agents “communicate with each other, securely exchange information, and coordinate actions” across different platforms. Crucially, agents do this as peers, not as mere tools, meaning each agent retains its autonomy and special skills while cooperating.

A2A in Plain English: A Universal Translator for AI Coworkers

Let’s put on our imagination hats. Picture a busy office, but instead of humans, it’s populated by AI agents. We have Alice the Spreadsheet Guru, Bob the Email Whiz, and Carol the Customer Support bot. On a normal day, Alice might need Bob to send a client a summary of some data Carol provided. But Alice speaks Excel-ese, Bob speaks API-jsonish, and Carol speaks in natural language FAQs. Chaos ensues: Alice outputs a CSV that Bob doesn’t know how to read, Bob sends an email that Carol can’t parse, and Carol logs an issue that never gets back to Alice. It’s like a bad comedy of errors.

Now imagine a magical conference room with real-time translation. Alice says “I need the latest sales figures” in Excel-ese; the translator (A2A) relays “Hey Carol, can you get sales figures?” in Carol’s language; Carol fetches the data and speaks back in plain English; the translator ensures Alice hears it in Excel terms. Meanwhile, Bob chimes in automatically, “I’ll draft an email with these figures,” and the translator helps Bob and Carol coordinate on the content. Suddenly, our three AI coworkers are working together smoothly, each contributing what they do best, without misunderstanding.

That translator is A2A. It ensures that when one agent “talks,” the other can “hear” and respond appropriately, even if internally one is built with LangGraph and another with AutoGen (AG2). A2A provides the common language and etiquette for agents: how to introduce themselves, how to ask another for help, how to exchange information, and how to politely say “Got it, here’s the result you wanted.” Just like a universal translator, it handles the heavy lifting of communication so the agents can focus on the task at hand.

And yes, security folks, A2A has you in mind too. The protocol is designed to be secure and enterprise-ready from the get-go: authentication, authorization, and governance are built in, so agents only share what they’re allowed to. Agents can work together without exposing their secret sauce (internal memory or proprietary tools) to each other. It’s collaboration with privacy, kind of like doctors consulting on a case without breaching patient confidentiality.

How Does A2A Work Under the Hood?

Okay, so A2A is like a lingua franca for AI agents, but what does that actually look like technically? Let’s peek (lightly) under the hood. The A2A protocol is built on familiar web technologies: it uses JSON-RPC 2.0 over HTTP(S) as the core communication method. In non-engineer speak, that means agents send each other JSON-formatted messages (containing requests, responses, etc.) via standard web calls. No proprietary binary gobbledygook, just plain JSON over HTTP, which is great, because it’s like speaking in a language every web service already understands. It also supports nifty extras like Server-Sent Events (SSE) for streaming updates and async callbacks for notifications. So if Agent A asks Agent B a question that will take a while (maybe B has to crunch data for 2 minutes), B can stream partial results or status updates to A instead of leaving A hanging in silence. Real teamwork vibes there.
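To make “plain JSON over HTTP” a little more concrete, here is a minimal sketch of what a JSON-RPC 2.0 request envelope could look like, built with only the Python standard library. The jsonrpc, id, method, and params members come from JSON-RPC 2.0 itself; the message/send method name and the role/parts/messageId layout are assumptions based on the A2A samples, and build_send_request is a hypothetical helper, not part of the SDK.

```python
import json
import uuid


def build_send_request(text: str) -> dict:
    """Wrap a plain-text message in a JSON-RPC 2.0 request envelope."""
    return {
        "jsonrpc": "2.0",          # version marker required by JSON-RPC 2.0
        "id": str(uuid.uuid4()),   # lets the caller match a response to this request
        "method": "message/send",  # assumed A2A method name
        "params": {
            "message": {           # assumed message layout (role + typed parts)
                "role": "user",
                "messageId": uuid.uuid4().hex,
                "parts": [{"kind": "text", "text": text}],
            }
        },
    }


request = build_send_request("hello")
print(json.dumps(request, indent=2))
```

The point of the sketch is only that the whole exchange is ordinary JSON: any HTTP client that can POST a body like this can, in principle, talk to an agent.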

When Agent A wants Agent B’s help, A2A defines a clear process for this interaction. Here are the key pieces to know (without drowning in spec details):

- Agent Card (Capability Discovery): Every agent using A2A presents an Agent Card, basically a JSON “business card” describing who it is and what it can do. Think of it like a LinkedIn profile for an AI agent. It has the agent’s name, a description, a version, and importantly a list of skills it offers. For example, an Agent Card might say: “I’m ‘CalendarBot v1.0’. I can schedule meetings and check availability.” This lets other agents discover the right teammate for a task. Before Agent A even asks B for help, A can look at B’s card to see if B has the skills it needs. No more guessing in the dark!

- Agent Skills: These are the individual capabilities an agent has, as listed on its Agent Card. For instance, CalendarBot might have a skill "schedule_meeting" with a description “Schedules a meeting between participants given a date range.” Skills are defined with an ID, a human-friendly name, description, and even example prompts. It’s like listing out services you offer. This makes it clear what requests the agent can handle.

- Tasks and Artifacts (Task Management): When Agent A wants B to do something, it sends a Task request. A task is a structured JSON object (defined by the A2A protocol) that describes the job to be done. For example, “Task: use your schedule_meeting skill with inputs X, Y, Z.” The two agents then engage in a dialogue to get it done: B might respond with questions, intermediate results, or confirmations. Once finished, the outcome of the task is packaged into an Artifact; think of that as the deliverable or result of the task. If it was a scheduling task, the artifact might be a calendar invite or a confirmation message. Importantly, tasks have a lifecycle. Simple tasks might complete in one go, while longer ones stay “open” and allow back-and-forth updates. A2A natively supports long-running tasks: agents can keep each other posted with status updates (“Still working on it… almost done…”) over minutes or hours if needed. No timeouts ruining the party.

- Messages (Agent Collaboration): The actual data exchanged between agents (context, questions, partial results, etc.) is sent as messages. This is essentially the conversation happening to accomplish the task. The protocol lets agents send different types of content within messages, not just plain text. They could share structured data, files, or even media. Each message can have multiple parts, each labeled with a content type. For instance, Agent B could send a message that includes a text summary and an image (two parts: one “text/plain”, one “image/png”). Agent A will know how to handle each part. If Agent A’s interface can’t display images, A2A even allows them to negotiate a fallback (maybe B will send a URL or a text description instead). This is the “user experience negotiation” bit: ensuring the receiving side gets the content in a format it can use. It’s akin to two coworkers figuring out whether to share information via PowerPoint, PDF, or just an email, based on what each can open.

- Secure Collaboration: All this communication is done with security in mind. A2A supports standard authentication (API keys, OAuth, etc., similar to OpenAPI auth schemes) so that an agent doesn’t accept tasks from just anyone. Plus, as mentioned, agents don’t have to reveal their internal workings. Agent B can help Agent A without saying “By the way, I’m powered by GPT-4 and here’s my entire prompt history.” They only exchange the necessary information (the task details and results), keeping proprietary stuff hidden. This preserves each agent’s independence and privacy: they cooperate, but they don’t merge into one big blob.
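The discovery step from the list above can be sketched in a few lines. This is a minimal illustration, assuming an Agent Card is available as a plain dictionary with name, description, version, and skills fields; the CalendarBot card contents, the check_availability skill, and the find_skill helper are all hypothetical and exist only for this example.

```python
from typing import Optional

# Hypothetical Agent Card for the CalendarBot example, written as a plain dict.
calendar_bot_card = {
    "name": "CalendarBot",
    "description": "Schedules meetings and checks availability",
    "version": "1.0",
    "skills": [
        {
            "id": "schedule_meeting",
            "name": "Schedule meeting",
            "description": "Schedules a meeting between participants given a date range.",
            "examples": ["Book 30 minutes with Bob next week"],
        },
        {
            "id": "check_availability",
            "name": "Check availability",
            "description": "Reports free slots for a participant.",
            "examples": ["When is Carol free on Friday?"],
        },
    ],
}


def find_skill(card: dict, skill_id: str) -> Optional[dict]:
    """Return the skill entry with the given ID, or None if the agent lacks it."""
    for skill in card.get("skills", []):
        if skill.get("id") == skill_id:
            return skill
    return None


# Agent A inspects B's card before deciding whether to delegate.
skill = find_skill(calendar_bot_card, "schedule_meeting")
print(skill["description"] if skill else "No matching skill")
```

A client that gets None back simply moves on to another agent’s card, which is the “no more guessing in the dark” part.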

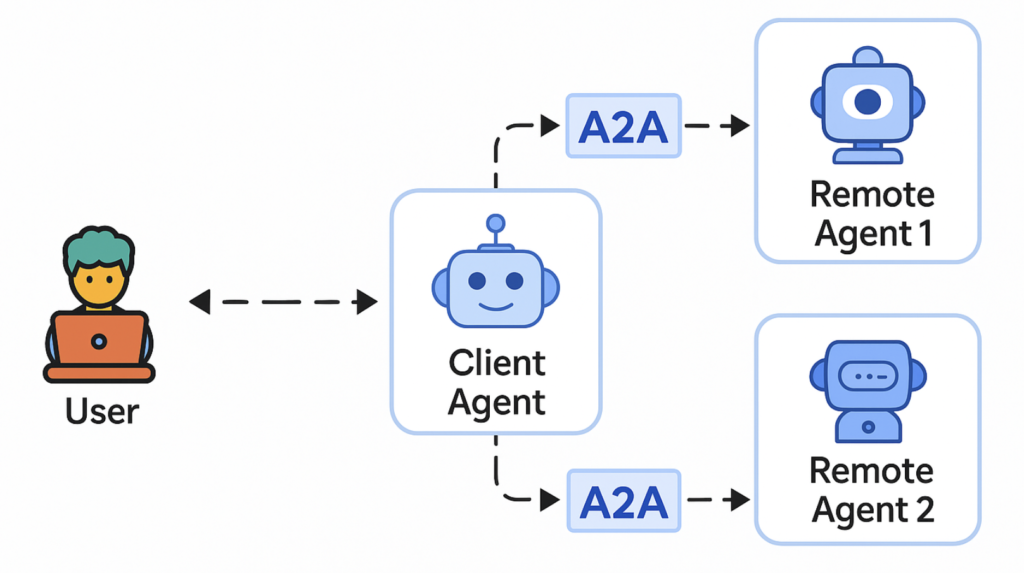

In summary, A2A sets up a client-server model between agents: when Agent A needs something, it acts as a Client Agent and Agent B plays the Remote Agent (server) role. A2A handles how the client finds the right remote agent (via Agent Cards), how it sends the task (JSON-RPC message), how the remote agent streams responses or final results, and how both stay in sync throughout. All of this uses web-friendly standards, so it’s easy to plug into existing apps. If this sounds a bit like how web browsers talk to web servers (requests and responses), that’s no coincidence: A2A essentially applies similar principles to agents talking to agents, which is a logical way to maximize compatibility.
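The “stream status updates, then deliver a result” pattern can be sketched without any networking at all. The snippet below is a toy model, not the protocol’s wire format: run_long_task is a hypothetical function, and the state names (submitted, working, completed) and the artifact layout are assumptions chosen for illustration.

```python
from typing import Iterator


def run_long_task(steps: list[str]) -> Iterator[dict]:
    """Yield status updates while working, then a final update carrying an artifact."""
    yield {"state": "submitted"}
    for step in steps:
        # Each intermediate update keeps the client informed instead of silent.
        yield {"state": "working", "note": step}
    yield {
        "state": "completed",
        "artifact": {"parts": [{"kind": "text", "text": "Meeting confirmed"}]},
    }


# The client consumes updates as they arrive, much like reading an SSE stream.
updates = list(run_long_task(["checking calendars", "sending invites"]))
for update in updates:
    print(update["state"], update.get("note", ""))
```

In a real deployment the generator would sit behind an HTTP streaming response, but the shape of the conversation (a series of status events ending in a deliverable) stays the same.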

A2A vs. MCP: Tools vs. Teammates

You might have also heard of Anthropic’s Model Context Protocol (MCP), another recent open standard in the AI space. (If you haven’t already, check out my other post on breaking down MCP and how you can build your own custom MCP server from scratch.) How does A2A relate to MCP? Are they competitors or friends? The short answer: they’re complementary, like two pieces of a puzzle. The long answer needs a quick analogy (of course!).

Think of an AI agent as a person trying to get a job done. This person has tools (like a calculator, web browser, database access) and may have colleagues (other agents) to collaborate with. MCP (Model Context Protocol) is essentially about hooking up the tools. It standardizes how an AI agent accesses external tools, APIs, and data sources in a secure, structured way. For example, via MCP, an agent can use a “Calculator API” or “Database lookup API” as a plugin, with a common interface. “Think of MCP like a USB-C port for AI, plug-and-play for tools,” as one description goes. It gives agents a uniform way to say “I need X tool” and get a response, regardless of who made the tool.

A2A, on the other hand, is about connecting with the teammates. It lets one autonomous agent talk to another as an equal partner. Instead of treating the other agent like a dumb tool, A2A treats it like a knowledgeable colleague. Continuing our analogy, A2A is the protocol you’d use when the person decides, “Actually, I need Bob’s help on this task,” and turns to ask Bob (another agent) for input. Bob might then use his own tools (maybe via MCP) to assist, and reply back via A2A.

In essence, MCP is how agents invoke tools, A2A is how agents invoke each other. Tools vs. Teammates. One is like calling a function or using an app; the other is like having a conversation with a coworker. Both approaches often work hand in hand: an agent might use MCP to fetch some data and then use A2A to ask another agent to analyze that data, all within the same complex workflow. In fact, Google explicitly designed A2A to complement MCP’s functionality, not replace it.

A2A vs. Existing Agent Orchestration Frameworks

If you’ve played with multi-agent systems already, you might be thinking: “There are already frameworks like LangGraph, AutoGen, or CrewAI that coordinate multiple agents, so how is A2A different?” Great question. The difference boils down to protocol vs. implementation.

Frameworks like LangGraph, AutoGen, and CrewAI are what we’d call agent orchestration frameworks. They provide higher-level structures or engines to design how agents work together. For instance, AutoGen (from Microsoft) lets you script conversations between agents (e.g., a “Manager” agent and a “Worker” agent) within a controlled environment. LangGraph (part of LangChain’s ecosystem) lets you build agents as nodes in a graph with defined flows, and CrewAI gives you a way to manage a team of role-playing AI agents solving a task. These are super useful, but they tend to be self-contained ecosystems: all the agents in that workflow are typically using the same framework or are tightly integrated through that framework’s logic.

A2A is not another workflow engine or framework. It doesn’t prescribe how you design the logic of agent interactions or which agents you use. Instead, A2A focuses only on the communication layer: it’s a protocol that any agent (regardless of internal architecture) can use to talk to any other agent. In a way, you can think of orchestration frameworks as different offices with their own internal processes, and A2A as the global phone/email system that connects all the offices. If you keep all your agents inside one framework, you might not feel the need for A2A immediately; it’s like everyone in Office A already shares a language. But what if you want an agent from Office A to delegate a subtask to an agent from Office B? A2A steps in to make that possible without forcing both agents to migrate to the same framework. It standardizes the “API” between agents across different ecosystems.

The takeaway: A2A isn’t here to replace these frameworks; it’s here to connect them. You might still use LangGraph or CrewAI to handle the internal decision-making and prompt management of each agent, but use A2A as the messaging layer when agents need to reach out to others beyond their little silo. It’s like having a universal email protocol even if each person uses a different email client. Everyone can still communicate, regardless of the client.

A Hands-On Example: The “Hello World” of Agent2Agent

No tech discussion would be complete without a “Hello, World!” example, right? Fortunately, the A2A SDK provides a delightfully simple Hello World agent to illustrate how this works. Let’s walk through a pared-down version of it to see A2A in action.

First, we need to define what our agent can do. In code, we define an Agent Skill and an Agent Card for our Hello World agent. The skill is the capability (in this case, basically just greeting the world), and the card is the agent’s public profile that advertises that skill. Here’s roughly what that looks like in Python:

```python
from a2a.types import AgentCard, AgentSkill, AgentCapabilities

# Define the agent's skill
skill = AgentSkill(
    id="hello_world",
    name="Returns hello world",
    description="Just returns hello world",
    tags=["hello world"],
    examples=["hi", "hello world"],
)

# Define the agent's "business card"
agent_card = AgentCard(
    name="Hello World Agent",
    description="Just a hello world agent",
    url="http://localhost:9999/",  # where this agent will be reachable
    version="1.0.0",
    defaultInputModes=["text"],  # it expects text input
    defaultOutputModes=["text"],  # it returns text output
    capabilities=AgentCapabilities(streaming=True),  # supports streaming responses
    skills=[skill],  # list the skills it offers (just one here)
)
```

(Yes, even our Hello World agent has a resume!) In the code above, we created a skill with ID "hello_world" and a human-friendly name/description. We then made an AgentCard that says: “Hi, I’m Hello World Agent. You can reach me at localhost:9999 and I know how to do one thing: hello_world.” This is basically the agent introducing itself and its abilities to the world. We also indicated that this agent communicates via plain text (no fancy images or JSON outputs here) and that it supports streaming (not that our simple skill needs it, but hey, it’s enabled).

Next, we need to give our agent some brains to actually handle the task. In a real scenario, this might involve connecting to an LLM or other logic. For Hello World, we can implement the handler in the most trivial way: whenever the agent receives a hello_world task, it will respond with, you guessed it, “Hello, world!” 😀. The A2A SDK uses an Agent Executor class where you plug in the logic for each skill. I won’t bore you with those details (it’s essentially one function that returns the string "Hello World" when invoked).
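For readers who do want a feel for the shape of that executor, here is a rough, SDK-free sketch. It is deliberately not the SDK’s API: SketchExecutor is a hypothetical stand-in class, and a plain asyncio.Queue plays the role of whatever event queue the real SDK supplies. The only thing it shares with the real example is the idea: run the agent logic, then enqueue the reply as a message.

```python
import asyncio


class HelloWorldAgent:
    """The 'brains' of the agent: trivially returns a fixed greeting."""

    async def invoke(self) -> str:
        return "Hello World"


class SketchExecutor:
    """Hypothetical stand-in executor: runs the agent and enqueues its reply."""

    def __init__(self) -> None:
        self.agent = HelloWorldAgent()

    async def execute(self, event_queue: asyncio.Queue) -> None:
        result = await self.agent.invoke()
        # Wrap the text in a message-shaped dict (assumed layout: role + typed parts).
        await event_queue.put(
            {"role": "agent", "parts": [{"kind": "text", "text": result}]}
        )


async def main() -> dict:
    queue: asyncio.Queue = asyncio.Queue()
    await SketchExecutor().execute(queue)
    return await queue.get()


reply = asyncio.run(main())
print(reply["parts"][0]["text"])
```

Swapping the body of invoke for an LLM call or a database query is where a real agent would start to differ from this toy.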

Finally, we spin up the agent as an A2A server. The SDK provides an A2AStarletteApplication (built on the Starlette web framework) to make our agent accessible via HTTP. We tie our AgentCard and Agent Executor into this app, then run it with Uvicorn (an async web server). In code, it’s something like:

```python
from a2a.server.apps import A2AStarletteApplication
from a2a.server.request_handlers import DefaultRequestHandler
from a2a.server.tasks import InMemoryTaskStore
import uvicorn

# HelloWorldAgentExecutor is the executor class described above;
# agent_card is the AgentCard defined earlier.
request_handler = DefaultRequestHandler(
    agent_executor=HelloWorldAgentExecutor(),
    task_store=InMemoryTaskStore(),
)

server = A2AStarletteApplication(
    agent_card=agent_card,
    http_handler=request_handler,
)

uvicorn.run(server.build(), host="0.0.0.0", port=9999)
```

When you run this, you now have a live A2A agent running at http://localhost:9999. It will serve its Agent Card at an endpoint (so any other agent can fetch http://localhost:9999/.well-known/agent.json to see who it is and what it can do), and it will listen for task requests as JSON-RPC calls (the SDK wires up methods like message/send under the hood).
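If you want to poke at that discovery endpoint yourself, a few lines of standard-library Python are enough. This is a minimal sketch: the well-known path is the one quoted above, while agent_card_url and fetch_agent_card are hypothetical helper names, and the fetch only succeeds while an agent is actually running at the given address.

```python
import json
from urllib.parse import urljoin
from urllib.request import urlopen

WELL_KNOWN_PATH = ".well-known/agent.json"  # path taken from the URL quoted above


def agent_card_url(base_url: str) -> str:
    """Build the Agent Card URL for an agent's base URL."""
    # A trailing slash makes urljoin append instead of replacing the last segment.
    if not base_url.endswith("/"):
        base_url += "/"
    return urljoin(base_url, WELL_KNOWN_PATH)


def fetch_agent_card(base_url: str, timeout: float = 5.0) -> dict:
    """Download and parse the Agent Card (requires the agent to be running)."""
    with urlopen(agent_card_url(base_url), timeout=timeout) as response:
        return json.load(response)


print(agent_card_url("http://localhost:9999"))
```

With the Hello World server up, calling fetch_agent_card("http://localhost:9999") should return the card as a dictionary.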

You can check out the full implementation in the official A2A Python SDK on GitHub.

To test it out, we could fire up a client (the SDK even provides a simple A2AClient class):

Step 1: Install the A2A SDK using either uv or pip

Before you get started, make sure you have the following:

- Python 3.10 or higher

- uv (optional but recommended for faster installs and clean dependency management), or just stick with pip if you’re more comfortable with that

- An activated virtual environment

Option 1: Using uv (recommended)

If you’re working inside a uv project or virtual environment, this is the cleanest way to install dependencies:

```shell
uv add a2a-sdk
```

Option 2: Using pip

Prefer good ol’ pip? No problem, just run:

```shell
pip install a2a-sdk
```

Either way, this installs the official A2A SDK so you can start building and running agents right away.

Step 2: Run the Remote Agent

First, clone the repo and start up the Hello World agent:

```shell
git clone https://github.com/google-a2a/a2a-samples.git
cd a2a-samples/samples/python/agents/helloworld
uv run .
```

This spins up a basic A2A-compatible agent ready to greet the world.

Step 3: Run the Client (from another terminal)

Now, in a separate terminal, run the test client to send a message to your shiny new agent:

```shell
cd a2a-samples/samples/python/agents/helloworld
uv run test_client.py
```

Example output:

```
INFO:__main__:Attempting to fetch public agent card from: http://localhost:9999/.well-known/agent.json
INFO:httpx:HTTP Request: GET http://localhost:9999/.well-known/agent.json "HTTP/1.1 200 OK"
INFO:a2a.client.client:Successfully fetched agent card data from http://localhost:9999/.well-known/agent.json: {'capabilities': {'streaming': True}, 'defaultInputModes': ['text'], 'defaultOutputModes': ['text'], 'description': 'Just a hello world agent', 'name': 'Hello World Agent', 'skills': [{'description': 'just returns hello world', 'examples': ['hi', 'hello world'], 'id': 'hello_world', 'name': 'Returns hello world', 'tags': ['hello world']}], 'supportsAuthenticatedExtendedCard': True, 'url': 'http://localhost:9999/', 'version': '1.0.0'}
INFO:__main__:Successfully fetched public agent card:
INFO:__main__:{
  "capabilities": {
    "streaming": true
  },
  "defaultInputModes": [
    "text"
  ],
  "defaultOutputModes": [
    "text"
  ],
  "description": "Just a hello world agent",
  "name": "Hello World Agent",
  "skills": [
    {
      "description": "just returns hello world",
      "examples": [
        "hi",
        "hello world"
      ],
      "id": "hello_world",
      "name": "Returns hello world",
      "tags": [
        "hello world"
      ]
    }
  ],
  "supportsAuthenticatedExtendedCard": true,
  "url": "http://localhost:9999/",
  "version": "1.0.0"
}
INFO:__main__:
Using PUBLIC agent card for client initialization (default).
INFO:__main__:
Public card supports authenticated extended card. Attempting to fetch from: http://localhost:9999/agent/authenticatedExtendedCard
INFO:httpx:HTTP Request: GET http://localhost:9999/agent/authenticatedExtendedCard "HTTP/1.1 200 OK"
INFO:a2a.client.client:Successfully fetched agent card data from http://localhost:9999/agent/authenticatedExtendedCard: {'capabilities': {'streaming': True}, 'defaultInputModes': ['text'], 'defaultOutputModes': ['text'], 'description': 'The full-featured hello world agent for authenticated users.', 'name': 'Hello World Agent - Extended Edition', 'skills': [{'description': 'just returns hello world', 'examples': ['hi', 'hello world'], 'id': 'hello_world', 'name': 'Returns hello world', 'tags': ['hello world']}, {'description': 'A more enthusiastic greeting, only for authenticated users.', 'examples': ['super hi', 'give me a super hello'], 'id': 'super_hello_world', 'name': 'Returns a SUPER Hello World', 'tags': ['hello world', 'super', 'extended']}], 'supportsAuthenticatedExtendedCard': True, 'url': 'http://localhost:9999/', 'version': '1.0.1'}
INFO:__main__:Successfully fetched authenticated extended agent card:
INFO:__main__:{
  "capabilities": {
    "streaming": true
  },
  "defaultInputModes": [
    "text"
  ],
  "defaultOutputModes": [
    "text"
  ],
  "description": "The full-featured hello world agent for authenticated users.",
  "name": "Hello World Agent - Extended Edition",
  "skills": [
    {
      "description": "just returns hello world",
      "examples": [
        "hi",
        "hello world"
      ],
      "id": "hello_world",
      "name": "Returns hello world",
      "tags": [
        "hello world"
      ]
    },
    {
      "description": "A more enthusiastic greeting, only for authenticated users.",
      "examples": [
        "super hi",
        "give me a super hello"
      ],
      "id": "super_hello_world",
      "name": "Returns a SUPER Hello World",
      "tags": [
        "hello world",
        "super",
        "extended"
      ]
    }
  ],
  "supportsAuthenticatedExtendedCard": true,
  "url": "http://localhost:9999/",
  "version": "1.0.1"
}
INFO:__main__:
Using AUTHENTICATED EXTENDED agent card for client initialization.
INFO:__main__:A2AClient initialized.
INFO:httpx:HTTP Request: POST http://localhost:9999/ "HTTP/1.1 200 OK"
{'id': '66f96689-9442-4ead-abd1-69937fb682dc', 'jsonrpc': '2.0', 'result': {'kind': 'message', 'messageId': 'b2f37a5c-d535-4fbf-a43e-da1b64e04b22', 'parts': [{'kind': 'text', 'text': 'Hello World'}], 'role': 'agent'}}
INFO:httpx:HTTP Request: POST http://localhost:9999/ "HTTP/1.1 200 OK"
{'id': 'edaf70e3-909f-4d6d-9e82-849afae38756', 'jsonrpc': '2.0', 'result': {'kind': 'message', 'messageId': 'ee44ce5e-0cff-4247-9cfd-4778e764b75c', 'parts': [{'kind': 'text', 'text': 'Hello World'}], 'role': 'agent'}}
```

When you run the client script, you’ll see a flurry of logs that walk you through the A2A handshake in action. The client first discovers the agent by fetching its public Agent Card from http://localhost:9999/.well-known/agent.json. This tells the client what the agent can do (in this case, respond to a friendly “hello”). But then something cooler happens: the agent also supports an authenticated extended card, so the client grabs that too from a special endpoint. Now it knows about both the basic hello_world skill and the extra super_hello_world skill available to authenticated users. The client initializes itself using this richer version of the agent card, and sends a task asking the agent to say hello. The agent responds (twice in this run) with a structured A2A message containing "Hello World", wrapped nicely in JSON. This roundtrip may seem simple, but it’s actually demonstrating the entire A2A lifecycle: agent discovery, capability negotiation, message passing, and structured response. It’s like two agents met, introduced themselves formally, agreed on what they could help with, and exchanged notes, all without you needing to write custom glue code.
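Pulling the greeting out of that structured reply takes only a small helper. The sketch below assumes the response follows the JSON-RPC 2.0 convention of carrying either a result or an error member, and that the result is a message whose text lives in typed parts, as in the run above; extract_text is a hypothetical helper name, not something the SDK provides.

```python
def extract_text(response: dict) -> str:
    """Join the text of every text part in a JSON-RPC result message."""
    if "error" in response:
        # JSON-RPC 2.0 responses carry either "result" or "error", never both.
        raise RuntimeError(f"agent returned an error: {response['error']}")
    parts = response["result"].get("parts", [])
    return "".join(p["text"] for p in parts if p.get("kind") == "text")


# Mirrors the first response in the run above, with standard JSON-RPC key names.
sample_response = {
    "id": "66f96689-9442-4ead-abd1-69937fb682dc",
    "jsonrpc": "2.0",
    "result": {
        "kind": "message",
        "messageId": "b2f37a5c-d535-4fbf-a43e-da1b64e04b22",
        "parts": [{"kind": "text", "text": "Hello World"}],
        "role": "agent",
    },
}

print(extract_text(sample_response))
```

Filtering on the part kind matters once agents start returning mixed content: non-text parts are skipped here rather than crashing the client.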

This simple demo might not solve real problems, but it proves a crucial point: with just a bit of setup, you can turn a piece of AI logic into an A2A-compatible agent that any other A2A agent can discover and use. Today it’s a hello world toy; tomorrow it could be a complex data-mining agent or an ML model specialist. The process would be analogous: define what it can do (skills), stand it up as a server with an AgentCard, and boom, it’s plugged into the agent network.

Getting Started with A2A in Your Projects

Excited to make your AI agents actually talk to each other? Here are some practical pointers to get started:

- Install the A2A SDK: Google has open-sourced an SDK (currently for Python, with others likely to follow). It’s as easy as a pip install: pip install a2a-sdk. This gives you the tools to define agents, run agent servers, and interact with them.

- Define Your Agents’ Skills and Cards: Think about what each agent in your system should be able to do. Define an AgentSkill for each distinct capability (with a name, description, etc.), and create an AgentCard that lists these skills and relevant info about the agent (endpoint URL, supported data formats, etc.). The SDK’s documentation and examples (like the Hello World above) are great references for the syntax.

- Implement the Agent Logic: This is where you connect the dots between the A2A protocol and your AI model or code. If your agent is essentially an LLM prompt, implement the executor to call your model with the prompt and return the result. If it’s doing something like a web search, write that code here. The A2A framework doesn’t limit what the agent can do internally; it just defines how you expose it. For instance, you might use OpenAI’s API or a local model inside your executor, and that’s perfectly fine.

- Run the A2A Agent Server: Using the SDK’s server utilities (as shown above with Starlette), run your agent so it starts listening for requests. Each agent will typically run on its own port or endpoint. Make sure it’s reachable (if you’re inside a corporate network or cloud, you might deploy these as microservices).

- Connect Agents Together: Now the fun part: have them talk! You can either write a client or use an existing orchestrator to send tasks between agents. The A2A repo comes with sample clients and even a multi-agent demo UI that can coordinate messages between three agents (as a proof of concept). In practice, an agent can use the A2A SDK’s A2AClient to programmatically call another agent by its URL, or you can set up a simple relay (even cURL or Postman would do to hit the REST endpoint with a JSON payload). A2A handles the routing of the message to the right function on the remote agent and gives you back the response. It’s like calling a REST API, but the “service” on the other end is an intelligent agent rather than a fixed-function server.

- Explore Samples and Community Integrations: A2A is new, but it’s gaining traction fast. The official repository provides sample integrations for popular agent frameworks, for example, how to wrap a LangChain/LangGraph agent with A2A, or how to expose a CrewAI agent via A2A. This means you don’t have to reinvent the wheel if you’re already using these tools; you can add an A2A interface to your existing agent with a bit of glue code. Also keep an eye on community projects: given that over 50 organizations are involved, we can expect many frameworks to offer native A2A support moving forward.

- Join the Conversation: Since A2A is open-source and community-driven, you can get involved. There’s a GitHub discussions forum for A2A, and Google welcomes contributions and feedback. If you encounter issues or have ideas (maybe a feature for negotiating, say, image captions for visually impaired agents?), you can pitch in. The protocol spec is in draft and evolving, so who knows, your suggestion might become part of the standard!

Conclusion: Towards a More Connected AI Future

Google’s Agent2Agent protocol is an ambitious and exciting step toward a future where AI agents don’t live in isolation, but instead form an interoperable ecosystem. It’s like teaching a bunch of hyper-specialized robots how to hold a conversation: once they can talk, they can team up to tackle problems none of them could solve alone. Early examples (like a hiring workflow where different agents handle candidate sourcing, interviewing, and background checks) show how A2A can streamline complex processes by letting each agent focus on its specialty and hand off tasks seamlessly. And this is just the beginning.

The fact that so many industry players are backing A2A suggests it might become the de facto standard for multi-agent communication, the “HTTP for AI agents,” if you will. We’re not quite there yet (the protocol was just announced in 2025, and a production-ready version is still in the works), but the momentum is strong. With companies from software giants to startups and consulting firms on board, A2A has a real shot at unifying how agents interoperate across platforms. This could spur a wave of innovation: imagine being able to mix and match the best AI services from different vendors as easily as installing apps on your phone, because they all speak A2A.

A2A represents a significant move toward modular, collaborative AI. As developers and researchers, it means we can start designing systems of AIs like we design microservices: each doing one thing well, and a simple standard connecting them. And as users, it means our future AI assistants might coordinate behind the scenes on our behalf (booking trips, managing our smart homes, running our businesses), all by chatting amicably via A2A.

References

[1] Google, Announcing the Agent2Agent Protocol (A2A) (2025), https://developers.googleblog.com/en/a2a-a-new-era-of-agent-interoperability/

[2] A2A GitHub Repository, A2A Samples and SDK (2025), https://github.com/google-a2a/a2a-samples

[3] A2A Draft Specification, Agent-to-Agent Communication Protocol Spec (2025), https://github.com/google-a2a/A2A/blob/main/docs/specification.md

[4] Anthropic, Model Context Protocol: Introduction (2024), https://modelcontextprotocol.io