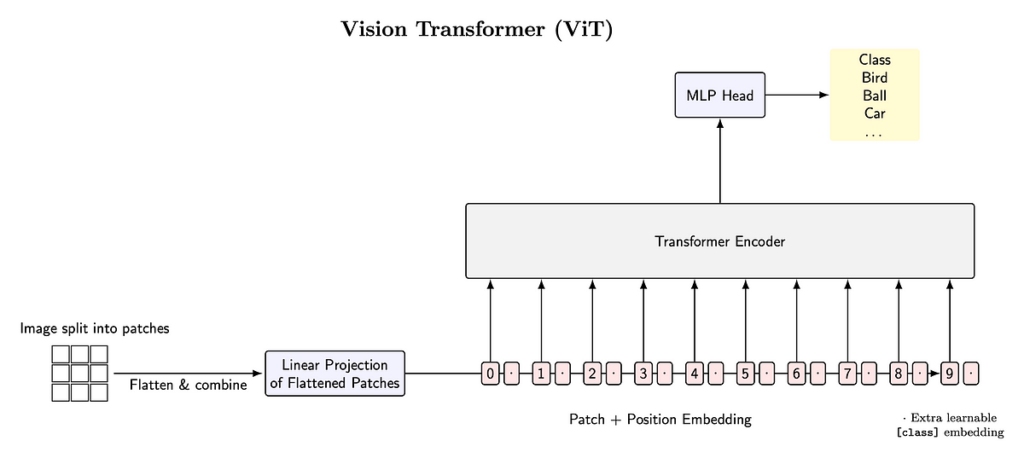

Images are all around us, but how does a computer actually see what's inside a picture? A research team at Google Research, Brain Team, asked this question in a way that was both simple and groundbreaking. The result was a 2021 ICLR paper with the intriguing title "An Image is Worth 16×16 Words: Transformers for Image Recognition at Scale." The study quickly caught the world's attention, amassing more than fifty thousand citations in only a few years. At first glance, such a number can seem baffling. On closer inspection, though, it becomes clear that this paper struck a nerve at the intersection of computer vision, machine learning, and natural language processing, transforming how researchers and practitioners worldwide approach visual recognition tasks.

To understand this breakthrough, it helps to understand the rise of transformers. Originally invented for text-based tasks, transformers use "self-attention" to determine which parts of a sequence (usually words) should most strongly influence each other when capturing meaning. Because of their scalability and their success in language applications, transformers gradually became the backbone of many natural language processing breakthroughs, powering machine translation systems and text generators. However, in computer vision, traditional…
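As a rough illustration of the self-attention idea described above, here is a minimal sketch of scaled dot-product self-attention in plain Python. It rests on a simplifying assumption: the queries, keys, and values are the token vectors themselves, whereas real transformers learn separate projection matrices and run several attention heads in parallel. The three "word" vectors are made-up numbers, not real embeddings, and the function names are illustrative.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def self_attention(tokens):
    """Scaled dot-product self-attention with identity projections.

    Simplifying assumption: queries, keys, and values are the token
    vectors themselves (real transformers use learned W_Q, W_K, W_V).
    Returns (outputs, weights), where weights[i][j] is how strongly
    token i attends to token j.
    """
    d = len(tokens[0])
    scale = math.sqrt(d)
    weights, outputs = [], []
    for q in tokens:
        # Similarity of this query to every key, scaled by sqrt(d).
        scores = [dot(q, k) / scale for k in tokens]
        w = softmax(scores)
        weights.append(w)
        # Output is the attention-weighted average of the value vectors.
        out = [sum(w[j] * tokens[j][i] for j in range(len(tokens)))
               for i in range(d)]
        outputs.append(out)
    return outputs, weights


# Three toy 4-dimensional "word" vectors (hypothetical numbers).
tokens = [
    [1.0, 0.0, 1.0, 0.0],
    [0.0, 1.0, 0.0, 1.0],
    [1.0, 0.0, 0.9, 0.1],
]
outputs, weights = self_attention(tokens)
for row in weights:
    print([round(x, 3) for x in row])
```

Each row of `weights` sums to 1. Because the first and third toy vectors point in similar directions, the first token places more weight on the third token than on the second, which is the "which parts should influence each other" behaviour in miniature.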