Imagine you own an online store with thousands of customers.

You want to send personalized offers, but here’s the problem:

You have no idea which customers have similar shopping habits.

If you send the same email to everyone, some will love it, some will ignore it, and some might even unsubscribe. That’s wasted effort and money.

This is where clustering comes in: a powerful machine learning technique that groups similar customers together without you telling the algorithm what the groups should be.

By the end of this post, you’ll know:

- What clustering is (in plain English)

- How K-Means clustering works

- How to choose the right number of clusters

- How businesses actually use it

- A quick, easy-to-follow example

You want to personalize offers for customers, but you don’t know who is similar to whom.

- Collect customer data: age, gender, location, purchase history, visit frequency, etc.

- Apply a clustering algorithm to group customers with similar behavior.

- Send targeted promotions to each group.

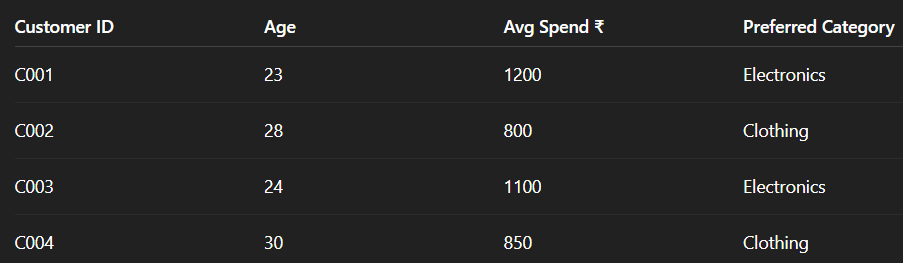

Before Clustering:

We just have a list of customers.

After Clustering:

- Group 1 → Mostly electronics buyers (C001, C003)

- Group 2 → Mostly clothing buyers (C002, C004)

📢 Now you can send electronics offers to Group 1 and fashion offers to Group 2.

🌍 Real-World Applications

Clustering is an unsupervised learning technique.

That means:

- The data doesn’t have labels (like “high spender” or “budget buyer”)

- The algorithm finds natural groups based on similarity.

To decide which points belong together, we measure the distance between them.

💡 Rule of Thumb:

- Small distance → points are similar

- Large distance → points are different
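As a minimal sketch of the rule of thumb above, assuming Euclidean (straight-line) distance, which is the distance K-Means relies on. The three customers and their values are hypothetical, made up for illustration.

```python
import numpy as np

# Hypothetical customers described by (age, average spend).
customer_a = np.array([23.0, 1200.0])
customer_b = np.array([24.0, 1100.0])
customer_c = np.array([30.0, 850.0])


def euclidean(p, q):
    """Straight-line distance between two feature vectors."""
    return float(np.sqrt(np.sum((p - q) ** 2)))


d_ab = euclidean(customer_a, customer_b)
d_ac = euclidean(customer_a, customer_c)

# The smaller distance marks the more similar pair.
print(f"A-B: {d_ab:.1f}  A-C: {d_ac:.1f}")
```

Notice that the spend column, with its much larger numbers, accounts for nearly all of the distance here. Age barely matters until the features are put on a common scale.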

If one feature (like income) has large values and another (like purchase frequency) has small ones, the larger numbers dominate the clustering.

✅ Solution: Use Z-score Standardization

- Mean = 0

- Standard deviation = 1

- All features contribute equally
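A small sketch of what Z-score standardization does to each feature: subtract the feature's mean, then divide by its standard deviation. The income and visit numbers are hypothetical, and `z_score` is an illustrative helper, not a library function.

```python
import numpy as np

# Hypothetical features on very different scales.
income = np.array([30000.0, 52000.0, 75000.0, 120000.0])
visits_per_month = np.array([2.0, 8.0, 5.0, 12.0])


def z_score(values):
    """Rescale a feature to mean 0 and standard deviation 1."""
    return (values - values.mean()) / values.std()


income_z = z_score(income)
visits_z = z_score(visits_per_month)

# Both features now live on the same scale.
print(income_z.round(2))
print(visits_z.round(2))
```

This uses the population standard deviation (NumPy's default), which is also what scikit-learn's `StandardScaler` uses.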

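K-Means is a partitioning algorithm that divides data into K groups based on similarity.

Steps:

- Choose the number of clusters, K

- Place K initial centroids (cluster centers)

- Assign each point to its nearest centroid

- Move each centroid to the mean of the points assigned to it

- Repeat the assign and move steps until the assignments stop changing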

Two common ways to choose K:

Elbow method:

- Plot WCSS (Within-Cluster Sum of Squares) vs. K

- Pick the “elbow” point where the drop slows

Silhouette score:

- Measures how well points fit their clusters

- Range: -1 to 1 → higher is better
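Both checks for choosing K can be computed in one loop. The sketch below assumes scikit-learn and uses synthetic data from `make_blobs` as a stand-in for scaled customer features. The three cluster centers are made up so that the "right" answer is known in advance.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs
from sklearn.metrics import silhouette_score

# Synthetic stand-in for scaled customer data: three well-separated groups.
X, _ = make_blobs(
    n_samples=150,
    centers=[[-5, -5], [0, 5], [5, -5]],
    cluster_std=0.6,
    random_state=42,
)

wcss = {}
silhouette = {}
for k in range(2, 7):
    model = KMeans(n_clusters=k, n_init=10, random_state=42).fit(X)
    wcss[k] = model.inertia_  # WCSS for this K (the elbow plot's y-axis)
    silhouette[k] = silhouette_score(X, model.labels_)

for k in wcss:
    print(f"K={k}  WCSS={wcss[k]:.1f}  silhouette={silhouette[k]:.3f}")

# Pick the K with the highest silhouette score.
best_k = max(silhouette, key=silhouette.get)
```

Plotting `wcss` against K gives the elbow curve. On real customer data the elbow is often less sharp than on clean synthetic blobs, so it helps to read both numbers together.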

Once clusters are made, analyze them:

- Cluster 1: High income, mixed spending → Upsell premium products

- Cluster 2: Low income, low spending → Offer budget deals

- Cluster 3: Young age group → Push trendy products

📢 This is where clustering creates business value.
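One simple way to analyze clusters is to average each feature per cluster. The sketch below assumes pandas and a hypothetical table of customers that already carries a `Cluster` column. The column names and values are invented for illustration.

```python
import pandas as pd

# Hypothetical customers that have already been assigned to clusters.
customers = pd.DataFrame({
    "Income": [95000, 88000, 32000, 28000, 45000, 41000],
    "Avg_Spend": [1500, 400, 200, 250, 900, 950],
    "Age": [45, 52, 38, 41, 22, 24],
    "Cluster": [1, 1, 2, 2, 3, 3],
})

# One row per cluster: its size and the average of each feature.
profile = customers.groupby("Cluster").agg(
    n_customers=("Age", "size"),
    avg_income=("Income", "mean"),
    avg_spend=("Avg_Spend", "mean"),
    avg_age=("Age", "mean"),
)
print(profile.round(1))
```

Reading the profile row by row is what turns a cluster number into a description such as "low income, low spending" that marketing can act on.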

When you have many features (20+), visualizing is hard.

t-SNE:

- Reduces dimensions to 2D/3D

- Keeps similar points close together

- Used for visualization, not modeling
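A short sketch of t-SNE with scikit-learn's `TSNE`, run on synthetic 20-feature data that stands in for a wide customer table. The `perplexity` value is an assumption that usually needs tuning and must be smaller than the number of samples.

```python
from sklearn.datasets import make_blobs
from sklearn.manifold import TSNE
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in for a customer table with 20 features.
X, _ = make_blobs(n_samples=120, n_features=20, centers=3, random_state=0)
X_scaled = StandardScaler().fit_transform(X)

# Project the 20 dimensions down to 2 for plotting.
tsne = TSNE(n_components=2, perplexity=30, random_state=0)
embedding = tsne.fit_transform(X_scaled)

print(embedding.shape)
```

The two output columns can go straight into a scatter plot colored by cluster label. Since t-SNE is for visualization only, run the clustering on the original scaled features and not on the embedding.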

🖥 Mini Python Example

    import pandas as pd
    from sklearn.cluster import KMeans
    from sklearn.preprocessing import StandardScaler

    # Example data
    data = pd.DataFrame({
        'Age': [23, 28, 24, 30],
        'Avg_Spend': [1200, 800, 1100, 850]
    })

    # Scale data so both features contribute equally
    scaler = StandardScaler()
    scaled_data = scaler.fit_transform(data)

    # Apply K-Means (n_init set explicitly for consistent behavior across versions)
    kmeans = KMeans(n_clusters=2, n_init=10, random_state=42)
    data['Cluster'] = kmeans.fit_predict(scaled_data)

    print(data)

Clustering isn’t just an ML concept; it’s a business growth tool.

Whether you’re segmenting customers, detecting fraud, or optimizing supply chains, clustering helps turn raw data into actionable insights.