KVCache is a technique that accelerates Transformers by caching the results of Attention calculations.

In language models that use Transformers, the output token from the current inference is appended to the input tokens, and the result is used as the input for the next inference. Therefore, in the (N+1)th inference, the first N tokens are exactly the same as in the previous inference, with only one new token added.
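The loop below is a minimal sketch of this autoregressive process. `toy_next_token` is a hypothetical stand-in for a real language model (it simply returns the last token plus one, modulo 10); the point is only to show that each step's input is the previous step's input plus exactly one new token.

```python
from typing import Callable, List

def generate(prompt: List[int], next_token: Callable[[List[int]], int], steps: int) -> List[List[int]]:
    """Run `steps` inferences and return the input sequence seen at each one."""
    tokens = list(prompt)
    inputs_per_step = []
    for _ in range(steps):
        inputs_per_step.append(list(tokens))  # snapshot of this step's input
        tokens.append(next_token(tokens))      # the output token is appended to the input
    return inputs_per_step

def toy_next_token(tokens: List[int]) -> int:
    """Hypothetical stand-in for a language model."""
    return (tokens[-1] + 1) % 10

inputs = generate([3, 1, 4], toy_next_token, steps=3)
for prev, cur in zip(inputs, inputs[1:]):
    # The previous input is an exact prefix of the current one; only one token is new.
    assert cur[:len(prev)] == prev and len(cur) == len(prev) + 1
print(inputs)  # [[3, 1, 4], [3, 1, 4, 5], [3, 1, 4, 5, 6]]
```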

KVCache stores the reusable computation results from the current inference and loads them for use in the next inference. As a result, unlike typical caches, cache misses never occur.

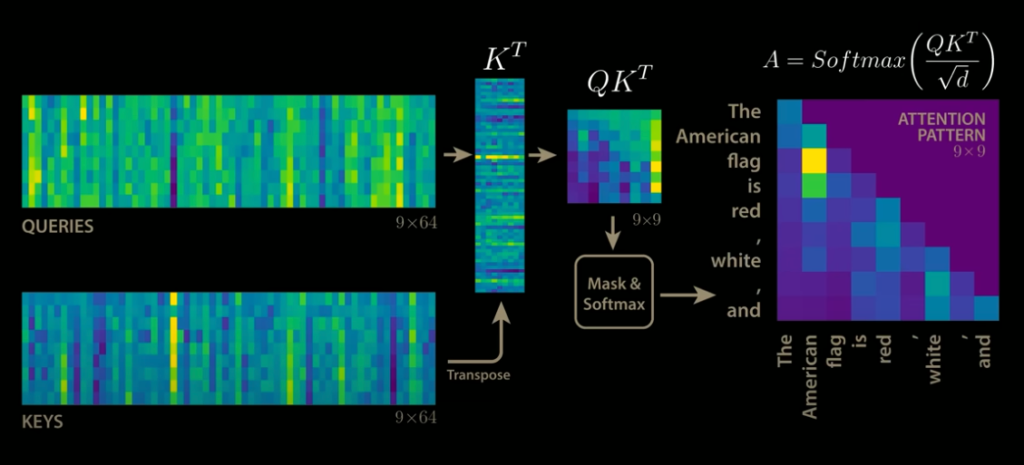

In Attention, the output is computed by multiplying the Query (Q) by the Key (K) (more precisely, by its transpose) to obtain QK, applying Softmax, and then performing a matrix multiplication with the Value (V). When N tokens have been decoded and the (N+1)th token is being inferred, the QK matrix has (N+1) columns, and without caching it is recomputed in full at size (N+1) × (N+1). As a result, the processing time increases as decoding progresses.
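A minimal pure-Python sketch of single-head attention follows. It assumes the standard scaled dot-product form (scores divided by the square root of the head dimension, a detail the text above does not mention) and applies the causal mask discussed below. Without a cache, every call recomputes the full QK matrix, whose size grows with the number of tokens.

```python
import math
from typing import List

Matrix = List[List[float]]

def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def transpose(m: Matrix) -> Matrix:
    return [list(r) for r in zip(*m)]

def softmax(row: List[float]) -> List[float]:
    m = max(row)                      # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def causal_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    d = len(q[0])
    scores = matmul(q, transpose(k))  # QK: one row and one column per token
    weights = []
    for i, row in enumerate(scores):
        # Mask future tokens (j > i) so token i only attends to tokens 0..i.
        masked = [s / math.sqrt(d) if j <= i else -math.inf for j, s in enumerate(row)]
        weights.append(softmax(masked))
    return matmul(weights, v)         # Softmax(QK) multiplied by V

tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for n in (2, 3):
    x = tokens[:n]
    scores = matmul(x, transpose(x))
    print(n, "tokens -> QK is", len(scores), "x", len(scores[0]))
# 2 tokens -> QK is 2 x 2
# 3 tokens -> QK is 3 x 3
out = causal_attention(tokens, tokens, tokens)
```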

When using KVCache, the Key (K) and Value (V) vectors already computed for previous tokens are kept in VRAM. At each step, Q, K, and V are computed only for the newly added token: its query is multiplied by all cached keys to produce the new bottom row of QK, and after Softmax that row is multiplied by the cached values. Because the previous rows of QK are never recomputed, only the newly added token needs to be processed, leading to faster performance.
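The sketch below shows cached decoding for a single head in a single layer. The `KVCache` class, its `decode_step` method, and `attention_without_cache` are illustrative names, not a library API. Each step appends only the new token's K and V, computes one row of QK, and produces the new token's output; a cache-free reference that recomputes every row confirms the results match.

```python
import math
from typing import List

Vector = List[float]

def softmax(xs: Vector) -> Vector:
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

class KVCache:
    """Holds the K and V vectors of every token processed so far (one layer, one head)."""
    def __init__(self) -> None:
        self.keys: List[Vector] = []
        self.values: List[Vector] = []

    def decode_step(self, q: Vector, k: Vector, v: Vector) -> Vector:
        # Only the new token's K and V are added; older ones come from the cache.
        self.keys.append(k)
        self.values.append(v)
        scale = math.sqrt(len(q))
        # The new bottom row of QK: the new query against every cached key.
        row = [sum(a * b for a, b in zip(q, key)) / scale for key in self.keys]
        weights = softmax(row)
        # Weighted sum of the cached values gives the new token's output.
        return [sum(w * val[c] for w, val in zip(weights, self.values)) for c in range(len(v))]

def attention_without_cache(qs: List[Vector], ks: List[Vector], vs: List[Vector]) -> List[Vector]:
    """Reference: recompute every row of QK with a causal mask."""
    n, scale = len(qs), math.sqrt(len(qs[0]))
    out = []
    for i in range(n):
        row = [sum(a * b for a, b in zip(qs[i], ks[j])) / scale if j <= i else -math.inf
               for j in range(n)]
        w = softmax(row)
        out.append([sum(w[j] * vs[j][c] for j in range(n)) for c in range(len(vs[0]))])
    return out

qs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
ks = [[0.5, 0.5], [1.0, -1.0], [0.0, 2.0]]
vs = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
cache = KVCache()
incremental = [cache.decode_step(q, k, v) for q, k, v in zip(qs, ks, vs)]
reference = attention_without_cache(qs, ks, vs)
```

Each call to `decode_step` touches one row of QK, whereas the reference rebuilds all rows; the outputs agree up to floating-point rounding.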

When a new token is added to Q and K, it might seem that not only the bottom row but also the rightmost column of QK would change. However, in Transformers, future tokens are masked so that no token can refer to tokens that come after it; the new rightmost column is masked out in every earlier row, so only the bottom row of QK is updated. As a result, only the bottom row of the attention output, Softmax(QK)V, is updated as well. Since the outputs for earlier tokens do not change in any layer, the K and V that the next layer computes from them do not change either, so KVCache functions correctly even when multiple Attention layers are stacked.
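The check below illustrates why masking keeps the cache valid across stacked layers. It uses a toy two-layer stack in which each layer's Q, K, and V are simply its input (identity projections, an assumption made for brevity; real layers use learned projections). With the causal mask, appending a token leaves the earlier output rows of every layer exactly unchanged; without the mask, they change.

```python
import math
from typing import List

Matrix = List[List[float]]

def softmax(xs: List[float]) -> List[float]:
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x: Matrix, causal: bool) -> Matrix:
    # Toy layer: Q = K = V = x (identity projections, assumed for brevity).
    n, d = len(x), len(x[0])
    out = []
    for i in range(n):
        limit = i + 1 if causal else n  # causal: token i sees only tokens 0..i
        row = [sum(a * b for a, b in zip(x[i], x[j])) / math.sqrt(d) for j in range(limit)]
        w = softmax(row)
        out.append([sum(w[j] * x[j][c] for j in range(limit)) for c in range(d)])
    return out

def stack(x: Matrix, layers: int, causal: bool) -> Matrix:
    for _ in range(layers):
        x = self_attention(x, causal)
    return x

tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
new_token = [2.0, -1.0]

before = stack(tokens, 2, causal=True)
after = stack(tokens + [new_token], 2, causal=True)
print(after[:3] == before)        # True: only the bottom row is new

before_nc = stack(tokens, 2, causal=False)
after_nc = stack(tokens + [new_token], 2, causal=False)
print(after_nc[:3] == before_nc)  # False: earlier rows change without the mask
```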

Without KVCache, the processing time per generated token grows quadratically with the number of input tokens, because the entire QK matrix is recomputed at every step. With KVCache, only one new row of QK is computed per step, so the processing time grows linearly with the number of input tokens.
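A rough way to see the difference is to count the multiplications needed for QK alone at a single decoding step, ignoring the projections, Softmax, and the multiplication with V. `qk_mults_per_step` is a hypothetical helper, and `d = 64` is an assumed head dimension.

```python
def qk_mults_per_step(n_tokens: int, d: int, use_cache: bool) -> int:
    """Multiplications needed for QK at one decoding step with n_tokens in context."""
    # Without a cache, all n_tokens query rows are recomputed; with it, only the new row.
    rows = 1 if use_cache else n_tokens
    # Each row has n_tokens entries, and each entry is a dot product of length d.
    return rows * n_tokens * d

for n in (1000, 2000):
    print(n, qk_mults_per_step(n, 64, use_cache=False), qk_mults_per_step(n, 64, use_cache=True))
# 1000 64000000 64000
# 2000 256000000 128000
```

Doubling the context quadruples the cache-free count but only doubles the cached one.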

In addition to accelerating Transformer decoding, KVCache is also used for prompt caching in LLMs. Prompt caching enables fast execution of multiple different questions about the same context by storing and reusing the KVCache of that context.
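A minimal sketch of prompt caching follows, assuming a hypothetical `compute_kv` that stands in for running the model on one token (in a real model, K and V also depend on the preceding tokens and are produced for every layer). The context's KV entries are computed once and stored under the context's token sequence; each question then starts from a copy of that cached state, and only its own tokens are processed.

```python
from typing import Dict, List, Tuple

KV = Tuple[float, float]

def compute_kv(token: int) -> KV:
    """Hypothetical placeholder for a model forward pass over one token."""
    return (float(token), 0.5 * float(token))

class PromptCache:
    def __init__(self) -> None:
        self._store: Dict[Tuple[int, ...], List[KV]] = {}
        self.tokens_computed = 0  # how many tokens actually went through the "model"

    def _compute(self, token: int) -> KV:
        self.tokens_computed += 1
        return compute_kv(token)

    def kv_for_context(self, context: List[int]) -> List[KV]:
        key = tuple(context)
        if key not in self._store:  # first use: process the whole context once
            self._store[key] = [self._compute(t) for t in context]
        return self._store[key]

    def run(self, context: List[int], question: List[int]) -> List[KV]:
        kv = list(self.kv_for_context(context))       # copy so the shared entry stays untouched
        kv.extend(self._compute(t) for t in question)  # only the question tokens are new work
        return kv

cache = PromptCache()
context = list(range(100))
questions = [[1, 2, 3, 4, 5], [6, 7, 8, 9, 10], [11, 12, 13, 14, 15]]
results = [cache.run(context, q) for q in questions]
print(cache.tokens_computed)  # 115 (100 context tokens once + 3 x 5 question tokens)
```

Without the cache, the same three questions would process 3 × 105 = 315 tokens.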

Moreover, a variation of RAG called CAG (Cache-Augmented Generation) has been proposed. It speeds up RAG by caching entire context documents in the KVCache.

KVCache stores the Key and Value tensors of every layer for every past token in VRAM, so VRAM usage grows significantly as the context gets longer. To address this issue, DeepSeek introduced Multi-head Latent Attention (MLA), a technique that compresses the KVCache.
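The size of the cache can be estimated with simple arithmetic: for every layer, one K vector and one V vector per KV head per token. The sketch below assumes standard multi-head attention (so the number of KV heads equals the number of attention heads) with a hypothetical configuration of 32 layers, 32 heads of dimension 128, and FP16 storage at 2 bytes per element; it does not model any compression scheme.

```python
def kv_cache_bytes(num_layers: int, num_kv_heads: int, head_dim: int,
                   seq_len: int, batch_size: int = 1, bytes_per_elem: int = 2) -> int:
    """Bytes needed to hold the K and V tensors of every layer for seq_len tokens."""
    # Factor 2: one K tensor and one V tensor per layer.
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * batch_size * bytes_per_elem

per_token = kv_cache_bytes(32, 32, 128, seq_len=1)
full = kv_cache_bytes(32, 32, 128, seq_len=4096)
print(per_token / 2**20, "MiB per token")   # 0.5 MiB per token
print(full / 2**30, "GiB for 4096 tokens")  # 2.0 GiB for 4096 tokens
```

Because the size is linear in `seq_len` and `batch_size`, long contexts and large batches quickly dominate VRAM, which is what motivates compressing the cache.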