While LLMs dominate today’s AI discourse, Meta’s LCMs represent a bold leap toward machines that don’t just talk, but think.

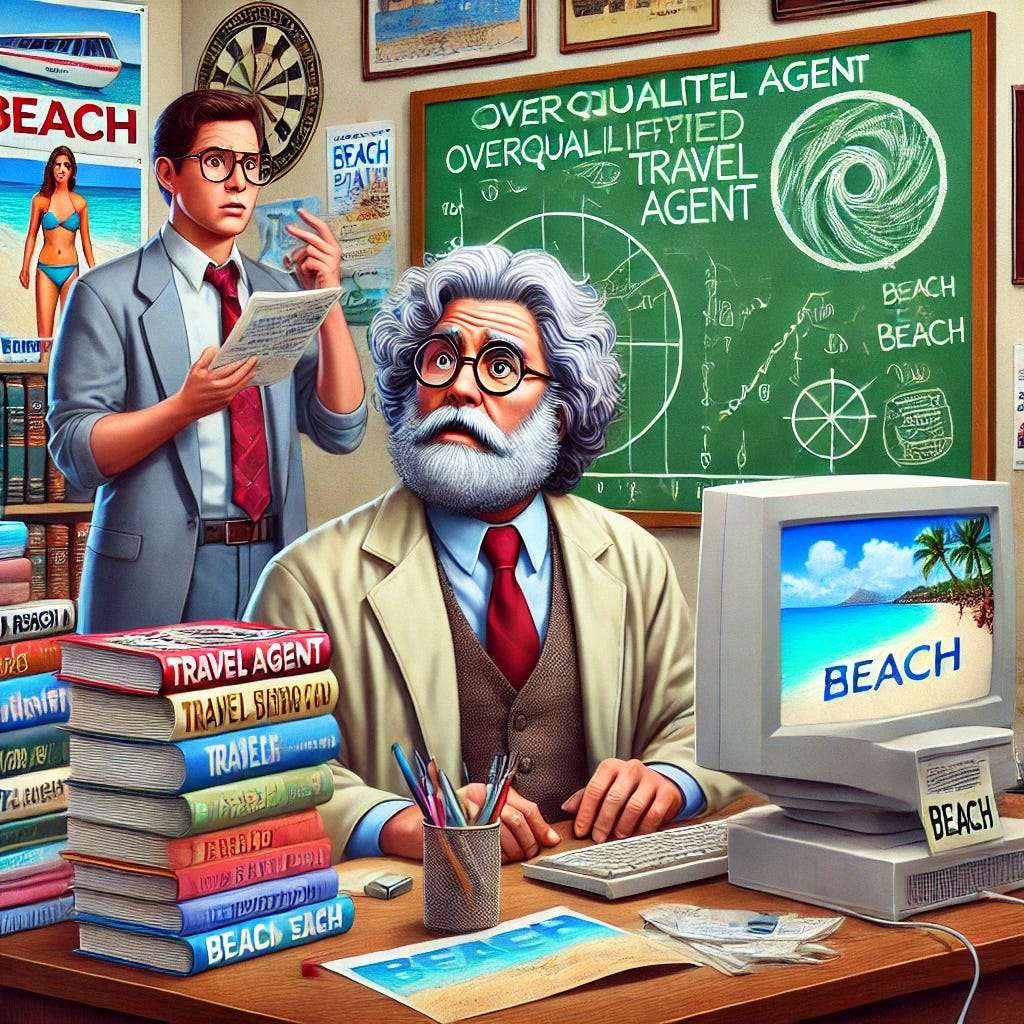

Picture this: you walk into a travel agency (yes, pretend it’s the 90s), and instead of the usual sun-kissed, relaxed travel agent asking where you want to go, you’re greeted by someone who has read every book on travel, studied ancient maritime trade routes, and holds a PhD in cultural anthropology. You tell them, “I just want a week at the beach,” and immediately they launch into a deep analysis of the historical significance of seaside leisure, the psychology of relaxation, and why your choice of a blue-striped beach towel reflects a subconscious yearning for stability.

Welcome to the world of Large Concept Models (LCMs).

So… What Exactly Are Large Concept Models?

You’ve probably heard about Large Language Models (LLMs), like the ones behind ChatGPT, that generate human-like text. Large Concept Models take things up a notch: rather than just predicting words, they aim to model abstract concepts, patterns, and relationships across different domains. They don’t just know facts; they connect dots you didn’t even know were in the same picture.

LCMs don’t stop at “Hawaii has nice beaches.” No, no. They’ll analyze historical weather trends, compare sand granularities, evaluate local seafood sustainability, and consider whether your personality type is better suited to Maui’s adventurous hikes or Kauai’s laid-back vibes. All before you’ve even picked out your sunglasses.

In theory, this is incredible. An LCM could assist in scientific breakthroughs, legal research, and even help design better cities by understanding and predicting human behavior at an unparalleled level. But like our overqualified travel agent, sometimes they go way too deep.

Say you’re planning a vacation. A simple AI model might suggest destinations based on your budget, preferred activities, and past trips. An LCM, on the other hand, might start considering whether your longing for a tropical getaway is rooted in a biological need for vitamin D due to decreased serotonin levels in winter. It might analyze the historical migration patterns of birds near your destination and ponder whether that could subtly affect your mood. You just wanted to book a trip, and now you’re knee-deep in a philosophical discussion about the essence of relaxation.

The potential for LCMs is mind-blowing, but it also raises questions. When does analysis become overanalysis? When do insights become irrelevant to human decision-making? Sure, it’s fascinating that an AI can tell me that my preference for ocean over mountains ties back to some ancient genetic predisposition, but does it actually help me pack my suitcase?

This is the challenge of developing LCMs for real-world use. They’re immensely powerful, but without proper tuning, they risk drowning users in too much information, making simple decisions unnecessarily complicated. Nobody wants an AI that, when asked where to eat, provides a detailed historical and sociological analysis of the evolution of cuisine before actually recommending a restaurant.

Scenario: “Help me plan a 3-day trip to the beach with my kids!”

LLM’s Approach

Step 1: Spits out a wildly creative itinerary:

“Day 1: Hunt for mermaid scales at sunrise! Day 2: Build a sandcastle rivaling Hogwarts! Day 3: Train seagulls to sing ‘Baby Shark’!”

Strengths: Super fun, kids love it.

Oops: Forgets to mention that tides exist. The sandcastle washes away in 10 minutes.

LCM’s Approach

Step 1: Asks boring-but-important questions: “What’s your budget? Allergies? Does your 5-year-old still nap?”

Plan:

“Day 1: Low-tide scavenger hunt (no mermaids, but cool shells). Day 2: Pre-noon castle-building (avoids meltdowns). Day 3: Pre-pack sandwiches (seagulls love stealing fries).”

Strengths: No tears, no sunburns, no bankruptcy.

Final Verdict:

LLMs are the class clowns: hilarious, but why are they microwaving a phone?

LCMs are the hall monitors: smarter, but they’ll yell at you for laughing.

Ultimate Winner: You, because AI chaos = endless entertainment.

- LLMs are like a GPS that only reads poetry: “Turn left where the willow weeps…”

- LCMs are GPS + weather app + pediatrician: “Turn left, pack raincoats, and nap time is at 2 PM.”

- Multi-Modal Learning: Mixes text (your request), images (beach maps), and data (tide tables) like a smoothie of information.

- Knowledge Graphs: Links concepts (“kids” → “short attention spans”, “beach” → “sunscreen SPF 50”).

- Neural-Symbolic Mix: Combines pattern recognition (neural nets) with logic rules (“If kids, then snacks > 3”).

- Meta’s Secret Sauce: Trains on structured datasets (e.g., travel guides, safety protocols) and messy social media posts.
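
To make the knowledge-graph and neural-symbolic ideas in this list a little more concrete, here is a minimal Python sketch. It is purely illustrative and makes no claim about how Meta’s models are actually built: the graph contents, the `related_concepts` and `check_plan` helpers, and the plan fields are all assumptions, borrowed from the beach-trip examples in this list. The “neural” half is also skipped entirely; the plan is simply a hand-written dictionary.

```python
from collections import deque

# Toy knowledge graph: each concept points to the concepts it implies.
# The entries mirror the beach-trip examples; they are illustrative only.
KNOWLEDGE_GRAPH = {
    "kids": ["short attention spans", "snacks"],
    "beach": ["sunscreen SPF 50", "tide tables"],
    "short attention spans": ["pre-noon activities"],
}


def related_concepts(start_concepts):
    """Breadth-first walk over the graph, returning every reachable concept."""
    seen = set(start_concepts)
    queue = deque(start_concepts)
    while queue:
        concept = queue.popleft()
        for neighbor in KNOWLEDGE_GRAPH.get(concept, []):
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return seen


def check_plan(plan):
    """Apply one hand-written symbolic rule: if kids, then snacks > 3."""
    problems = []
    if plan["travelers_include_kids"] and plan["snack_count"] <= 3:
        problems.append("Pack more than 3 snacks when travelling with kids.")
    return problems


# Hypothetical request: a beach trip with kids and only two snacks packed.
concepts = related_concepts(["kids", "beach"])
issues = check_plan({"travelers_include_kids": True, "snack_count": 2})
print(sorted(concepts))
print(issues)
```

Starting from just “kids” and “beach”, the graph walk also surfaces things nobody asked about, like tide tables and pre-noon activities, and the rule flags the under-snacked plan. That is the hall-monitor behavior in miniature.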

Tech Smackdown: LLM vs. LCM

Just like we’d probably tell our overqualified travel agent to dial it back and just suggest a nice beach, developers of LCMs need to ensure these models offer actionable insights rather than existential deep dives. The trick is to make sure these models enhance decision-making rather than overwhelm it.

So, the next time you hear about LCMs shaping industries, just remember: there’s a fine line between helpful and hilariously overqualified. And if your AI ever starts psychoanalyzing your vacation choices, maybe it’s time to tell it, “Hey, let’s keep it simple. Just book the trip.”

Or, you know, just grab your beach towel and go.