Assessing Model Accuracy

With a basic understanding of logistic regression under our belt, our concern now shifts, just as with linear regression, to how well our models predict. As before, we will use caret::train() and fit three 10-fold cross-validated logistic regression models. Extracting the accuracy measures (in this case, classification accuracy), we see that both cv_model1 and cv_model2 had an average accuracy of 83.88%. However, cv_model3, which used all predictor variables in our data, achieved an average accuracy rate of 87.58%.

library(caret)  # provides train(), trainControl(), and resamples()

set.seed(123)
cv_model1 <- train(
  Attrition ~ MonthlyIncome, data = churn_train,
  method = "glm", family = "binomial",
  trControl = trainControl(method = "cv", number = 10)
)

set.seed(123)
cv_model2 <- train(
  Attrition ~ MonthlyIncome + OverTime, data = churn_train,
  method = "glm", family = "binomial",
  trControl = trainControl(method = "cv", number = 10)
)

# full model: all predictor variables
set.seed(123)
cv_model3 <- train(
  Attrition ~ ., data = churn_train,
  method = "glm", family = "binomial",
  trControl = trainControl(method = "cv", number = 10)
)

# extract out-of-sample performance measures
summary(resamples(list(
  model1 = cv_model1,
  model2 = cv_model2,
  model3 = cv_model3
)))$statistics$Accuracy
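To make the idea of an "average accuracy" across folds concrete, here is a minimal base-R sketch of 10-fold cross-validation for a logistic regression. It is an illustration under stated assumptions, not a reproduction of what caret does internally: the data frame `toy`, its columns `x` and `y`, and the 0.5 cutoff are all made up for the example, and the folds are assigned by simple random shuffling.

```r
# Minimal sketch of 10-fold cross-validated accuracy in base R.
# All data are simulated; nothing here comes from churn_train.
set.seed(123)
n <- 500
x <- rnorm(n)
# Simulated binary outcome whose log-odds increase with x
y <- factor(ifelse(runif(n) < plogis(-1 + 1.5 * x), "Yes", "No"),
            levels = c("No", "Yes"))
toy <- data.frame(x = x, y = y)

k <- 10
# Randomly assign each row to one of k folds of (nearly) equal size
fold_id <- sample(rep(seq_len(k), length.out = n))

fold_accuracy <- sapply(seq_len(k), function(i) {
  train_set <- toy[fold_id != i, ]
  test_set  <- toy[fold_id == i, ]
  fit <- glm(y ~ x, data = train_set, family = "binomial")
  # Predicted probability of the second factor level ("Yes")
  p_yes <- predict(fit, newdata = test_set, type = "response")
  pred  <- ifelse(p_yes > 0.5, "Yes", "No")
  mean(pred == test_set$y)  # share of held-out rows classified correctly
})

fold_accuracy        # one accuracy value per fold
mean(fold_accuracy)  # average accuracy across the 10 folds
```

Each fold is held out once, the model is fit on the other nine, and the ten held-out accuracies are averaged. The figures reported for cv_model1 through cv_model3 are averages of this kind.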

We can get a better understanding of our model's performance by assessing the confusion matrix. We can use caret::confusionMatrix() to compute a confusion matrix. We need to supply our model's predicted class and the actuals from our training data. The confusion matrix provides a wealth of information. In particular, we can see that although we do well predicting cases of non-attrition (note the high specificity), our model does particularly poorly predicting actual cases of attrition (note the low sensitivity).

By default, the predict() function predicts the response class for a caret model; however, you can change the type argument to predict the probabilities (see ?caret::predict.train).

# predict class
pred_class <- predict(cv_model3, churn_train)

# create confusion matrix, treating "Yes" (attrition) as the positive class
confusionMatrix(
  data = relevel(pred_class, ref = "Yes"),
  reference = relevel(churn_train$Attrition, ref = "Yes")
)
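To show where sensitivity and specificity come from, the following sketch builds a confusion matrix by hand from a tiny set of made-up predictions and computes the metrics directly. The vectors `actual` and `predicted` are hypothetical and chosen only to give a case with high specificity and low sensitivity; "Yes" is treated as the positive class, as in the call above.

```r
# Toy predicted and actual classes (made up for illustration)
actual    <- factor(c("Yes", "Yes", "Yes", "Yes", "No", "No", "No", "No", "No", "No"),
                    levels = c("Yes", "No"))
predicted <- factor(c("Yes", "No", "No", "No", "No", "No", "No", "No", "No", "Yes"),
                    levels = c("Yes", "No"))

# Rows are predictions, columns are actuals
cm <- table(Predicted = predicted, Actual = actual)
cm

tp <- cm["Yes", "Yes"]  # attrition correctly flagged
fn <- cm["No",  "Yes"]  # attrition missed
tn <- cm["No",  "No"]   # non-attrition correctly identified
fp <- cm["Yes", "No"]   # non-attrition wrongly flagged

sensitivity <- tp / (tp + fn)       # share of actual "Yes" cases caught: 0.25
specificity <- tn / (tn + fp)       # share of actual "No" cases caught: 5/6
accuracy    <- (tp + tn) / sum(cm)  # overall share correct: 0.6
```

In this toy case the classifier is right about most "No" cases but misses three of the four "Yes" cases. The text describes the same pattern for the attrition model.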

One thing to point out: in the confusion matrix above you will notice the metric No Information Rate: 0.839. This is the proportion of the majority class (non-attrition) in our training data (table(churn_train$Attrition) %>% prop.table()). Consequently, if we simply predicted "No" for every employee, we would still get an accuracy rate of 83.9%. Therefore, our goal is to maximize our accuracy rate over and above this no-information baseline while also trying to balance sensitivity and specificity.
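The no-information baseline can be checked with a few lines of base R. This sketch uses a hypothetical outcome vector `attrition` whose class counts (839 "No", 161 "Yes") are made up to mirror the 0.839 rate quoted above; they are not the actual counts in churn_train.

```r
# Toy outcome vector with made-up class counts (not the real churn_train)
attrition <- factor(rep(c("No", "Yes"), times = c(839, 161)))

# Share of each class in the data
class_share <- prop.table(table(attrition))
class_share

# No-information rate: accuracy of always predicting the majority class
nir <- max(class_share)
majority_class <- names(which.max(class_share))

# Confirm by scoring a "classifier" that predicts the majority class every time
always_majority   <- rep(majority_class, length(attrition))
baseline_accuracy <- mean(always_majority == attrition)

nir                # 0.839
baseline_accuracy  # 0.839
```

Any model worth keeping should beat this figure, which is why accuracy alone can look impressive on imbalanced data while the minority class is barely detected.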

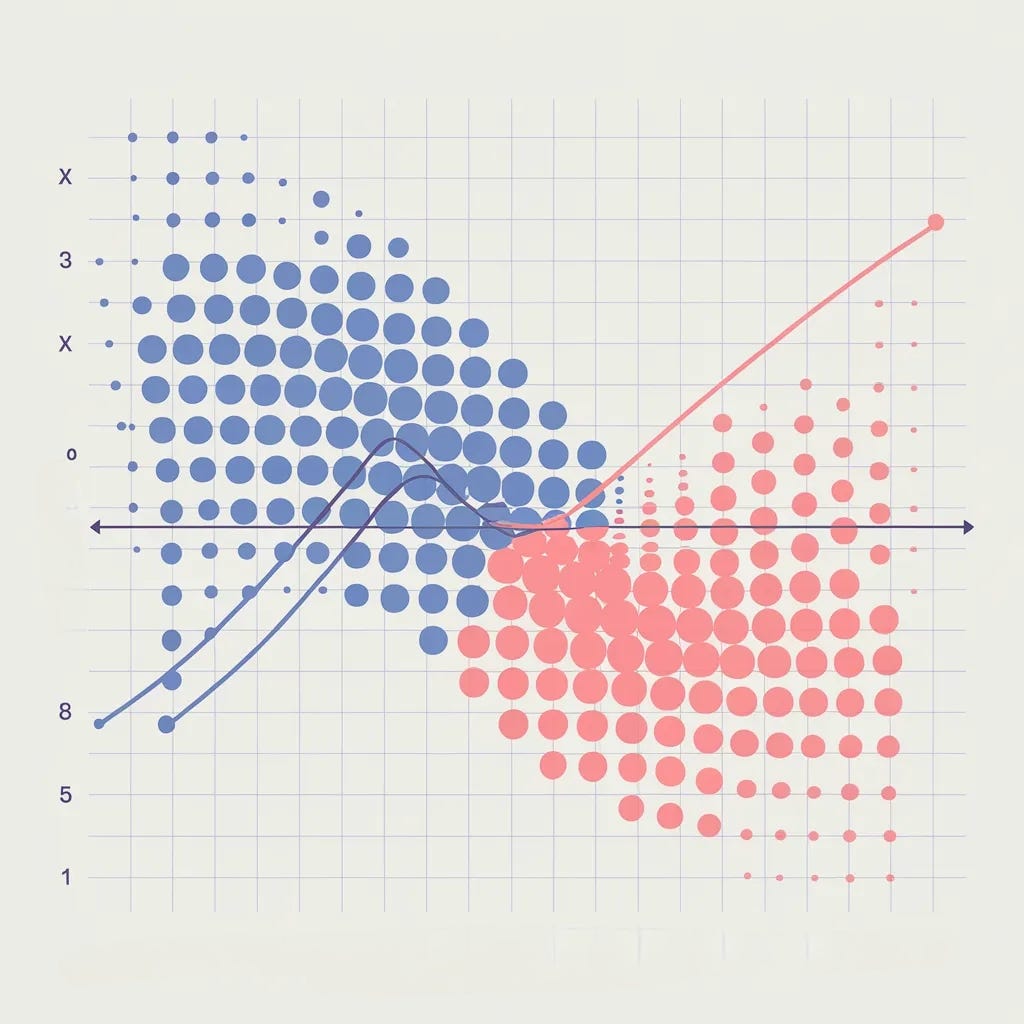

To that end, we plot the ROC curve, which is displayed in the figure. If we compare our simple model (cv_model1) to our full model (cv_model3), we see the lift achieved with the more accurate model.

# Load the required packages
library(ROCR)
library(dplyr)  # provides the %>% pipe

# Compute predicted probabilities of attrition ("Yes")
m1_prob <- predict(cv_model1, churn_train, type = "prob")$Yes
m3_prob <- predict(cv_model3, churn_train, type = "prob")$Yes

# Compute ROC curve coordinates (true vs. false positive rate)
# for cv_model1 and cv_model3
perf1 <- prediction(m1_prob, churn_train$Attrition) %>%
  performance(measure = "tpr", x.measure = "fpr")
perf2 <- prediction(m3_prob, churn_train$Attrition) %>%
  performance(measure = "tpr", x.measure = "fpr")

# Plot ROC curves for cv_model1 and cv_model3
plot(perf1, col = "black", lty = 2)
plot(perf2, add = TRUE, col = "blue")
legend(0.8, 0.2, legend = c("cv_model1", "cv_model3"),
       col = c("black", "blue"), lty = 2:1, cex = 0.6)
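For readers who want to see what lies behind an ROC curve and its AUC, the sketch below computes both by hand in base R. The `labels` and `scores` vectors are made up for illustration, and the approach (sort by score, accumulate true and false positive rates, apply the trapezoidal rule) is a simplified version that assumes no tied scores. It is not a description of ROCR's internals.

```r
# Made-up labels (1 = attrition) and predicted probabilities
labels <- c(1, 1, 0, 1, 0, 0, 1, 0, 0, 0)
scores <- c(0.9, 0.8, 0.7, 0.6, 0.55, 0.4, 0.35, 0.3, 0.2, 0.1)

# Sweep the classification threshold from high to low
ord <- order(scores, decreasing = TRUE)
labels_sorted <- labels[ord]

# Cumulative true and false positive rates, starting at (0, 0)
tpr <- c(0, cumsum(labels_sorted) / sum(labels_sorted))
fpr <- c(0, cumsum(1 - labels_sorted) / sum(1 - labels_sorted))

# Area under the ROC curve via the trapezoidal rule
auc <- sum(diff(fpr) * (head(tpr, -1) + tail(tpr, -1)) / 2)

# Cross-check: AUC equals the share of (positive, negative) pairs
# in which the positive case receives the higher score
auc_pairs <- mean(outer(scores[labels == 1], scores[labels == 0], ">"))

auc        # 20/24, about 0.833
auc_pairs  # same value
```

The pairwise reading is a useful way to interpret the AUC: a model with a larger AUC ranks actual attrition cases above non-attrition cases more often. With ROCR, the same quantity should be available through `performance()` with `measure = "auc"`.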

Similar to linear regression, we can perform a PLS logistic regression to assess whether reducing the dimension of our numeric predictors helps to improve accuracy. There are 16 numeric features in our data set, so this code performs a 10-fold cross-validated PLS model while tuning the number of principal components to use from 1–16. The optimal model uses 14 principal components, which does not reduce the dimension by much. Even so, the mean accuracy of 0.876 is no better than the average CV accuracy of cv_model3 (0.876).

# Perform 10-fold CV on a PLS model, tuning the number of PCs to
# use as predictors
set.seed(123)
cv_model_pls <- train(
  Attrition ~ ., data = churn_train,
  method = "pls", family = "binomial",
  trControl = trainControl(method = "cv", number = 10),
  preProcess = c("zv", "center", "scale"),
  tuneLength = 16
)

The increase in the AUC represents the "lift" that we achieve with model 3.

# Model with highest cross-validated accuracy
cv_model_pls$bestTune

# Plot cross-validated accuracy
ggplot(cv_model_pls)