🚀 Building a Real-Time Bitcoin Price Prediction System with Azure, Databricks, and Power BI

If you've ever stared at a Bitcoin price chart and wondered whether you could predict its next move, you're not alone. But instead of just guessing, I decided to build a real-time prediction system that uses machine learning and live data to forecast the next price point. Here's how I brought this project to life, step by step, byte by byte.

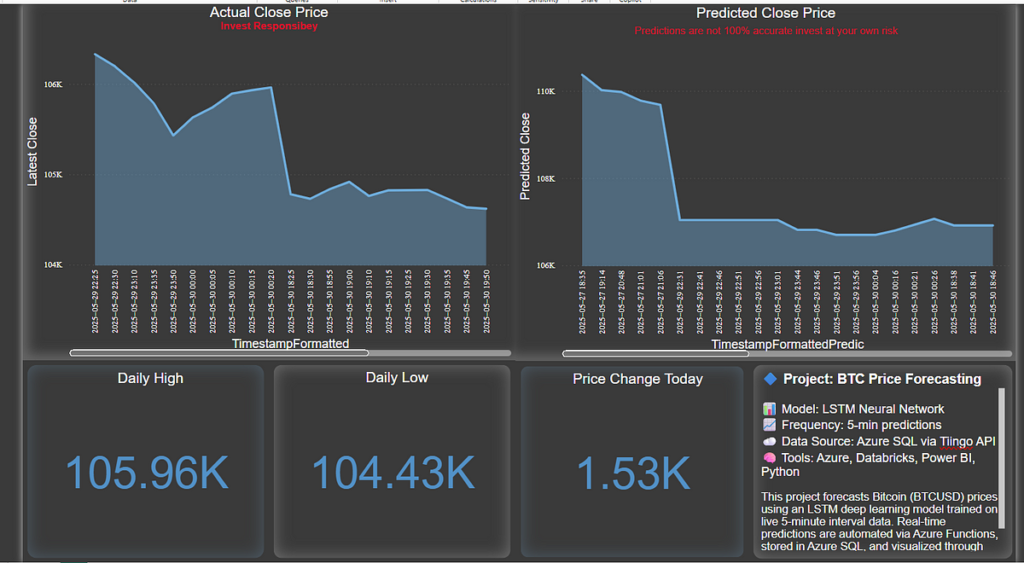

This project is a full-fledged real-time Bitcoin price prediction pipeline that:

- Collects BTC price data every minute.

- Predicts the next 5-minute close price using an LSTM model.

- Stores both actual and predicted prices in Azure SQL.

- Backs up the raw data in Azure Blob Storage.

- Visualizes real-time updates on a live Power BI dashboard.

The system is built from the following components:

- Tingo API: Fetches live Bitcoin data.

- Azure Functions: Triggers every minute to fetch BTC data from the Tingo API.

- Azure Storage: Temporarily stores the data for processing.

- Azure Blob Storage: Stores the raw data as a backup.

- Azure SQL Database: Stores both historical data and model predictions.

- Databricks: Hosts and runs the LSTM model that predicts the next 5-minute close.

- Power BI: Connects to Azure SQL via DirectQuery for live dashboard updates.

Setting up the Azure resources:

- Azure Function App: Configured a timer trigger to call the Tingo API every 5 minutes.

- Blob Storage: Linked to the Function App for raw data backup.

- Azure SQL: Created two tables, `StockPrices` and `BTC_Predictions`.
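The post only names the two tables, so the column layout below is a hypothetical sketch. It uses Python's built-in `sqlite3` as a local stand-in for Azure SQL so it runs anywhere; in Azure SQL you would write equivalent DDL with types such as `DATETIME2` and `FLOAT`. Column names avoid `open`/`close`, which are reserved words in T-SQL.

```python
import sqlite3

# Hypothetical schema: only the table names come from the project.
# sqlite3 stands in for Azure SQL so this sketch runs locally.
SCHEMA = """
CREATE TABLE IF NOT EXISTS StockPrices (
    ts           TEXT PRIMARY KEY,  -- candle timestamp, ISO 8601 UTC
    open_price   REAL NOT NULL,
    high_price   REAL NOT NULL,
    low_price    REAL NOT NULL,
    close_price  REAL NOT NULL,
    volume       REAL NOT NULL
);
CREATE TABLE IF NOT EXISTS BTC_Predictions (
    ts               TEXT PRIMARY KEY,  -- timestamp the prediction is for
    predicted_close  REAL NOT NULL,
    created_at       TEXT NOT NULL      -- when the prediction was made
);
"""


def create_tables(conn: sqlite3.Connection) -> None:
    """Create both tables if they do not already exist."""
    conn.executescript(SCHEMA)
    conn.commit()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    create_tables(conn)
    names = [row[0] for row in conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name")]
    print(names)  # ['BTC_Predictions', 'StockPrices']
```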

The ingestion function:

- Is written in Python and deployed from VS Code.

- Fetches the JSON response from the Tingo API.

- Pushes the parsed data to Azure SQL and Blob Storage.
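Here is a minimal sketch of the parsing half of such a function. It assumes the API returns a list of objects that each carry a `priceData` array of OHLCV bars; verify that against the real Tingo response. Timestamps are truncated to the minute in UTC, which also keeps minute-level granularity available downstream. The Azure Functions wiring is left out: a timer trigger takes a six-field NCRONTAB expression, so `0 */5 * * * *` fires every five minutes, and the rows and backup body would then go out through a parameterized `executemany` on your SQL driver and the Blob Storage SDK's `upload_blob`. The blob path layout is hypothetical.

```python
import json
from datetime import datetime, timezone
from typing import Any, List, Tuple

# (ts, open, high, low, close, volume)
Row = Tuple[str, float, float, float, float, float]


def to_minute(ts: str) -> str:
    """Parse an ISO-8601 timestamp and truncate it to the minute, in UTC."""
    dt = datetime.fromisoformat(ts.replace("Z", "+00:00"))
    if dt.tzinfo is None:  # treat naive timestamps as UTC
        dt = dt.replace(tzinfo=timezone.utc)
    return dt.astimezone(timezone.utc).replace(second=0, microsecond=0).isoformat()


def parse_price_rows(payload: List[dict]) -> List[Row]:
    """Flatten the (assumed) payload shape into StockPrices-style rows."""
    rows: List[Row] = []
    for entry in payload:
        for bar in entry.get("priceData", []):
            rows.append((
                to_minute(bar["date"]),
                float(bar["open"]), float(bar["high"]),
                float(bar["low"]), float(bar["close"]),
                float(bar["volume"]),
            ))
    return rows


def build_backup(payload: Any, fetched_at: datetime) -> Tuple[str, str]:
    """Return a (blob name, JSON body) pair for the raw-data backup."""
    name = fetched_at.strftime("raw/btc/%Y/%m/%d/%H%M%S.json")  # hypothetical layout
    return name, json.dumps(payload)
```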

In Databricks, I:

- Used a Databricks notebook to preprocess the data.

- Trained an LSTM model using TensorFlow/Keras.

- Saved the scaler and model artifacts.

- Added prediction code that reads the latest data from Azure SQL and writes the predicted prices back.

- Separated the training and prediction notebooks.

- Scheduled the prediction notebook with the Databricks job scheduler to run every 5 minutes.
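The preprocessing and prediction-input steps can be sketched with NumPy alone. This assumes one close per minute and reads "next 5-minute close" as the close five steps after the last observed point; the 60-step lookback is an arbitrary placeholder. The min/max scaling mirrors what scikit-learn's `MinMaxScaler` does, and `lo`/`span` are the values you would persist next to the model so the prediction notebook applies identical scaling. All function names are hypothetical.

```python
import numpy as np

# Assumptions: one close per minute; HORIZON=5 means "five minutes after
# the last observed close". LOOKBACK is a placeholder, not a tuned value.
LOOKBACK = 60
HORIZON = 5


def fit_minmax(train: np.ndarray) -> tuple:
    """Learn min and range on training data only (same idea as MinMaxScaler)."""
    lo, hi = float(np.min(train)), float(np.max(train))
    span = hi - lo if hi > lo else 1.0  # avoid division by zero on flat data
    return lo, span


def scale(x: np.ndarray, lo: float, span: float) -> np.ndarray:
    return (x - lo) / span


def unscale(x: np.ndarray, lo: float, span: float) -> np.ndarray:
    return x * span + lo


def make_windows(series: np.ndarray, lookback: int, horizon: int):
    """X[i] = series[i:i+lookback]; y[i] = series[i+lookback+horizon-1]."""
    n = len(series) - lookback - horizon + 1
    if n <= 0:
        raise ValueError("series is too short for this lookback/horizon")
    X = np.stack([series[i:i + lookback] for i in range(n)])
    y = np.array([series[i + lookback + horizon - 1] for i in range(n)])
    # Keras LSTM layers expect input shaped (samples, timesteps, features)
    return X[..., np.newaxis], y


def latest_window(series: np.ndarray, lookback: int, lo: float, span: float) -> np.ndarray:
    """Scale the most recent `lookback` closes into a (1, lookback, 1) batch."""
    if len(series) < lookback:
        raise ValueError("not enough history for one window")
    window = scale(np.asarray(series[-lookback:], dtype=float), lo, span)
    return window.reshape(1, lookback, 1)
```

In the prediction notebook, something like `unscale(model.predict(latest_window(closes, LOOKBACK, lo, span)), lo, span)` would turn the scaled output back into a dollar price, assuming `model` is the trained Keras model loaded from the saved artifacts and `closes` holds the latest prices read from Azure SQL.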

In Power BI, I:

- Used DirectQuery mode to connect to Azure SQL.

- Created dynamic visuals for:

  - Latest BTC close price

  - Predicted price

  - Price difference (change)

  - Daily max/min values

  - Volume chart for the past 3 days

- Used custom formatting and a black background for a modern look.
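In Power BI these tiles would normally be DAX measures over the DirectQuery tables; the Python sketch below only pins down what each tile computes. It assumes "price difference" means the predicted close minus the latest actual close, and the function name is hypothetical.

```python
from datetime import date, datetime
from typing import Dict, List, Optional, Tuple


def dashboard_kpis(
    prices: List[Tuple[datetime, float]], predicted_close: float, day: date
) -> Dict[str, Optional[float]]:
    """Compute the values behind the KPI tiles from (timestamp, close) rows.

    Assumption: "change" = predicted close minus the latest actual close.
    """
    if not prices:
        raise ValueError("need at least one actual price")
    ordered = sorted(prices)  # oldest -> newest by timestamp
    latest_close = ordered[-1][1]
    todays = [close for ts, close in ordered if ts.date() == day]
    change = predicted_close - latest_close
    return {
        "latest_close": latest_close,
        "predicted_close": predicted_close,
        "change": change,
        "change_pct": 100 * change / latest_close,
        "day_max": max(todays) if todays else None,
        "day_min": min(todays) if todays else None,
    }
```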

Challenges along the way:

- API limits: Had to track Tingo's daily and hourly request limits.

- Azure quotas: Requested a vCPU quota increase for the Databricks cluster.

- Push failures: GitHub push protection flagged secrets, so I cleaned the history using `git filter-repo`.

- Timestamp formatting: Made sure the time granularity in Power BI included minutes.
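Tracking API limits starts with some arithmetic: polling every 5 minutes costs 60 / 5 = 12 calls per hour and 12 × 24 = 288 per day, while polling every minute costs 60 per hour and 1,440 per day. A sliding-window counter like the one below can refuse calls before a cap is hit. It is a sketch: the caps are placeholders for your API plan's actual limits, and because the state lives in memory it resets whenever the Functions host restarts, so a production version would persist the counts (for example in a table).

```python
from collections import deque
from datetime import datetime, timedelta

HOUR = timedelta(hours=1)
DAY = timedelta(days=1)


class RequestBudget:
    """Sliding-window counter for per-hour and per-day request caps.

    The caps are placeholders; use the limits of your own API plan.
    State lives in memory only, so it resets when the host restarts.
    """

    def __init__(self, per_hour: int, per_day: int) -> None:
        self.per_hour = per_hour
        self.per_day = per_day
        self.calls = deque()  # timestamps of calls made in the last 24 hours

    def try_acquire(self, now: datetime) -> bool:
        """Record a call and return True if both caps allow it, else return False."""
        while self.calls and now - self.calls[0] >= DAY:
            self.calls.popleft()  # drop calls older than 24 hours
        in_last_hour = sum(1 for t in self.calls if now - t < HOUR)
        if in_last_hour >= self.per_hour or len(self.calls) >= self.per_day:
            return False
        self.calls.append(now)
        return True
```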

The finished dashboard offers:

- Real-time updates

- A clear distinction between actual and predicted prices

- KPI tiles for quick glances

- Time-series line charts with custom timestamps

- A modern dark theme for a professional look

Key takeaways:

- Building a real-time ML pipeline is achievable with cloud platforms.

- Automation is key: use Azure Functions and Databricks jobs.

- Visualization matters: a good dashboard can make the data come alive.

- Security is essential: always use `.gitignore` and clean your repo before pushing.
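One way to keep secrets out of the repository in the first place is to read them from environment variables. Azure Function App application settings are exposed to the code as environment variables, and for local runs they come from `local.settings.json`, which belongs in `.gitignore`. The setting names and helper functions below are hypothetical.

```python
import os
from typing import Dict, Mapping, Optional

# Hypothetical setting names; use whatever your Function App defines.
REQUIRED_SETTINGS = ("TINGO_API_KEY", "SQL_CONNECTION_STRING", "BLOB_CONNECTION_STRING")


def load_settings(env: Optional[Mapping[str, str]] = None) -> Dict[str, str]:
    """Read secrets from the environment instead of hard-coding them in source."""
    env = os.environ if env is None else env
    missing = [name for name in REQUIRED_SETTINGS if not env.get(name)]
    if missing:
        # Fail fast, naming only the missing settings, never any secret values
        raise RuntimeError("Missing required settings: " + ", ".join(missing))
    return {name: env[name] for name in REQUIRED_SETTINGS}


def mask(secret: str, keep: int = 4) -> str:
    """Hide all but the last `keep` characters of a secret, e.g. for log lines."""
    if len(secret) <= keep:
        return "*" * len(secret)
    return "*" * (len(secret) - keep) + secret[-keep:]
```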

Tech stack:

- Cloud: Azure Functions, Azure Storage, Azure Blob Storage, Azure SQL, Databricks

- ML: Python, LSTM (Keras), scikit-learn

- Visualization: Power BI

- Others: Tingo API, GitHub

Possible next steps:

- Integrate more features, such as RSI and moving averages.

- Extend predictions to 10- or 15-minute intervals.

- Add email or Slack alerts on major price changes.

- Use a message queue such as Azure Event Hubs for better data-flow control.
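As a starting point for the feature ideas, here is a stdlib-only sketch of a simple moving average, an RSI using Wilder's smoothing (14 periods is the conventional default), and a threshold check that an email or Slack alert could hang off. The alert threshold is a placeholder, and how these features would be fed into the LSTM is left open.

```python
from typing import List, Optional, Sequence


def sma(values: Sequence[float], window: int) -> List[Optional[float]]:
    """Simple moving average; None until `window` values are available."""
    out: List[Optional[float]] = []
    running = 0.0
    for i, v in enumerate(values):
        running += v
        if i >= window:
            running -= values[i - window]  # slide the window forward
        out.append(running / window if i >= window - 1 else None)
    return out


def rsi(values: Sequence[float], period: int = 14) -> List[Optional[float]]:
    """Relative Strength Index with Wilder's smoothing; None for the first `period` points."""
    if len(values) <= period:
        return [None] * len(values)
    deltas = [cur - prev for prev, cur in zip(values, values[1:])]
    gains = [max(d, 0.0) for d in deltas]
    losses = [max(-d, 0.0) for d in deltas]

    def to_rsi(avg_gain: float, avg_loss: float) -> float:
        if avg_loss == 0:
            return 100.0 if avg_gain > 0 else 50.0  # flat stretch: treat as neutral
        return 100.0 - 100.0 / (1.0 + avg_gain / avg_loss)

    avg_gain = sum(gains[:period]) / period  # seed with a plain average
    avg_loss = sum(losses[:period]) / period
    out: List[Optional[float]] = [None] * period
    out.append(to_rsi(avg_gain, avg_loss))
    for gain, loss in zip(gains[period:], losses[period:]):
        avg_gain = (avg_gain * (period - 1) + gain) / period
        avg_loss = (avg_loss * (period - 1) + loss) / period
        out.append(to_rsi(avg_gain, avg_loss))
    return out


def should_alert(prev_close: float, new_close: float, threshold_pct: float) -> bool:
    """True when the absolute percentage move reaches the (placeholder) threshold."""
    return 100 * abs(new_close - prev_close) / prev_close >= threshold_pct
```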

Thanks for reading! 🙌

If you found this interesting, check out the GitHub repo or send me a message. I'd love to connect and chat more about real-time data pipelines or anything crypto! 🚀