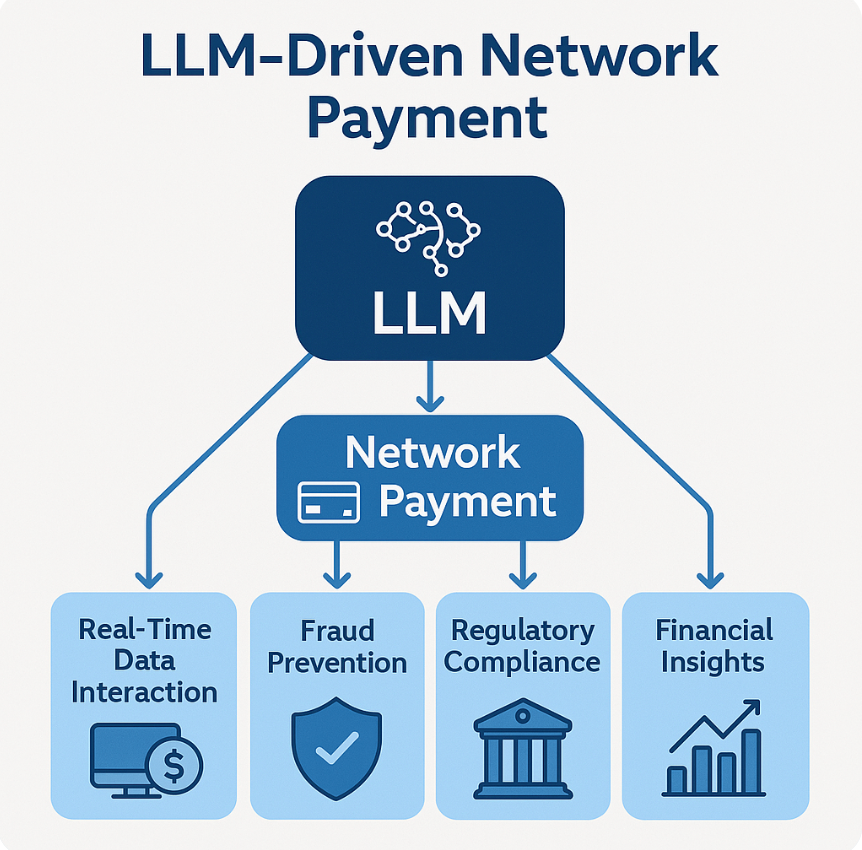

Large Language Models (LLMs) are poised to revolutionize digital finance, especially in network payment systems. Their integration brings transformative potential to key areas such as real-time data access, fraud detection, regulatory compliance, payment reconciliation, and financial analytics. This article explores how LLM-powered platforms are reshaping financial operations by delivering intelligent automation, better decision-making, and unified access to critical payment data across the ecosystem.

Problem Landscape and LLM-Driven Solutions

1. Fragmented Data and Disconnected Systems:

As-Is:

Traditional payment systems often involve multiple fragmented platforms and databases in which transaction data, fraud detection, payment reconciliation, and customer support systems are siloed. This leads to inefficiencies, duplicated effort, and difficulty obtaining a unified view of financial data.

To-Be:

- Unified Query Platform: The LLM-driven platform aggregates all payment-related data (transaction statuses, fraud checks, reconciliation, etc.) into a single point of access. Users can query a wide range of financial information seamlessly, without navigating separate systems.


2. Slow and Inefficient Customer Support:

As-Is:

- Businesses in the payment ecosystem often rely on customer service representatives to answer repetitive, time-consuming queries about payment statuses, transaction histories, and fraud. This results in long response times and high customer service costs.

To-Be:

- Instant Responses: By applying natural language processing (NLP) through an LLM, IntelliQuery Paynet automates responses to payment-related inquiries, providing real-time answers to customer questions without human intervention. This shortens response times, reduces reliance on support teams, and improves overall customer satisfaction.

- 24/7 Availability: The platform lets customers, merchants, and financial professionals get answers to their queries at any time, improving the customer experience.

3. Complex Fraud Detection:

As-Is:

Fraud detection in payment systems often involves complex, time-consuming processes. Identifying fraudulent transactions requires analyzing patterns across large datasets and working across multiple systems. Fraud detection solutions that are siloed or lack real-time analysis can miss or delay the identification of fraudulent activity, leading to financial losses.

To-Be:

- Real-Time Fraud Detection: The LLM-powered platform integrates machine learning and fraud detection algorithms, enabling real-time identification and flagging of suspicious transactions. The system can immediately notify users about potentially fraudulent activity and provide insight into a transaction's risk profile.

- Proactive Fraud Prevention: The system lets businesses act immediately to stop fraud, shrinking the window of opportunity for fraudsters and minimizing financial losses.
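
The sketch below illustrates real-time flagging. It scores a new transaction amount against the customer's recent history using a z-score. This is a simple statistical rule chosen for illustration, not the platform's machine learning model. The function name, the 3.0 threshold, and the five-transaction minimum are hypothetical choices.

```python
from statistics import mean, pstdev
from typing import Sequence


def is_suspicious(amount: float, history: Sequence[float],
                  z_threshold: float = 3.0, min_history: int = 5) -> bool:
    """Flag an amount that deviates sharply from the customer's past amounts.

    Hypothetical rule: |amount - mean| / population stdev > z_threshold.
    """
    if len(history) < min_history:
        return False  # Too little history to judge; defer to other checks.
    mu = mean(history)
    sigma = pstdev(history)
    if sigma == 0:
        # Every past amount was identical, so any different amount stands out.
        return amount != mu
    return abs(amount - mu) / sigma > z_threshold


recent = [20.0, 25.0, 22.0, 30.0, 18.0]
print(is_suspicious(500.0, recent))  # True
print(is_suspicious(24.0, recent))   # False
```

A real deployment would combine many such signals (velocity, geolocation, device) in a trained model. A single rule like this one is only a starting point.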

4. Manual Query Management and Data Retrieval:

As-Is:

In traditional systems, businesses rely on manual processes to query transaction data, reconcile payments, or verify payment statuses. This often involves logging into multiple systems, cross-referencing data, and spending time finding and analyzing the data by hand.

To-Be:

- Natural Language Queries: The platform lets users enter natural language queries to retrieve complex financial data directly, removing the need to cross-reference data manually or navigate multiple systems.

- Data Accessibility: Users can ask simple questions like “Has payment 12345 been processed?” or “What is the status of transaction X?” and get immediate answers, saving time and resources.
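
For narrow, high-volume questions like these, a deterministic pre-check can extract the entity before an LLM call, or instead of one. The sketch below assumes transaction IDs are numeric. The regular expressions and the function name are hypothetical. Anything the pre-check does not match would still be handed to the LLM.

```python
import re
from typing import Optional

# Hypothetical pattern: "payment" or "transaction", an optional "#", then a numeric ID.
ID_PATTERN = re.compile(r"\b(?:payment|transaction)\s*#?\s*(\d+)", re.IGNORECASE)
STATUS_WORDS = re.compile(r"\b(?:status|processed|settled)\b", re.IGNORECASE)


def extract_status_query(question: str) -> Optional[dict]:
    """Return {"intent", "transaction_id"} for a status question, else None."""
    id_match = ID_PATTERN.search(question)
    if id_match is None or STATUS_WORDS.search(question) is None:
        return None  # Not a recognizable status question; hand it to the LLM.
    return {"intent": "payment_status", "transaction_id": id_match.group(1)}


print(extract_status_query("Has payment 12345 been processed?"))
# {'intent': 'payment_status', 'transaction_id': '12345'}
```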

5. Inefficiency in Payment Reconciliation:

As-Is:

Reconciling payments across different systems (e.g., payment processors, banks, merchants) can be an error-prone, manual, and time-consuming process. Payment discrepancies or delays are often discovered after the fact, causing friction with customers and operational inefficiencies.

To-Be:

- Real-Time Reconciliation: The platform integrates with backend payment processing systems to provide up-to-date, accurate payment status and reconciliation information in real time. This lets businesses identify discrepancies quickly and resolve them before they become bigger issues.

- Automated Reports: IntelliQuery Paynet can generate automated reconciliation reports that help businesses stay on top of their payments and detect mismatches quickly.
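
At its core, an automated reconciliation report compares two ledgers record by record. The sketch below assumes each side can be exported as a mapping from transaction ID to amount. The ledger shapes and sample IDs are hypothetical. `Decimal` is used so that currency amounts compare exactly rather than through floating point.

```python
from decimal import Decimal
from typing import Dict, List


def reconcile(processor: Dict[str, Decimal], bank: Dict[str, Decimal]) -> Dict[str, List[str]]:
    """Compare two ledgers keyed by transaction ID and list every discrepancy."""
    shared = processor.keys() & bank.keys()
    return {
        # Recorded by the processor but never seen by the bank.
        "missing_in_bank": sorted(processor.keys() - bank.keys()),
        # Credited by the bank with no matching processor record.
        "missing_in_processor": sorted(bank.keys() - processor.keys()),
        # Present in both ledgers, but the amounts disagree.
        "amount_mismatch": sorted(t for t in shared if processor[t] != bank[t]),
    }


processor = {"T1": Decimal("100.00"), "T2": Decimal("50.00"), "T3": Decimal("75.10")}
bank = {"T1": Decimal("100.00"), "T2": Decimal("49.50"), "T4": Decimal("12.00")}
print(reconcile(processor, bank))
# {'missing_in_bank': ['T3'], 'missing_in_processor': ['T4'], 'amount_mismatch': ['T2']}
```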

6. Inconsistent Compliance and Regulatory Monitoring:

As-Is:

- Financial transactions must comply with a variety of regulations and industry standards, such as PCI-DSS, GDPR, and anti-money laundering (AML) laws. Ensuring that payment data is handled properly and in compliance with these rules requires constant monitoring, which can be complex and cumbersome.

To-Be:

- Built-in Compliance Features: The platform is designed to adhere to regulatory standards like PCI-DSS and GDPR, ensuring that user data is handled securely and appropriately. When accessing financial data, the platform automatically

7. Lack of Real-Time Insights for Financial Decision-Making:

As-Is:

- Financial professionals and business leaders often lack tools for accessing up-to-date insights into transaction statuses, payment trends, or fraud patterns. This lack of real-time information can delay decision-making, prevent timely responses to issues, and hinder strategic planning.

To-Be:

- Real-Time Dashboards and Reports: The platform provides real-time dashboards and analytical reports that help financial decision-makers monitor payment volumes, fraud trends, and transaction statuses. This lets businesses make data-driven decisions, forecast financial trends, and allocate resources more effectively.

- Actionable Insights: The system does not just present raw data; it translates transaction information into actionable insights that can inform strategic decisions and optimize operations.

An LLM-driven Network Payment Inquiry Platform addresses these challenges by letting businesses, merchants, financial institutions, and end users interact with financial data through natural language queries. The platform uses advanced natural language processing (NLP) to interpret and answer complex payment-related inquiries, making financial data more accessible, actionable, and secure.

How It Works

The LLM-Driven Network Payment Inquiry Platform consists of several key components that work together to provide a seamless, intelligent user experience. Here is a breakdown of these components and their interactions:

1. User Interface (UI):

Function:

- Provides the front-end interface through which users interact with the platform.

- Captures user input (text or voice queries).

- Displays the LLM-generated responses and any related visualizations.

Interaction:

- Receives user queries and sends them to the backend.

- Displays responses received from the backend.

- Handles user authentication and session management.

2. API Gateway:

Function:

- Acts as the single entry point for all API requests.

- Handles routing, authentication, and rate limiting.

- Provides a layer of security and abstraction.

Interaction:

- Receives user queries from the UI.

- Routes queries to the appropriate backend services.

- Receives responses from backend services and sends them to the UI.
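
Rate limiting at a gateway is commonly implemented with a token bucket. The sketch below is one such implementation under stated assumptions. Limits are tracked per client ID in process memory; a production gateway would typically keep them in a shared store. The class names and parameters are hypothetical. The clock is injectable so the behavior can be tested without sleeping.

```python
import time
from typing import Callable, Dict


class TokenBucket:
    """Allows bursts of up to `capacity` requests, refilled at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = capacity
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class GatewayRateLimiter:
    """Keeps one bucket per client ID, created on the client's first request."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.buckets: Dict[str, TokenBucket] = {}

    def allow(self, client_id: str) -> bool:
        if client_id not in self.buckets:
            self.buckets[client_id] = TokenBucket(self.rate, self.capacity, self.clock)
        return self.buckets[client_id].allow()
```

A request for which `allow()` returns `False` would normally be answered with HTTP 429 (Too Many Requests) and never reach the LLM Orchestration Service.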

3. LLM Orchestration Service:

Function:

- Manages the interaction with the Large Language Model (LLM).

- Handles query analysis, intent recognition, entity extraction, and response generation.

- Coordinates data retrieval from the other components.

Interaction:

- Receives user queries from the API Gateway.

- Sends queries to the LLM for processing.

- Retrieves data from the Vector Database, Payment Network Database, and External APIs.

- Sends the retrieved data to the LLM for response generation.

- Sends LLM-generated responses to the API Gateway.

Examples: LangChain, LlamaIndex

4. Large Language Model (LLM):

Function:

- Processes natural language queries.

- Understands user intent and extracts relevant entities.

- Generates vector embeddings (in practice, usually through a dedicated embedding model).

- Generates natural language responses.

- Generates SQL queries and API requests.

Interaction:

- Receives queries and data from the LLM Orchestration Service.

- Returns processed data and responses to the LLM Orchestration Service.

Examples: GPT-4, Llama, BERT (an encoder model, suited to embeddings and intent classification rather than response generation)
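
Because the LLM produces SQL and API requests as free text, it is safer to treat its output as untrusted input and validate it before anything executes. The sketch below does this for API parameters. It assumes the model is prompted to return a JSON object with `action` and `transaction_id` fields. Those field names are hypothetical, as is the allow-list, which mirrors the external services this article lists (fraud detection, currency conversion, geolocation).

```python
import json
from typing import Any, Dict

# Hypothetical allow-list of external actions the model may request.
ALLOWED_ACTIONS = {"fraud_score", "currency_convert", "geolocate"}


def parse_api_params(raw_llm_output: str) -> Dict[str, Any]:
    """Validate the LLM's JSON output; raise ValueError instead of guessing."""
    try:
        params = json.loads(raw_llm_output)
    except json.JSONDecodeError as exc:
        raise ValueError(f"LLM output is not valid JSON: {exc}") from exc
    if not isinstance(params, dict):
        raise ValueError("expected a JSON object")
    action = params.get("action")
    if not isinstance(action, str) or action not in ALLOWED_ACTIONS:
        raise ValueError(f"unsupported action: {action!r}")
    txn_id = params.get("transaction_id")
    if not isinstance(txn_id, str) or not txn_id.isdigit():
        raise ValueError("transaction_id must be a string of digits")
    # Return only the validated fields; anything extra the model added is dropped.
    return {"action": action, "transaction_id": txn_id}


print(parse_api_params('{"action": "fraud_score", "transaction_id": "1000", "debug": true}'))
# {'action': 'fraud_score', 'transaction_id': '1000'}
```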

5. Vector Database:

Function:

- Stores vector embeddings of payment-related data.

- Enables efficient similarity search for contextual data retrieval.

Interaction:

- Receives query vectors from the LLM Orchestration Service.

- Returns relevant data chunks based on similarity search.

- Provides the references (e.g., chunk or document IDs) needed to retrieve the original data from the Original Data Store.

Examples: Pinecone, FAISS (a similarity-search library), Chroma
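
Under the hood, a similarity search ranks stored embeddings by how close they are to the query vector. The sketch below uses cosine similarity over an in-memory dictionary. The chunk IDs and 3-dimensional vectors are toy assumptions; real embeddings come from an embedding model and have hundreds of dimensions. Vector databases perform the same ranking at scale, usually with approximate nearest-neighbor indexes rather than this exhaustive scan.

```python
import heapq
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0  # Zero vectors match nothing.


def top_k(query: Sequence[float], index: Dict[str, Sequence[float]], k: int) -> List[Tuple[str, float]]:
    """Exact (brute-force) search: score every stored vector and keep the k best."""
    scored = ((chunk_id, cosine_similarity(query, vec)) for chunk_id, vec in index.items())
    return heapq.nlargest(k, scored, key=lambda pair: pair[1])


# Toy "embeddings" keyed by hypothetical chunk IDs.
index = {
    "refund_policy": [1.0, 0.0, 0.0],
    "settlement_times": [0.0, 1.0, 0.0],
    "chargeback_rules": [0.9, 0.1, 0.0],
}
print([chunk_id for chunk_id, _ in top_k([1.0, 0.0, 0.0], index, k=2)])
# ['refund_policy', 'chargeback_rules']
```

The returned chunk IDs are the references that the Original Data Store would use to look up the full records.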

6. RAG (Retrieval-Augmented Generation):

Function:

- The RAG system retrieves relevant data from the vector database.

- After retrieving the necessary context, the RAG system passes it to the LLM, which uses the retrieved data to generate a more accurate, context-aware response.

Interaction:

- Retrieves data from the vector database.

- Sends the data to the LLM.
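
In practice, the hand-off from retrieval to generation is often just prompt assembly. The sketch below assumes the retrieved chunks arrive as plain strings ordered by relevance. The prompt wording, the function name, and the character budget are hypothetical; a real system would budget in tokens for the specific model.

```python
from typing import List, Sequence


def build_rag_prompt(question: str, chunks: Sequence[str], max_context_chars: int = 2000) -> str:
    """Number the retrieved chunks and prepend them to the question, within a size budget."""
    selected: List[str] = []
    used = 0
    for i, chunk in enumerate(chunks, start=1):
        entry = f"[{i}] {chunk.strip()}"
        if used + len(entry) > max_context_chars:
            break  # Chunks arrive best-first, so stop once the budget is spent.
        selected.append(entry)
        used += len(entry)
    context = "\n".join(selected) if selected else "(no relevant context found)"
    return (
        "Answer the payment question using only the context below. "
        "If the context is insufficient, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


prompt = build_rag_prompt("When will transaction #1000 settle?",
                          ["Settlement takes up to 3 business days."])
```

Numbering the chunks lets the model cite which context it relied on. Instructing it to say when the context is insufficient reduces invented answers.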

7. Payment Network Database:

Function:

- Stores structured transaction data, customer information, and other payment-related data.

- Serves as a reliable source of transactional information.

Interaction:

- Receives SQL queries from the LLM Orchestration Service.

- Returns query results to the LLM Orchestration Service.
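
Because these SQL queries are machine-generated, the database side should accept only reads. The sketch below uses Python's built-in `sqlite3` as a stand-in for the payment database. It assumes the model is prompted to emit `?` placeholders, with extracted entities bound as parameters. The function name, row cap, and sample table are hypothetical; the sample row mirrors the example use case later in this article. A lexical check rejects anything other than a single SELECT. SQLite's `PRAGMA query_only` then makes the connection refuse writes as a second line of defense, so the connection should be dedicated to reporting.

```python
import sqlite3
from typing import Any, List, Sequence, Tuple


def run_readonly_query(conn: sqlite3.Connection, sql: str,
                       params: Sequence[Any] = (), max_rows: int = 100) -> List[Tuple]:
    """Execute one LLM-generated SELECT on a dedicated reporting connection."""
    statement = sql.strip().rstrip(";").strip()
    if ";" in statement:
        raise ValueError("only a single statement is allowed")
    if not statement.lower().startswith("select"):
        raise ValueError("only SELECT statements are allowed")
    # Second line of defense: from now on SQLite rejects any write on this connection.
    conn.execute("PRAGMA query_only = ON")
    cursor = conn.execute(statement, params)
    return cursor.fetchmany(max_rows)  # Cap the rows handed back to the LLM.


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE transactions (id INTEGER PRIMARY KEY, status TEXT, processed_on TEXT)")
conn.execute("INSERT INTO transactions VALUES (1000, 'processed', '2024-04-03')")
conn.commit()
print(run_readonly_query(conn, "SELECT status, processed_on FROM transactions WHERE id = ?", (1000,)))
# [('processed', '2024-04-03')]
```

The lexical check is deliberately conservative. For example, it also rejects `WITH` queries and any statement containing a literal `;`.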

8. External APIs:

Function:

- Provide access to external services such as fraud detection, currency conversion, and geolocation.

- Extend the platform's capabilities with external data.

Interaction:

- Receive API requests from the LLM Orchestration Service.

- Return API responses to the LLM Orchestration Service.
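
External services can fail transiently, so a request wrapper typically retries with backoff before the orchestration layer gives up and reports an error. The sketch below is a minimal version. The function name, the retried exception types, and the delays are assumptions. The HTTP client itself is left abstract as any zero-argument callable, and the sleep function is injectable for testing.

```python
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def call_with_retries(call: Callable[[], T], attempts: int = 3, base_delay: float = 0.1,
                      retry_on: Tuple[Type[BaseException], ...] = (ConnectionError, TimeoutError),
                      sleep: Callable[[float], None] = time.sleep) -> T:
    """Retry transient failures with exponential backoff: base_delay, 2x, 4x, ..."""
    for attempt in range(attempts):
        try:
            return call()
        except retry_on:
            if attempt == attempts - 1:
                raise  # Out of attempts: let the orchestration layer report the error.
            sleep(base_delay * 2 ** attempt)
    raise ValueError("attempts must be at least 1")
```

Retries like this suit read-only lookups such as fraud scoring or currency rates. Any call that initiates a payment should not be retried blindly unless the external API supports idempotency keys.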

9. Data Storage (Original Data Store):

Function:

- Stores the original data used to create the vector embeddings.

- Lets the system retrieve the full, detailed information associated with the vector chunks.

Interaction:

- Receives requests from the LLM Orchestration Service to retrieve original data.

- Returns the requested data.

10. Security and Authentication Service:

Function:

- Handles user authentication and authorization.

- Ensures secure access to the platform and data.

- Provides encryption and data protection.

Interaction:

- Authenticates user requests received by the API Gateway.

- Authorizes access to specific data and functionality.
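
Authorization matters doubly here: anything placed in the LLM's context can surface in its answer. Access checks therefore belong before retrieved rows reach the model, not after it responds. The sketch below shows role- and ownership-based filtering. The roles, fields, and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional


@dataclass(frozen=True)
class User:
    user_id: str
    role: str                          # Hypothetical roles: "admin" or "merchant"
    merchant_id: Optional[str] = None  # Set only for merchant users


@dataclass(frozen=True)
class Transaction:
    txn_id: str
    merchant_id: str
    status: str


def can_view(user: User, txn: Transaction) -> bool:
    if user.role == "admin":
        return True
    if user.role == "merchant":
        # Merchants may only see their own transactions.
        return user.merchant_id is not None and user.merchant_id == txn.merchant_id
    return False  # Deny by default for unknown roles.


def visible_transactions(user: User, txns: Iterable[Transaction]) -> List[Transaction]:
    """Filter rows before they are added to the LLM's context."""
    return [t for t in txns if can_view(user, t)]
```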

Request Flow:

- A user submits a query through the UI.

- The API Gateway receives the query and routes it to the LLM Orchestration Service.

- The LLM Orchestration Service sends the query to the LLM for analysis.

- The LLM (or an embedding model) generates a query vector, which the LLM Orchestration Service sends to the Vector Database.

- The Vector Database returns relevant data chunks (contextual data).

- As the RAG retrieval step, the LLM Orchestration Service uses those chunks to fetch the corresponding original data from the Original Data Store.

- The LLM Orchestration Service determines whether additional data is needed and retrieves it from the Payment Network Database or External APIs.

- The LLM Orchestration Service sends the combined data to the LLM for response generation.

- The LLM generates a natural language response.

- The LLM Orchestration Service sends the response to the API Gateway.

- The API Gateway sends the response to the UI.

- The UI displays the response to the user.

Pseudocode

```
FUNCTION IntelliQueryPaynet_Inquiry(userQuery):
    # 1. LLM: Analyze the user query
    intent, entities = LLM_AnalyzeQuery(userQuery)

    # 2. Vectorize the query
    queryVector = LLM_GenerateQueryVector(userQuery)

    # 3. Vector database search
    relevantContextChunks = VectorDB_Search(queryVector, topK)  # Retrieve the top-k most relevant context chunks

    # 4. Retrieve the original contextual data
    contextualData = RetrieveOriginalData(relevantContextChunks)

    # 5. Determine the data source (Payment DB, external API, or context alone)
    IF intent requires specific transaction data from the Payment DB:
        dataSource = "PaymentDB"
    ELSE IF intent requires external service data (e.g., fraud scoring):
        dataSource = "ExternalAPI"
    ELSE:
        dataSource = "ContextOnly"  # Contextual data is sufficient

    # Both result slots start as null; only the selected source fills one in
    dbResults = null
    apiResults = null

    # 6. Generate a structured query (if needed)
    IF dataSource == "PaymentDB":
        sqlQuery = LLM_GenerateSQL(intent, entities)
        IF sqlQuery == ERROR:
            RETURN "Error: Failed to generate SQL query."
    ELSE IF dataSource == "ExternalAPI":
        apiParams = LLM_GenerateAPIParams(intent, entities)
        IF apiParams == ERROR:
            RETURN "Error: Failed to generate API parameters."
    # ContextOnly needs no structured query

    # 7. Execute the structured query (if needed)
    IF dataSource == "PaymentDB":
        dbResults = ExecuteSQLQuery(sqlQuery, "PaymentDB")
        IF dbResults == ERROR:
            RETURN "Error: Failed to retrieve data from PaymentDB."
    ELSE IF dataSource == "ExternalAPI":
        apiResults = ExecuteAPIRequest(apiParams, "ExternalAPI")
        IF apiResults == ERROR:
            RETURN "Error: Failed to retrieve data from ExternalAPI."

    # 8. Combine data sources
    combinedData = CombineData(contextualData, dbResults, apiResults)

    # 9. LLM: Generate the response
    response = LLM_GenerateResponse(combinedData, intent)
    IF response == ERROR:
        RETURN "Error: Failed to generate response."

    # 10. Return the response
    RETURN response


# Helper functions (examples):

FUNCTION LLM_AnalyzeQuery(query):
    # Use the LLM to extract intent and entities
    RETURN LLM(query, "Analyze intent and entities")

FUNCTION LLM_GenerateQueryVector(query):
    # Generate a vector representation of the query (in practice, usually with an embedding model)
    RETURN LLM(query, "Generate vector representation")

FUNCTION VectorDB_Search(queryVector, topK):
    # Search the vector database for the top-k most similar chunks
    RETURN VectorDB.search(queryVector, topK)

FUNCTION RetrieveOriginalData(contextChunks):
    # Retrieve the original data associated with the context chunks
    RETURN OriginalDataStore.retrieve(contextChunks)

FUNCTION LLM_GenerateSQL(intent, entities):
    # Use the LLM to generate a SQL query from the intent and entities
    RETURN LLM(intent, entities, "Generate SQL query")

FUNCTION ExecuteSQLQuery(sqlQuery, database):
    # Execute the SQL query against the database
    TRY:
        results = database.execute(sqlQuery)
        RETURN results
    EXCEPT:
        RETURN ERROR

FUNCTION LLM_GenerateAPIParams(intent, entities):
    # Use the LLM to generate API parameters
    RETURN LLM(intent, entities, "Generate API parameters")

FUNCTION ExecuteAPIRequest(apiParams, apiEndpoint):
    # Execute the API request
    TRY:
        results = API(apiEndpoint, apiParams)
        RETURN results
    EXCEPT:
        RETURN ERROR

FUNCTION CombineData(context, dbResults, apiResults):
    # Combine data from the different sources
    combined = context
    IF dbResults != null:
        combined = combined + dbResults  # Concatenate, or use more complex merge logic
    IF apiResults != null:
        combined = combined + apiResults  # Concatenate, or use more complex merge logic
    RETURN combined

FUNCTION LLM_GenerateResponse(data, intent):
    # Use the LLM to generate a natural language response
    RETURN LLM(data, intent, "Generate response")
```
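
For readers who want something executable, here is one way to express the same flow in Python. It is a sketch under assumptions: every component (LLM calls, vector search, data store, database, external API) is injected as a plain callable. The field names and signatures are therefore hypothetical stand-ins rather than any particular library's API. Combined data is kept as a labeled dictionary instead of being concatenated, so the response generator can tell context apart from database rows and API results.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Optional, Tuple


@dataclass
class InquiryPipeline:
    """Hypothetical wiring of the pseudocode; each field is an injected component."""
    analyze: Callable[[str], Tuple[str, Dict[str, Any]]]     # LLM_AnalyzeQuery
    embed: Callable[[str], List[float]]                       # LLM_GenerateQueryVector
    search: Callable[[List[float], int], List[str]]           # VectorDB_Search -> chunk IDs
    fetch_original: Callable[[List[str]], List[str]]          # RetrieveOriginalData
    route: Callable[[str], str]                               # "PaymentDB" | "ExternalAPI" | "ContextOnly"
    generate_sql: Callable[[str, Dict[str, Any]], str]        # LLM_GenerateSQL
    run_sql: Callable[[str], List[tuple]]                     # ExecuteSQLQuery
    generate_api_params: Callable[[str, Dict[str, Any]], Dict[str, Any]]  # LLM_GenerateAPIParams
    call_api: Callable[[Dict[str, Any]], Dict[str, Any]]      # ExecuteAPIRequest
    generate_response: Callable[[Dict[str, Any], str], str]   # LLM_GenerateResponse
    top_k: int = 3

    def inquire(self, user_query: str) -> str:
        intent, entities = self.analyze(user_query)
        chunk_ids = self.search(self.embed(user_query), self.top_k)
        context = self.fetch_original(chunk_ids)
        source = self.route(intent)

        db_results: Optional[List[tuple]] = None        # Sources that are not queried stay None.
        api_results: Optional[Dict[str, Any]] = None
        try:
            if source == "PaymentDB":
                db_results = self.run_sql(self.generate_sql(intent, entities))
            elif source == "ExternalAPI":
                api_results = self.call_api(self.generate_api_params(intent, entities))
        except Exception as exc:  # Mirrors the pseudocode's ERROR returns.
            return f"Error: Failed to retrieve data from {source} ({exc})."

        combined = {"context": context, "db_results": db_results, "api_results": api_results}
        try:
            return self.generate_response(combined, intent)
        except Exception as exc:
            return f"Error: Failed to generate response ({exc})."


# Usage with trivial stubs standing in for real components.
pipeline = InquiryPipeline(
    analyze=lambda q: ("payment_status", {"transaction_id": "1000"}),
    embed=lambda q: [1.0, 0.0],
    search=lambda vec, k: ["chunk-7"],
    fetch_original=lambda ids: ["Processed payments settle within 3 business days."],
    route=lambda intent: "PaymentDB",
    generate_sql=lambda intent, ents: "SELECT status FROM transactions WHERE id = 1000",
    run_sql=lambda sql: [("processed",)],
    generate_api_params=lambda intent, ents: {},
    call_api=lambda params: {},
    generate_response=lambda data, intent: f"Transaction status: {data['db_results'][0][0]}",
)
print(pipeline.inquire("Has transaction #1000 been processed?"))  # Transaction status: processed
```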

Key Enhancements and Explanations:

Explicit Data Source Determination:

- The dataSource variable makes explicit whether to query the Payment DB, call an External API, or rely solely on the contextual data from the vector database.

Clear Data Combination:

- The CombineData() function merges contextual data with results from the database or API in one place, which keeps the merge logic easy to extend.

Error Handling:

- Basic error handling covers SQL and API parameter generation, SQL query execution, API requests, and response generation.

Context-First Approach:

- The system retrieves relevant contextual information from the vector database before resorting to structured queries. This improves efficiency and helps with the nuances of natural language.

Modular Design:

- The pseudocode is broken into modular functions for better readability and maintainability.

Null Checking:

- CombineData() checks dbResults and apiResults for null before merging, so sources that were not queried do not cause errors.

The LLM-Driven Network Payment Inquiry Platform provides value to many stakeholders across the payment network ecosystem. Here is a detailed breakdown:

1. Enhanced Customer Support:

- 24/7 Availability: Provides instant support, reducing wait times and improving customer satisfaction.

- Natural Language Interaction: Lets users ask questions in plain language, simplifying the support process.

- Accurate and Consistent Answers: Delivers consistent, accurate information, reducing the likelihood of errors.

- Reduced Support Costs: Automates responses to common inquiries, freeing human agents to handle complex issues.

- Faster Issue Resolution: Provides quicker access to information, leading to faster resolution of payment issues.

2. Improved Operational Efficiency:

- Automated Information Retrieval: Streamlines access to payment data, policies, and documentation.

- Reduced Manual Effort: Minimizes the need for manual searches and data retrieval.

- Faster Decision-Making: Provides quick access to relevant information, enabling faster decisions.

- Increased Productivity: Frees staff to focus on more strategic tasks.

3. Enhanced Fraud Detection and Prevention:

- Real-Time Data Analysis: Enables real-time analysis of transaction data to identify potential fraud.

- Contextual Fraud Insights: Provides contextual information that helps identify and prevent fraudulent activity.

- Faster Fraud Investigations: Streamlines the investigation of potential fraud cases.

4. Simplified Compliance and Regulatory Inquiries:

- Easy Access to Compliance Information: Provides quick access to payment policies, regulations, and procedures.

- Improved Compliance Management: Simplifies the management of compliance requirements.

- Reduced Compliance Risk: Helps ensure that payment operations comply with relevant regulations.

5. Improved Data Access and Knowledge Management:

- Centralized Knowledge Base: Creates a centralized repository of payment information.

- Improved Data Accessibility: Makes payment data and documentation more accessible to authorized users.

- Enhanced Knowledge Sharing: Facilitates knowledge sharing among payment processing teams.

6. Enhanced User Experience:

- Intuitive Interface: Provides a user-friendly interface that simplifies access to payment information.

- Personalized Support: Can provide personalized support based on user history and preferences.

- Increased User Satisfaction: Improves the overall user experience through faster, more convenient access to information.

7. Cost Reduction:

- Reduced Customer Support Costs: Automates responses to common inquiries, reducing the need for human agents.

- Reduced Operational Costs: Streamlines access to information and automates routine tasks.

- Reduced Fraud Losses: Helps prevent fraudulent activity, reducing financial losses.

Example Use Case

A merchant asks:

“Can you check whether transaction #1000 has been processed successfully?”

The LLM-Driven Network Payment engine replies:

“Transaction #1000 was successfully processed on April 3, 2024. The amount will appear in the customer’s account within the next 3 business days.”

This eliminates back-and-forth with customer service and speeds up resolution.
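
The phrase "within the next 3 business days" hides a small date calculation. The orchestration layer can compute it deterministically rather than leaving it to the model. The sketch below assumes business days exclude only Saturdays and Sundays; a production version would also need a bank-holiday calendar. The function name is hypothetical.

```python
from datetime import date, timedelta


def add_business_days(start: date, days: int) -> date:
    """Step forward one calendar day at a time, counting only Monday to Friday."""
    current = start
    remaining = days
    while remaining > 0:
        current += timedelta(days=1)
        if current.weekday() < 5:  # weekday(): Monday == 0 ... Friday == 4
            remaining -= 1
    return current


# Processed on Wednesday, April 3, 2024 -> funds expected by Monday, April 8, 2024.
print(add_business_days(date(2024, 4, 3), 3))  # 2024-04-08
```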

Conclusion:

An LLM-powered Network Payment Platform is a transformative solution designed to streamline payment operations, improve customer support, strengthen fraud detection, and simplify regulatory compliance. By harnessing advanced language intelligence, it helps organizations make smarter decisions, reduce operational costs, and deliver a superior user experience.