Artificial Intelligence, or AI for short, is everywhere these days: it assists in complex decision-making, automates repetitive tasks, and forecasts trends by analysing historical data. But despite its impressive applications, AI is just the surface layer. Beneath it, two core methodologies drive its capabilities: Machine Learning (ML) and Deep Learning (DL).

Both ML and DL revolve around the same essential goal: enabling machines to learn patterns from vast amounts of data. But how does a machine actually “learn”? What mechanisms power this process behind the scenes?

This is where the perceptron comes into play. It is a foundational concept that acts as the basic building block of neural networks. In this article, we’ll break down what a perceptron is, how it works, and how it evolves into more powerful models like the Multilayer Perceptron (MLP), paving the way to understanding more advanced learning systems.

Artificial neural network algorithms are computational models inspired by the structure and function of the human brain, designed to learn patterns from data. That data is typically either a large historical dataset or a simulated dataset.

There are several types of artificial neural networks. The simple perceptron and multilayer perceptron algorithms are foundational in this field. So, what exactly is a perceptron?

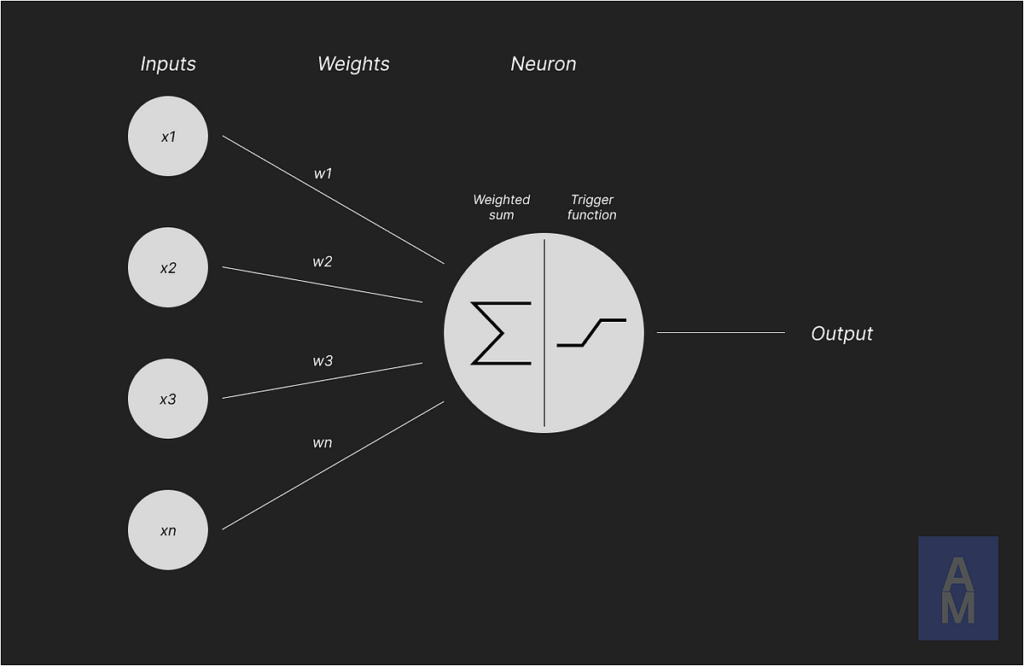

A perceptron is a simplified mathematical representation of a biological neuron.

In Machine Learning, a perceptron is primarily used for binary classification, while in Deep Learning it is a fundamental building block of neural network layers. The perceptron is responsible for deciding whether its inputs belong to the positive (true) or negative (false) class. The classic perceptron uses a step function and outputs exactly 0 or 1; with a sigmoid activation instead, the result is a number between 0 and 1 that can be interpreted as the probability of being true (1) rather than false (0).

The perceptron consists of several components, or stages, which generally happen in sequence. These stages are:

Input

The perceptron requires input data that can be represented as a vector: x = [x1, x2, …, xn]

Weights

Each weight wi is tied to a specific input element xi. Its value represents how important that input element is to the neuron.

Once the weights are paired with their corresponding inputs, each pair is multiplied (x1 * w1, x2 * w2, …, xn * wn) and the results are summed to form the weighted sum. In most formulations, a bias term b is also added to this sum, shifting the point at which the neuron activates.
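The weighted sum is easy to express in code. Below is a minimal plain-Python sketch; the function name `weighted_sum` and the example values are hypothetical, and the optional bias `b` is an assumption taken from the standard formulation (leave it at 0 to get exactly the plain weighted sum).

```python
def weighted_sum(x, w, b=0.0):
    """Multiply each input x_i by its weight w_i, add the products, then add the bias b."""
    if len(x) != len(w):
        raise ValueError("x and w must have the same length")
    return sum(xi * wi for xi, wi in zip(x, w)) + b


# Hypothetical example values: three inputs, three weights, and a bias.
x = [1.0, 2.0, 3.0]
w = [0.5, -1.0, 0.25]
print(weighted_sum(x, w, b=0.25))  # 0.5 - 2.0 + 0.75 + 0.25 → -0.5
```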

Activation

An activation function processes the result of the weighted sum. Common activation functions include Sigmoid, ReLU, and Softmax (the latter is normally applied across a whole output layer rather than to a single neuron).
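Here is a minimal sketch of the three activation functions named above, plus the step function used by the classic perceptron (an addition for comparison, not part of the list above). It uses plain Python and the standard `math` module; the function names are our own.

```python
import math


def sigmoid(z):
    """Squashes any real number into (0, 1); often read as a probability."""
    return 1.0 / (1.0 + math.exp(-z))


def relu(z):
    """Rectified Linear Unit: passes positive values through, zeroes out negatives."""
    return max(0.0, z)


def softmax(zs):
    """Turns a list of scores into probabilities that sum to 1."""
    m = max(zs)  # subtracting the max keeps math.exp from overflowing
    exps = [math.exp(z - m) for z in zs]
    total = sum(exps)
    return [e / total for e in exps]


def step(z):
    """Heaviside step used by the classic perceptron: outputs exactly 0 or 1."""
    return 1 if z > 0 else 0


print(sigmoid(0.0))           # 0.5
print(relu(-0.5), relu(2.0))  # 0.0 2.0
print(softmax([1.0, 1.0]))    # [0.5, 0.5]
```

Note that Softmax takes a whole list of scores rather than a single number, which is why it usually appears only in output layers for multiclass problems.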

Output

The final result of the perceptron is a scalar produced by the activation function. There are further stages after this one, such as the “Weights update” stage, which is responsible for updating the weights of the current perceptron. To carry out this process, a loss function must first be applied (we’ll go deeper into this later) to measure the error between the output and the expected value; that error then determines how much each weight should be adjusted. As we can see, this process can be split into two separate stages: “Error stage” and “Weights update”.
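The error and weights-update stages can be sketched with Rosenblatt’s classic perceptron learning rule. This is a swap-in worth naming plainly: instead of the gradient of a loss function, it uses the raw error (target minus prediction) to nudge each weight. The step activation, the bias `b`, the zero initialisation, the learning rate, and the AND-gate data are all illustrative assumptions.

```python
def predict(x, w, b):
    """Forward pass: weighted sum plus bias, then a step activation (0 or 1)."""
    z = sum(xi * wi for xi, wi in zip(x, w)) + b
    return 1 if z > 0 else 0


def train_perceptron(X, Y, learning_rate=1.0, max_epochs=100):
    """Classic perceptron learning rule: w <- w + lr * (y - y_hat) * x."""
    w = [0.0] * len(X[0])
    b = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for x, y in zip(X, Y):
            error = y - predict(x, w, b)  # error stage: 0 if correct, +1 or -1 otherwise
            if error != 0:
                mistakes += 1
                # weights update stage: move each weight in proportion to its input
                w = [wi + learning_rate * error * xi for wi, xi in zip(w, x)]
                b += learning_rate * error
        if mistakes == 0:  # every sample classified correctly, so stop early
            break
    return w, b


X = [[0, 0], [0, 1], [1, 0], [1, 1]]
Y = [0, 0, 0, 1]  # logical AND, which is linearly separable
w, b = train_perceptron(X, Y)
print(w, b)                           # [2.0, 1.0] -2.0
print([predict(x, w, b) for x in X])  # [0, 0, 0, 1]
```

The perceptron convergence theorem guarantees this loop stops making mistakes on linearly separable data such as AND; on a problem like XOR it would never converge.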

In short, a simple perceptron has a single computational layer containing just one neuron. It is the most basic neural network model.

As we’ve seen, a perceptron consists of two parts: an input layer and an output layer, where only the output layer performs computation. In the case of a simple perceptron, all the processing is handled by a single neuron in the output layer. But what happens in a multilayer perceptron model?

A multilayer perceptron consists of three kinds of layers:

Input Layer

Like the simple perceptron model, the multilayer perceptron has its own input layer. However, the neurons in this layer pass the received data on to the next layer without processing it. Each neuron represents a specific feature of the problem being addressed.

Hidden Layers

These layers consist of multiple neurons. Each neuron takes as input the outputs of all neurons in the previous layer, computes a new weighted sum, and passes it to its activation function. After processing the data, the neuron sends its output to the neurons of the next layer. The number of hidden layers and the number of neurons per layer are chosen based on the complexity of the problem.

Output Layer

This is where the network’s final result is generated. The number of neurons in this layer depends on the problem to be solved: for instance, 1 neuron for binary classification, or typically one neuron per class (usually combined with Softmax) for a multiclass classification problem.

You may have noticed that one stage has not been mentioned yet: the error stage. How is it handled in a multilayer perceptron model?

The multilayer perceptron model’s behaviour is divided into three different stages:

Forward Propagation

Forward propagation involves passing data from the input layer through each hidden layer to the output layer, applying transformations via weighted sums and activation functions at every step. Each neuron calculates the weighted sum of its inputs and weights, then processes the result using its activation function (each layer of neurons may use its own activation function). This result is then sent to the neurons of the next layer.
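A minimal sketch of forward propagation in plain Python, assuming each layer is described by a hypothetical `(weights, biases, activation)` tuple with one weight row per neuron. The 2-2-1 network and its weights below are made up purely for illustration.

```python
import math


def relu(z):
    return max(0.0, z)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def dense(inputs, weights, biases, activation):
    """One fully connected layer: every neuron sees ALL outputs of the previous layer."""
    return [activation(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]


def forward(x, layers):
    """Forward propagation: feed the output of each layer into the next one."""
    activations = x  # the input layer passes the features on unchanged
    for weights, biases, activation in layers:
        activations = dense(activations, weights, biases, activation)
    return activations


# Made-up 2-2-1 network: ReLU hidden layer, one sigmoid output neuron.
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, -0.5], relu),
    ([[1.0, 2.0]], [0.0], sigmoid),
]
print(forward([1.0, 2.0], layers))  # hidden = [0.0, 1.0], output = [sigmoid(2.0)] ≈ [0.881]
```

Each layer here carries its own activation function, matching the idea that different layers may use different ones.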

Error Calculation

Once forward propagation has finished, the next step is the error calculation. There are different ways to calculate the error using loss functions: for instance, Mean Squared Error (MSE) for regression, Binary Cross-Entropy for binary classification, and so on.
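Both loss functions mentioned above fit in a few lines. This is a plain-Python sketch; the function names are ours, and the clipping constant `eps` is an assumption added so the logarithm is never taken of zero.

```python
import math


def mse(y_true, y_pred):
    """Mean Squared Error: average of squared differences (typical for regression)."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def binary_cross_entropy(y_true, y_pred, eps=1e-12):
    """Binary Cross-Entropy for labels in {0, 1} and predicted probabilities in (0, 1)."""
    total = 0.0
    for t, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1 - eps)  # clip so log(0) never happens
        total += -(t * math.log(p) + (1 - t) * math.log(1 - p))
    return total / len(y_true)


print(mse([1.0, 2.0], [1.0, 4.0]))               # (0 + 4) / 2 → 2.0
print(binary_cross_entropy([1, 0], [0.9, 0.1]))  # confident and correct: low loss
print(binary_cross_entropy([1, 0], [0.5, 0.5]))  # undecided: ln(2) ≈ 0.693
```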

Backpropagation

The last stage is the most important, because this is where learning happens. Backpropagation computes how much each weight contributed to the error (the error gradient) by propagating the error backwards from the output layer towards the input layer; an optimiser such as gradient descent then uses these gradients to update the weights of neurons across all layers. As training repeats over the data (each complete pass over the training dataset is called an epoch), the model gradually reduces its error. That means the model is learning.
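To make backpropagation concrete, here is a minimal plain-Python sketch of a tiny MLP (2 inputs, one sigmoid hidden layer, 1 sigmoid output) trained with binary cross-entropy and full-batch gradient descent. The architecture, learning rate, epoch count, XOR dataset, and all function names are illustrative assumptions; real projects would normally rely on a framework with automatic differentiation.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def init_params(n_in, n_hidden, seed=0):
    """Small random weights for a 2-layer MLP: n_in -> n_hidden (sigmoid) -> 1 (sigmoid)."""
    rng = random.Random(seed)
    return {
        "W1": [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)],
        "b1": [0.0] * n_hidden,
        "W2": [rng.uniform(-1, 1) for _ in range(n_hidden)],
        "b2": 0.0,
    }


def forward(p, x):
    """Forward propagation: hidden activations h and output probability y_hat."""
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(p["W1"], p["b1"])]
    y_hat = sigmoid(sum(w * hj for w, hj in zip(p["W2"], h)) + p["b2"])
    return h, y_hat


def loss_and_grads(p, X, Y):
    """Mean binary cross-entropy and its gradients, computed by backpropagation."""
    n_hidden, n_in = len(p["b1"]), len(X[0])
    g = {"W1": [[0.0] * n_in for _ in range(n_hidden)], "b1": [0.0] * n_hidden,
         "W2": [0.0] * n_hidden, "b2": 0.0}
    loss = 0.0
    for x, y in zip(X, Y):
        h, y_hat = forward(p, x)
        q = min(max(y_hat, 1e-12), 1 - 1e-12)  # avoid log(0)
        loss += -(y * math.log(q) + (1 - y) * math.log(1 - q))
        d_out = y_hat - y  # output error: dL/dz for sigmoid + cross-entropy
        g["b2"] += d_out
        for j in range(n_hidden):
            g["W2"][j] += d_out * h[j]
            # send the error back through W2 and the derivative of the hidden sigmoid
            d_h = d_out * p["W2"][j] * h[j] * (1 - h[j])
            g["b1"][j] += d_h
            for i in range(n_in):
                g["W1"][j][i] += d_h * x[i]
    n = len(X)
    g["W1"] = [[v / n for v in row] for row in g["W1"]]
    g["b1"] = [v / n for v in g["b1"]]
    g["W2"] = [v / n for v in g["W2"]]
    g["b2"] /= n
    return loss / n, g


def train(p, X, Y, learning_rate=0.5, epochs=2000):
    """Full-batch gradient descent: each epoch is one full pass over the dataset."""
    for _ in range(epochs):
        _, g = loss_and_grads(p, X, Y)
        for j in range(len(p["b1"])):
            for i in range(len(p["W1"][j])):
                p["W1"][j][i] -= learning_rate * g["W1"][j][i]
            p["b1"][j] -= learning_rate * g["b1"][j]
            p["W2"][j] -= learning_rate * g["W2"][j]
        p["b2"] -= learning_rate * g["b2"]
    return p


X = [[0, 0], [0, 1], [1, 0], [1, 1]]
Y = [0, 1, 1, 0]  # XOR, which a single perceptron cannot learn
params = init_params(n_in=2, n_hidden=4, seed=42)
before, _ = loss_and_grads(params, X, Y)
train(params, X, Y)
after, _ = loss_and_grads(params, X, Y)
print(f"loss before: {before:.3f}, after: {after:.3f}")
```

XOR is a common choice here because its classes are not linearly separable, so the hidden layer is what makes fitting it possible. How close the loss gets to zero depends on the random initialisation, so the sketch only reports the loss before and after training.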

The perceptron may seem like a simple model at first glance, but it laid the groundwork for modern neural networks. Understanding how it works, from weighted inputs and activation functions to error calculation, is essential to grasping the fundamentals of how machines learn from data.

As we’ve seen, the transition from a simple perceptron to a multilayer perceptron (MLP) introduces the idea of hidden layers and non-linear transformations, allowing models to solve more complex problems, including ones that are not linearly separable. These foundational ideas are at the heart of both Machine Learning and Deep Learning systems.

In future articles, we’ll build on this foundation by exploring key concepts like forward propagation, backpropagation, and loss functions: the mechanisms that truly bring neural networks to life and enable machines to improve with every iteration.