library(Robyn)

packageVersion("Robyn")

## Force multi-core use when running RStudio

Sys.setenv(R_FUTURE_FORK_ENABLE = "true")

options(future.fork.enable = TRUE)

## Check the simulated dataset or load your own dataset

data("dt_simulated_weekly")

head(dt_simulated_weekly)

data("dt_prophet_holidays")

head(dt_prophet_holidays)

# Directory where you want to export results to (will create new folders)

robyn_directory <- "~/Desktop"

InputCollect <- robyn_inputs(

dt_input = dt_simulated_weekly,

dt_holidays = dt_prophet_holidays,

date_var = "DATE", # date format must be "2020-01-01"

dep_var = "revenue", # currently only one dependent variable is allowed

dep_var_type = "revenue", # "revenue" or "conversion" allowed

prophet_vars = c("trend", "season", "holiday"), # "trend", "season", "weekday" & "holiday" allowed

prophet_country = "DE", # 123 countries included in dt_prophet_holidays

context_vars = c("competitor_sales_B", "events"), # e.g. competitors, discounts, unemployment etc.

paid_media_spends = c("tv_S", "ooh_S", "print_S", "facebook_S", "search_S"), # mandatory input

paid_media_vars = c("tv_S", "ooh_S", "print_S", "facebook_I", "search_clicks_P"), # if provided,

# Robyn will use this instead of paid_media_spends for modelling. Media exposure metrics

# typically include, but are not limited to, impressions, GRP etc. If not applicable, use spend instead.

organic_vars = c("newsletter"), # marketing activity without media spend

factor_vars = c("events"), # specify categorical variables in context_vars or organic_vars

window_start = "2016-01-01",

window_end = "2018-12-31",

adstock = "geometric" # geometric, weibull_cdf or weibull_pdf

)

print(InputCollect)

hyper_names(adstock = InputCollect$adstock, all_media = InputCollect$all_media)
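`hyper_names()` lists the hyperparameter names that the `hyperparameters` list has to supply. As a rough illustration of the naming pattern only, the base-R sketch below assumes that geometric adstock needs `alphas`, `gammas` and `thetas` for every media variable (paid and organic) and pastes them together. `build_hyper_names()` is a hypothetical helper, not part of Robyn; under that assumption it yields the same 18 channel entries that the example ranges below define (leaving `train_size` aside).

```r
# Hypothetical helper (not part of Robyn): combine every media variable with
# every hyperparameter suffix, assuming the "<variable>_<suffix>" pattern.
build_hyper_names <- function(media, suffixes = c("alphas", "gammas", "thetas")) {
  grid <- expand.grid(suffix = suffixes, media = media, stringsAsFactors = FALSE)
  sort(paste(grid$media, grid$suffix, sep = "_"))
}

# Paid media variables plus the organic variable used in this walkthrough
media <- c("tv_S", "ooh_S", "print_S", "facebook_I", "search_clicks_P", "newsletter")
sketch_names <- build_hyper_names(media)
print(sketch_names)
```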

plot_adstock(plot = FALSE)

plot_saturation(plot = FALSE)
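`plot_adstock()` and `plot_saturation()` illustrate the two transformations whose ranges are set in the hyperparameters list. For geometric adstock, `theta` is the share of the previous period's carried-over effect that survives into the current period. A minimal base-R sketch of that recursion, written for illustration rather than taken from Robyn's source (the function name is hypothetical):

```r
# Geometric adstock sketch: each period adds the current raw value to
# theta times the previous period's adstocked value.
geometric_adstock <- function(x, theta) {
  stopifnot(is.numeric(x), theta >= 0, theta < 1)
  out <- numeric(length(x))
  carry <- 0
  for (t in seq_along(x)) {
    carry <- x[t] + theta * carry
    out[t] <- carry
  }
  out
}

spend <- c(100, 0, 0, 0)  # hypothetical single burst of spend
adstocked <- geometric_adstock(spend, theta = 0.5)
print(adstocked)
# returns 100, 50, 25, 12.5
```

A theta of 0 means no carry-over, while values closer to 1 make one burst of spend linger for many periods. This is consistent with the example ranges giving TV a higher theta range (0.3 to 0.8) than Facebook or search (0 to 0.3).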

# Example hyperparameter ranges for geometric adstock

hyperparameters <- list(

facebook_I_alphas = c(0.5, 3),

facebook_I_gammas = c(0.3, 1),

facebook_I_thetas = c(0, 0.3),

print_S_alphas = c(0.5, 1),

print_S_gammas = c(0.3, 1),

print_S_thetas = c(0.1, 0.4),

tv_S_alphas = c(0.5, 1),

tv_S_gammas = c(0.3, 1),

tv_S_thetas = c(0.3, 0.8),

search_clicks_P_alphas = c(0.5, 3),

search_clicks_P_gammas = c(0.3, 1),

search_clicks_P_thetas = c(0, 0.3),

ooh_S_alphas = c(0.5, 1),

ooh_S_gammas = c(0.3, 1),

ooh_S_thetas = c(0.1, 0.4),

newsletter_alphas = c(0.5, 3),

newsletter_gammas = c(0.3, 1),

newsletter_thetas = c(0.1, 0.4),

train_size = c(0.5, 0.8)

)
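The `alphas` and `gammas` ranges above parameterize the saturation (diminishing-returns) curve. The sketch below uses a Hill function in which `alpha` controls the shape (values above 1 give an S-curve, values at or below 1 a concave curve) and `gamma` places the inflexion point between the smallest and largest observed input. Placing the inflexion point by linear interpolation over the input range is our reading of Robyn's approach and should be treated as an assumption; `hill_saturation()` is a hypothetical name.

```r
# Hill saturation sketch: x^alpha / (x^alpha + inflexion^alpha).
# Assumption: gamma interpolates the inflexion point between min(x) and max(x).
hill_saturation <- function(x, alpha, gamma) {
  stopifnot(alpha > 0, gamma >= 0, gamma <= 1)
  inflexion <- (1 - gamma) * min(x) + gamma * max(x)
  x^alpha / (x^alpha + inflexion^alpha)
}

x <- c(0, 25, 50, 75, 100)  # hypothetical adstocked media values
saturated <- hill_saturation(x, alpha = 2, gamma = 0.5)
print(round(saturated, 3))
# 0.000 0.200 0.500 0.692 0.800
```

At the inflexion point (here 50) the output is exactly 0.5, which makes a quick sanity check.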

InputCollect <- robyn_inputs(InputCollect = InputCollect, hyperparameters = hyperparameters)

print(InputCollect)

# Check the spend-exposure fit and consider splitting the channel if applicable

InputCollect$ExposureCollect$plot_spend_exposure

# Run all trials and iterations. Use ?robyn_run to check parameter definitions

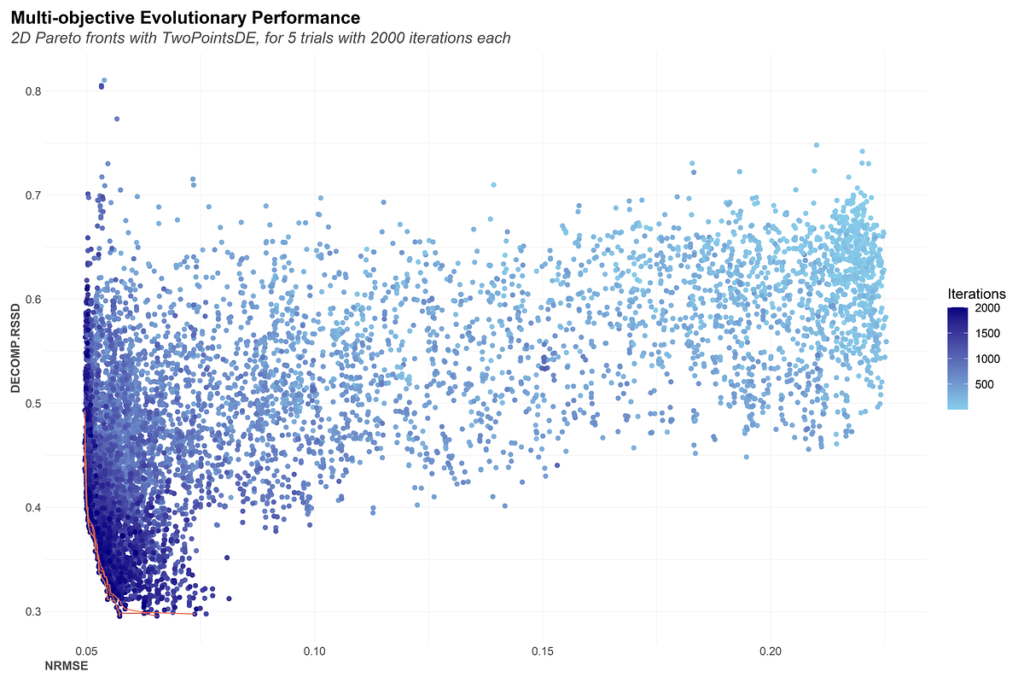

OutputModels <- robyn_run(

InputCollect = InputCollect, # feed in all model specifications

cores = NULL, # NULL defaults to (max available - 1)

iterations = 2000, # 2000 recommended for the dummy dataset with no calibration

trials = 5, # 5 recommended for the dummy dataset

ts_validation = FALSE, # 3-way-split time series for NRMSE validation

add_penalty_factor = FALSE # Experimental feature to add more flexibility

)
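The `ts_validation` comment refers to a 3-way time-series split scored by NRMSE, and the `train_size` range in the hyperparameters list controls how much of the modelling window goes to training. The sketch below assumes the first `train_size` share of periods is used for training and the remainder is split evenly between validation and test, and it uses range-normalized RMSE, one common NRMSE definition. Robyn's exact split and formula may differ, and both helper names are hypothetical.

```r
# Hypothetical helper: chronological train/validation/test split.
# Assumption: the remainder after training is halved (test gets any extra period).
split_three_way <- function(n, train_size) {
  stopifnot(n >= 3, train_size > 0, train_size < 1)
  n_train <- floor(n * train_size)
  n_val <- floor((n - n_train) / 2)
  list(
    train = seq_len(n_train),
    val = seq(n_train + 1, length.out = n_val),
    test = seq(n_train + n_val + 1, n)
  )
}

# Range-normalized RMSE: RMSE divided by the spread of the actual values
nrmse <- function(actual, predicted) {
  stopifnot(length(actual) == length(predicted))
  sqrt(mean((actual - predicted)^2)) / diff(range(actual))
}

idx <- split_three_way(100, train_size = 0.75)
print(lengths(idx))  # train 75, val 12, test 13

print(nrmse(actual = c(10, 20, 30, 40), predicted = c(12, 18, 33, 39)))  # approx. 0.0707
```

Keeping the split chronological (no shuffling) matters for time series: the model is always validated on periods that come after the ones it was trained on.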

print(OutputModels)

OutputModels$convergence$moo_distrb_plot

OutputModels$convergence$moo_cloud_plot

OutputCollect <- robyn_outputs(

InputCollect, OutputModels,

pareto_fronts = "auto", # automatically pick how many Pareto fronts to fill min_candidates (100)

# min_candidates = 100, # top Pareto models for clustering. Defaults to 100

# calibration_constraint = 0.1, # range c(0.01, 0.1) & default at 0.1

csv_out = "pareto", # "pareto", "all", or NULL (for none)

clusters = TRUE, # Set to TRUE to cluster similar models by ROAS. See ?robyn_clusters

export = TRUE, # this will create files locally

plot_folder = robyn_directory, # path for plot exports and file creation

plot_pareto = TRUE # Set to FALSE to deactivate plotting and saving model one-pagers

)
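`robyn_outputs()` keeps candidate models that lie on the Pareto fronts of the objectives Robyn minimizes: NRMSE (model fit) and DECOMP.RSSD (how far each channel's effect share departs from its spend share), with a calibration error added when calibration data are supplied. A model is on the first front if no other model is at least as good on both objectives and strictly better on one. The base-R sketch below computes only that first front for two objectives, using made-up values; it illustrates the concept and is not Robyn's implementation.

```r
# First Pareto front for two objectives that are both minimized.
# A point is dominated if another point is <= on both and < on at least one.
pareto_front <- function(obj1, obj2) {
  stopifnot(length(obj1) == length(obj2))
  dominated <- vapply(seq_along(obj1), function(i) {
    any(obj1 <= obj1[i] & obj2 <= obj2[i] & (obj1 < obj1[i] | obj2 < obj2[i]))
  }, logical(1))
  which(!dominated)
}

# Hypothetical candidate models
nrmse_vals <- c(0.10, 0.12, 0.08, 0.15, 0.11)
rssd_vals  <- c(0.20, 0.10, 0.30, 0.05, 0.25)
front <- pareto_front(nrmse_vals, rssd_vals)
print(front)  # 1 2 3 4
```

Models 1 to 4 each represent a different trade-off, while model 5 is beaten by model 1 on both objectives and drops out.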

print(OutputCollect)

## Compare all model one-pagers and select the one that best reflects your business reality

print(OutputCollect)

select_model <- "5_257_2" # Pick one of the models from OutputCollect to proceed

#### Version >= 3.7.1: JSON export and import (faster and lighter than RDS files)

ExportedModel <- robyn_write(InputCollect, OutputCollect, select_model, export = TRUE)

print(ExportedModel)

# To plot any model's one-pager:

myOnePager <- robyn_onepagers(InputCollect, OutputCollect, select_model, export = FALSE)

print(ExportedModel)

InputCollect$paid_media_selected

AllocatorCollect1 <- robyn_allocator(

InputCollect = InputCollect,

OutputCollect = OutputCollect,

select_model = select_model,

# date_range = "all", # Defaults to "all"

# total_budget = NULL, # When NULL, defaults to total spend in date_range

channel_constr_low = 0.7,

channel_constr_up = c(1.2, 1.5, 1.5, 1.5, 1.5),

# channel_constr_multiplier = 3,

scenario = "max_response"

)

# Print & plot allocator’s output

print(AllocatorCollect1)

plot(AllocatorCollect1)
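In the first allocator example, `channel_constr_low` and `channel_constr_up` act, as we understand them, as multipliers on each channel's initial spend over `date_range`: a scalar such as 0.7 applies to every channel, while a vector must follow the channel order of the paid media spends. The sketch below shows the resulting spend bounds using R's usual recycling; the spend figures are hypothetical and the computation is an illustration, not Robyn's code.

```r
# Hypothetical initial spend per paid channel over date_range
init_spend <- c(tv_S = 10000, ooh_S = 8000, print_S = 3000,
                facebook_S = 6000, search_S = 4000)

constr_low <- 0.7                        # recycled to all five channels
constr_up <- c(1.2, 1.5, 1.5, 1.5, 1.5)  # one multiplier per channel, same order

bounds <- data.frame(
  channel = names(init_spend),
  lower = unname(init_spend * constr_low),
  upper = unname(init_spend * constr_up)
)
print(bounds)
```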

AllocatorCollect2 <- robyn_allocator(

InputCollect = InputCollect,

OutputCollect = OutputCollect,

select_model = select_model,

date_range = "last_10", # Last 10 periods, same as c("2018-10-22", "2018-12-31")

total_budget = 1500000, # Total budget for date_range period simulation

channel_constr_low = c(0.8, 0.7, 0.7, 0.7, 0.7),

channel_constr_up = 1.5,

channel_constr_multiplier = 5, # Customize bound extension for wider insights

scenario = "max_response"

)

print(AllocatorCollect2)

plot(AllocatorCollect2)
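The `max_response` scenario looks for the spend split that maximizes total predicted response within the channel bounds and the total budget. Robyn solves this with its own numerical optimizer; the sketch below swaps in a much simpler greedy heuristic that repeatedly gives the next budget increment to the channel with the largest marginal response. The response curves, their parameters and both function names are hypothetical, and greedy increments are only a reasonable approximation when the curves are concave.

```r
# Hypothetical concave response curve (vectorized over channels)
response_curve <- function(spend, beta, half_sat) {
  beta * spend / (spend + half_sat)
}

# Greedy allocation: start at the lower bounds, then hand out the remaining
# budget in fixed steps to whichever channel gains the most response.
greedy_allocate <- function(budget, lower, upper, beta, half_sat, step = 100) {
  stopifnot(sum(lower) <= budget, all(lower <= upper))
  alloc <- lower
  remaining <- budget - sum(lower)
  while (remaining >= step) {
    gain <- ifelse(
      alloc + step <= upper,
      response_curve(alloc + step, beta, half_sat) - response_curve(alloc, beta, half_sat),
      -Inf
    )
    if (all(gain == -Inf)) break  # every channel is at its upper bound
    best <- which.max(gain)
    alloc[best] <- alloc[best] + step
    remaining <- remaining - step
  }
  alloc
}

alloc <- greedy_allocate(
  budget = 10000,
  lower = c(2000, 2000),
  upper = c(8000, 8000),
  beta = c(5000, 3000),
  half_sat = c(2000, 1000)
)
print(alloc)
```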

AllocatorCollect3 <- robyn_allocator(

InputCollect = InputCollect,

OutputCollect = OutputCollect,

select_model = select_model,

#channel_constr_low = 0.1,

#channel_constr_up = 10,

# date_range = NULL, # Default: "all" available dates

scenario = "target_efficiency"

# target_value = 5 # Customize target ROAS or CPA value

)

print(AllocatorCollect3)

plot(AllocatorCollect3)

json_file <- "~/Desktop/Robyn_202412181043_init/RobynModel-5_257_2.json"

AllocatorCollect4 <- robyn_allocator(

json_file = json_file, # Using JSON file from robyn_write() for allocation

dt_input = dt_simulated_weekly,

dt_holidays = dt_prophet_holidays,

# date_range = NULL,

scenario = "target_efficiency",

target_value = 2, # Customize target ROAS or CPA value

plot_folder = "~/Desktop",

plot_folder_sub = "my_subdir"

)

RobynRefresh <- robyn_refresh(

json_file = json_file,

dt_input = dt_simulated_weekly,

dt_holidays = dt_prophet_holidays,

refresh_steps = 4,

refresh_iters = 2000,

refresh_trials = 5

)

json_file_rf1 <- "~/Desktop/Robyn_202412181043_init/Robyn_202412181054_rf1/RobynModel-1_133_7.json"

RobynRefresh <- robyn_refresh(

json_file = json_file_rf1,

dt_input = dt_simulated_weekly,

dt_holidays = dt_prophet_holidays,

refresh_steps = 4,

refresh_iters = 2000,

refresh_trials = 5

)

InputCollectX <- RobynRefresh$listRefresh1$InputCollect

OutputCollectX <- RobynRefresh$listRefresh1$OutputCollect

select_modelX <- RobynRefresh$listRefresh1$OutputCollect$selectID

Response <- robyn_response(

InputCollect = InputCollect,

OutputCollect = OutputCollect,

select_model = select_model,

metric_name = "facebook_I"

)

Response$plot

Spend1 <- 80000

Response1 <- robyn_response(

InputCollect = InputCollect,

OutputCollect = OutputCollect,

select_model = select_model,

metric_name = "facebook_I",

metric_value = Spend1, # total budget for date_range

date_range = "last_10" # last 10 periods

)

Response1$plot

Spend2 <- Spend1 + 100

Response2 <- robyn_response(

InputCollect = InputCollect,

OutputCollect = OutputCollect,

select_model = select_model,

metric_name = "facebook_I",

metric_value = Spend2,

date_range = "last_10"

)

# Marginal ROAS of the additional $100 on top of the Spend1 level

(Response2$sim_mean_response - Response1$sim_mean_response) /

(Response2$sim_mean_spend - Response1$sim_mean_spend)
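The calculation above is a marginal ROAS: the extra response bought by the last $100, divided by that $100. On a curve with diminishing returns it sits below the average ROAS at the same spend level, so the two should not be mixed up. A sketch with a hypothetical concave response curve (the parameters are invented, not taken from the fitted model):

```r
# Hypothetical diminishing-returns curve: revenue as a function of spend
revenue_at <- function(spend, beta = 200000, half_sat = 50000) {
  beta * spend / (spend + half_sat)
}

spend1 <- 80000
spend2 <- spend1 + 100

# Same structure as the Response2/Response1 calculation: delta response / delta spend
marginal_roas <- (revenue_at(spend2) - revenue_at(spend1)) / (spend2 - spend1)
average_roas <- revenue_at(spend1) / spend1

print(round(c(marginal = marginal_roas, average = average_roas), 3))
# marginal 0.591, average 1.538
```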

# Example of getting organic media exposure response curves

sendings <- 30000

response_sending <- robyn_response(

InputCollect = InputCollect,

OutputCollect = OutputCollect,

select_model = select_model,

metric_name = "newsletter",

metric_value = sendings,

date_range = "last_10"

)

# Simulated cost per thousand sendings

response_sending$sim_mean_spend / response_sending$sim_mean_response * 1000

# Manually create JSON file with input data only

robyn_write(InputCollect, dir = "~/Desktop")

# Manually create JSON file with inputs and specific model results

robyn_write(InputCollect, OutputCollect, select_model)

# Select any exported model (initial or refreshed)

json_file <- "~/Desktop/Robyn_202412181043_init/RobynModel-5_257_2.json"

# Optional: manually read and check the data stored in the file

json_data <- robyn_read(json_file)

print(json_data)

# Re-create InputCollect

InputCollectX <- robyn_inputs(

dt_input = dt_simulated_weekly,

dt_holidays = dt_prophet_holidays,

json_file = json_file)

# Re-create OutputCollect

OutputCollectX <- robyn_run(

InputCollect = InputCollectX,

json_file = json_file

)

# Or re-create both by simply using robyn_recreate()

RobynRecreated <- robyn_recreate(

json_file = json_file,

dt_input = dt_simulated_weekly,

dt_holidays = dt_prophet_holidays,

quiet = FALSE)

InputCollectX <- RobynRecreated$InputCollect

OutputCollectX <- RobynRecreated$OutputCollect

# Re-export or rebuild a model and check its summary

myModel <- robyn_write(InputCollectX, OutputCollectX, export = FALSE, dir = "~/Desktop")

print(myModel)

# Re-create one-pager

myModelPlot <- robyn_onepagers(InputCollectX, OutputCollectX, export = FALSE)

# myModelPlot[[1]]$patches$plots[[7]]

# Refresh any imported model

RobynRefresh <- robyn_refresh(

json_file = json_file,

dt_input = InputCollectX$dt_input,

dt_holidays = InputCollectX$dt_holidays,

refresh_steps = 6,

refresh_mode = "manual",

refresh_iters = 1000,

refresh_trials = 1

)

# Recreate response curves

robyn_response(

InputCollect = InputCollectX,

OutputCollect = OutputCollectX,

metric_name = "newsletter",

metric_value = 50000

)