By Andrey Nikishaev, Software Architect, 25 years in the field

The Mixture-of-Agents (MoA) framework is redefining how we push large language models (LLMs) to higher levels of accuracy, reasoning depth, and reliability, without the prohibitive cost of scaling a single huge model.

Instead of relying on one “jack-of-all-trades” LLM, MoA orchestrates a team of specialized models that collaborate in structured layers, refining outputs step by step. This approach is showing state-of-the-art (SOTA) results even with open-source models, surpassing top proprietary LLMs such as GPT-4 Omni on several benchmarks.

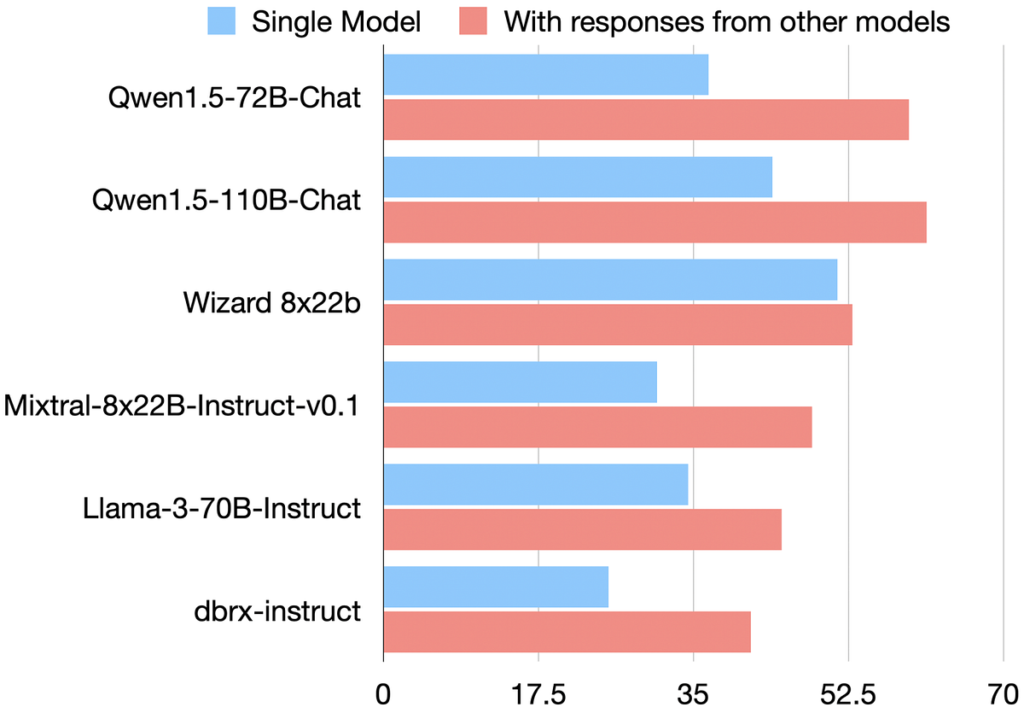

Collaborativeness among LLMs

Why combine models at all? The MoA team found that many off-the-shelf LLMs improve when they consult each other’s answers. In experiments on the AlpacaEval 2.0 benchmark, models such as LLaMA, WizardLM, and Qwen performed better (a higher “win rate” against a GPT-4 reference) when given other models’ answers in addition to the prompt.

In Figure 1, every model’s win rate jumps (red bars vs. blue) when it sees the others’ responses: evidence that LLMs “inherently collaborate” and can refine or validate answers based on one another. Crucially, this holds even when the peer answers are worse than what the model would produce alone. In other words, multiple perspectives help an LLM avoid blind spots. This insight motivated MoA’s design: a framework for harnessing the collective expertise of multiple models.

Figure 1: The “collaborativeness” effect. LLMs score higher on AlpacaEval 2.0 when given other models’ answers (red) than when answering alone (blue). Even top models (e.g. Qwen 110B) benefit from collaborating with peers, which motivated MoA.

The Mixture-of-Agents Architecture

MoA builds on this effect with a structured, multi-agent approach:

- Layered design: multiple agents per layer, each taking all outputs of the previous layer as input.

- Role specialization: proposers generate diverse candidate answers; aggregators merge and refine them into a single, higher-quality output.

- Iterative improvement: each layer builds on the previous one, gradually improving accuracy and coherence.

- Model diversity: combining different architectures reduces shared weaknesses.

- No fine-tuning required: everything works through prompt engineering.

Every agent is an LLM assigned one of two roles: proposer or aggregator.

Proposers generate candidate answers. They “excel at generating useful reference responses” that add context and diverse perspectives. A proposer may not produce the best final answer itself, but it contributes useful pieces of the puzzle.

Aggregators, in contrast, specialize in synthesizing and improving on others’ outputs. A good aggregator can take a set of rough answers and merge them into a single high-quality response, maintaining or even improving quality when some inputs are weak.
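An aggregator is ultimately just an LLM call whose prompt carries the proposers’ answers. The sketch below assembles such a prompt. The instruction text is a paraphrase of the idea, not the paper’s exact wording, and both the chat-style `{"role", "content"}` message format and the `build_aggregator_prompt` name are assumptions.

```python
from typing import Sequence

# Paraphrased aggregator instruction (an assumption, not the paper's verbatim prompt).
AGGREGATE_INSTRUCTION = (
    "You have been given several candidate responses to the user's query. "
    "Some of them may be incomplete, biased, or wrong. Critically evaluate them and "
    "write a single accurate, well-structured answer instead of copying any one response."
)


def build_aggregator_prompt(user_query: str, proposals: Sequence[str]) -> list[dict[str, str]]:
    """Return chat-style messages: numbered proposals in the system message, then the query."""
    numbered = "\n".join(f"{i}. {text.strip()}" for i, text in enumerate(proposals, start=1))
    system = f"{AGGREGATE_INSTRUCTION}\n\nCandidate responses:\n{numbered}"
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user_query},
    ]


if __name__ == "__main__":
    messages = build_aggregator_prompt(
        "What causes ocean tides?",
        ["The Moon's gravity.", "Mostly the Moon, partly the Sun, through tidal forces."],
    )
    print(messages[0]["content"])
```

Numbering the proposals makes it easy for the aggregator (and for you, when debugging) to refer to individual inputs.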

Many models can act in either role. GPT-4, Qwen1.5, and LLaMA, for example, performed well both as proposers and as aggregators, whereas some (WizardLM) were notably better as proposers than as aggregators. MoA exploits these strengths by assigning each model to the role where it excels.
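If you have measured how well each candidate model does in each role (for instance, its win rate when proposing versus when aggregating), the assignment step can be as simple as comparing the two numbers. This is a minimal sketch: the `assign_roles` helper, the `margin` threshold, and the scores in the example are hypothetical, not figures from the paper.

```python
def assign_roles(scores: dict[str, dict[str, float]], margin: float = 0.05) -> dict[str, str]:
    """Map each model to 'proposer', 'aggregator', or 'both'.

    `scores[model]` holds the model's quality score in each role (hypothetical metric).
    A model counts as versatile ('both') when its two scores differ by at most `margin`.
    """
    roles: dict[str, str] = {}
    for model, s in scores.items():
        diff = s["aggregator"] - s["proposer"]
        if abs(diff) <= margin:
            roles[model] = "both"
        elif diff > 0:
            roles[model] = "aggregator"
        else:
            roles[model] = "proposer"
    return roles


if __name__ == "__main__":
    hypothetical_scores = {
        "versatile-model": {"proposer": 0.60, "aggregator": 0.62},
        "creative-model": {"proposer": 0.55, "aggregator": 0.40},
    }
    print(assign_roles(hypothetical_scores))
```

With these made-up numbers, `versatile-model` is tagged `both`, while `creative-model` (a strong proposer but weak aggregator, like WizardLM above) is tagged `proposer`.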

MoA organizes agents into multiple layers (think of it as a small pipeline of models).

Figure 2 illustrates an example with 4 layers and 3 agents per layer. In Layer 1, n proposer agents independently generate answers to the user’s prompt. Their outputs are then passed to Layer 2, where another set of agents (which can reuse the same models or different ones) sees all the previous answers as additional context. Each layer’s agents therefore have more material to work with, effectively performing iterative refinement of the response.

This process continues for several layers, and finally an aggregator agent produces the single consolidated answer. Intuitively, earlier layers contribute ideas and partial solutions, while later layers combine and polish them. By the final layer, the answer is typically far more complete and robust than any first-pass attempt.
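The data flow described above fits in a few lines. In this minimal sketch an “agent” is any Python function that takes the user prompt and a list of reference answers; real agents would wrap an LLM API call, which is left out here. Following the paper’s description, each layer sees the outputs of the layer before it. `run_moa` and the stub agents are illustrative names, not part of the authors’ code.

```python
from typing import Callable, Sequence

# An agent maps (user prompt, reference answers from the previous layer) -> new answer.
Agent = Callable[[str, Sequence[str]], str]


def run_moa(prompt: str, layers: Sequence[Sequence[Agent]], aggregator: Agent) -> str:
    """Run proposer layers one after another, then a single final aggregator."""
    references: list[str] = []  # the first layer answers the prompt with no references
    for layer in layers:
        # Every agent in this layer sees the prompt plus all outputs of the previous layer.
        references = [agent(prompt, references) for agent in layer]
    return aggregator(prompt, references)


def make_stub(name: str) -> Agent:
    """Hypothetical stand-in for an LLM: reports how many references it was shown."""
    return lambda prompt, refs: f"{name} (saw {len(refs)} refs)"


if __name__ == "__main__":
    layers = [[make_stub("p1"), make_stub("p2"), make_stub("p3")] for _ in range(3)]
    print(run_moa("Explain MoA.", layers, make_stub("aggregator")))
```

With three layers of three stubs, the first layer sees 0 references, later layers see 3, and the script prints `aggregator (saw 3 refs)`.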

Figure 2: Mixture-of-Agents architecture (simplified to 3 agents × 4 layers).

A key design question is how to assign models to layers. The MoA paper suggests two criteria:

- (a) Performance: stronger models (higher single-model win rates) are prime candidates for later layers.

- (b) Diversity: use a mix of model types so that each brings something unique.

In fact, the authors found that heterogeneous models contribute significantly more than copies of the same model.
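One way to turn the two criteria into code is a greedy selection: rank candidates by single-model quality, give the top model the final aggregator slot, and fill proposer slots so that each model family appears once before any family repeats. This is a minimal sketch of that idea, not the paper’s procedure; `Candidate`, `pick_lineup`, the family labels, and the win rates are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    name: str
    family: str      # architecture or vendor lineage (hypothetical labels)
    win_rate: float  # single-model quality score, e.g. a benchmark win rate


def pick_lineup(cands: list[Candidate], n_proposers: int) -> tuple[Candidate, list[Candidate]]:
    """Return (final aggregator, proposers).

    (a) Performance: the highest-scoring model becomes the final aggregator.
    (b) Diversity: proposers are taken best-first, one per family, before any family repeats.
    The aggregator may also appear among the proposers.
    """
    ranked = sorted(cands, key=lambda c: c.win_rate, reverse=True)
    aggregator = ranked[0]
    proposers: list[Candidate] = []
    seen_families: set[str] = set()
    # First pass: the best model from each distinct family.
    for c in ranked:
        if len(proposers) == n_proposers:
            break
        if c.family not in seen_families:
            proposers.append(c)
            seen_families.add(c.family)
    # Second pass: fill any remaining slots with the strongest unused models.
    for c in ranked:
        if len(proposers) == n_proposers:
            break
        if c not in proposers:
            proposers.append(c)
    return aggregator, proposers


if __name__ == "__main__":
    cands = [
        Candidate("big-a", "fam-a", 0.55),
        Candidate("mid-a", "fam-a", 0.50),
        Candidate("mid-b", "fam-b", 0.45),
        Candidate("small-c", "fam-c", 0.30),
    ]
    agg, props = pick_lineup(cands, n_proposers=3)
    print(agg.name, [c.name for c in props])
```

With the sample data, `mid-a` is skipped in favor of the weaker `small-c` because its family is already represented; asking for four proposers brings it back.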

In MoA’s implementation, the final layer usually has the single best model acting as aggregator, while earlier layers can be filled with a diverse set of proposers. Interestingly, experiments showed that many top models perform both roles well, but some are much stronger in one role.

For example, WizardLM (WizardLM-8x22B in the paper) excelled as a proposer of creative answers but struggled as an aggregator combining others’ content. GPT-4 (OpenAI) and Qwen1.5 (Alibaba) were more versatile, performing well as both aggregator and proposer.

These insights can guide developers toward an appropriate mix: for example, use a strong open-source GPT-4-class model as the aggregator and let specialized smaller models propose answers (perhaps a code-specialized model, a reasoning-specialized model, and so on, depending on the query domain).

The MoA architecture was evaluated on several tough benchmarks, and the results are striking. Using only open-source models (no GPT-4 at all), MoA matched or beat the mighty GPT-4 in overall quality.

AlpacaEval 2.0 (Length-Controlled Win Rate)

- MoA w/ GPT-4o: 65.7%

- MoA (open-source only): 65.1%

- MoA-Lite (cost-optimized): 59.3%

- GPT-4 Omni: 57.5%

- GPT-4 Turbo: 55.0%

MT-Bench (Avg. Score)

- MoA w/ GPT-4o: 9.40

- MoA (open-source): 9.25

- GPT-4 Turbo: 9.31

- GPT-4 Omni: 9.19
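To put the two tables in perspective, the gaps against each proprietary baseline can be computed directly. The snippet uses only the numbers listed above; `gaps` is an illustrative helper.

```python
# Scores copied from the two lists above (no new data).
alpaca_lc = {"MoA w/ GPT-4o": 65.7, "MoA": 65.1, "MoA-Lite": 59.3, "GPT-4 Omni": 57.5, "GPT-4 Turbo": 55.0}
mt_bench = {"MoA w/ GPT-4o": 9.40, "MoA": 9.25, "GPT-4 Turbo": 9.31, "GPT-4 Omni": 9.19}


def gaps(scores: dict[str, float], baseline: str) -> dict[str, float]:
    """Difference of every entry from `baseline`, rounded to hide float noise."""
    base = scores[baseline]
    return {name: round(s - base, 2) for name, s in scores.items() if name != baseline}


if __name__ == "__main__":
    print(gaps(alpaca_lc, "GPT-4 Omni"))
    print(gaps(mt_bench, "GPT-4 Turbo"))
```

On AlpacaEval, open-source-only MoA leads GPT-4 Omni by 7.6 points and MoA-Lite by 1.8. On MT-Bench, open-source-only MoA sits 0.06 below GPT-4 Turbo, which is why “matched or beat” is the fair summary.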

FLASK (Skill-Based Evaluation): MoA outperforms GPT-4 Omni in:

- Robustness

- Correctness

- Factuality

- Insightfulness

- Completeness

- Metacognition

Figure 3: Fine-grained evaluation (FLASK) radar chart. MoA (red dashed) vs. GPT-4 (blue) across 12 skill dimensions. MoA outperforms GPT-4 on several fronts (e.g. factuality, insightfulness), with a mild verbosity penalty (lower conciseness). Qwen-110B alone (red solid), the model MoA uses as its aggregator, trails on several skills, showing that multi-agent synergy boosts overall performance.

It’s important to emphasize MoA’s efficiency: these gains were achieved with open models that are collectively much cheaper than GPT-4. For example, one MoA configuration used 6 open models (such as Qwen-110B and LLaMA-70B) across 3 layers and still cost only a fraction of GPT-4’s API usage.

The team also devised a lighter variant called MoA-Lite, which uses just 2 layers and a smaller aggregator (Qwen-72B) and still slightly beat GPT-4 Omni on AlpacaEval (59.3% vs. 57.5%) while being more cost-effective. In other words, even a pared-down MoA can surpass GPT-4 quality at lower cost.

Essentially, MoA taps into the wisdom of crowds among models. Each agent contributes distinct strengths: one might add knowledge, another checks consistency, another improves phrasing. The final result benefits from all of their expertise.

An illustrative comparison was made between MoA and a naive LLM-ranker ensemble. The ranker simply generates multiple answers and has an LLM (like GPT-4 or Qwen) pick the best one, without synthesizing them. MoA significantly outperformed that approach. This confirms that MoA’s aggregator isn’t just picking one of the inputs; it actually combines ideas. The paper also found that the aggregator’s answer overlaps most with the strongest proposals, measured as a positive correlation between each proposal’s quality and its BLEU similarity to the final answer. Collaboration, not just selection, is the key.
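The “combines rather than selects” analysis can be reproduced in miniature: score each proposal’s similarity to the final answer, then check whether that similarity rises with proposal quality using Spearman rank correlation. In this sketch, clipped unigram precision is a deliberately simple stand-in for BLEU, and the texts and quality scores are made up. It illustrates the kind of analysis, not the paper’s numbers.

```python
from collections import Counter
from typing import Sequence


def unigram_precision(candidate: str, reference: str) -> float:
    """Share of candidate words that also occur in the reference, with clipped counts
    (a simplified BLEU-like overlap; real BLEU also uses higher-order n-grams)."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    total = sum(cand.values())
    if total == 0:
        return 0.0
    return sum(min(n, ref[word]) for word, n in cand.items()) / total


def average_ranks(xs: Sequence[float]) -> list[float]:
    """1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(xs)), key=lambda i: xs[i])
    ranks = [0.0] * len(xs)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and xs[order[j + 1]] == xs[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def spearman(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Spearman's rho, computed as the Pearson correlation of the two rank vectors."""
    rx, ry = average_ranks(xs), average_ranks(ys)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    vx = sum((a - mx) ** 2 for a in rx)
    vy = sum((b - my) ** 2 for b in ry)
    if vx == 0 or vy == 0:
        raise ValueError("correlation is undefined when all values in a list are equal")
    return cov / (vx * vy) ** 0.5


if __name__ == "__main__":
    final_answer = "the moon and the sun create tides through gravity"
    proposals = ["the moon and the sun create tides", "gravity from the moon", "tides are caused by wind"]
    quality = [0.9, 0.6, 0.1]  # hypothetical per-proposal quality scores
    similarity = [unigram_precision(p, final_answer) for p in proposals]
    print(similarity, spearman(quality, similarity))
```

Here the similarities come out as 1.0, 0.75 and 0.2, in the same order as the quality scores, so rho is 1.0. A value near zero would instead suggest the aggregator ignores proposal quality.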

For developers, a major attraction of MoA is cost-effectiveness. By orchestrating smaller open models, you can achieve GPT-4-level output without paying proprietary API fees or running one giant model for every query. The MoA authors provide a detailed cost analysis (see Figure 5).

MoA configurations lie on a Pareto frontier of quality versus cost, delivering high win rates at much lower cost than GPT-4. For instance, one MoA run achieved a win rate about 4% higher than GPT-4 Turbo while being 2× cheaper in inference cost.

Even MoA-Lite (2 layers) matched GPT-4 Omni’s cost while achieving a higher win rate, and beat GPT-4 Turbo’s quality at roughly half the cost. This opens the door for budget-conscious applications: you might deploy a set of 7B–70B open models that together rival or surpass a much larger closed model.

Figure 5: Performance vs. cost and latency trade-offs. Left: LC win rate (quality) vs. API cost per query. MoA (blue/orange points along the dashed gray frontier) achieves ~60–65% win rate at a cost far below GPT-4 (red stars). Right: win rate vs. inference compute (in TFLOPs, used as a proxy for latency). MoA is again on the Pareto frontier: combinations of smaller models reach high quality efficiently. “Single Proposer” uses one model to generate multiple answers; “Multi Proposer” (MoA) uses different models per layer, which is more compute-efficient because the agents run in parallel.
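A Pareto frontier like the one in Figure 5 is easy to compute for your own measurements: keep every configuration that no other configuration beats on both cost and quality. The sketch assumes you have already measured cost per query and win rate for each setup; the `Config` records and their values are hypothetical placeholders, not numbers read off the figure.

```python
from typing import NamedTuple


class Config(NamedTuple):
    name: str
    cost: float     # e.g. dollars per query (lower is better)
    quality: float  # e.g. LC win rate in percent (higher is better)


def pareto_frontier(configs: list[Config]) -> list[Config]:
    """Configs not dominated by any other (one that is no worse on both axes
    and strictly better on at least one), sorted from cheapest to most expensive."""
    frontier = []
    for c in configs:
        dominated = any(
            o.cost <= c.cost and o.quality >= c.quality and (o.cost < c.cost or o.quality > c.quality)
            for o in configs
        )
        if not dominated:
            frontier.append(c)
    return sorted(frontier, key=lambda c: c.cost)


if __name__ == "__main__":
    points = [
        Config("single-small", 0.5, 40.0),
        Config("moa-lite", 1.0, 58.0),
        Config("moa-full", 2.0, 64.0),
        Config("big-closed", 4.0, 57.0),
    ]
    print([c.name for c in pareto_frontier(points)])
```

In this toy data `big-closed` drops out because `moa-lite` is both cheaper and better, leaving `single-small`, `moa-lite`, and `moa-full` on the frontier. The quadratic scan is fine for the handful of configurations you would typically compare.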

Another advantage is flexibility. Because MoA works through prompting, you can dynamically scale the number of agents or layers based on query complexity or available compute. Need a quick, cheap answer? Run MoA-Lite with fewer agents. Need maximum quality? Use a very large model as the final aggregator (even GPT-4 itself can serve as MoA’s final aggregator to push quality further).
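The scaling knobs mentioned above can be exposed as a small planning function that picks the number of layers and agents per query. Everything here is a hypothetical policy: the complexity score (which you would get from some heuristic or classifier), the thresholds, and the call-count formula (each proposer called once per layer, plus one final aggregation call) are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MoAPlan:
    num_layers: int          # proposer layers before the final aggregator
    agents_per_layer: int    # proposers called in each layer
    strong_aggregator: bool  # whether to swap in a larger model for the final step


def plan_for(complexity: float, budget_calls: int) -> MoAPlan:
    """Pick a plan from a complexity score in [0, 1] and a cap on LLM calls per query.

    Assumes calls = num_layers * agents_per_layer + 1 (the final aggregation call).
    Agents are trimmed to fit the budget, but never below one per layer, so a very
    small budget can still be exceeded.
    """
    if complexity < 0.3:
        layers, agents, strong = 1, 3, False  # quick and cheap
    elif complexity < 0.7:
        layers, agents, strong = 2, 4, False  # middle ground
    else:
        layers, agents, strong = 3, 6, True   # maximum quality
    while agents > 1 and layers * agents + 1 > budget_calls:
        agents -= 1
    return MoAPlan(layers, agents, strong)


if __name__ == "__main__":
    print(plan_for(0.9, budget_calls=10))
```

For a complex query capped at 10 calls, the plan keeps three layers but trims them to three agents each (3 × 3 + 1 = 10 calls).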

The framework lets you mix and match open models as long as you can prompt them. It also means you can specialize agents: e.g. add a code-specific LLM in Layer 1 to propose a coding solution, a math-specific LLM to check calculations, and so on, and have the aggregator merge their outputs. In the paper’s ablations, using diverse model types yielded significantly better answers than homogeneous agents, so diversity is worth exploiting.
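Adding specialists can start as simple keyword routing that appends domain models to a base pool of general proposers before Layer 1. This is a deliberately naive sketch; the model names, keyword lists, and the `proposers_for` helper are hypothetical, and a classifier could replace the keyword match.

```python
# Hypothetical model names and keyword lists, for illustration only.
GENERALISTS = ["general-model-a", "general-model-b"]
SPECIALISTS = {"code": "code-model", "math": "math-model"}
KEYWORDS = {
    "code": ("python", "function", "bug", "compile"),
    "math": ("integral", "equation", "prove", "calculate"),
}


def proposers_for(query: str) -> list[str]:
    """Layer-1 proposers: the generalists plus any specialist whose keywords appear in the query.

    Matching is naive substring search (e.g. "debug" also matches "bug").
    """
    q = query.lower()
    lineup = list(GENERALISTS)
    for domain, words in KEYWORDS.items():
        if any(w in q for w in words):
            lineup.append(SPECIALISTS[domain])
    return lineup


if __name__ == "__main__":
    print(proposers_for("Why does this Python function raise a KeyError?"))
```

Keeping the generalists in every lineup preserves diversity even when a specialist is added.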

The authors have released their MoA code (prompt scripts and model configs) on GitHub, making the approach easier to reproduce and adapt: https://github.com/togethercomputer/moa

To implement MoA, run each layer’s agents in parallel (to minimize latency), collect their outputs, and feed them (with an “aggregate-and-synthesize” system prompt) into the next layer’s agents. No fine-tuning is required, just careful prompt engineering.
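This recipe maps naturally onto `asyncio`: agents within a layer are independent and can run concurrently with `asyncio.gather`, while the layers themselves must run in sequence. This minimal sketch uses stub agents that sleep instead of calling a model; `run_layer`, `run_moa_async`, and `make_stub` are illustrative names, and a real agent would await whatever LLM client you use.

```python
import asyncio
import time
from typing import Awaitable, Callable, Sequence

# An async agent: (prompt, references) -> answer. A real one would await an LLM API call.
AsyncAgent = Callable[[str, Sequence[str]], Awaitable[str]]


async def run_layer(prompt: str, refs: Sequence[str], layer: Sequence[AsyncAgent]) -> list[str]:
    # Agents in one layer are independent, so launch them concurrently;
    # the layer takes about as long as its slowest agent, not the sum of all of them.
    return list(await asyncio.gather(*(agent(prompt, refs) for agent in layer)))


async def run_moa_async(prompt: str, layers: Sequence[Sequence[AsyncAgent]], aggregator: AsyncAgent) -> str:
    refs: list[str] = []
    for layer in layers:  # layers stay sequential: each needs the previous layer's outputs
        refs = await run_layer(prompt, refs, layer)
    return await aggregator(prompt, refs)


def make_stub(name: str, delay: float) -> AsyncAgent:
    """Hypothetical stand-in for an LLM call that takes `delay` seconds."""
    async def agent(prompt: str, refs: Sequence[str]) -> str:
        await asyncio.sleep(delay)
        return f"{name}:{len(refs)}"
    return agent


if __name__ == "__main__":
    layers = [[make_stub(f"p{i}", 0.1) for i in range(3)] for _ in range(2)]
    start = time.perf_counter()
    answer = asyncio.run(run_moa_async("Explain MoA.", layers, make_stub("agg", 0.1)))
    print(answer, f"{time.perf_counter() - start:.2f}s")
```

With two layers of three 0.1-second stubs plus the aggregator, the elapsed time should land near 0.3 s rather than the 0.7 s a fully sequential loop would take.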

It’s wise to use length-controlled generation for agents (ensuring none of them rambles on too long) so that the aggregator receives balanced inputs.
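Most model APIs let you cap output length directly; as an extra safeguard, proposals can also be trimmed to a common budget before they reach the aggregator. In this sketch, whitespace-separated words approximate tokens, and `cap_length`, `balance_proposals`, and the 200-word default are assumptions, not values from the paper.

```python
def cap_length(text: str, max_words: int) -> str:
    """Truncate to at most `max_words` whitespace-separated words (a crude proxy for tokens)."""
    words = text.split()
    if len(words) <= max_words:
        return text.strip()
    return " ".join(words[:max_words]) + " ..."  # mark the cut so the aggregator knows


def balance_proposals(proposals: list[str], max_words: int = 200) -> list[str]:
    """Apply the same cap to every proposal so no single long answer dominates the context."""
    return [cap_length(p, max_words) for p in proposals]


if __name__ == "__main__":
    print(balance_proposals(["short answer", "very " * 500 + "long answer"], max_words=5))
```

A uniform cap is the simplest policy; a per-model cap tuned to each proposer’s typical verbosity would be a natural refinement.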

Also, when choosing models for each layer, consider using your strongest model as the final aggregator (since it has to produce the final answer) and smaller or more diverse models as proposers in earlier layers. The paper’s default MoA used 6 agents per layer for 3 layers: Qwen-110B as aggregator, and a mix of Qwen-72B, WizardLM-8x22B, LLaMA-3 70B, Mixtral-8x22B, and Databricks’ DBRX as proposers. That mix was chosen for strong base performance and heterogeneity.
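Written down as data, the default setup described above might look like the following. The model names follow the paper’s naming but are not guaranteed to match any provider’s API identifiers. Listing Qwen1.5-110B-Chat among the proposers as well as the aggregator, and the call-count method (each of the three layers calls all six proposers once, followed by one aggregation call), are assumptions rather than the authors’ configuration files.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MoAConfig:
    proposers: tuple[str, ...]  # models queried in every proposer layer
    aggregator: str             # model that writes the final answer
    num_layers: int             # number of proposer layers (assumed layout)

    def calls_per_query(self) -> int:
        # Assumed layout: every proposer is called once per layer, plus one final aggregation call.
        return self.num_layers * len(self.proposers) + 1


DEFAULT_MOA = MoAConfig(
    proposers=(
        "Qwen1.5-110B-Chat",
        "Qwen1.5-72B-Chat",
        "WizardLM-8x22B",
        "LLaMA-3-70B-Instruct",
        "Mixtral-8x22B",
        "dbrx-instruct",
    ),
    aggregator="Qwen1.5-110B-Chat",
    num_layers=3,
)

if __name__ == "__main__":
    print(DEFAULT_MOA.calls_per_query())
```

Under that assumed layout the default setup costs 19 model calls per query, which is why running each layer in parallel matters so much for latency.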

Looking ahead, Mixture-of-Agents points to a new way of building AI systems. Instead of relying on one giant, all-purpose model, we can create a team of specialized models that work together in natural language, much as human teams do. For example, in a medical setting:

- One agent might suggest possible diagnoses.

- Another could verify the findings against medical databases.

- A third (the aggregator) would combine everything into the final recommendation.

Such agent ecosystems are often more robust and transparent. You can track each agent’s contribution, making it easier to understand and trust the final output.

Research shows that even today’s models, without additional training, can collaborate effectively, and when they do, they can exceed the performance of any single model working alone.

For production AI, MoA offers a practical, cost-efficient path to GPT-4-level quality by combining open models instead of paying for one large proprietary model.

As open-source LLMs keep improving, MoA-style architectures are likely to become the norm, scaling quality through collaboration rather than size. The era of “LLMs as team players” is just beginning.

- MoA boosts quality through collaboration: multiple LLMs exchange and refine each other’s outputs, even when some inputs are weaker, leveraging the “collaborativeness” effect.

- Layered refinement: each layer of agents sees prior outputs and the original prompt, enabling step-by-step improvement.

- Proven benchmark gains: MoA outperforms more expensive models such as GPT-4 Omni on AlpacaEval 2.0 and on several FLASK skills.

- Cost-effective: matches or beats GPT-4 quality using cheaper open models; MoA-Lite delivers strong results with lower compute.

- Flexibility: easily swap in specialized models for domain tasks, or adjust the number of layers to trade speed against quality.

- Future-ready: represents a shift toward multi-agent AI systems that resemble expert teams, potentially becoming a standard approach for production-grade LLM deployments.

References:

The Mixture-of-Agents architecture was introduced in Wang et al., 2024, “Mixture-of-Agents Enhances Large Language Model Capabilities” (arXiv:2406.04692). https://arxiv.org/pdf/2406.04692