This is a short post on how to create a deterministic environment for serving any machine learning model using Flask.

I'll use a simple logistic regression model that was validated and tested for stability using cross-validation, and serve it with Flask.

Save the Model

The first step is to save the model using pickle. We also need to save the DictVectorizer, because to make predictions we must apply the same transformation that was used during training.

# SAVE THE MODEL
import pickle

# C, dv (the fitted DictVectorizer) and model come from the training step
output_file = f"model_C-{C}.bin"

with open(output_file, "wb") as f_out:
    pickle.dump((dv, model), f_out)

print(f"The model has been saved to {output_file}")
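To see why the vectorizer has to travel with the model, here is a minimal sketch that uses only the standard library. The helper functions, training records, and weights are hypothetical stand-ins, not scikit-learn's DictVectorizer or the real model. The sketch learns a column layout, saves it together with the weights as one tuple, loads both back in the same order, and encodes a new record with the loaded layout.

```python
import os
import pickle
import tempfile


def feature_name(key, value):
    # String values become one-hot columns named "key=value"; numbers keep the key.
    return f"{key}={value}" if isinstance(value, str) else key


def fit_feature_index(records):
    """Toy stand-in for fitting a vectorizer: assign each feature a column."""
    names = {feature_name(k, v) for record in records for k, v in record.items()}
    return {name: column for column, name in enumerate(sorted(names))}


def transform(feature_index, record):
    """Encode one record using the column layout learned during training."""
    row = [0.0] * len(feature_index)
    for key, value in record.items():
        column = feature_index.get(feature_name(key, value))
        if column is not None:  # features unseen in training are ignored
            row[column] = 1.0 if isinstance(value, str) else float(value)
    return row


# Hypothetical training records and weights, for illustration only.
train_records = [
    {"contract": "monthly", "tenure": 1},
    {"contract": "yearly", "tenure": 24},
]
feature_index = fit_feature_index(train_records)
weights = [0.9, -0.4, -0.05]  # stand-in for the trained model

with tempfile.TemporaryDirectory() as tmp_dir:
    path = os.path.join(tmp_dir, "model_C-1.0.bin")
    with open(path, "wb") as f_out:
        pickle.dump((feature_index, weights), f_out)  # one tuple, one file
    with open(path, "rb") as f_in:
        loaded_index, loaded_weights = pickle.load(f_in)  # same order as saved

encoded_row = transform(loaded_index, {"contract": "yearly", "tenure": 12})
print(encoded_row)  # [0.0, 1.0, 12.0]
```

If the column layout were rebuilt from scratch at prediction time, the columns could end up in a different order than the weights expect. Also keep in mind that pickle files should only be loaded from sources you trust, since unpickling can execute arbitrary code.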

Create a Web Service

The code below exposes an endpoint that we can access using the requests library.

from flask import Flask, request, jsonify
import pickle

C = 1.0
input_file = f"model_C-{C}.bin"

# Load the DictVectorizer and the model saved during training
with open(input_file, "rb") as f_in:
    dv, model = pickle.load(f_in)

app = Flask("ping")


@app.route("/predict", methods=["POST"])
def predict():
    customer = request.get_json()  # features of a single customer, as a dict

    X = dv.transform([customer])  # wrap the dict in a list: one row
    y_pred = model.predict_proba(X)[0, 1]  # probability of the positive (churn) class
    churn = y_pred > 0.5

    # Cast NumPy types to built-in types so they can be serialized
    result = {"churn": bool(churn), "churn_probability": float(y_pred)}
    return jsonify(result)  # JSON RESPONSE


if __name__ == "__main__":
    # DEBUG MODE REFRESHES THE SERVER AUTOMATICALLY WITH ANY NEW CHANGE
    app.run(debug=True, host="0.0.0.0", port=9696)
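The decision step inside the handler can also be written as a plain function, which makes it easy to check without starting a server. This is only a sketch: the name `build_response` and the `threshold` parameter are illustrative and not part of the service code.

```python
import json


def build_response(churn_probability, threshold=0.5):
    """Turn a predicted probability into a JSON-serializable payload."""
    churn = churn_probability > threshold  # strict: exactly 0.5 is not churn
    # Cast to built-in types; NumPy types such as numpy.bool_ are not
    # JSON serializable by default.
    return {"churn": bool(churn), "churn_probability": float(churn_probability)}


print(json.dumps(build_response(0.73)))  # {"churn": true, "churn_probability": 0.73}
print(json.dumps(build_response(0.5)))   # {"churn": false, "churn_probability": 0.5}
```

Keeping the threshold as a parameter makes it explicit that 0.5 is a choice and not a property of the model.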

To expose the endpoint, we need to run the following command in the terminal:

gunicorn --bind 0.0.0.0:9696 web_service:app

Consume the Web Service

We can consume the web service at this URL: http://0.0.0.0:9696/predict. Our predict.py file might look like this:

import requests

url = "http://0.0.0.0:9696/predict"

# `customer` is a dict with the same feature names used in training (not shown here)
response = requests.post(url, json=customer).json()
print(response)

Now we can run the predict file and get a prediction: python predict.py

Create the Dockerfile

Using Docker, we can create an isolated environment, ensuring that our model runs consistently on a server just as it does in our local virtual environment.

The following Dockerfile uses Poetry as the main package manager and is slightly optimized following the steps in this amazing article.

FROM python:3.10-slim

# Install system dependencies needed for Poetry and Python packages
RUN apt-get update && apt-get install -y --no-install-recommends \
    build-essential gcc curl && \
    rm -rf /var/lib/apt/lists/*

# Install Poetry
RUN pip install poetry==1.8.3

# Set environment variables for Poetry
ENV POETRY_NO_INTERACTION=1 \
    POETRY_VIRTUALENVS_IN_PROJECT=1 \
    POETRY_VIRTUALENVS_CREATE=1 \
    POETRY_CACHE_DIR=/tmp/poetry_cache

# Set working directory
WORKDIR /app

# Copy dependency files first for better caching
COPY pyproject.toml poetry.lock ./

# Install dependencies without installing the project itself.
# This is magic. It prevents rebuilding the dependencies every time the project (code) changes.
# --no-root instructs Poetry to avoid installing the current project into the virtual environment.
RUN poetry install --no-root

# Copy the project files
COPY web_service.py model_C-1.0.bin ./

# Expose the port
EXPOSE 9696

ENTRYPOINT ["poetry", "run", "gunicorn", "--bind", "0.0.0.0:9696", "web_service:app"]

Afterwards, we need to build the image and run it:

# BUILD IMAGE

docker construct -t churn_predictor .

# RUN IMAGE

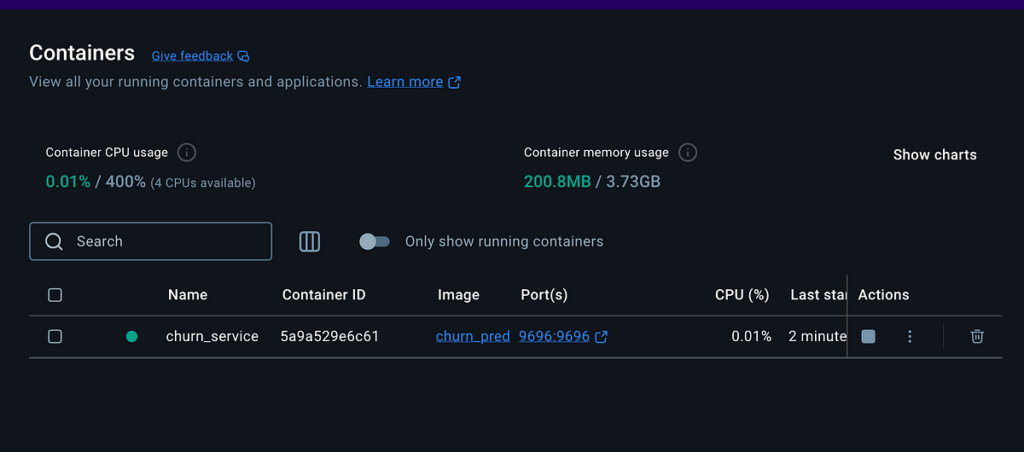

docker run -d -p 9696:9696 --name churn_service churn_predictor

-d runs the container in detached mode (background).

-p 9696:9696 maps port 9696 in the container to port 9696 on your local machine.

--name churn_service assigns a name to the container for easier management.
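The value passed to -p reads as host port first, then container port. Since both are 9696 here, the order is easy to miss. If you script these steps, a small helper can make the order explicit. This is a sketch only: `docker_run_command` and its parameter names are hypothetical, and the code builds the command without executing it.

```python
import shlex


def docker_run_command(image, name, host_port, container_port):
    """Build the argument list for a detached `docker run` with one port mapping."""
    return [
        "docker", "run",
        "-d",                                    # detached mode (background)
        "-p", f"{host_port}:{container_port}",   # host port first, then container port
        "--name", name,                          # container name
        image,
    ]


command = docker_run_command("churn_predictor", "churn_service", 9696, 9696)
print(shlex.join(command))
# docker run -d -p 9696:9696 --name churn_service churn_predictor
```

The list form can be passed to `subprocess.run(command, check=True)` on a machine where Docker is installed.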

We can run our predict.py file and get a prediction from the Docker container: python predict.py

The logical next step would be to deploy it to the cloud using a service such as Amazon Elastic Beanstalk.

💚